

Below are current, actionable best practices to strengthen Stella Ops testing end‑to‑end, structured by test layer, with modern tools/standards and a prioritized checklist for this week. This is written for a mature, self-owned routing stack (Stella Router already in place), with compliance and operational rigor in mind.

Supersedes/extends:

`docs/product-advisories/archived/2025-12-21-testing-strategy/20-Dec-2025 - Testing strategy.md`

Doc sync: `docs/testing/testing-strategy-models.md`, `docs/testing/TEST_CATALOG.yml`, `docs/benchmarks/testing/better-testing-strategy-samples.md`

1. Unit Testing (fast, deterministic, zero I/O)

What’s new / sharper in practice

- Property-based testing is no longer optional for routing, parsing, and validation logic. It surfaces edge cases humans won’t enumerate.

- Mutation testing is increasingly used selectively (critical logic only) to detect “false confidence” tests.

- Golden master tests for routing decisions (input → normalized output) are effective when paired with strict diffing.

Best practices

- Enforce pure functions at this layer; mock nothing except time/randomness.

- Test invariants, not examples (e.g., “routing result is deterministic for same inputs”).

- Keep unit tests <50ms total per module.
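A minimal sketch of an invariant-style unit test, written in C# like the code later in this plan. `SketchRouter` and `RoutingInvariants` are hypothetical stand-ins for real router decision logic; the only randomness is a seeded `Random`, so any failing case can be replayed from its seed.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical pure routing function standing in for real Stella Router decision logic.
public static class SketchRouter
{
    private static readonly string[] Backends = { "scanner", "concelier", "excititor", "policy" };

    public static string Route(string path, IReadOnlyList<KeyValuePair<string, string>> headers)
    {
        // Normalize: pick the tenant header case-insensitively, independent of header order.
        string tenant = headers
            .Where(h => string.Equals(h.Key, "X-Tenant", StringComparison.OrdinalIgnoreCase))
            .Select(h => h.Value)
            .OrderBy(v => v, StringComparer.Ordinal)
            .FirstOrDefault() ?? "default";

        // FNV-1a hash: stable across processes, unlike string.GetHashCode().
        uint hash = 2166136261u;
        foreach (char c in path + "|" + tenant)
        {
            hash = (hash ^ c) * 16777619u;
        }
        return Backends[hash % (uint)Backends.Length];
    }
}

public static class RoutingInvariants
{
    // Checks invariants over seeded pseudo-random inputs instead of a few hand-picked examples.
    public static void Check(int seed, int cases)
    {
        var rng = new Random(seed);
        for (int i = 0; i < cases; i++)
        {
            string path = "/api/" + rng.Next(0, 1000);
            var headers = new List<KeyValuePair<string, string>>
            {
                KeyValuePair.Create("X-Tenant", "t" + rng.Next(0, 5)),
                KeyValuePair.Create("Accept", "application/json"),
                KeyValuePair.Create("X-Trace-Id", rng.Next().ToString()),
            };

            string expected = SketchRouter.Route(path, headers);

            // Invariant 1: the same input always yields the same route.
            if (SketchRouter.Route(path, headers) != expected)
                throw new InvalidOperationException($"seed {seed}, case {i}: non-deterministic route");

            // Invariant 2: header order must not influence routing.
            var reversed = Enumerable.Reverse(headers).ToList();
            if (SketchRouter.Route(path, reversed) != expected)
                throw new InvalidOperationException($"seed {seed}, case {i}: header order changed route");
        }
    }
}
```

In a real suite the same checks would typically live in FsCheck `[Property]` methods; the seeded loop just makes the idea visible without a test framework.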

Recommended tools

- Property testing: fast-check, Hypothesis
- Mutation testing: Stryker
- Snapshot/golden tests: snapshot tooling (built into Jest; available for pytest via plugins)

2. Module / Source-Level Testing (logic + boundaries)

What’s evolving

- Teams are moving from “mock everything” to contract-verified mocks.

- Static + dynamic analysis are converging at this layer.

Best practices

- Test modules with real schemas and real validation rules.

- Fail fast on schema drift (JSON Schema / OpenAPI).

- Combine static analysis with executable tests to block entire bug classes.

Recommended tools / standards

- Schema validation: OpenAPI 3.1 + JSON Schema

- Static analysis: Semgrep, CodeQL

- Type-driven testing (where applicable): TypeScript strict mode, mypy

3. Integration Testing (service-to-service truth)

Key 2025 shift

- Consumer‑driven contracts are now expected, not advanced.

- Integration tests increasingly run against ephemeral environments, not shared staging.

Best practices

- Treat integration tests as API truth, not unit tests with mocks.

- Verify timeouts, retries, and partial failures explicitly.

- Run integration tests against real infra dependencies spun up per test run.
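One way to make retry behavior verifiable without real waiting is to route delays through an injectable seam. The sketch below assumes a hypothetical `IDelayer` interface and `TransientException` type (neither comes from the plan); the test double records requested delays, so the backoff schedule can be asserted exactly. Jitter is omitted; if production code uses jitter, seeding it keeps the test deterministic.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical seam: production code awaits delays through this interface.
public interface IDelayer
{
    Task DelayAsync(TimeSpan delay, CancellationToken ct);
}

// Test double: records each requested delay and returns immediately (no wall-clock waiting).
public sealed class RecordingDelayer : IDelayer
{
    public List<TimeSpan> Delays { get; } = new();

    public Task DelayAsync(TimeSpan delay, CancellationToken ct)
    {
        ct.ThrowIfCancellationRequested();
        Delays.Add(delay);
        return Task.CompletedTask;
    }
}

public sealed class TransientException : Exception
{
    public TransientException(string message) : base(message) { }
}

public static class Retry
{
    // Retries transient failures with exponential backoff: baseDelay * 2^attempt, no jitter.
    public static async Task<T> ExecuteAsync<T>(
        Func<CancellationToken, Task<T>> operation,
        int maxAttempts,
        TimeSpan baseDelay,
        IDelayer delayer,
        CancellationToken ct = default)
    {
        for (int attempt = 0; ; attempt++)
        {
            try
            {
                return await operation(ct).ConfigureAwait(false);
            }
            catch (TransientException) when (attempt + 1 < maxAttempts)
            {
                // The last attempt's exception is not caught and propagates to the caller.
                var delay = TimeSpan.FromTicks(baseDelay.Ticks * (1L << attempt));
                await delayer.DelayAsync(delay, ct).ConfigureAwait(false);
            }
        }
    }
}
```

An integration test would inject `RecordingDelayer` into the client under test and assert both the returned result and the exact delay list.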

Recommended tools

- Contract testing: Pact

- Containers: Testcontainers

- API testing: Postman / REST Assured

4. Deployment & E2E Testing (system reality)

Current best practices

- E2E tests are fewer, but higher value—they validate business‑critical paths only.

- Synthetic traffic now complements E2E: run probes continuously in prod-like environments.

- Test deployment mechanics, not just features.

What to test explicitly

- Zero-downtime deploys (canary / blue‑green)

- Rollback correctness

- Config reload without restart

- Rate limiting & abuse paths

Recommended tools

- Browser / API E2E: Playwright

- Synthetic monitoring: Grafana k6

- Deployment verification: custom health probes + assertions

5. Competitor Parity Testing (often neglected, high leverage)

Why it matters

- Routing, latency, correctness, and edge behavior define credibility.

- Competitor parity tests catch regressions before users do.

Best practices

- Maintain a parity test suite that:
  - Sends identical inputs to Stella Ops and competitors
  - Compares outputs, latency, error modes, and headers
- Store results as time-series data to detect drift.

What to compare

- Request normalization

- Error semantics (codes, retries)

- Latency percentiles (p50/p95/p99)

- Failure behavior under load
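Percentile definitions differ (nearest-rank vs. interpolated) and disagree on small samples, so the parity harness should pin one down. A minimal nearest-rank sketch; `LatencyStats` is a hypothetical helper name:

```csharp
using System;
using System.Linq;

public static class LatencyStats
{
    // Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
    public static double Percentile(double[] samplesMs, double p)
    {
        if (samplesMs.Length == 0) throw new ArgumentException("at least one sample is required", nameof(samplesMs));
        if (p <= 0 || p > 100) throw new ArgumentOutOfRangeException(nameof(p), "p must be in (0, 100]");

        double[] sorted = samplesMs.OrderBy(x => x).ToArray();
        int rank = (int)Math.Ceiling(p * sorted.Length / 100.0); // 1-based rank
        return sorted[Math.Max(rank, 1) - 1];
    }

    // The p50/p95/p99 triple compared between Stella Ops and each competitor for one request path.
    public static (double P50, double P95, double P99) Summarize(double[] samplesMs) =>
        (Percentile(samplesMs, 50), Percentile(samplesMs, 95), Percentile(samplesMs, 99));
}
```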

Tools

- Custom harness + k6

- Snapshot diffs + alerting

- Prometheus-compatible metrics

6. Cross-Cutting Standards You Should Enforce

Observability-first testing

- Every integration/E2E test should emit traces.

- Assertions should reference trace IDs, not just responses.

Recommended standard

- OpenTelemetry (traces + metrics + logs as test artifacts)

Security & compliance

- Negative tests for:
  - Auth bypass
  - Token expiry
  - Rate-limit evasion
- Tests are part of compliance evidence; store results immutably.

7. Short, Prioritized Checklist (This Week)

High impact / low friction

1. Add property-based tests to Stella Router core decision logic.
2. Introduce one Pact contract for the most critical upstream/downstream API.
3. Convert 1–2 flaky E2E tests into deterministic integration tests.
4. Add trace assertions (OpenTelemetry) to one integration test suite.

Medium effort

5. Stand up ephemeral integration environments via Testcontainers.
6. Define a competitor parity harness for one critical request path.
7. Add latency percentile tracking to CI (fail on regression).

Strategic

8. Identify 5 invariants Stella Ops must never violate and encode them as tests.
9. Make test results part of release gating, not advisory.
10. Treat failed tests as operational incidents, not dev noise.

Bottom line

The strongest Stella Ops testing posture is:

- Thin unit tests, strong invariants

- Contracts over mocks

- Few but brutal E2E tests

- Parity and observability baked in

- Tests treated as operational evidence

This keeps velocity high while preventing silent regressions, which is exactly where routing and ops systems fail when testing is superficial.

Below is a concrete implementation plan that turns the strategy into an executable testing system, using “module per model” (project-type) rules so you can apply it consistently across the ~330 non-test projects you listed.

I am going to do three things:

- Define test models (L0/S1/W1/…): what every project of that type must have.

- Map your repo into those models and give module-family plans (Scanner/Concelier/Excititor/…).

- Provide the buildable plumbing: conventions, CI lanes, fixtures, determinism harness, and sample code.

0) Your repo, normalized into “test models”

From the csproj list you posted, your production projects roughly fall into:

- L0 Library/Core: ~148 projects (pure-ish logic, serializers, models, merge engines, policies).

- C1 Connector/External: ~46 projects (HTTP ingest, vendor/distro feeds, CSAF/OpenVEX, S3 stores).

- AN1 Analyzer/SourceGen: ~32 projects (Roslyn analyzers, source generators).

- W1 WebService/API: ~25 projects (ASP.NET web services, APIs, gateways).

- WK1 Worker/Indexer: ~22 projects (workers, indexers, schedulers, ingestors).

- S1 Storage(Postgres): ~14 projects (storage adapters, postgres infrastructure).

- T1 Transport/Queue: ~11 projects (router transports, messaging transports, queues).

- PERF Benchmark: ~8 projects.

- CLI1 Tool/CLI: ~25 projects (tools, CLIs, smoke utilities).

The point: you do not need 300 bespoke testing strategies. You need ~9 models, rigorously enforced.

1) Test models (the rules that drive everything)

Model L0 — Library/Core

Applies to: *.Core, *.Models, *.Normalization, *.Merge, *.Policy, *.Formats.*, *.Diff, *.ProofSpine, *.Reachability, *.Unknowns, *.VersionComparison, etc.

Must have

- Unit tests for invariants and edge cases.

- Property-based tests for the critical transformations (merge, normalize, compare, evaluate).

- Golden/snapshot tests for any external format emission (JSON, CSAF/OpenVEX/CycloneDX, policy verdict artifacts).

- Determinism checks: same semantic input ⇒ same canonical output bytes.
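A minimal sketch of what “same canonical output bytes” means in practice: parse, re-emit with object keys in ordinal order and no insignificant whitespace, then hash. This is a simplification for illustration (numbers are copied verbatim and duplicate keys are not rejected), not a full RFC 8785 (JCS) implementation; production code should go through the repo's canonical JSON library.

```csharp
using System;
using System.IO;
using System.Linq;
using System.Security.Cryptography;
using System.Text.Json;

public static class CanonicalJsonSketch
{
    public static byte[] Canonicalize(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        using var buffer = new MemoryStream();
        using (var writer = new Utf8JsonWriter(buffer)) // default options: compact output
        {
            Write(doc.RootElement, writer);
        } // disposing the writer flushes it into the buffer
        return buffer.ToArray();
    }

    public static string Sha256Hex(byte[] bytes) =>
        Convert.ToHexString(SHA256.HashData(bytes)).ToLowerInvariant();

    private static void Write(JsonElement element, Utf8JsonWriter writer)
    {
        switch (element.ValueKind)
        {
            case JsonValueKind.Object:
                writer.WriteStartObject();
                // Ordinal (UTF-16 code unit) key order, independent of culture and input order.
                foreach (JsonProperty property in element.EnumerateObject().OrderBy(p => p.Name, StringComparer.Ordinal))
                {
                    writer.WritePropertyName(property.Name);
                    Write(property.Value, writer);
                }
                writer.WriteEndObject();
                break;
            case JsonValueKind.Array:
                writer.WriteStartArray(); // array order is semantic, so it is preserved
                foreach (JsonElement item in element.EnumerateArray())
                {
                    Write(item, writer);
                }
                writer.WriteEndArray();
                break;
            default:
                element.WriteTo(writer); // strings, numbers, booleans, null
                break;
        }
    }
}
```

Two inputs that differ only in key order or whitespace then produce identical bytes and therefore identical SHA-256 digests.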

Explicit “Do Not”

- No real network; no real DB; no global clock; no random without seed.

Definition of Done

- Every public “engine” method has at least one invariant test and one property test.

- Any `ToJson`/`Serialize`/`Export` path has a canonical snapshot test.

Model S1 — Storage(Postgres)

Applies to: *.Storage.Postgres, StellaOps.Infrastructure.Postgres, *.Indexer.Storage.Postgres, etc.

Must have

- Migration compatibility tests: apply migrations from scratch; apply from N-1 snapshot; verify expected schema.

- Idempotency tests: inserting the same domain entity twice does not duplicate state.

- Concurrency tests: two writers, one key ⇒ correct conflict behavior.

- Query determinism: same inputs ⇒ stable ordering (explicit `ORDER BY` checks).

Definition of Done

- There is a shared `PostgresFixture` used everywhere; no hand-rolled connection strings per project.
- Tests can run in parallel (separate schemas per test or per class).

Model T1 — Transport/Queue

Applies to: StellaOps.Messaging.Transport.*, StellaOps.Router.Transport.*, *.Queue

Must have

- Protocol property tests: framing/encoding/decoding roundtrips; fuzz invalid payloads.

- At-least-once semantics tests: duplicates delivered ⇒ consumer idempotency.

- Backpressure/timeouts: verify retry and cancellation behavior deterministically (fake clock).
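A sketch of the protocol round-trip and invalid-payload checks for a hypothetical length-prefixed frame format (4-byte big-endian length, then payload). The real transports' framing will differ; what carries over is the shape of the check: encode/decode round-trips for generated payloads, and the decoder rejects (rather than throws on) truncated or arbitrary bytes.

```csharp
using System;
using System.Buffers.Binary;

public static class Framing
{
    public const int MaxPayload = 1 << 20; // hypothetical 1 MiB limit

    // Frame layout (hypothetical): 4-byte big-endian payload length, then the payload bytes.
    public static byte[] Encode(ReadOnlySpan<byte> payload)
    {
        if (payload.Length > MaxPayload) throw new ArgumentException("payload too large", nameof(payload));
        var frame = new byte[4 + payload.Length];
        BinaryPrimitives.WriteInt32BigEndian(frame, payload.Length);
        payload.CopyTo(frame.AsSpan(4));
        return frame;
    }

    // Never throws on bad input: truncated, oversized, or trailing-garbage frames return false.
    public static bool TryDecode(ReadOnlySpan<byte> frame, out byte[] payload)
    {
        payload = Array.Empty<byte>();
        if (frame.Length < 4) return false;
        int length = BinaryPrimitives.ReadInt32BigEndian(frame);
        if (length < 0 || length > MaxPayload || frame.Length != 4 + length) return false;
        payload = frame.Slice(4).ToArray();
        return true;
    }
}
```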

Definition of Done

- Shared “transport compliance suite” runs against each transport implementation.

Model C1 — Connector/External

Applies to: *.Connector.*, *.Connectors.*, *.ArtifactStores.*

Must have

- Fixture-based parser tests (offline): raw upstream payload fixture ⇒ normalized internal model snapshot.

- Resilience tests: partial/bad input ⇒ deterministic failure classification.

- Optional live smoke tests (opt-in): fetch current upstream; compare schema drift; never gate PRs by default.

- Security tests: URL allowlist, redirect handling, max payload size, decompression bombs.
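A sketch of two of these guards: exact-host allowlisting over HTTPS and bounded gzip decompression. `ConnectorGuards` is a hypothetical name; real connectors would apply the same checks inside their HTTP pipeline.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.IO.Compression;
using System.Linq;

public static class ConnectorGuards
{
    // Exact host match over https only; "nvd.nist.gov.evil.example" does not match "nvd.nist.gov".
    public static bool IsAllowedUrl(Uri uri, IReadOnlyCollection<string> allowedHosts) =>
        uri.IsAbsoluteUri
        && uri.Scheme == Uri.UriSchemeHttps
        && allowedHosts.Contains(uri.IdnHost, StringComparer.OrdinalIgnoreCase);

    // Decompresses gzip input but aborts as soon as output would exceed maxBytes (decompression-bomb guard).
    public static byte[] GunzipBounded(byte[] compressed, long maxBytes)
    {
        using var input = new GZipStream(new MemoryStream(compressed), CompressionMode.Decompress);
        using var output = new MemoryStream();
        var buffer = new byte[81920];
        int read;
        while ((read = input.Read(buffer, 0, buffer.Length)) > 0)
        {
            if (output.Length + read > maxBytes)
                throw new InvalidDataException($"decompressed size exceeds {maxBytes} bytes");
            output.Write(buffer, 0, read);
        }
        return output.ToArray();
    }
}
```

For redirects, one option is to disable automatic redirects (`HttpClientHandler.AllowAutoRedirect = false`) and pass each `Location` target through `IsAllowedUrl` before following it.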

Definition of Done

- Every connector has a `Fixtures/` folder and a `FixtureUpdater` mode.
- Normalization output is canonical JSON snapshot-tested.

Model W1 — WebService/API

Applies to: *.WebService, *.Api, *.Gateway, *.TokenService, *.Server

Must have

- HTTP contract tests: OpenAPI schema stays compatible; error envelope stable.

- Authentication/authorization tests: “deny by default”; token expiry; tenant isolation.

- OTel trace assertions: each endpoint emits trace with required attributes.

- Negative tests: malformed content types, oversized payloads, method mismatch.

Definition of Done

- A shared `WebServiceFixture<TProgram>` hosts the service in tests with deterministic config.
- The contract is emitted and verified (snapshot) on each build.

Model WK1 — Worker/Indexer

Applies to: *.Worker, *.Indexer, *.Observer, *.Ingestor, scheduler worker hosts

Must have

- End-to-end job tests: enqueue → process → persisted side effects.

- Retry and poison handling: permanent failure routed correctly.

- Idempotency: same job ID processed twice ⇒ no duplicate results.

- Telemetry: spans around each job stage; correlation IDs persisted.
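The idempotency and poison-handling requirements can be sketched with an in-memory worker. `SketchWorker` and `Job` are hypothetical; a real test would drive the actual worker host against the ephemeral environment, but the assertions keep the same shape: redelivering a completed job ID produces no new side effects, and a job that keeps failing lands in the poison list after a bounded number of attempts.

```csharp
using System;
using System.Collections.Generic;

public sealed record Job(string Id, string Payload);

public sealed class SketchWorker
{
    private readonly Func<Job, bool> _handler; // returns false when processing fails
    private readonly int _maxAttempts;
    private readonly HashSet<string> _finished = new(StringComparer.Ordinal);
    private readonly Dictionary<string, int> _attempts = new(StringComparer.Ordinal);

    public SketchWorker(Func<Job, bool> handler, int maxAttempts)
    {
        _handler = handler;
        _maxAttempts = maxAttempts;
    }

    public List<string> Results { get; } = new();
    public List<Job> Poison { get; } = new();

    // Returns true when the job should be redelivered later.
    public bool Process(Job job)
    {
        if (_finished.Contains(job.Id)) return false; // duplicate delivery: no new side effects

        if (_handler(job))
        {
            _finished.Add(job.Id);
            Results.Add(job.Id);
            return false;
        }

        int attempts = _attempts.TryGetValue(job.Id, out int previous) ? previous + 1 : 1;
        _attempts[job.Id] = attempts;
        if (attempts < _maxAttempts) return true;

        Poison.Add(job); // permanent failure: route to poison instead of retrying forever
        _finished.Add(job.Id);
        return false;
    }
}
```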

Definition of Done

- Runs in an ephemeral environment by default: Postgres + Valkey + transport (in-memory or Postgres transport).

Model AN1 — Analyzer/SourceGen

Applies to: *.Analyzers, *.SourceGen

Must have

- Compiler-based tests using the Roslyn test harness:
  - diagnostics emitted exactly
  - code fixes and generators stable
- Golden tests for generated code output.

Model CLI1 — Tool/CLI

Applies to: *.Cli, src/Tools/*, smoke tools

Must have

- Exit-code tests

- Golden stdout/stderr tests

- Deterministic output (ordering, formatting, stable timestamps unless explicitly disabled)

Model PERF — Benchmarks

Applies to: `*Bench*`, `*Perf*`, `*Benchmarks*`

Must have

- Bench projects run on demand; in CI only a “smoke perf” subset runs.

- Regression gate based on relative thresholds, not absolute numbers.
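A relative gate compares each benchmark against its own stored baseline instead of a fixed number of milliseconds, which keeps it meaningful across CI runners of different speeds. A minimal sketch; `PerfGate` and the benchmark names are hypothetical, and `maxRatio = 2.0` corresponds to failing on a 2× slowdown.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

public static class PerfGate
{
    // Returns one message per benchmark whose current median exceeds baseline * maxRatio.
    public static IReadOnlyList<string> FindRegressions(
        IReadOnlyDictionary<string, double> baselineMedianMs,
        IReadOnlyDictionary<string, double> currentMedianMs,
        double maxRatio)
    {
        var failures = new List<string>();
        foreach (var (name, baseline) in baselineMedianMs)
        {
            if (!currentMedianMs.TryGetValue(name, out double current))
            {
                failures.Add($"{name}: missing from current run");
                continue;
            }
            double ratio = current / baseline;
            if (ratio > maxRatio)
            {
                failures.Add($"{name}: {ratio.ToString("F2", CultureInfo.InvariantCulture)}x baseline");
            }
        }
        failures.Sort(StringComparer.Ordinal); // stable report order regardless of dictionary order
        return failures;
    }
}
```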

2) Repository-wide foundations (must be implemented first)

You already have many test csprojs. The missing piece is uniformity: a single harness, a single taxonomy, and a single CI routing.

2.1 Create a shared test kit (one place for deterministic infrastructure)

Create:

- `src/__Libraries/StellaOps.TestKit/StellaOps.TestKit.csproj` (new)
- `src/__Libraries/StellaOps.TestKit.AspNet/StellaOps.TestKit.AspNet.csproj` (optional)
- `src/__Libraries/StellaOps.TestKit.Containers/StellaOps.TestKit.Containers.csproj` (optional, if you prefer)

TestKit must provide

- `DeterministicTime` (wrapping `TimeProvider`)
- `DeterministicRandom(seed)`
- `CanonicalJsonAssert` (reusing `StellaOps.Canonical.Json`)
- `SnapshotAssert` (thin wrapper; you can use Verify.Xunit or your own stable snapshot)
- `PostgresFixture` (Testcontainers or your own docker compose runner)
- `ValkeyFixture`
- `OtelCapture` (in-memory span exporter + assertion helpers)
- `HttpFixtureServer` or `HttpMessageHandlerStub` (to avoid license friction and keep tests hermetic)
- Common `[Trait]` constants and filters

Minimal primitives to standardize immediately

```csharp
public static class TestCategories
{
    public const string Unit = "Unit";
    public const string Property = "Property";
    public const string Snapshot = "Snapshot";
    public const string Integration = "Integration";
    public const string Contract = "Contract";
    public const string Security = "Security";
    public const string Performance = "Performance";
    public const string Live = "Live"; // opt-in only
}
```

2.2 Standard trait rules (so CI can filter correctly)

- Every test class must be tagged with exactly one “lane” trait:
  - Unit / Integration / Contract / Security / Performance / Live
- Property tests are a sub-trait (Unit + Property), or stand-alone (Property) if you prefer.
- Snapshot tests must be in the Unit or Contract lane (depending on what they snapshot).

2.3 A single way to run tests locally and in CI

Add a root script (or dotnet tool) so everyone uses the same invocation:

`./build/test.ps1` and `./build/test.sh`

Example lane commands:

```bash
dotnet test -c Release --filter "Category=Unit"
dotnet test -c Release --filter "Category=Integration"
dotnet test -c Release --filter "Category=Contract"
dotnet test -c Release --filter "Category=Security"
dotnet test -c Release --filter "Category=Performance"
dotnet test -c Release --filter "Category=Live"   # never run by default
```

2.4 Determinism baseline across the entire repo

Define a single “determinism contract”:

- Canonical JSON serialization is mandatory for:
  - SBOM, VEX, CSAF/OpenVEX exports
  - policy verdict artifacts
  - evidence bundles
  - ingestion normalized models
- Every determinism test writes:
  - canonical bytes hash (SHA-256)
  - version stamps of inputs (feed snapshot hash, policy manifest hash)
  - toolchain version (where meaningful)

You already have tests/integration/StellaOps.Integration.Determinism. Expand it into the central gate.
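As a sketch of the three items each determinism test writes, here is a hypothetical record type and a helper that fills it. The field names are illustrative, not an existing schema; the toolchain string would typically come from something like `RuntimeInformation.FrameworkDescription`.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;
using System.Text.Json;

// Hypothetical shape for one determinism result; not an existing StellaOps schema.
public sealed record DeterminismRecord(
    string Artifact,
    string CanonicalSha256,
    SortedDictionary<string, string> InputStamps,
    string Toolchain);

public static class DeterminismRecorder
{
    public static string Sha256Hex(byte[] canonicalBytes) =>
        Convert.ToHexString(SHA256.HashData(canonicalBytes)).ToLowerInvariant();

    public static DeterminismRecord Create(
        string artifact, byte[] canonicalBytes, IDictionary<string, string> inputStamps, string toolchain) =>
        new(artifact, Sha256Hex(canonicalBytes),
            // Sorted keys so the emitted record does not depend on insertion order.
            new SortedDictionary<string, string>(inputStamps, StringComparer.Ordinal),
            toolchain);

    // System.Text.Json writes properties in declaration order and SortedDictionary entries in key order.
    public static string ToJson(DeterminismRecord record) => JsonSerializer.Serialize(record);
}
```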

2.5 Architecture enforcement tests (your “lattice placement” rule)

You have an architectural rule:

- lattice algorithms run in `scanner.webservice`, not in Concelier or Excititor
- Concelier and Excititor “preserve prune source”

Turn this into a build gate using architecture tests (NetArchTest.Rules or similar):

- Concelier assemblies must not reference Scanner lattice engine assemblies

- Excititor assemblies must not reference Scanner lattice engine assemblies

- Scanner.WebService may reference lattice engine

This prevents “accidental creep” forever.
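NetArchTest.Rules expresses these rules at the type level. As a dependency-free sketch of the same idea, a test can inspect an assembly's direct references with reflection. `GetReferencedAssemblies` only reports references the compiler actually emitted, so this catches direct use but not transitive dependencies.

```csharp
using System;
using System.Linq;
using System.Reflection;

public static class ArchitectureRules
{
    // Returns directly referenced assembly names that start with any forbidden prefix, sorted for stable messages.
    public static string[] ForbiddenReferences(Assembly assembly, params string[] forbiddenPrefixes) =>
        assembly.GetReferencedAssemblies()
            .Select(reference => reference.Name ?? string.Empty)
            .Where(name => forbiddenPrefixes.Any(prefix => name.StartsWith(prefix, StringComparison.Ordinal)))
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToArray();
}
```

In an xUnit test this might read `Assert.Empty(ArchitectureRules.ForbiddenReferences(typeof(SomeConcelierType).Assembly, "StellaOps.Scanner.Lattice"));`, where both `SomeConcelierType` and the assembly prefix are placeholders for the real names.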

3) Module-family implementation plan (applies your models to your modules)

Below are the major module families and what to implement, using the models above. I’m not repeating every csproj name; I’m specifying what each family must contain and which existing test projects should be upgraded.

3.1 Scanner (dominant surface area)

Projects: src/Scanner/* including analyzers, reachability, proof spine, smart diff, storage, webservice, worker.

Models present: L0 + AN1 + S1 + T1 + W1 + WK1 + PERF.

A) L0 libraries: must add/upgrade

`Scanner.Core`, `Diff`, `SmartDiff`, `Reachability`, `ReachabilityDrift`, `ProofSpine`, `EntryTrace`, `Surface.*`, `Triage`, `VulnSurfaces`, `CallGraph`, analyzers.

Unit + property

- Version/range resolution invariants: monotonicity, transitivity, boundary behavior.
- Graph invariants:
  - reachability subgraph is acyclic where expected
  - deterministic node IDs
  - stable ordering in emitted graphs
- SmartDiff invariants:
  - adding an unrelated component does not change unrelated deltas
  - changes are minimal and stable

Snapshot

- For each emission format:
  - SBOM canonical JSON snapshot
  - reachability evidence snapshot
  - delta verdict snapshot

Determinism

- Identical scan manifest + fixture inputs ⇒ identical hashes of:
  - SBOM
  - reachability evidence
  - triage output
  - verdict artifact payload

B) AN1 analyzers

Must

- Roslyn compilation tests for each analyzer:
  - expected diagnostics
  - no false positives on common patterns
- Golden generated code output for SourceGen (if any).

C) S1 storage (Scanner.Storage)

Must

- Migration tests + idempotent inserts for scan results.

- Query determinism tests (explicit ordering).

D) W1 webservice (Scanner.WebService)

Must

- Endpoint contract snapshot (OpenAPI or your own schema).
- Auth/tenant isolation tests.
- OTel trace assertions:
  - request span created
  - trace includes scan_id / tenant_id / policy_id tags
- Negative tests:
  - reject unsupported media types
  - size limits enforced

E) WK1 worker (Scanner.Worker)

Must

- End-to-end: enqueue scan job → worker runs → stored evidence exists → events emitted.

- Retry tests: transient failure uses backoff; permanent failure routes to poison.

F) PERF

- Keep benchmarks; add “perf smoke” in CI to detect 2× regressions on key algorithms:
  - reachability calculation
  - smart diff
  - canonical serialization

Primary deliverable for Scanner

- Expand `tests/integration/StellaOps.Integration.Reachability` and `StellaOps.Integration.Determinism` to be the main scan-pipeline gates.

3.2 Concelier (vulnerability aggregation + normalization)

Projects: src/Concelier/* connectors + core + normalization + merge + storage + webservice.

Models present: C1 + L0 + S1 + W1 + AN1.

A) C1 connectors (most of Concelier)

For each Concelier.Connector.*:

Fixture tests (mandatory)

- `Fixtures/<source>/<case>.json` (raw)
- `Expected/<case>.canonical.json` (normalized internal model)

Resilience tests

- Missing fields, unexpected enum values, invalid date formats:
  - should produce deterministic error classification (e.g., `ParseError.SchemaDrift`)
- Large payload behavior (bounded)
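A sketch of deterministic failure classification for a hypothetical advisory shape with `id`, `published`, and `severity` fields. Only `ParseError.SchemaDrift` comes from the plan; the other enum members, the field names, and the accepted date format are assumptions for illustration.

```csharp
using System;
using System.Globalization;
using System.Text.Json;

// SchemaDrift comes from the plan; the other members are illustrative.
public enum ParseError { None, MalformedJson, SchemaDrift, InvalidDate, UnknownEnumValue }

public static class AdvisoryClassifier
{
    private static readonly string[] KnownSeverities = { "low", "medium", "high", "critical" };

    // Pure function of the input text: the same payload always yields the same classification.
    public static ParseError Classify(string rawJson)
    {
        JsonDocument doc;
        try
        {
            doc = JsonDocument.Parse(rawJson);
        }
        catch (JsonException)
        {
            return ParseError.MalformedJson;
        }

        using (doc)
        {
            JsonElement root = doc.RootElement;
            if (root.ValueKind != JsonValueKind.Object
                || !root.TryGetProperty("id", out JsonElement id) || id.ValueKind != JsonValueKind.String
                || !root.TryGetProperty("published", out JsonElement published) || published.ValueKind != JsonValueKind.String
                || !root.TryGetProperty("severity", out JsonElement severity) || severity.ValueKind != JsonValueKind.String)
            {
                return ParseError.SchemaDrift; // missing or wrongly typed required fields
            }

            if (!DateTimeOffset.TryParseExact(published.GetString(), "yyyy-MM-dd'T'HH:mm:ssK",
                    CultureInfo.InvariantCulture, DateTimeStyles.None, out _))
            {
                return ParseError.InvalidDate;
            }

            return Array.IndexOf(KnownSeverities, severity.GetString()) < 0
                ? ParseError.UnknownEnumValue
                : ParseError.None;
        }
    }
}
```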

Security

- Only allow configured base URLs; reject redirects to non-allowlisted domains.

- Limit decompression output size.

Live smoke (opt-in)

- Run weekly/nightly; compare schema drift; generate PR that updates fixtures.

B) L0 core/merge/normalization

- Merge correctness properties:
  - commutativity/associativity only where intended
  - if “link not merge”, prove you never destroy original source identity
- Canonical output snapshot of merged normalized DB export.

C) S1 storage

- ingestion idempotency (same advisory ID, same source snapshot ⇒ no duplicates)

- query ordering determinism

D) W1 webservice

- contract tests + OTel tests for endpoints like “latest feed snapshot”, “advisory lookup”.

E) Architecture test (your rule)

- Concelier must not reference scanner lattice evaluation.

3.3 Excititor (VEX/CSAF ingest + preserve prune source)

Projects: src/Excititor/* connectors + formats + policy + storage + webservice + worker.

Models present: C1 + L0 + S1 + W1 + WK1.

A) C1 connectors (CSAF/OpenVEX)

Same fixture discipline as Concelier:

- raw CSAF/OpenVEX fixture
- normalized VEX claim model snapshot
- explicit tests for edge semantics:
  - multiple product branches
  - status transitions
  - “not affected” with justification evidence

B) L0 formats/export

- Canonical formatting: `Formats.CSAF`, `Formats.OpenVEX`, `Formats.CycloneDX`
- Snapshot every emitted document.

C) WK1 worker

- end-to-end ingest job tests

- poison handling

- OTel correlation

D) “Preserve prune source” tests (mandatory)

- Input VEX with prune markers ⇒ output must preserve source references and pruning rationale.

- Explicitly test that Excititor does not compute lattice decisions (only preserves and transports).

3.4 Policy (engine, DSL, scoring, unknowns)

Projects: src/Policy/* + PolicyDsl + storage + gateway.

Models present: L0 + S1 + W1.

A) L0 policy engine and scoring

Property tests

- Policy evaluation monotonicity:
  - tightening the risk budget cannot decrease severity
- Unknown handling:
  - if unknowns > N, the verdict fails (where configured)
- Merge semantics:
  - if you have lattice merge rules, verify the join/meet properties you claim to support.
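A sketch of the monotonicity property as a seeded randomized check. `SketchPolicy.Evaluate` is a hypothetical three-level rule, not the real policy engine; with FsCheck the same property would be a `[Property]` method in the style of the example in section 6.1.

```csharp
using System;

public enum Verdict { Pass = 0, Warn = 1, Fail = 2 } // ordered by severity

public static class SketchPolicy
{
    // Hypothetical rule: Fail above the budget, Warn within 20% of it, otherwise Pass.
    public static Verdict Evaluate(double riskScore, double riskBudget) =>
        riskScore > riskBudget ? Verdict.Fail
        : riskScore > riskBudget * 0.8 ? Verdict.Warn
        : Verdict.Pass;

    // Property: for any score, shrinking the budget never produces a less severe verdict.
    public static void CheckMonotonicity(int seed, int cases)
    {
        var rng = new Random(seed);
        for (int i = 0; i < cases; i++)
        {
            double score = rng.NextDouble() * 100;
            double loose = rng.NextDouble() * 100;
            double tight = loose * rng.NextDouble(); // tight <= loose
            if (Evaluate(score, tight) < Evaluate(score, loose))
                throw new InvalidOperationException(
                    $"monotonicity violated: score={score}, tight={tight}, loose={loose}");
        }
    }
}
```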

Snapshot

- Verdict artifact canonical JSON snapshot (the auditor-facing output)

- Policy evaluation trace summary snapshot (stable structure)

B) Policy DSL

- DSL parser: property tests for roundtrips (parse → print → parse).

- Validator tool (`PolicyDslValidator`) should have golden tests for common invalid policy patterns.

C) S1 storage

- policy versioning immutability (published policies cannot be mutated)

- retrieval ordering

D) W1 gateway

- contract tests, auth, OTel.

3.5 Attestor + Signer + Provenance + Cryptography plugins

Projects: src/Attestor/*, src/Signer/*, src/Provenance/*, src/__Libraries/StellaOps.Cryptography*, ops/crypto/*, CryptoPro services.

Models present: L0 + S1 (where applicable) + W1 + CLI1 + C1 (KMS/remote plugins).

Key principle

Signatures may be non-deterministic depending on algorithm/provider. Your determinism gate must focus on:

- deterministic payload canonicalization

- deterministic hashes and envelope structure

- signature verification correctness (not byte equality) unless you use deterministic signing.

A) Canonical JSON + DSSE/in-toto envelopes

Must

- canonical payload bytes snapshot

- stable digest computation tests

- verification tests with fixed keys
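The DSSE specification signs the pre-authentication encoding (PAE) of the payload type and payload, not the raw payload, so PAE bytes are a natural snapshot target. A sketch of PAE plus an ECDSA P-256 sign/verify round-trip using only `System.Security.Cryptography`; the key is generated in-process for brevity, whereas the plan calls for fixed test keys (for example, loaded from a fixture PEM). The checks assert exact PAE bytes and successful verification, not byte-identical signatures, because ECDSA signatures are typically randomized.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class DsseSketch
{
    // PAE(type, body) = "DSSEv1" SP LEN(type) SP type SP LEN(body) SP body, LEN = ASCII decimal byte count.
    public static byte[] Pae(string payloadType, byte[] payload)
    {
        int typeLength = Encoding.UTF8.GetByteCount(payloadType);
        byte[] header = Encoding.UTF8.GetBytes(
            FormattableString.Invariant($"DSSEv1 {typeLength} {payloadType} {payload.Length} "));
        var result = new byte[header.Length + payload.Length];
        header.CopyTo(result, 0);
        payload.CopyTo(result, header.Length);
        return result;
    }

    public static byte[] Sign(ECDsa key, string payloadType, byte[] payload) =>
        key.SignData(Pae(payloadType, payload), HashAlgorithmName.SHA256);

    public static bool Verify(ECDsa key, string payloadType, byte[] payload, byte[] signature) =>
        key.VerifyData(Pae(payloadType, payload), signature, HashAlgorithmName.SHA256);
}
```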

B) Plugin tests

For each crypto plugin (BouncyCastle/CryptoPro/OpenSslGost/Pkcs11Gost/SimRemote/SmRemote/etc):

- capability detection tests

- sign/verify roundtrip tests

- error classification tests (e.g., key not present, provider unavailable)

C) W1 services

- token issuance and signing endpoints: auth + negative tests.

- OTel trace presence.

D) “Proof chain” integration

Expand tests/integration/StellaOps.Integration.ProofChain:

- build evidence bundle → sign → store → verify → replay → same digest

3.6 EvidenceLocker + Findings Ledger + Replay

Projects: EvidenceLocker, Findings.Ledger, Replay.Core, Audit.ReplayToken.

Models present: L0 + S1 + W1 + WK1.

A) Immutability and append-only behavior (EvidenceLocker)

- once stored, artifact cannot be overwritten

- same key + different payload ⇒ rejected or versioned (explicit behavior)

- concurrency tests for simultaneous writes
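A sketch of the write-once rule choosing the “rejected” option for same key + different payload. `WriteOnceStore` and `PutResult` are hypothetical; EvidenceLocker's real storage would enforce this in Postgres, but the three outcomes and the concurrency check carry over.

```csharp
using System;
using System.Collections.Concurrent;

public enum PutResult { Stored, AlreadyStoredIdentical, RejectedConflict }

// Hypothetical in-memory write-once store: same key + different payload is rejected.
public sealed class WriteOnceStore
{
    private readonly ConcurrentDictionary<string, byte[]> _items = new(StringComparer.Ordinal);

    public PutResult Put(string key, byte[] payload)
    {
        var copy = (byte[])payload.Clone(); // callers cannot mutate stored bytes afterwards
        byte[] stored = _items.GetOrAdd(key, copy); // atomic: exactly one writer wins per key
        if (ReferenceEquals(stored, copy)) return PutResult.Stored;
        return stored.AsSpan().SequenceEqual(payload)
            ? PutResult.AlreadyStoredIdentical
            : PutResult.RejectedConflict;
    }
}
```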

B) Ledger determinism (Findings)

- replay yields identical state

- ordering is deterministic (explicit checks)

C) Replay token security

- token expiration

- tamper detection
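A sketch of expiry and tamper checks for a hypothetical HMAC-signed token format (`<subject>.<expiryUnixSeconds>.<base64url HMAC-SHA256>`); the real Audit.ReplayToken format is not described here. The current time is passed in explicitly, which is what lets the test control the clock.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

// Hypothetical token format, for illustration only.
public static class ReplayTokenSketch
{
    public static string Issue(byte[] key, string subject, DateTimeOffset expiresAt)
    {
        string body = FormattableString.Invariant($"{subject}.{expiresAt.ToUnixTimeSeconds()}");
        return body + "." + Mac(key, body);
    }

    // "now" is injected so tests control the clock instead of reading DateTimeOffset.UtcNow.
    public static bool Validate(byte[] key, string token, DateTimeOffset now)
    {
        int macDot = token.LastIndexOf('.');
        if (macDot <= 0) return false;
        string body = token.Substring(0, macDot);
        byte[] expected = Encoding.ASCII.GetBytes(Mac(key, body));
        byte[] actual = Encoding.ASCII.GetBytes(token.Substring(macDot + 1));
        if (!CryptographicOperations.FixedTimeEquals(expected, actual)) return false; // tampered or wrong key

        int expiryDot = body.LastIndexOf('.');
        if (expiryDot < 0 || !long.TryParse(body.Substring(expiryDot + 1), NumberStyles.None,
                CultureInfo.InvariantCulture, out long expiresAt)) return false;
        return now.ToUnixTimeSeconds() < expiresAt; // rejected at or after the expiry instant
    }

    private static string Mac(byte[] key, string body)
    {
        using var hmac = new HMACSHA256(key);
        byte[] tag = hmac.ComputeHash(Encoding.UTF8.GetBytes(body));
        return Convert.ToBase64String(tag).TrimEnd('=').Replace('+', '-').Replace('/', '_');
    }
}
```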

3.7 Graph + TimelineIndexer

Projects: Graph.Api, Graph.Indexer, TimelineIndexer.*

Models present: L0 + S1 + W1 + WK1.

Must

- indexer end-to-end test: ingest events → build graph → query expected shape

- query determinism tests (stable ordering)

- contract tests for API schema

3.8 Scheduler + TaskRunner

Projects: Scheduler.* and TaskRunner.*

Models present: L0 + S1 + W1 + WK1 + CLI1.

Must

- scheduling invariants (property tests): next-run computations, backfill ranges

- end-to-end: enqueue tasks → worker executes → completion recorded

- retry/backoff deterministically with fake clock

- storage idempotency

3.9 Router + Messaging (core platform plumbing)

Projects: src/__Libraries/StellaOps.Router.*, StellaOps.Messaging.*, transports.

Models present: L0 + T1 + W1 + S1.

Must

- transport compliance suite:
  - in-memory transport
  - tcp/udp/tls
  - messaging transport
  - rabbitmq (if kept), run only in the integration lane
- property tests for framing and routing determinism
- integration tests that verify:
  - same message produces same route under same config
  - “at least once” behavior + consumer idempotency harness

3.10 Notify/Notifier

Projects: Notify.* and Notifier.*

Models present: L0 + C1 + S1 + W1 + WK1.

Must

- connector offline tests for email/slack/teams/webhook:
  - payload formatting snapshots
  - error handling snapshots
- worker end-to-end: event → notification queued → delivered via stub handler
- rate limit behavior if present

3.11 AirGap

Projects: AirGap.Controller, Importer, Policy, Policy.Analyzers, Storage, Time.

Models present: L0 + AN1 + S1 + W1 (controller) + CLI1 (if tools).

Must

- export/import bundle determinism:
  - same inputs ⇒ same bundle hash
- policy analyzers compilation tests
- controller API contract tests
- storage idempotency

4) CI lanes and release gates (exactly how to run it)

Lane 1: Unit (fast, PR gate)

- Runs all `Category=Unit` and `Category=Contract` tests that are offline.
- Must complete quickly; fail fast.

Lane 2: Integration (PR gate or merge gate)

- Runs `Category=Integration` with Testcontainers:
  - Postgres (required)
  - Valkey (required where used)
  - optional RabbitMQ (only for those transports)

Lane 3: Determinism (merge gate)

- Runs `tests/integration/StellaOps.Integration.Determinism`
- Runs canonical hash checks; produces artifacts:
  - `determinism.json` per suite
  - `sha256.txt` per artifact

Lane 4: Security (nightly + on demand)

- Runs `tests/security/StellaOps.Security.Tests`
- Runs fuzz-style negative tests for parsers/decoders (bounded).

Lane 5: Live connectors (nightly/weekly, never default)

- Runs `Category=Live`:
  - fetches upstream sources (NVD, OSV, GHSA, vendor CSAF hubs)
  - compares schema drift
  - generates updated fixtures (or fails with a diff)

Lane 6: Perf smoke (nightly + optional merge gate)

- Runs a small subset of perf tests and compares to baseline.

5) Concrete implementation backlog (what to do, in order)

Epic A — Foundations (required before module work)

- Add `StellaOps.TestKit` (+ optionally `.AspNet`, `.Containers`).
- Standardize `[Trait("Category", …)]` across existing test projects.
- Add root test runner scripts with lane filters.
- Add canonical snapshot utilities (hook into `StellaOps.Canonical.Json`).
- Add an `OtelCapture` helper so integration tests assert traces.

Epic B — Determinism gate everywhere

- Define a “determinism manifest” format used by:
  - Scanner pipelines
  - AirGap bundle export
  - Policy verdict artifacts
- Update determinism tests to emit stable hashes and store them as CI artifacts.

Epic C — Storage harness

- Implement a Postgres fixture:
  - start container
  - apply migrations automatically per module
  - reset DB state between tests (schema-per-test or truncation)
- Implement a Valkey fixture similarly.

Epic D — Connector fixture discipline

- For each connector project:
  - `Fixtures/` + `Expected/`
  - parser test: raw ⇒ normalized snapshot
- Wire `FixtureUpdater` to update fixtures (opt-in).

Epic E — WebService contract + telemetry

- For each webservice:
  - OpenAPI snapshot (or schema snapshot)
  - auth tests
  - OTel trace assertions
- Make contract drift a PR gate.

Epic F — Architecture tests

- Add assembly dependency rules:
  - Concelier/Excititor do not depend on the scanner lattice engine
- Add “no forbidden package” rules (e.g., Redis library) if you want compliance gates.

6) Code patterns you can copy immediately

6.1 Property test example (FsCheck-style)

```csharp
using System;
using FsCheck;
using FsCheck.Xunit;
using Xunit;

// SemVer and VersionComparer are your own domain types.
public sealed class VersionComparisonProperties
{
    [Property(Arbitrary = new[] { typeof(Generators) })]
    [Trait("Category", "Unit")]
    [Trait("Category", "Property")]
    public void Compare_is_antisymmetric(SemVer a, SemVer b)
    {
        var ab = VersionComparer.Compare(a, b);
        var ba = VersionComparer.Compare(b, a);
        Assert.Equal(Math.Sign(ab), -Math.Sign(ba));
    }

    // FsCheck picks up static members returning Arbitrary<T> from the registered type.
    // This fixed pool is a starting point; a richer generator would compose Gen<int> parts.
    private static class Generators
    {
        public static Arbitrary<SemVer> SemVer() =>
            Arb.From(Gen.Elements(
                new SemVer(0, 0, 0),
                new SemVer(1, 0, 0),
                new SemVer(1, 2, 3),
                new SemVer(10, 20, 30)));
    }
}
```

6.2 Canonical JSON determinism assertion

```csharp
using System;
using System.Security.Cryptography;
using Xunit;

public static class DeterminismAssert
{
    public static void CanonicalJsonStable<T>(T value, string expectedSha256)
    {
        // CanonicalJson is your canonical serializer (StellaOps.Canonical.Json).
        byte[] canonical = CanonicalJson.SerializeToUtf8Bytes(value);
        string actual = Convert.ToHexString(SHA256.HashData(canonical)).ToLowerInvariant();
        Assert.Equal(expectedSha256, actual);
    }
}
```

6.3 Postgres fixture skeleton (Testcontainers)

```csharp
using System.Threading.Tasks;
using Testcontainers.PostgreSql;
using Xunit;

// xUnit v2 IAsyncLifetime (Task-returning); xUnit v3 uses ValueTask instead.
public sealed class PostgresFixture : IAsyncLifetime
{
    private readonly PostgreSqlContainer _container =
        new PostgreSqlBuilder().WithImage("postgres:16").Build();

    public string ConnectionString => _container.GetConnectionString();

    public async Task InitializeAsync()
    {
        await _container.StartAsync();
        await ApplyMigrationsAsync(ConnectionString);
    }

    public async Task DisposeAsync() => await _container.DisposeAsync();

    private static Task ApplyMigrationsAsync(string connectionString)
    {
        // Call your migration runner for the module under test here.
        return Task.CompletedTask;
    }
}
```

6.4 OTel trace capture assertion

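```csharp
using System;
using System.Collections.Concurrent;
using System.Diagnostics;
using Xunit;

public sealed class OtelCapture : IDisposable
{
    // ActivityStopped can fire on any thread, so collect into a thread-safe queue.
    private readonly ConcurrentQueue<Activity> _activities = new();
    private readonly ActivityListener _listener;

    public OtelCapture()
    {
        _listener = new ActivityListener
        {
            // Listens to every ActivitySource in the process; filter by source name if tests run in parallel.
            ShouldListenTo = _ => true,
            Sample = (ref ActivityCreationOptions<ActivityContext> _) => ActivitySamplingResult.AllData,
            ActivityStopped = activity => _activities.Enqueue(activity)
        };
        ActivitySource.AddActivityListener(_listener);
    }

    public void AssertHasSpan(string name) =>
        Assert.Contains(_activities, a => a.DisplayName == name);

    public void Dispose() => _listener.Dispose();
}
```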

7) Deliverable you should add to the repo: a “Test Catalog” file

Create docs/testing/TEST_CATALOG.yml that acts as your enforcement checklist.

Example starter:

```yaml
models:
  L0:
    required: [unit, property, snapshot, determinism]
  S1:
    required: [integration_postgres, migrations, idempotency, concurrency]
  C1:
    required: [fixtures, snapshot, resilience, security]
  W1:
    required: [contract, authz, otel, negative]
  WK1:
    required: [end_to_end, retries, idempotency, otel]
  T1:
    required: [protocol_roundtrip, fuzz_invalid, semantics]
  AN1:
    required: [diagnostics, codefix, golden_generated]
  CLI1:
    required: [exit_codes, golden_output, determinism]
  PERF:
    required: [benchmark, perf_smoke]

modules:
  Scanner:
    models: [L0, AN1, S1, T1, W1, WK1, PERF]
    gates: [determinism, reachability_evidence, proof_spine]
  Concelier:
    models: [C1, L0, S1, W1, AN1]
    gates: [fixture_coverage, normalization_determinism, no_lattice_dependency]
  Excititor:
    models: [C1, L0, S1, W1, WK1]
    gates: [preserve_prune_source, format_snapshots, no_lattice_dependency]
  Policy:
    models: [L0, S1, W1]
    gates: [unknown_budget, verdict_snapshot]
```

This file becomes your roadmap and your enforcement ledger.

8) What I would implement first (highest leverage)

If you do only five concrete steps first, do these:

- StellaOps.TestKit + trait standardization across all test projects.

- Expand Determinism integration tests to cover SBOM/VEX/verdict/evidence bundles (hash artifacts).

- Implement a single PostgresFixture and migrate every storage test to it.

- Add connector fixture discipline (raw ⇒ normalized snapshot) for Concelier + Excititor.

- Add architecture tests enforcing your lattice placement rule (Scanner.WebService only).

Everything else becomes routine once these are in place.

----------------------------------------------- Part #2 -----------------------------------------------

I’m sharing this because the SBOM and attestation standards you’re tracking are actively evolving into more auditable, cryptographically strong building blocks for supply-chain CI/CD workflows, and both CycloneDX and Sigstore/in-toto are key to that future.

CycloneDX 1.6 & SPDX 3.0.1:

- CycloneDX v1.6 was released with major enhancements around cryptographic transparency, including Cryptographic Bill of Materials (CBOM) and native attestation support (CDXA), making it easier to attach and verify evidence about components and their properties. (CycloneDX)
- CycloneDX is designed to serve modern SBOM needs and can represent a wide range of supply-chain artifacts and relationships directly in the BOM. (FOSSA)
- SPDX 3.0.1 is the latest revision of the SPDX standard, continuing its role as a highly expressive SBOM format with broad metadata support and international recognition. (Wikipedia)
- Together, these formats give you strong foundations for “golden” SBOMs: standardized, richly described BOM artifacts that tools and auditors can easily consume.

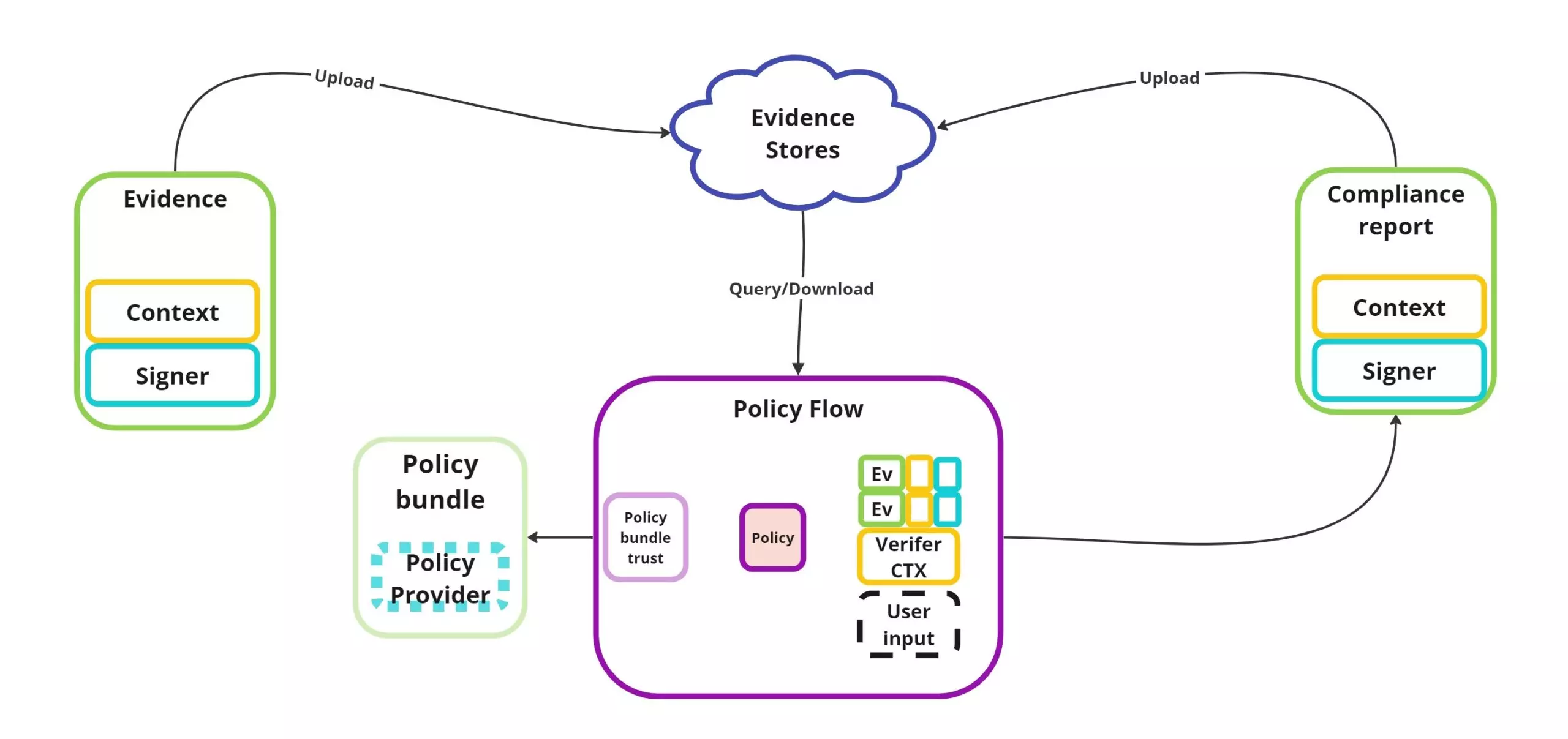

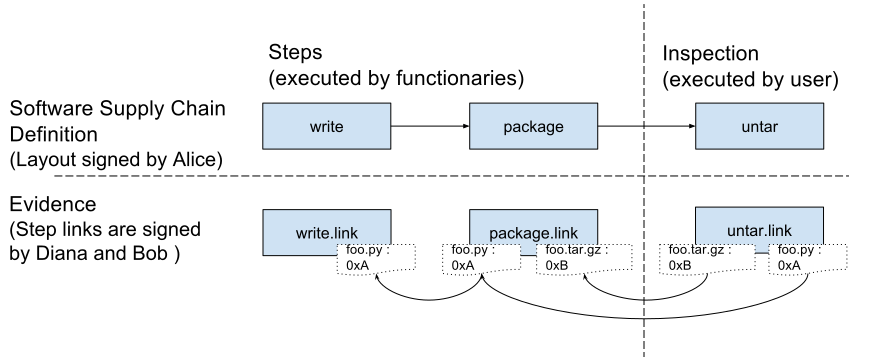

Attestations & DSSE:

- Modern attestation workflows (e.g., via in-toto and Sigstore/cosign) revolve around DSSE (Dead Simple Signing Envelope) for signing arbitrary predicate data like SBOMs or build metadata. (Sigstore)
- Tools like cosign let you sign SBOMs as attestations (e.g., the SBOM is the predicate about an OCI image subject) and then verify those attestations in CI or downstream tooling. (Trivy)
- DSSE provides a portable envelope format so that your signed evidence (attestations) is replayable and verifiable in CI builds, compliance scans, or deployment gates. (JFrog)

Why it matters for CI/CD:

- Standardizing on CycloneDX 1.6 and SPDX 3.0.1 formats ensures your SBOMs are both rich and interoperable.
- Embedding DSSE-signed attestations (in-toto/Sigstore) into your pipeline gives you verifiable, replayable evidence that artifacts were produced and scanned according to policy.
- This aligns with emerging supply-chain security practices where artifacts are not just built but cryptographically attested before release, enabling stronger traceability, non-repudiation, and audit readiness.

In short: focus first on adopting CycloneDX 1.6 + SPDX 3.0.1 as your canonical SBOM formats, and then integrate DSSE‑based attestations (in‑toto/Sigstore) to ensure those SBOMs and related CI artifacts are signed and verifiable across environments.