Here are some key developments in the software‑supply‑chain and vulnerability‑scoring world that you’ll want on your radar.

1. CVSS v4.0 – traceable scoring with richer context

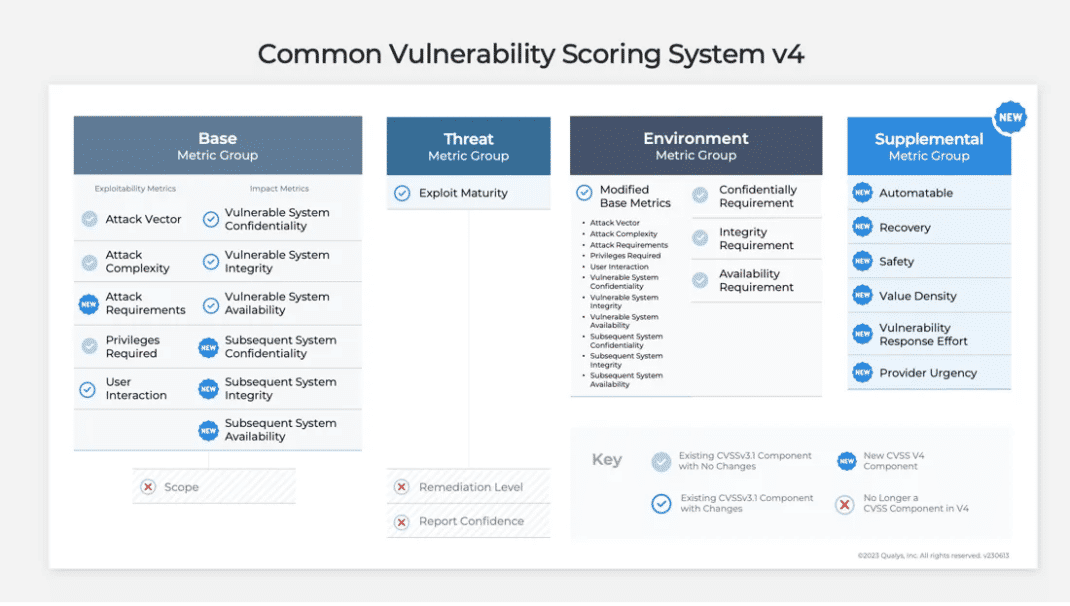

- CVSS v4.0 was officially released by FIRST (Forum of Incident Response & Security Teams) on November 1, 2023. (first.org)

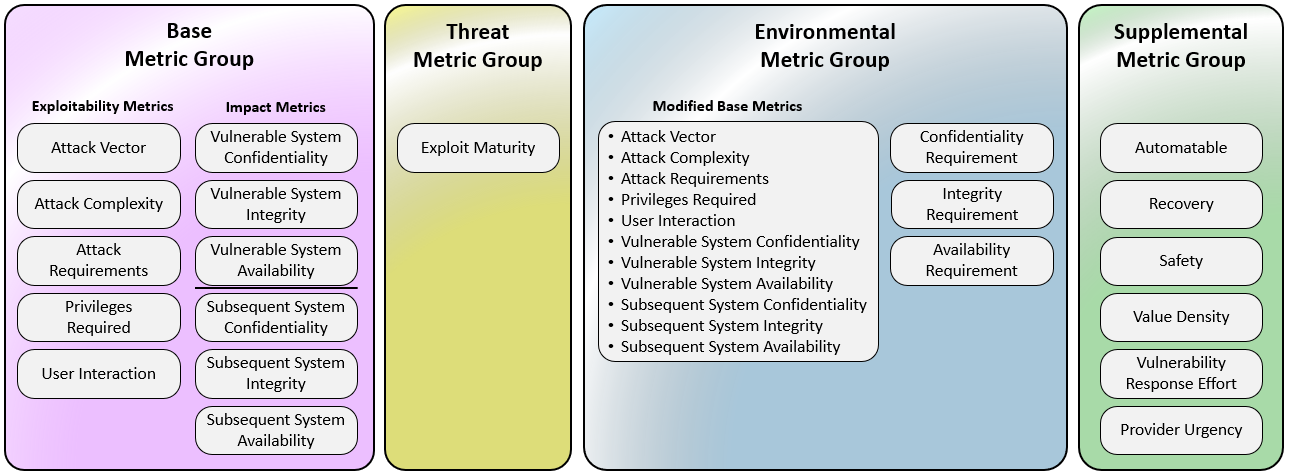

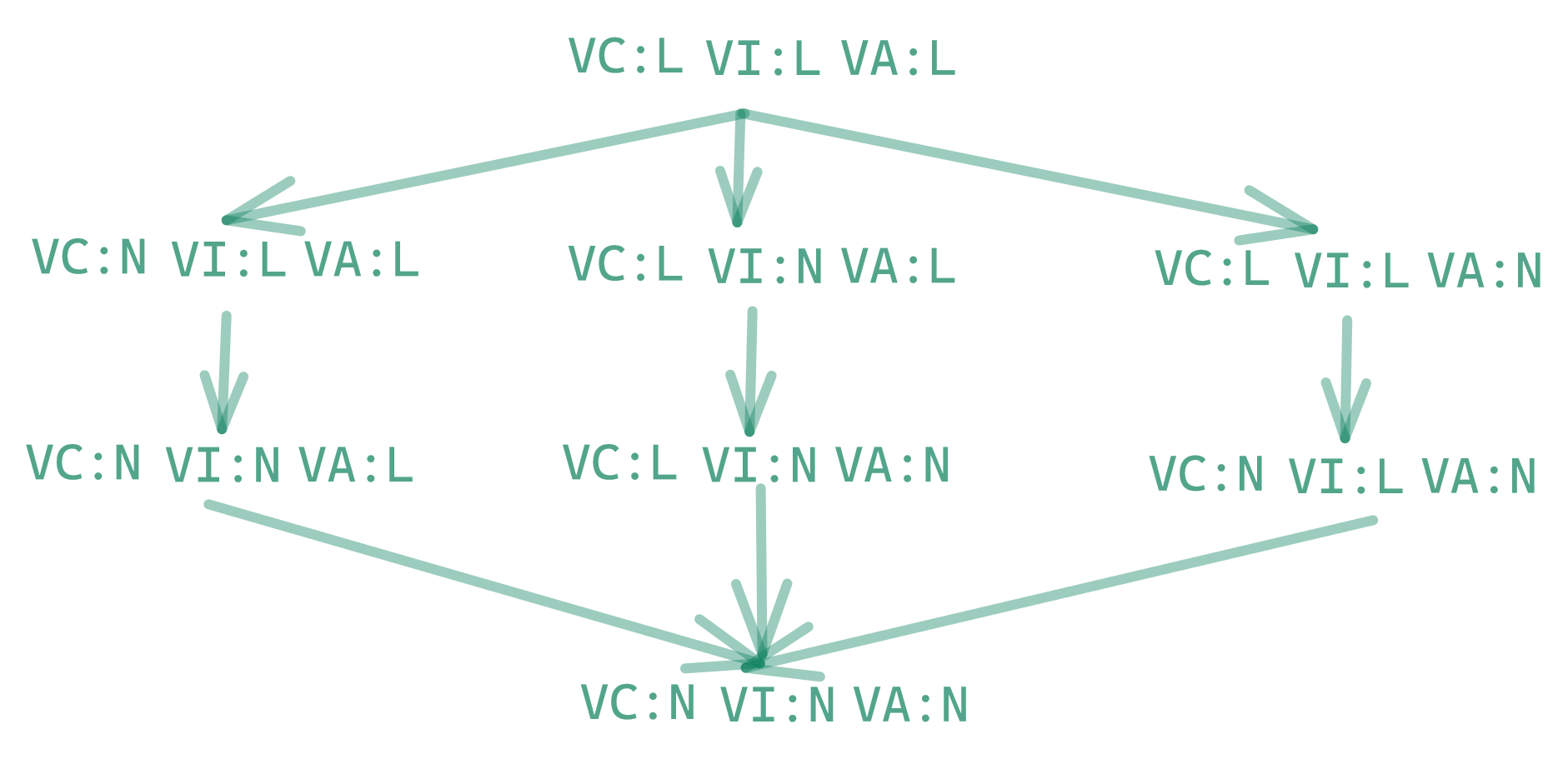

- The specification now clearly divides metrics into four groups: Base, Threat, Environmental, and Supplemental. (first.org)

- The National Vulnerability Database (NVD) has added support for CVSS v4.0 — meaning newer vulnerability records can carry v4‑style scores, vector strings and search filters. (NVD)

- What’s new/tangible: finer granularity in the Base metrics, a new “Attack Requirements” (AT) metric, and Supplemental metrics that add context without changing the score, so scores better reflect real‑world contextual risk. (Seemplicity)

- Why this matters: Enables more traceable evidence of how a score was derived (which metrics used, what context), supporting auditing, prioritisation and transparency.

Take‑away for your world: If you’re leveraging vulnerability scanning, SBOM enrichment or compliance workflows (given your interest in SBOM/VEX/provenance), then moving to or supporting CVSS v4.0 ensures you have stronger traceability and richer scoring context that maps into policy, audit and remediation workflows.

2. CycloneDX v1.7 – SBOM/VEX/provenance with cryptographic & IP transparency

- Version 1.7 of the SBOM standard from OWASP Foundation (CycloneDX) launched on October 21, 2025. (CycloneDX)

- Key enhancements: expanded Cryptography Bill of Materials (CBOM) support (CBOM itself arrived in v1.6), covering algorithm families, elliptic curves and similar detail, plus structured citations (who provided component info, how, when) to improve provenance. (CycloneDX)

- Provenance use‑cases: The spec enables declaring supplier/author/publisher metadata, component origin, external references. (CycloneDX)

- Broadening scope: CycloneDX now supports not just SBOM (software), but hardware BOMs (HBOM), machine learning BOMs, cryptographic BOMs (CBOM) and supports VEX/attestation use‑cases. (openssf.org)

- Why this matters: For your StellaOps architecture (with a strong emphasis on provenance, deterministic scans, trust‑frameworks) CycloneDX v1.7 provides native standard support for deeper audit‑ready evidence, cryptographic algorithm visibility (which matters for crypto‑sovereign readiness) and formal attestations/citations in the BOM.

Take‑away: Aligning your SBOM/VEX/provenance stack (e.g., scanner.webservice) to output CycloneDX v1.7‑compliant artifacts means you jump ahead in terms of traceability, auditability and future‑proofing (crypto and IP).

3. SLSA v1.2 Release Candidate 2 – supply‑chain build provenance standard

- On November 10, 2025, the Open Source Security Foundation (via the SLSA community) announced RC2 of SLSA v1.2, open for public comment until November 24, 2025. (SLSA)

- What’s new: Introduction of a Source Track (in addition to the Build Track) to capture source control provenance, distributed provenance, artifact attestations. (SLSA)

- Specification clarifies provenance/attestation formats, how builds should be produced, distributed, verified. (SLSA)

- Why this matters: SLSA gives you a standard framework for “I can trace this binary back to the code, the build system, the signer, the provenance chain,” which aligns directly with your strategic moats around deterministic replayable scans, proof‑of‑integrity graph, and attestations.

Take‑away: If you integrate SLSA v1.2 (once finalised) into StellaOps, you gain an industry‑recognised standard for build provenance and attestation, complementing your SBOM/VEX and CVSS code bases.

Why I’m sharing this with you

Given your interest in cryptographic‑sovereign readiness, deterministic scanning, provenance and audit‑grade supply‑chain tooling (your StellaOps moat list), this trifecta (CVSS v4.0 + CycloneDX v1.7 + SLSA v1.2) represents the major standards you need to converge on. They each address different layers: vulnerability scoring, component provenance and build/trust chain assurance. Aligning all three will give you a strong governance and tooling stack.

If you like, I can pull together a detailed gap‑analysis table (your current architecture versus what these standards demand) and propose roadmap steps for StellaOps to adopt them.

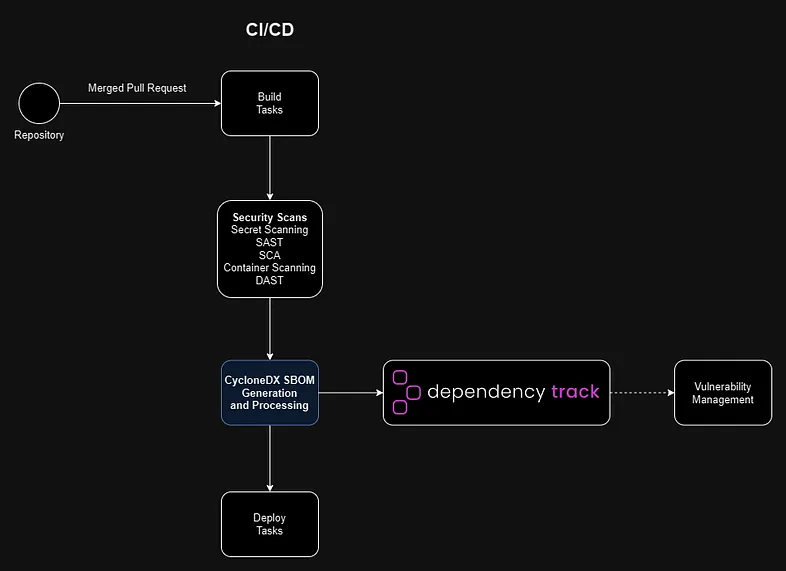

Cool, let’s turn all that standards talk into something your engineers can actually build against.

Below is a concrete implementation plan, broken into three workstreams plus a cross‑cutting track, each with phases, tasks and clear acceptance criteria:

- A — CVSS v4.0 integration (scoring & evidence)

- B — CycloneDX 1.7 SBOM/CBOM + provenance

- C — SLSA 1.2 (build + source provenance)

- X — Cross‑cutting (APIs, UX, docs, rollout)

I’ll assume you have:

- A scanner / ingestion pipeline,

- A central data model (DB or graph),

- An API + UI layer (StellaOps console or similar),

- CI/CD on GitHub/GitLab/whatever.

A. CVSS v4.0 integration

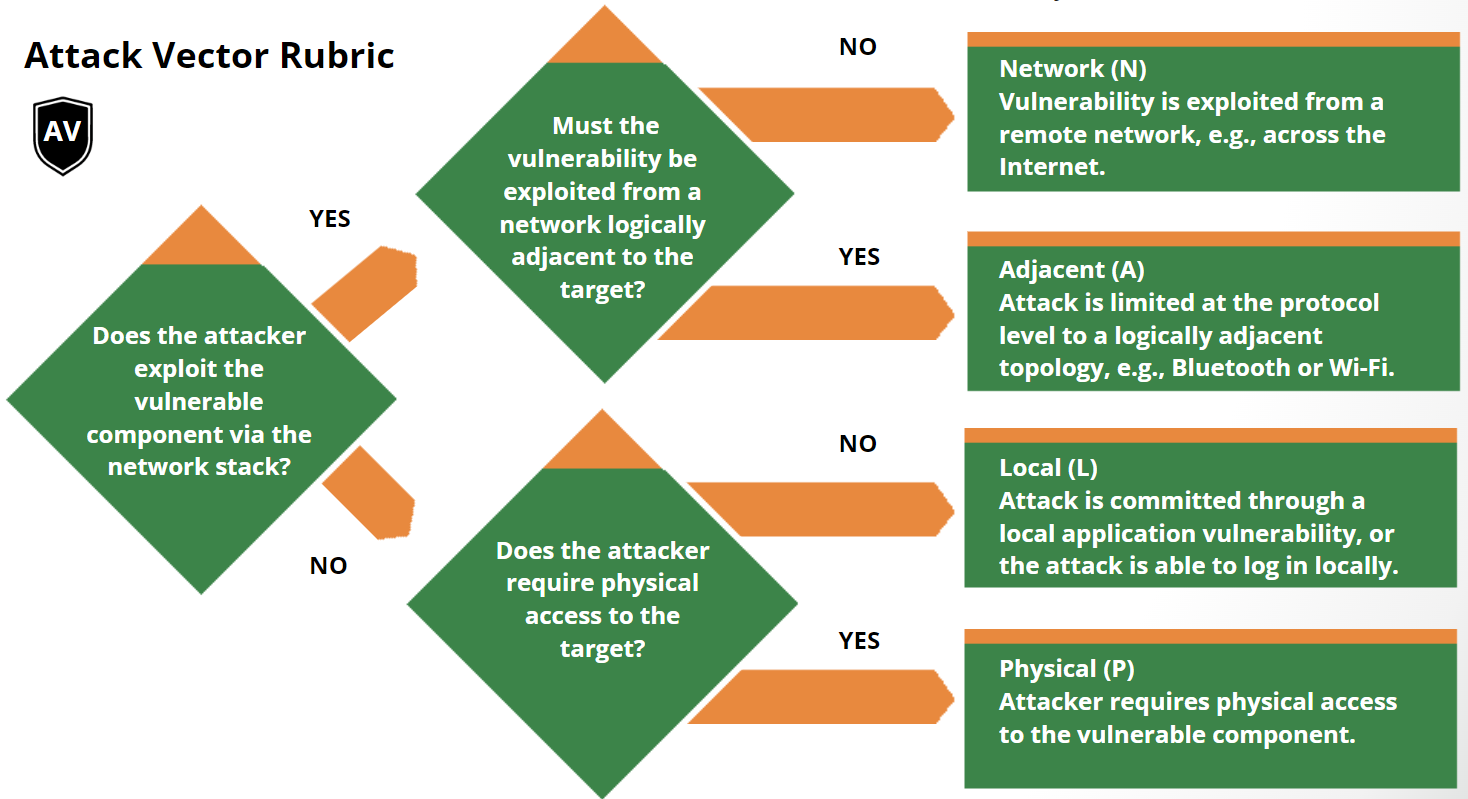

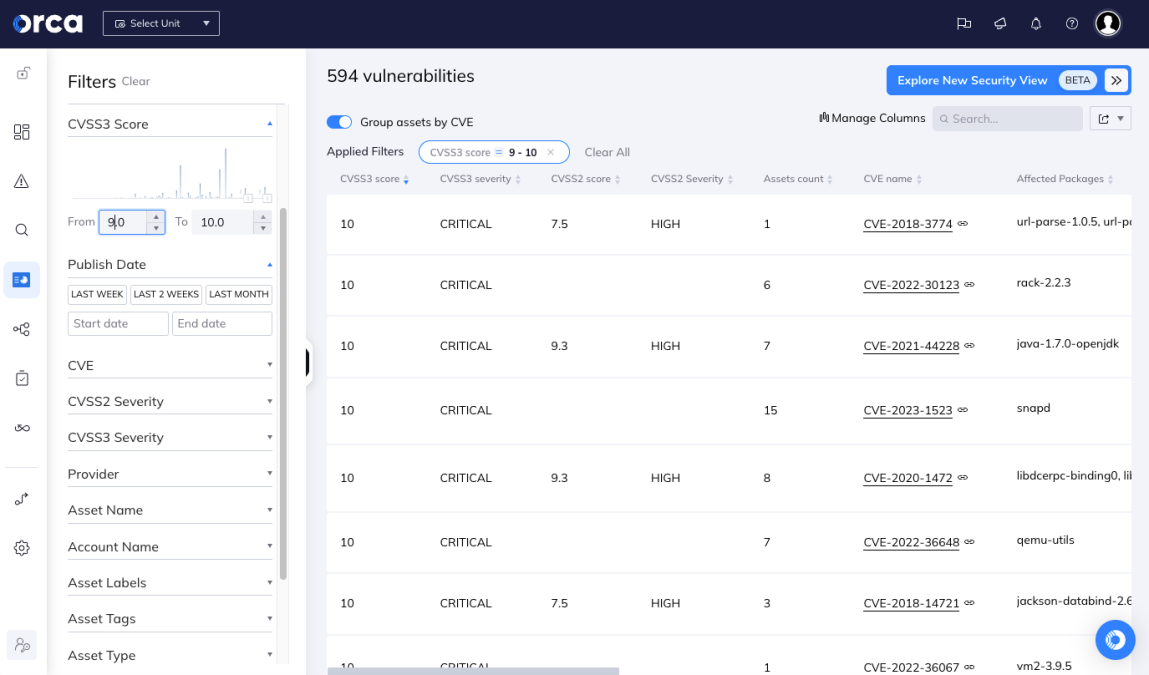

Goal: Your platform can ingest, calculate, store and expose CVSS v4.0 scores and vectors alongside (or instead of) v3.x, using the official FIRST spec and NVD data. (FIRST)

A1. Foundations & decisions

Tasks

- Pick canonical CVSSv4 library or implementation
  - Evaluate existing OSS libraries for your main language(s), or plan an internal one based directly on FIRST’s spec (Base, Threat, Environmental, Supplemental groups).
  - Decide:
    - Supported metric groups (Base only vs. Base+Threat+Environmental+Supplemental).
    - Which groups your UI will expose/edit vs. read-only from upstream feeds.
- Versioning strategy
  - Decide how to represent CVSS v3.0/v3.1/v4.0 in your DB: a `vulnerability_scores` table with `version`, `vector`, `base_score`, `environmental_score`, `temporal_score`, `severity_band`.
  - Define precedence rules: if both v3.1 and v4.0 exist, which one your “headline” severity uses.

Acceptance criteria

- Tech design doc reviewed & approved.

- Decision on library vs. custom implementation recorded.

- DB schema migration plan ready.
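To make the precedence decision concrete, here is a minimal sketch of a “headline” selector. The `CvssScore` type, the `headline_score` function and the newest-version-wins ordering are all assumptions for illustration; your design doc may well choose a different rule.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Assumed policy: the newest CVSS version present wins. Reorder to taste.
VERSION_PRECEDENCE = ("4.0", "3.1", "3.0", "2.0")


@dataclass(frozen=True)
class CvssScore:
    """Hypothetical in-memory shape of one stored score."""
    version: str
    vector: str
    base_score: float
    severity: str


def headline_score(scores: Iterable[CvssScore]) -> Optional[CvssScore]:
    """Return the score from the highest-precedence version, or None."""
    known = [s for s in scores if s.version in VERSION_PRECEDENCE]
    if not known:
        return None
    # A lower index in VERSION_PRECEDENCE means higher precedence.
    return min(known, key=lambda s: VERSION_PRECEDENCE.index(s.version))
```

Keeping the ordering in one tuple means the rule can later be made configurable per tenant without touching call sites.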

A2. Data model & storage

Tasks

- DB schema changes
  - Add a `cvss_scores` table or expand the existing vulnerability table, e.g.:

        cvss_scores
          id                   (PK)
          vuln_id              (FK)
          source               (enum: NVD, scanner, manual)
          version              (enum: 2.0, 3.0, 3.1, 4.0)
          vector               (string)
          base_score           (float)
          temporal_score       (float, nullable)  // v4.0 renames the Temporal group to Threat
          environmental_score  (float, nullable)
          severity             (enum: NONE/LOW/MEDIUM/HIGH/CRITICAL)
          metrics_json         (JSONB)  // raw metrics for traceability
          created_at / updated_at

- Traceable evidence
  - Store:
    - Raw CVSS vector string (e.g. `CVSS:4.0/AV:N/...` etc.).
    - Parsed metrics as JSON for audit (show “why” a score is what it is).
  - Optional: add `calculated_by` + `calculated_at` for your internal scoring runs.

Acceptance criteria

- Migrations applied in dev.

- Read/write repository functions implemented and unit‑tested.
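For the “parsed metrics as JSON” part, a small parser is enough to fill a column like `metrics_json`. This is a sketch only: `parse_cvss_vector` is a hypothetical helper that splits the vector string and does not validate metric names or values against the FIRST spec.

```python
import json


def parse_cvss_vector(vector: str) -> dict:
    """Split 'CVSS:<version>/K:V/K:V/...' into a version and a metric map."""
    prefix, _, rest = vector.partition("/")
    scheme, _, version = prefix.partition(":")
    if scheme != "CVSS" or not version or not rest:
        raise ValueError(f"not a CVSS vector: {vector!r}")
    metrics = {}
    for part in rest.split("/"):
        key, sep, value = part.partition(":")
        if not sep or not key or not value:
            raise ValueError(f"malformed metric: {part!r}")
        if key in metrics:
            raise ValueError(f"duplicate metric: {key!r}")
        metrics[key] = value
    return {"version": version, "metrics": metrics}


if __name__ == "__main__":
    parsed = parse_cvss_vector(
        "CVSS:4.0/AV:N/AC:L/AT:N/PR:N/UI:N/VC:H/VI:H/VA:H/SC:N/SI:N/SA:N"
    )
    # The JSON text is what would land in the metrics_json column.
    print(json.dumps(parsed["metrics"], sort_keys=True))
```

Storing the raw vector next to the parsed map lets an auditor confirm that the two agree.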

A3. Ingestion & calculation

Tasks

- NVD / external feeds
  - Update your NVD ingestion to read CVSS v4.0 when present in JSON `metrics` fields. (NVD)
  - Map NVD → internal `cvss_scores` model.
- Local CVSSv4 calculator service
  - Implement a service (or module) that:
    - Accepts metric values (Base/Threat/Environmental/Supplemental).
    - Produces:
      - Canonical vector.
      - Base/Threat/Environmental scores.
      - Severity band.
  - Make this callable by:
    - Scanner engine (calculating scores for private vulns).
    - UI (recalculate button).
    - API (for automated clients).

Acceptance criteria

- Given a set of reference vectors from FIRST, your calculator returns exact expected scores.

- NVD ingestion for a sample of CVEs produces v4 scores in your DB.
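Two of the smaller pieces here can be sketched without a full calculator: the severity band lookup and the NVD-to-internal mapping. The band thresholds follow the CVSS qualitative severity rating scale. The NVD field names (`cvssMetricV40`, `cvssData`, `vectorString`, `baseScore`, `baseSeverity`) are my assumption about the NVD JSON layout and should be checked against the live schema; `rows_from_nvd` is a hypothetical helper. The scoring algorithm itself is deliberately left to whichever library you pick.

```python
def severity_band(score: float) -> str:
    """Map a 0.0-10.0 score to the CVSS qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"score out of range: {score}")
    if score == 0.0:
        return "NONE"
    if score < 4.0:
        return "LOW"
    if score < 7.0:
        return "MEDIUM"
    if score < 9.0:
        return "HIGH"
    return "CRITICAL"


def rows_from_nvd(cve: dict) -> list:
    """Turn one NVD CVE record into rows shaped like the cvss_scores table."""
    rows = []
    # Assumed NVD key for v4.0 metrics; confirm against the live API schema.
    for entry in cve.get("metrics", {}).get("cvssMetricV40", []):
        data = entry.get("cvssData", {})
        score = data.get("baseScore")
        if score is None:
            continue  # nothing usable to store
        rows.append({
            "vuln_id": cve.get("id"),
            "source": "NVD",
            "version": data.get("version", "4.0"),
            "vector": data.get("vectorString"),
            "base_score": float(score),
            # Prefer the upstream band, fall back to our own lookup.
            "severity": data.get("baseSeverity") or severity_band(float(score)),
        })
    return rows
```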

A4. UI & API

Tasks

- API
  - Extend vulnerability API payload with:

        {
          "id": "CVE-2024-XXXX",
          "cvss": [
            {
              "version": "4.0",
              "source": "NVD",
              "vector": "CVSS:4.0/AV:N/...",
              "base_score": 8.3,
              "severity": "HIGH",
              "metrics": { "...": "..." }
            }
          ]
        }

  - Add filters: `cvss.version`, `cvss.min_score`, `cvss.severity`.
- UI
  - On vulnerability detail:
    - Show v3.x and v4.0 side-by-side.
    - Expandable panel with metric breakdown and “explain my score” text.
  - On list views:
    - Support sorting & filtering by v4.0 base score & severity.

Acceptance criteria

- Frontend can render v4.0 vectors and scores.

- QA can filter vulnerabilities using v4 metrics via API and UI.
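The three filters can be prototyped against the payload shape above before any query layer exists. In this sketch `matches_cvss_filter` is a hypothetical name, and the rule that all supplied filters must hold on the same score entry is an assumption you may want to relax.

```python
from typing import Optional


def matches_cvss_filter(
    vuln: dict,
    version: Optional[str] = None,
    min_score: Optional[float] = None,
    severity: Optional[str] = None,
) -> bool:
    """True if any one CVSS entry on the vulnerability satisfies every filter given."""
    for entry in vuln.get("cvss", []):
        if version is not None and entry.get("version") != version:
            continue
        if min_score is not None and entry.get("base_score", 0.0) < min_score:
            continue
        if severity is not None and entry.get("severity") != severity:
            continue
        return True
    return False
```

Requiring a single entry to satisfy every filter avoids a v3.1 score meeting `cvss.min_score` while a different v4.0 entry meets `cvss.version`.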

A5. Migration & rollout

Tasks

- Backfill
  - For all stored vulnerabilities where metrics exist:
    - If v4 is not present but the v4 metric inputs are available, compute v4. A v3.x vector alone is not enough, because v4.0 adds metrics such as Attack Requirements that have no v3.x equivalent.
  - Store both historical (v3.x) and new v4 for comparison.
- Feature flag / rollout
  - Introduce feature flag `cvss_v4_enabled` per tenant or environment.
  - Run A/B comparison internally before enabling for all users.

Acceptance criteria

- Backfill job runs successfully on staging data.

- Rollout plan + rollback strategy documented.

B. CycloneDX 1.7 SBOM/CBOM + provenance

CycloneDX 1.7 is now the current spec; it extends the Cryptography BOM (CBOM) introduced in 1.6 and adds structured citations/provenance to strengthen trust and traceability. (CycloneDX)

B1. Decide scope & generators

Tasks

- Select BOM formats & languages
  - JSON as your primary format (`application/vnd.cyclonedx+json`). (CycloneDX)
  - Components you’ll cover:
    - Application BOMs (packages, containers).
    - Optional: infrastructure (IaC, images).
    - Optional: CBOM for crypto usage.
- Choose or implement generators
  - For each ecosystem (e.g., Maven, NPM, PyPI, containers), choose:
    - Existing tools (`cyclonedx-maven-plugin`, `cyclonedx-npm`, etc).
    - Or central generator using lockfiles/manifests.

Acceptance criteria

- Matrix of ecosystems → generator tool finalized.

- POC shows valid CycloneDX 1.7 JSON BOM for one representative project.

B2. Schema alignment & validation

Tasks

- Model updates
  - Extend your internal SBOM model to include:
    - `spec_version: "1.7"`
    - `bomFormat: "CycloneDX"`
    - `serialNumber` (UUID/URI).
    - `metadata.tools` (how BOM was produced).
    - `properties`, `licenses`, `crypto` (for CBOM).
  - For provenance:
    - `metadata.authors`, `metadata.manufacture`, `metadata.supplier`.
    - `components[x].evidence` and `components[x].properties` for evidence & citations. (CycloneDX)
- Validation pipeline
  - Integrate the official CycloneDX JSON schema validation step into:
    - CI (for projects generating BOMs).
    - Your ingestion path (reject/flag invalid BOMs).

Acceptance criteria

- Any BOM produced must pass CycloneDX 1.7 JSON schema validation in CI.

- Ingestion rejects malformed BOMs with clear error messages.
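Before the full schema validation runs, a cheap structural pre-check can produce the “clear error messages” asked for above. This is a sketch, not a substitute for validating against the official CycloneDX JSON schema; `precheck_bom` is a hypothetical helper and only looks at a few top-level fields.

```python
import re

# serialNumber is expected to be a UUID URN such as urn:uuid:3e671687-...
SERIAL_RE = re.compile(
    r"^urn:uuid:[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$"
)


def precheck_bom(bom: dict, expected_spec: str = "1.7") -> list:
    """Return human-readable problems; an empty list means the pre-check passed."""
    errors = []
    if bom.get("bomFormat") != "CycloneDX":
        errors.append(f"bomFormat must be 'CycloneDX', got {bom.get('bomFormat')!r}")
    if bom.get("specVersion") != expected_spec:
        errors.append(
            f"specVersion must be {expected_spec!r}, got {bom.get('specVersion')!r}"
        )
    serial = bom.get("serialNumber")
    if serial is not None and not SERIAL_RE.match(str(serial)):
        errors.append(f"serialNumber is not a UUID URN: {serial!r}")
    if not isinstance(bom.get("components", []), list):
        errors.append("components must be an array")
    return errors
```

Returning a list rather than raising on the first problem lets the ingestion API report every issue in one response.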

B3. Provenance & citations in BOMs

Tasks

- Define provenance policy
  - Minimal set for every BOM:
    - Author (CI system / team).
    - Build pipeline ID, commit, repo URL.
    - Build time.
  - Extended:
    - `externalReferences` for:
      - Build logs.
      - SLSA attestations.
      - Security reports (e.g., scanner runs).
- Implement metadata injection
  - In your CI templates:
    - Capture build info (commit SHA, pipeline ID, creator, environment).
    - Add it into CycloneDX `metadata` and `properties`.
  - For evidence:
    - Use `components[x].evidence` to reference where a component was detected (e.g., file paths, manifest lines).

Acceptance criteria

- For any BOM, engineers can trace:
  - WHO built it.
  - WHEN it was built.
  - WHICH repo/commit/pipeline it came from.
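The metadata injection step might look roughly like this in a CI helper. The environment variable names and the `stellaops:build:*` property names are invented for illustration; only the general shape (a `timestamp`, an `authors` list and name/value `properties` under `metadata`) is meant to follow CycloneDX.

```python
from datetime import datetime, timezone
from typing import Mapping, Optional

# Hypothetical mapping from property name to the CI variable that feeds it.
PROPERTY_SOURCES = {
    "stellaops:build:repo": "BUILD_REPO_URL",
    "stellaops:build:commit": "BUILD_COMMIT_SHA",
    "stellaops:build:pipeline-id": "BUILD_PIPELINE_ID",
}


def build_metadata(env: Mapping[str, str], now: Optional[datetime] = None) -> dict:
    """Assemble a CycloneDX-style metadata object answering who/when/which."""
    built_at = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    properties = [
        {"name": name, "value": env[var]}
        for name, var in PROPERTY_SOURCES.items()
        if env.get(var)  # skip variables the CI system did not set
    ]
    return {
        "timestamp": built_at.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "authors": [{"name": env.get("BUILD_CREATOR", "unknown")}],
        "properties": properties,
    }
```

In a pipeline this would typically be called with `os.environ` and merged into the generator's output before the BOM is validated and uploaded.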

B4. CBOM (Cryptography BOM) support (optional but powerful)

Tasks

- Crypto inventory
  - Scanner enhancement:
    - Detect crypto libraries & primitives used (e.g., OpenSSL, bcrypt, TLS versions).
    - Map them into CycloneDX CBOM structures in `crypto` sections (per spec).
- Policy hooks
  - Define policy checks:
    - “Disallow SHA-1,”
    - “Warn on RSA < 2048 bits,”
    - “Flag non-FIPS-approved algorithms.”

Acceptance criteria

- From a BOM, you can list all cryptographic algorithms and libraries used in an application.

- At least one simple crypto policy implemented (e.g., SHA-1 usage alert).
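Two of the policy checks above are simple enough to sketch directly. The input here is a hypothetical normalized record (`ref`, `algorithm`, optional `key_bits`) that your scanner would derive from the CBOM; it is not the CycloneDX structure itself, and the rule names are made up.

```python
def evaluate_crypto_policy(assets: list) -> list:
    """Return findings for SHA-1 usage (deny) and RSA keys under 2048 bits (warn)."""
    findings = []
    for asset in assets:
        # Normalize so that 'sha-1', 'SHA1' and 'Sha-1' all compare equal.
        name = str(asset.get("algorithm", "")).upper().replace("-", "")
        bits = asset.get("key_bits")
        if name == "SHA1":
            findings.append(
                {"asset": asset.get("ref"), "rule": "disallow-sha1", "level": "deny"}
            )
        if name == "RSA" and bits is not None and bits < 2048:
            findings.append(
                {"asset": asset.get("ref"), "rule": "weak-rsa-key", "level": "warn"}
            )
    return findings
```

An RSA record with no `key_bits` is passed over silently in this sketch; a stricter policy might flag the missing key size instead.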

B5. Ingestion, correlation & UI

Tasks

- Ingestion service
  - API endpoint: `POST /sboms` accepting CycloneDX 1.7 JSON.
  - Store:
    - Raw BOM (for evidence).
    - Normalized component graph (packages, relationships).
    - Link BOM to:
      - Repo/project.
      - Build (from SLSA provenance).
      - Deployed asset.
- Correlation
  - Join SBOM components with:
    - Vulnerability data (CVE/CWE/CPE/PURL).
    - Crypto policy results.
  - Maintain “asset → BOM → components → vulnerabilities” graph.
- UI
  - For any service/image:
    - Show latest BOM metadata (CycloneDX version, timestamp).
    - Component list with vulnerability badges.
    - Crypto tab (if CBOM enabled).
    - Provenance tab (author, build pipeline, SLSA attestation links).

Acceptance criteria

- Given an SBOM upload, the UI shows:
  - Components.
  - Associated vulnerabilities.
  - Provenance metadata.
- API consumers can fetch SBOM + correlated risk in a single call.
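The correlation join can be illustrated with a dictionary keyed by package URL. This sketch assumes exact `purl` string matches and a pre-built `vulns_by_purl` index (both simplifications; real matching usually needs version-range logic), and `correlate` is a hypothetical name.

```python
def correlate(bom: dict, vulns_by_purl: dict) -> list:
    """Attach known vulnerability IDs to each BOM component by exact purl match."""
    result = []
    for component in bom.get("components", []):
        purl = component.get("purl")
        result.append({
            "name": component.get("name"),
            "version": component.get("version"),
            "purl": purl,
            # Components without a purl, or with no known issues, get an empty list.
            "vulnerabilities": sorted(vulns_by_purl.get(purl, [])),
        })
    return result
```

The output is already close to what a single “SBOM + correlated risk” API response could return per component.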

C. SLSA 1.2 build + source provenance

SLSA 1.2 (a release candidate at the time of writing, so details may still change) introduces a Source Track in addition to the Build Track, defining levels and attestation formats for both source control and build provenance. (SLSA)

C1. Target SLSA levels & scope

Tasks

- Choose target levels
  - For each critical product:
    - Pick Build Track level (e.g., target L2 now, L3 later).
    - Pick Source Track level (e.g., L1 for all, L2 for sensitive repos).
- Repo inventory
  - Classify repos by risk:
    - Critical (agents, scanners, control-plane).
    - Important (integrations).
    - Low‑risk (internal tools).
  - Map target SLSA levels accordingly.

Acceptance criteria

- For every repo, there is an explicit target SLSA Build + Source level.

- Gap analysis doc exists (current vs target).

C2. Build provenance in CI/CD

Tasks

- Attestation generation
  - For each CI pipeline:
    - Use SLSA-compatible builders or tooling (e.g., `slsa-github-generator`, `slsa-framework` actions, Tekton Chains, etc.) to produce build provenance attestations in SLSA 1.2 format.
  - Attestation content includes:
    - Builder identity.
    - Build inputs (commit, repo, config).
    - Build parameters.
    - Produced artifacts (digest, image tags).
- Signing & storage
  - Sign attestations (Sigstore/cosign or equivalent).
  - Store:
    - In an OCI registry (as artifacts).
    - Or in a dedicated provenance store.
  - Expose pointer to attestation in:
    - BOM (`externalReferences`).
    - Your StellaOps metadata.

Acceptance criteria

- For any built artifact (image/binary), you can retrieve a SLSA attestation proving:
  - What source it came from.
  - Which builder ran.
  - What steps were executed.
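To give a feel for what such an attestation carries, here is a sketch that assembles an unsigned in-toto statement with a SLSA provenance predicate. The field layout follows the v1 provenance predicate as I understand it, and I am assuming 1.2 keeps that layout, so check it against the published spec. The `buildType` URI, parameter names and function name are placeholders. In practice the builder generates this, not your own code.

```python
import hashlib


def provenance_statement(
    artifact_name: str,
    artifact_bytes: bytes,
    builder_id: str,
    repo_uri: str,
    commit: str,
) -> dict:
    """Build an unsigned in-toto statement describing one artifact's build."""
    digest = hashlib.sha256(artifact_bytes).hexdigest()
    return {
        "_type": "https://in-toto.io/Statement/v1",
        "subject": [{"name": artifact_name, "digest": {"sha256": digest}}],
        "predicateType": "https://slsa.dev/provenance/v1",
        "predicate": {
            "buildDefinition": {
                # Placeholder build type URI, not a registered one.
                "buildType": "https://example.com/stellaops/build/v1",
                "externalParameters": {"repository": repo_uri, "ref": commit},
                "resolvedDependencies": [
                    {"uri": f"git+{repo_uri}", "digest": {"gitCommit": commit}}
                ],
            },
            "runDetails": {"builder": {"id": builder_id}},
        },
    }
```

The `subject` digest is what ties the attestation to the artifact, so the verifier recomputes it from the bytes it actually pulled.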

C3. Source Track controls

Tasks

- Source provenance
  - Implement controls to support SLSA Source Track:
    - Enforce protected branches.
    - Require code review (e.g., 2 reviewers) for main branches.
    - Require signed commits for critical repos.
  - Log:
    - Author, reviewers, branch, PR ID, merge SHA.
- Source attestation
  - For each release:
    - Generate source attestations capturing:
      - Repo URL and commit.
      - Review status.
      - Policy compliance (review count, checks passing).
  - Link these to build attestations (Source → Build provenance chain).

Acceptance criteria

- For a release, you can prove:
  - Which reviews happened.
  - Which branch strategy was followed.
  - That policies were met at merge time.

C4. Verification & policy in StellaOps

Tasks

- Verifier service
  - Implement a service that:
    - Fetches SLSA attestations (source + build).
    - Verifies signatures and integrity.
    - Evaluates them against policies:
      - “Artifact must have SLSA Build L2 attestation from trusted builders.”
      - “Critical services must have Source L2 attestation (review, branch protections).”
- Runtime & deployment gates
  - Integrate verification into:
    - Admission controller (Kubernetes or deployment gate).
    - CI release stage (block promotion if SLSA requirements not met).
- UI
  - On artifact/service detail page:
    - Surface SLSA level achieved (per track).
    - Status (pass/fail).
    - Drill-down view of attestation evidence (who built, when, from where).

Acceptance criteria

- A deployment can be blocked (in a test env) when SLSA requirements are not satisfied.

- Operators can visually see SLSA status for an artifact/service.
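The policy evaluation step of the verifier could start as small as this. It assumes signature checking has already happened elsewhere and been summarized into a hypothetical `VerifiedAttestation` record; the function name and message wording are illustrative only.

```python
from dataclasses import dataclass
from typing import Iterable, Mapping, Optional, Set, Tuple


@dataclass(frozen=True)
class VerifiedAttestation:
    """Hypothetical summary produced after signature verification."""
    track: str                 # "build" or "source"
    level: int                 # SLSA level claimed for that track
    builder_id: Optional[str]  # only meaningful for the build track
    signature_valid: bool


def evaluate_slsa_policy(
    attestations: Iterable[VerifiedAttestation],
    required_levels: Mapping[str, int],
    trusted_builders: Set[str],
) -> Tuple[bool, list]:
    """Return (allowed, reasons); reasons lists each unmet requirement."""
    found = list(attestations)
    reasons = []
    for track, min_level in required_levels.items():
        acceptable = [
            a for a in found
            if a.track == track and a.signature_valid and a.level >= min_level
        ]
        if track == "build":
            # Build attestations only count when they come from a trusted builder.
            acceptable = [a for a in acceptable if a.builder_id in trusted_builders]
        if not acceptable:
            reasons.append(f"no acceptable {track} attestation at level >= {min_level}")
    return (not reasons, reasons)
```

An admission controller or CI release stage would block when the first element is `False` and surface the reasons to the operator.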

X. Cross‑cutting: APIs, UX, docs, rollout

X1. Unified data model & APIs

Tasks

- Graph relationships
  - Model the relationship:
    - Source repo → SLSA Source attestation → Build attestation → Artifact → SBOM (CycloneDX 1.7) → Components → Vulnerabilities (CVSS v4).
- Graph queries
  - Build API endpoints for:
    - “Given a CVE, show all affected artifacts and their SLSA + BOM evidence.”
    - “Given an artifact, show its full provenance chain and risk posture.”

Acceptance criteria

- At least 2 end‑to‑end queries work:
  - CVE → impacted assets with scores + provenance.
  - Artifact → SBOM + vulnerabilities + SLSA + crypto posture.
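The “CVE → impacted assets” query is a reverse walk over that chain. Here is a minimal in-memory sketch; `EvidenceGraph`, the `(kind, id)` node tuples and the function names are assumptions, and a real deployment would run the same traversal in your database or graph store.

```python
from collections import defaultdict, deque


class EvidenceGraph:
    """Tiny directed graph; nodes are (kind, id) tuples such as ("artifact", "img:1")."""

    def __init__(self):
        self._parents = defaultdict(set)

    def link(self, parent, child):
        """Record an edge parent -> child, e.g. artifact -> sbom."""
        self._parents[child].add(parent)

    def ancestors(self, node):
        """Breadth-first walk upstream from node, returning every reachable parent."""
        seen, queue = set(), deque([node])
        while queue:
            current = queue.popleft()
            for parent in self._parents.get(current, ()):
                if parent not in seen:
                    seen.add(parent)
                    queue.append(parent)
        return seen


def artifacts_affected_by(graph: EvidenceGraph, cve_id: str) -> list:
    """List artifact IDs that reach the given CVE through SBOM and component edges."""
    upstream = graph.ancestors(("vuln", cve_id))
    return sorted(ident for kind, ident in upstream if kind == "artifact")
```

The same `ancestors` walk starting from an artifact node would collect its build and source attestations for the provenance view.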

X2. Observability & auditing

Tasks

- Audit logs
  - Log:
    - BOM uploads and generators.
    - SLSA attestation creation/verification.
    - CVSS recalculations (who/what triggered them).
- Metrics
  - Track:
    - % of builds with valid SLSA attestations.
    - % artifacts with CycloneDX 1.7 BOMs.
    - % vulns with v4 scores.
  - Expose dashboards (Prometheus/Grafana or similar).

Acceptance criteria

- Dashboards exist showing coverage for:
  - CVSSv4 adoption.
  - CycloneDX 1.7 coverage.
  - SLSA coverage.

X3. Documentation & developer experience

Tasks

- Developer playbooks
  - Short, repo‑friendly docs:
    - “How to enable CycloneDX BOM generation in this repo.”
    - “How to ensure your service reaches SLSA Build L2.”
    - “How to interpret CVSS v4 in StellaOps.”
- Templates
  - CI templates:
    - `bom-enabled-pipeline.yaml`
    - `slsa-enabled-pipeline.yaml`
  - Code snippets:
    - API examples for pushing SBOMs.
    - API examples for querying risk posture.

Acceptance criteria

- A new project can:
  - Copy a CI template.
  - Produce a validated CycloneDX 1.7 BOM.
  - Generate SLSA attestations.
  - Show up correctly in StellaOps with CVSS v4 scoring.

If you’d like, as a next step I can:

- Turn this into a Jira-ready epic + stories breakdown, or

- Draft concrete API schemas (OpenAPI/JSON) for SBOM ingestion, CVSS scoring, and SLSA attestation verification.