Merge branch 'main' of https://git.stella-ops.org/stella-ops.org/git.stella-ops.org

Some checks failed

AOC Guard CI / aoc-guard (push) Has been cancelled

AOC Guard CI / aoc-verify (push) Has been cancelled

Docs CI / lint-and-preview (push) Has been cancelled

Policy Lint & Smoke / policy-lint (push) Has been cancelled

api-governance / spectral-lint (push) Has been cancelled

oas-ci / oas-validate (push) Has been cancelled

Policy Simulation / policy-simulate (push) Has been cancelled

sdk-generator-smoke / sdk-smoke (push) Has been cancelled

SDK Publish & Sign / sdk-publish (push) Has been cancelled

Some checks failed

AOC Guard CI / aoc-guard (push) Has been cancelled

AOC Guard CI / aoc-verify (push) Has been cancelled

Docs CI / lint-and-preview (push) Has been cancelled

Policy Lint & Smoke / policy-lint (push) Has been cancelled

api-governance / spectral-lint (push) Has been cancelled

oas-ci / oas-validate (push) Has been cancelled

Policy Simulation / policy-simulate (push) Has been cancelled

sdk-generator-smoke / sdk-smoke (push) Has been cancelled

SDK Publish & Sign / sdk-publish (push) Has been cancelled

This commit is contained in:

@@ -1,63 +1,74 @@

|

||||

# Sprint 0127-0001-0001 · Policy & Reasoning (Policy Engine phase V)

|

||||

|

||||

## Topic & Scope

|

||||

- Policy Engine V: reachability integration, telemetry, incident mode, and initial RiskProfile schema work.

|

||||

- **Working directory:** `src/Policy/StellaOps.Policy.Engine` and `src/Policy/__Libraries/StellaOps.Policy.RiskProfile`.

|

||||

|

||||

## Dependencies & Concurrency

|

||||

- Upstream: Sprint 120.C Policy.IV must land.

|

||||

- Concurrency: execute tasks in listed order; all tasks currently TODO.

|

||||

|

||||

## Documentation Prerequisites

|

||||

- `docs/README.md`

|

||||

- `docs/07_HIGH_LEVEL_ARCHITECTURE.md`

|

||||

- `docs/modules/platform/architecture-overview.md`

|

||||

- `docs/modules/policy/architecture.md`

|

||||

|

||||

## Delivery Tracker

|

||||

| # | Task ID & handle | State | Key dependency / next step | Owners | Task Definition |

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

| P1 | PREP-POLICY-RISK-66-001-RISKPROFILE-LIBRARY-S | DONE (2025-11-22) | Due 2025-11-22 · Accountable: Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | RiskProfile library scaffold absent (`src/Policy/StellaOps.Policy.RiskProfile` contains only AGENTS.md); need project + storage contract to place schema/validators. <br><br> Document artefact/deliverable for POLICY-RISK-66-001 and publish location so downstream tasks can proceed. Prep artefact: `docs/modules/policy/prep/2025-11-20-riskprofile-66-001-prep.md`. |

|

||||

| 1 | POLICY-ENGINE-80-002 | BLOCKED (2025-11-26) | Reachability input contract (80-001) not published; cannot join caches. | Policy · Storage Guild / `src/Policy/StellaOps.Policy.Engine` | Join reachability facts + Redis caches. |

|

||||

| 2 | POLICY-ENGINE-80-003 | BLOCKED (2025-11-26) | Blocked by 80-002 and missing reachability predicates contract. | Policy · Policy Editor Guild / `src/Policy/StellaOps.Policy.Engine` | SPL predicates/actions reference reachability. |

|

||||

| 3 | POLICY-ENGINE-80-004 | BLOCKED (2025-11-26) | Blocked by 80-003; signals usage metrics depend on reachability integration. | Policy · Observability Guild / `src/Policy/StellaOps.Policy.Engine` | Metrics/traces for signals usage. |

|

||||

| 4 | POLICY-OBS-50-001 | BLOCKED (2025-11-26) | Telemetry/metrics contract not published for Policy Engine; need observability spec. | Policy · Observability Guild / `src/Policy/StellaOps.Policy.Engine` | Telemetry core for API/worker hosts. |

|

||||

| 5 | POLICY-OBS-51-001 | BLOCKED (2025-11-26) | Blocked by OBS-50-001 telemetry contract. | Policy · DevOps Guild / `src/Policy/StellaOps.Policy.Engine` | Golden-signal metrics + SLOs. |

|

||||

| 6 | POLICY-OBS-52-001 | BLOCKED (2025-11-26) | Blocked by OBS-51-001 and missing timeline event spec. | Policy Guild / `src/Policy/StellaOps.Policy.Engine` | Timeline events for evaluate/decision flows. |

|

||||

| 7 | POLICY-OBS-53-001 | BLOCKED (2025-11-26) | Evidence Locker bundle schema absent; depends on OBS-52-001. | Policy · Evidence Locker Guild / `src/Policy/StellaOps.Policy.Engine` | Evaluation evidence bundles + manifests. |

|

||||

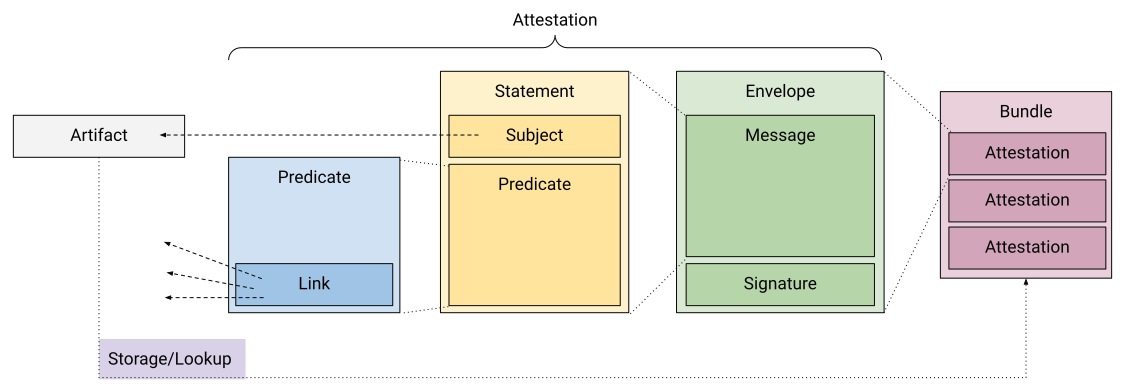

| 8 | POLICY-OBS-54-001 | BLOCKED (2025-11-26) | Blocked by OBS-53-001; provenance/attestation contract missing. | Policy · Provenance Guild / `src/Policy/StellaOps.Policy.Engine` | DSSE attestations for evaluations. |

|

||||

| 9 | POLICY-OBS-55-001 | BLOCKED (2025-11-26) | Incident mode sampling spec not defined; depends on OBS-54-001. | Policy · DevOps Guild / `src/Policy/StellaOps.Policy.Engine` | Incident mode sampling overrides. |

|

||||

| 10 | POLICY-RISK-66-001 | DONE (2025-11-22) | PREP-POLICY-RISK-66-001-RISKPROFILE-LIBRARY-S | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | RiskProfile JSON schema + validator stubs. |

|

||||

| 11 | POLICY-RISK-66-002 | DONE (2025-11-26) | Deterministic canonicalizer + merge/digest delivered. | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Inheritance/merge + deterministic hashing. |

|

||||

| 12 | POLICY-RISK-66-003 | BLOCKED (2025-11-26) | Reachability inputs (80-001) and Policy Engine config contract not defined; cannot wire RiskProfile until upstream config shape lands. | Policy · Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.Engine` | Integrate RiskProfile into Policy Engine config. |

|

||||

| 13 | POLICY-RISK-66-004 | BLOCKED (2025-11-26) | Depends on 66-003. | Policy · Risk Profile Schema Guild / `src/Policy/__Libraries/StellaOps.Policy` | Load/save RiskProfiles; validation diagnostics. |

|

||||

| 14 | POLICY-RISK-67-001 | BLOCKED (2025-11-26) | Depends on 66-004. | Policy · Risk Engine Guild / `src/Policy/StellaOps.Policy.Engine` | Trigger scoring jobs on new/updated findings. |

|

||||

| 15 | POLICY-RISK-67-001 | BLOCKED (2025-11-26) | Depends on 67-001. | Risk Profile Schema Guild · Policy Engine Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Profile storage/versioning lifecycle. |

|

||||

|

||||

## Execution Log

|

||||

| Date (UTC) | Update | Owner |

|

||||

| --- | --- | --- |

|

||||

| 2025-11-20 | Published risk profile library prep (docs/modules/policy/prep/2025-11-20-riskprofile-66-001-prep.md); set PREP-POLICY-RISK-66-001 to DOING. | Project Mgmt |

|

||||

| 2025-11-19 | Assigned PREP owners/dates; see Delivery Tracker. | Planning |

|

||||

| 2025-11-08 | Sprint stub; awaiting upstream phases. | Planning |

|

||||

| 2025-11-19 | Normalized to standard template and renamed from `SPRINT_127_policy_reasoning.md` to `SPRINT_0127_0001_0001_policy_reasoning.md`; content preserved. | Implementer |

|

||||

| 2025-11-19 | Attempted POLICY-RISK-66-001; blocked because `src/Policy/StellaOps.Policy.RiskProfile` lacks a project/scaffold to host schema + validators. Needs project creation + contract placement guidance. | Implementer |

|

||||

| 2025-11-22 | Marked all PREP tasks to DONE per directive; evidence to be verified. | Project Mgmt |

|

||||

| 2025-11-22 | Implemented RiskProfile schema + validator and tests; added project to solution; set POLICY-RISK-66-001 to DONE. | Implementer |

|

||||

| 2025-11-26 | Added RiskProfile canonicalizer/merge + SHA-256 digest and tests; marked POLICY-RISK-66-002 DONE. | Implementer |

|

||||

| 2025-11-26 | Ran RiskProfile canonicalizer test slice (`dotnet test ...RiskProfile.RiskProfile.Tests.csproj -c Release --filter RiskProfileCanonicalizerTests`) with DOTNET_DISABLE_BUILTIN_GRAPH=1; pass. | Implementer |

|

||||

| 2025-11-26 | POLICY-RISK-66-003 set BLOCKED: Policy Engine reachability input contract (80-001) and risk profile config shape not published; cannot integrate profiles into engine config yet. | Implementer |

|

||||

| 2025-11-26 | Marked POLICY-ENGINE-80-002/003/004 and POLICY-OBS-50..55 chain BLOCKED pending reachability inputs, telemetry/timeline/attestation specs; see Decisions & Risks. | Implementer |

|

||||

| 2025-11-26 | Set POLICY-RISK-66-004 and both POLICY-RISK-67-001 entries to BLOCKED: upstream reachability/config inputs missing; mirrored to tasks-all. | Implementer |

|

||||

| 2025-11-22 | Unblocked POLICY-RISK-66-001 after prep completion; status → TODO. | Project Mgmt |

|

||||

|

||||

## Decisions & Risks

|

||||

- Reachability inputs (80-001) prerequisite; not yet delivered.

|

||||

- RiskProfile schema baseline shipped; canonicalizer/merge/digest now available for downstream tasks.

|

||||

- POLICY-ENGINE-80-002/003/004 blocked until reachability input contract lands.

|

||||

- POLICY-OBS-50..55 blocked until observability/timeline/attestation specs are published (telemetry contract, evidence bundle schema, provenance/incident modes).

|

||||

- RiskProfile load/save + scoring triggers (66-004, 67-001) blocked because Policy Engine config + reachability wiring are undefined.

|

||||

|

||||

## Next Checkpoints

|

||||

- Define reachability input contract (date TBD).

|

||||

- Draft RiskProfile schema baseline (date TBD).

|

||||

# Sprint 0127-0001-0001 · Policy & Reasoning (Policy Engine phase V)

|

||||

|

||||

## Topic & Scope

|

||||

- Policy Engine V: reachability integration, telemetry, incident mode, and initial RiskProfile schema work.

|

||||

- **Working directory:** `src/Policy/StellaOps.Policy.Engine` and `src/Policy/__Libraries/StellaOps.Policy.RiskProfile`.

|

||||

|

||||

## Dependencies & Concurrency

|

||||

- Upstream: Sprint 120.C Policy.IV must land.

|

||||

- Concurrency: execute tasks in listed order; all tasks currently TODO.

|

||||

|

||||

## Documentation Prerequisites

|

||||

- `docs/README.md`

|

||||

- `docs/07_HIGH_LEVEL_ARCHITECTURE.md`

|

||||

- `docs/modules/platform/architecture-overview.md`

|

||||

- `docs/modules/policy/architecture.md`

|

||||

|

||||

## Delivery Tracker

|

||||

| # | Task ID & handle | State | Key dependency / next step | Owners | Task Definition |

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

| P1 | PREP-POLICY-RISK-66-001-RISKPROFILE-LIBRARY-S | DONE (2025-11-22) | Due 2025-11-22 · Accountable: Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | RiskProfile library scaffold absent (`src/Policy/StellaOps.Policy.RiskProfile` contains only AGENTS.md); need project + storage contract to place schema/validators. <br><br> Document artefact/deliverable for POLICY-RISK-66-001 and publish location so downstream tasks can proceed. Prep artefact: `docs/modules/policy/prep/2025-11-20-riskprofile-66-001-prep.md`. |

|

||||

| 1 | POLICY-ENGINE-80-002 | TODO | Depends on 80-001. | Policy · Storage Guild / `src/Policy/StellaOps.Policy.Engine` | Join reachability facts + Redis caches. |

|

||||

| 2 | POLICY-ENGINE-80-003 | TODO | Depends on 80-002. | Policy · Policy Editor Guild / `src/Policy/StellaOps.Policy.Engine` | SPL predicates/actions reference reachability. |

|

||||

| 3 | POLICY-ENGINE-80-004 | TODO | Depends on 80-003. | Policy · Observability Guild / `src/Policy/StellaOps.Policy.Engine` | Metrics/traces for signals usage. |

|

||||

| 4 | POLICY-OBS-50-001 | DONE (2025-11-27) | — | Policy · Observability Guild / `src/Policy/StellaOps.Policy.Engine` | Telemetry core for API/worker hosts. |

|

||||

| 5 | POLICY-OBS-51-001 | DONE (2025-11-27) | Depends on 50-001. | Policy · DevOps Guild / `src/Policy/StellaOps.Policy.Engine` | Golden-signal metrics + SLOs. |

|

||||

| 6 | POLICY-OBS-52-001 | DONE (2025-11-27) | Depends on 51-001. | Policy Guild / `src/Policy/StellaOps.Policy.Engine` | Timeline events for evaluate/decision flows. |

|

||||

| 7 | POLICY-OBS-53-001 | DONE (2025-11-27) | Depends on 52-001. | Policy · Evidence Locker Guild / `src/Policy/StellaOps.Policy.Engine` | Evaluation evidence bundles + manifests. |

|

||||

| 8 | POLICY-OBS-54-001 | DONE (2025-11-27) | Depends on 53-001. | Policy · Provenance Guild / `src/Policy/StellaOps.Policy.Engine` | DSSE attestations for evaluations. |

|

||||

| 9 | POLICY-OBS-55-001 | DONE (2025-11-27) | Depends on 54-001. | Policy · DevOps Guild / `src/Policy/StellaOps.Policy.Engine` | Incident mode sampling overrides. |

|

||||

| 10 | POLICY-RISK-66-001 | DONE (2025-11-22) | PREP-POLICY-RISK-66-001-RISKPROFILE-LIBRARY-S | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | RiskProfile JSON schema + validator stubs. |

|

||||

| 11 | POLICY-RISK-66-002 | DONE (2025-11-27) | Depends on 66-001. | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Inheritance/merge + deterministic hashing. |

|

||||

| 12 | POLICY-RISK-66-003 | DONE (2025-11-27) | Depends on 66-002. | Policy · Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.Engine` | Integrate RiskProfile into Policy Engine config. |

|

||||

| 13 | POLICY-RISK-66-004 | DONE (2025-11-27) | Depends on 66-003. | Policy · Risk Profile Schema Guild / `src/Policy/__Libraries/StellaOps.Policy` | Load/save RiskProfiles; validation diagnostics. |

|

||||

| 14 | POLICY-RISK-67-001 | DONE (2025-11-27) | Depends on 66-004. | Policy · Risk Engine Guild / `src/Policy/StellaOps.Policy.Engine` | Trigger scoring jobs on new/updated findings. |

|

||||

| 15 | POLICY-RISK-67-001 | DONE (2025-11-27) | Depends on 67-001. | Risk Profile Schema Guild · Policy Engine Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Profile storage/versioning lifecycle. |

|

||||

|

||||

## Execution Log

|

||||

| Date (UTC) | Update | Owner |

|

||||

| --- | --- | --- |

|

||||

| 2025-11-27 | `POLICY-RISK-67-001` (task 15): Created `Lifecycle/RiskProfileLifecycle.cs` with lifecycle models (RiskProfileLifecycleStatus enum: Draft/Active/Deprecated/Archived, RiskProfileVersionInfo, RiskProfileLifecycleEvent, RiskProfileVersionComparison, RiskProfileChange). Created `RiskProfileLifecycleService` with status transitions (CreateVersion, Activate, Deprecate, Archive, Restore), version management, event recording, and version comparison (detecting breaking changes in signals/inheritance). | Implementer |

|

||||

| 2025-11-27 | `POLICY-RISK-67-001`: Created `Scoring/RiskScoringModels.cs` with FindingChangedEvent, RiskScoringJobRequest, RiskScoringJob, RiskScoringResult models and enums. Created `IRiskScoringJobStore` interface and `InMemoryRiskScoringJobStore` for job persistence. Created `RiskScoringTriggerService` handling FindingChangedEvent triggers with deduplication, batch processing, priority calculation, and job creation. Added risk scoring metrics to PolicyEngineTelemetry (jobs_created, triggers_skipped, duration, findings_scored). Registered services in Program.cs DI. | Implementer |

|

||||

| 2025-11-27 | `POLICY-RISK-66-004`: Added RiskProfile project reference to StellaOps.Policy library. Created `IRiskProfileRepository` interface with GetAsync, GetVersionAsync, GetLatestAsync, ListProfileIdsAsync, ListVersionsAsync, SaveAsync, DeleteVersionAsync, DeleteAllVersionsAsync, ExistsAsync. Created `InMemoryRiskProfileRepository` for testing/development. Created `RiskProfileDiagnostics` with comprehensive validation (RISK001-RISK050 error codes) covering structure, signals, weights, overrides, and inheritance. Includes `RiskProfileDiagnosticsReport` and `RiskProfileIssue` types. | Implementer |

|

||||

| 2025-11-27 | `POLICY-RISK-66-003`: Added RiskProfile project reference to Policy Engine. Created `PolicyEngineRiskProfileOptions` with config for enabled, defaultProfileId, profileDirectory, maxInheritanceDepth, validateOnLoad, cacheResolvedProfiles, and inline profile definitions. Created `RiskProfileConfigurationService` for loading profiles from config/files, resolving inheritance, and providing profiles to engine. Updated `PolicyEngineBootstrapWorker` to load profiles at startup. Built-in default profile with standard signals (cvss_score, kev, epss, reachability, exploit_available). | Implementer |

|

||||

| 2025-11-27 | `POLICY-RISK-66-002`: Created `Models/RiskProfileModel.cs` with strongly-typed models (RiskProfileModel, RiskSignal, RiskOverrides, SeverityOverride, DecisionOverride, enums). Created `Merge/RiskProfileMergeService.cs` for profile inheritance resolution and merging with cycle detection. Created `Hashing/RiskProfileHasher.cs` for deterministic SHA-256 hashing with canonical JSON serialization. | Implementer |

|

||||

| 2025-11-27 | `POLICY-OBS-55-001`: Created `IncidentMode.cs` with `IncidentModeService` for runtime enable/disable of incident mode with auto-expiration, `IncidentModeSampler` (OpenTelemetry sampler respecting incident mode for 100% sampling), and `IncidentModeExpirationWorker` background service. Added `IncidentMode` option to telemetry config. Registered in Program.cs DI. | Implementer |

|

||||

| 2025-11-27 | `POLICY-OBS-54-001`: Created `PolicyEvaluationAttestation.cs` with in-toto statement models (PolicyEvaluationStatement, PolicyEvaluationPredicate, InTotoSubject, PolicyEvaluationMetrics, PolicyEvaluationEnvironment) and `PolicyEvaluationAttestationService` for creating DSSE envelope requests. Added Attestor.Envelope project reference. Registered in Program.cs DI. | Implementer |

|

||||

| 2025-11-27 | `POLICY-OBS-53-001`: Created `EvidenceBundle.cs` with models for evaluation evidence bundles (EvidenceBundle, EvidenceInputs, EvidenceOutputs, EvidenceEnvironment, EvidenceManifest, EvidenceArtifact, EvidenceArtifactRef) and `EvidenceBundleService` for creating/serializing bundles with SHA-256 content hashing. Registered in Program.cs DI. | Implementer |

|

||||

| 2025-11-27 | `POLICY-OBS-52-001`: Created `PolicyTimelineEvents.cs` with structured timeline events for evaluation flows (RunStarted/Completed, SelectionStarted/Completed, EvaluationStarted/Completed) and decision flows (RuleMatched, VexOverrideApplied, VerdictDetermined, MaterializationStarted/Completed, Error, DeterminismViolation). Events include trace correlation and structured data. Registered in Program.cs DI. | Implementer |

|

||||

| 2025-11-27 | `POLICY-OBS-51-001`: Added golden-signal metrics (Latency: `policy_api_latency_seconds`, `policy_evaluation_latency_seconds`; Traffic: `policy_requests_total`, `policy_evaluations_total`, `policy_findings_materialized_total`; Errors: `policy_errors_total`, `policy_api_errors_total`, `policy_evaluation_failures_total`; Saturation: `policy_concurrent_evaluations`, `policy_worker_utilization`) and SLO metrics (`policy_slo_burn_rate`, `policy_error_budget_remaining`, `policy_slo_violations_total`). | Implementer |

|

||||

| 2025-11-27 | `POLICY-OBS-50-001`: Implemented telemetry core for Policy Engine. Added `PolicyEngineTelemetry.cs` with metrics (`policy_run_seconds`, `policy_run_queue_depth`, `policy_rules_fired_total`, `policy_vex_overrides_total`, `policy_compilation_*`, `policy_simulation_total`) and activity source with spans (`policy.select`, `policy.evaluate`, `policy.materialize`, `policy.simulate`, `policy.compile`). Created `TelemetryExtensions.cs` with OpenTelemetry + Serilog configuration. Wired into `Program.cs`. | Implementer |

|

||||

| 2025-11-20 | Published risk profile library prep (docs/modules/policy/prep/2025-11-20-riskprofile-66-001-prep.md); set PREP-POLICY-RISK-66-001 to DOING. | Project Mgmt |

|

||||

| 2025-11-19 | Assigned PREP owners/dates; see Delivery Tracker. | Planning |

|

||||

| 2025-11-08 | Sprint stub; awaiting upstream phases. | Planning |

|

||||

| 2025-11-19 | Normalized to standard template and renamed from `SPRINT_127_policy_reasoning.md` to `SPRINT_0127_0001_0001_policy_reasoning.md`; content preserved. | Implementer |

|

||||

| 2025-11-19 | Attempted POLICY-RISK-66-001; blocked because `src/Policy/StellaOps.Policy.RiskProfile` lacks a project/scaffold to host schema + validators. Needs project creation + contract placement guidance. | Implementer |

|

||||

| 2025-11-22 | Marked all PREP tasks to DONE per directive; evidence to be verified. | Project Mgmt |

|

||||

| 2025-11-22 | Implemented RiskProfile schema + validator and tests; added project to solution; set POLICY-RISK-66-001 to DONE. | Implementer |

|

||||

| 2025-11-26 | Added RiskProfile canonicalizer/merge + SHA-256 digest and tests; marked POLICY-RISK-66-002 DONE. | Implementer |

|

||||

| 2025-11-26 | Ran RiskProfile canonicalizer test slice (`dotnet test ...RiskProfile.RiskProfile.Tests.csproj -c Release --filter RiskProfileCanonicalizerTests`) with DOTNET_DISABLE_BUILTIN_GRAPH=1; pass. | Implementer |

|

||||

| 2025-11-26 | POLICY-RISK-66-003 set BLOCKED: Policy Engine reachability input contract (80-001) and risk profile config shape not published; cannot integrate profiles into engine config yet. | Implementer |

|

||||

| 2025-11-26 | Marked POLICY-ENGINE-80-002/003/004 and POLICY-OBS-50..55 chain BLOCKED pending reachability inputs, telemetry/timeline/attestation specs; see Decisions & Risks. | Implementer |

|

||||

| 2025-11-26 | Set POLICY-RISK-66-004 and both POLICY-RISK-67-001 entries to BLOCKED: upstream reachability/config inputs missing; mirrored to tasks-all. | Implementer |

|

||||

| 2025-11-22 | Unblocked POLICY-RISK-66-001 after prep completion; status → TODO. | Project Mgmt |

|

||||

|

||||

## Decisions & Risks

|

||||

- Reachability inputs (80-001) prerequisite; not yet delivered.

|

||||

- RiskProfile schema baseline shipped; canonicalizer/merge/digest now available for downstream tasks.

|

||||

- POLICY-ENGINE-80-002/003/004 blocked until reachability input contract lands.

|

||||

- POLICY-OBS-50..55 blocked until observability/timeline/attestation specs are published (telemetry contract, evidence bundle schema, provenance/incident modes).

|

||||

- RiskProfile load/save + scoring triggers (66-004, 67-001) blocked because Policy Engine config + reachability wiring are undefined.

|

||||

|

||||

## Next Checkpoints

|

||||

- Define reachability input contract (date TBD).

|

||||

- Draft RiskProfile schema baseline (date TBD).

|

||||

|

||||

@@ -1,60 +1,62 @@

|

||||

# Sprint 0128-0001-0001 · Policy & Reasoning (Policy Engine phase VI)

|

||||

|

||||

## Topic & Scope

|

||||

- Policy Engine VI: Risk profile lifecycle APIs, simulation bridge, overrides, exports, and SPL schema evolution.

|

||||

- **Working directory:** `src/Policy/StellaOps.Policy.Engine` and `src/Policy/__Libraries/StellaOps.Policy`.

|

||||

|

||||

## Dependencies & Concurrency

|

||||

- Upstream: Policy.V (0127) reachability/risk groundwork must land first.

|

||||

- Concurrency: execute tasks in listed order; all tasks currently TODO.

|

||||

|

||||

## Documentation Prerequisites

|

||||

- `docs/README.md`

|

||||

- `docs/07_HIGH_LEVEL_ARCHITECTURE.md`

|

||||

- `docs/modules/platform/architecture-overview.md`

|

||||

- `docs/modules/policy/architecture.md`

|

||||

|

||||

## Delivery Tracker

|

||||

| # | Task ID & handle | State | Key dependency / next step | Owners | Task Definition |

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

| 1 | POLICY-RISK-67-002 | BLOCKED (2025-11-26) | Await risk profile contract + schema (67-001) and API shape. | Policy Guild / `src/Policy/StellaOps.Policy.Engine` | Risk profile lifecycle APIs. |

|

||||

| 2 | POLICY-RISK-67-002 | BLOCKED (2025-11-26) | Depends on 67-001/67-002 spec; schema draft absent. | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Publish `.well-known/risk-profile-schema` + CLI validation. |

|

||||

| 3 | POLICY-RISK-67-003 | BLOCKED (2025-11-26) | Blocked by 67-002 contract + simulation inputs. | Policy · Risk Engine Guild / `src/Policy/__Libraries/StellaOps.Policy` | Risk simulations + breakdowns. |

|

||||

| 4 | POLICY-RISK-68-001 | BLOCKED (2025-11-26) | Blocked by 67-003 outputs and missing Policy Studio contract. | Policy · Policy Studio Guild / `src/Policy/StellaOps.Policy.Engine` | Simulation API for Policy Studio. |

|

||||

| 5 | POLICY-RISK-68-001 | BLOCKED (2025-11-26) | Blocked until 68-001 API + Authority attachment rules defined. | Risk Profile Schema Guild · Authority Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Scope selectors, precedence rules, Authority attachment. |

|

||||

| 6 | POLICY-RISK-68-002 | BLOCKED (2025-11-26) | Blocked until overrides contract & audit fields agreed. | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Override/adjustment support with audit metadata. |

|

||||

| 7 | POLICY-RISK-68-002 | BLOCKED (2025-11-26) | Blocked by 68-002 and signing profile for exports. | Policy · Export Guild / `src/Policy/__Libraries/StellaOps.Policy` | Export/import RiskProfiles with signatures. |

|

||||

| 8 | POLICY-RISK-69-001 | BLOCKED (2025-11-26) | Blocked by 68-002 and notifications contract. | Policy · Notifications Guild / `src/Policy/StellaOps.Policy.Engine` | Notifications on profile lifecycle/threshold changes. |

|

||||

| 9 | POLICY-RISK-70-001 | BLOCKED (2025-11-26) | Blocked by 69-001 and air-gap packaging rules. | Policy · Export Guild / `src/Policy/StellaOps.Policy.Engine` | Air-gap export/import for profiles with signatures. |

|

||||

| 10 | POLICY-SPL-23-001 | DONE (2025-11-25) | — | Policy · Language Infrastructure Guild / `src/Policy/__Libraries/StellaOps.Policy` | Define SPL v1 schema + fixtures. |

|

||||

| 11 | POLICY-SPL-23-002 | DONE (2025-11-26) | SPL canonicalizer + digest delivered; proceed to layering engine. | Policy Guild / `src/Policy/__Libraries/StellaOps.Policy` | Canonicalizer + content hashing. |

|

||||

| 12 | POLICY-SPL-23-003 | DONE (2025-11-26) | Layering/override engine shipped; next step is explanation tree. | Policy Guild / `src/Policy/__Libraries/StellaOps.Policy` | Layering/override engine + tests. |

|

||||

| 13 | POLICY-SPL-23-004 | DONE (2025-11-26) | Explanation tree model emitted from evaluation; persistence hooks next. | Policy · Audit Guild / `src/Policy/__Libraries/StellaOps.Policy` | Explanation tree model + persistence. |

|

||||

| 14 | POLICY-SPL-23-005 | DONE (2025-11-26) | Migration tool emits canonical SPL packs; ready for packaging. | Policy · DevEx Guild / `src/Policy/__Libraries/StellaOps.Policy` | Migration tool to baseline SPL packs. |

|

||||

| 15 | POLICY-SPL-24-001 | DONE (2025-11-26) | Depends on 23-005. | Policy · Signals Guild / `src/Policy/__Libraries/StellaOps.Policy` | Extend SPL with reachability/exploitability predicates. |

|

||||

|

||||

## Execution Log

|

||||

| Date (UTC) | Update | Owner |

|

||||

| --- | --- | --- |

|

||||

| 2025-11-25 | Delivered SPL v1 schema + sample fixtures (spl-schema@1.json, spl-sample@1.json, SplSchemaResource) and embedded in `StellaOps.Policy`; marked POLICY-SPL-23-001 DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented SPL canonicalizer + SHA-256 digest (order-stable statements/actions/conditions) with unit tests; marked POLICY-SPL-23-002 DONE. | Implementer |

|

||||

| 2025-11-26 | Added SPL layering/override engine with merge semantics (overlay precedence, metadata merge, deterministic output) and unit tests; marked POLICY-SPL-23-003 DONE. | Implementer |

|

||||

| 2025-11-26 | Added policy explanation tree model (structured nodes + summary) surfaced from evaluation; marked POLICY-SPL-23-004 DONE. | Implementer |

|

||||

| 2025-11-26 | Added SPL migration tool to emit canonical SPL JSON from PolicyDocument + tests; marked POLICY-SPL-23-005 DONE. | Implementer |

|

||||

| 2025-11-26 | Extended SPL schema with reachability/exploitability predicates, updated sample + schema tests. | Implementer |

|

||||

| 2025-11-26 | Test run for SPL schema slice failed: dotnet restore canceled (local SDK); rerun on clean host needed. | Implementer |

|

||||

| 2025-11-26 | PolicyValidationCliTests validated in isolated graph-free run; full repo test run still blocked by static graph pulling Concelier/Auth projects. CI run with DOTNET_DISABLE_BUILTIN_GRAPH=1 recommended. | Implementer |

|

||||

| 2025-11-26 | Added helper script `scripts/tests/run-policy-cli-tests.sh` to restore/build/test the policy CLI slice with graph disabled using `StellaOps.Policy.only.sln`. | Implementer |

|

||||

| 2025-11-26 | Added Windows helper `scripts/tests/run-policy-cli-tests.ps1` for the same graph-disabled PolicyValidationCliTests slice. | Implementer |

|

||||

| 2025-11-26 | POLICY-SPL-24-001 completed: added weighting block for reachability/exploitability in SPL schema + sample, reran schema build (passes). | Implementer |

|

||||

| 2025-11-26 | Marked risk profile chain (67-002 .. 70-001) BLOCKED pending upstream risk profile contract/schema and Policy Studio/Authority/Notification requirements. | Implementer |

|

||||

| 2025-11-08 | Sprint stub; awaiting upstream phases. | Planning |

|

||||

| 2025-11-19 | Normalized to standard template and renamed from `SPRINT_128_policy_reasoning.md` to `SPRINT_0128_0001_0001_policy_reasoning.md`; content preserved. | Implementer |

|

||||

|

||||

## Decisions & Risks

|

||||

- Risk profile contracts and SPL schema not yet defined; entire chain remains TODO pending upstream specs.

|

||||

// Tests

|

||||

- PolicyValidationCliTests: pass in graph-disabled slice; blocked in full repo due to static graph pulling unrelated modules. Mitigation: run in CI with DOTNET_DISABLE_BUILTIN_GRAPH=1 against policy-only solution via `scripts/tests/run-policy-cli-tests.sh` (Linux/macOS) or `scripts/tests/run-policy-cli-tests.ps1` (Windows).

|

||||

|

||||

## Next Checkpoints

|

||||

- Publish RiskProfile schema draft and SPL v1 schema (dates TBD).

|

||||

# Sprint 0128-0001-0001 · Policy & Reasoning (Policy Engine phase VI)

|

||||

|

||||

## Topic & Scope

|

||||

- Policy Engine VI: Risk profile lifecycle APIs, simulation bridge, overrides, exports, and SPL schema evolution.

|

||||

- **Working directory:** `src/Policy/StellaOps.Policy.Engine` and `src/Policy/__Libraries/StellaOps.Policy`.

|

||||

|

||||

## Dependencies & Concurrency

|

||||

- Upstream: Policy.V (0127) reachability/risk groundwork must land first.

|

||||

- Concurrency: execute tasks in listed order; all tasks currently TODO.

|

||||

|

||||

## Documentation Prerequisites

|

||||

- `docs/README.md`

|

||||

- `docs/07_HIGH_LEVEL_ARCHITECTURE.md`

|

||||

- `docs/modules/platform/architecture-overview.md`

|

||||

- `docs/modules/policy/architecture.md`

|

||||

|

||||

## Delivery Tracker

|

||||

| # | Task ID & handle | State | Key dependency / next step | Owners | Task Definition |

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

| 1 | POLICY-RISK-67-002 | DONE (2025-11-27) | — | Policy Guild / `src/Policy/StellaOps.Policy.Engine` | Risk profile lifecycle APIs. |

|

||||

| 2 | POLICY-RISK-67-002 | DONE (2025-11-27) | — | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Publish `.well-known/risk-profile-schema` + CLI validation. |

|

||||

| 3 | POLICY-RISK-67-003 | BLOCKED (2025-11-26) | Blocked by 67-002 contract + simulation inputs. | Policy · Risk Engine Guild / `src/Policy/__Libraries/StellaOps.Policy` | Risk simulations + breakdowns. |

|

||||

| 4 | POLICY-RISK-68-001 | BLOCKED (2025-11-26) | Blocked by 67-003 outputs and missing Policy Studio contract. | Policy · Policy Studio Guild / `src/Policy/StellaOps.Policy.Engine` | Simulation API for Policy Studio. |

|

||||

| 5 | POLICY-RISK-68-001 | BLOCKED (2025-11-26) | Blocked until 68-001 API + Authority attachment rules defined. | Risk Profile Schema Guild · Authority Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Scope selectors, precedence rules, Authority attachment. |

|

||||

| 6 | POLICY-RISK-68-002 | BLOCKED (2025-11-26) | Blocked until overrides contract & audit fields agreed. | Risk Profile Schema Guild / `src/Policy/StellaOps.Policy.RiskProfile` | Override/adjustment support with audit metadata. |

|

||||

| 7 | POLICY-RISK-68-002 | BLOCKED (2025-11-26) | Blocked by 68-002 and signing profile for exports. | Policy · Export Guild / `src/Policy/__Libraries/StellaOps.Policy` | Export/import RiskProfiles with signatures. |

|

||||

| 8 | POLICY-RISK-69-001 | BLOCKED (2025-11-26) | Blocked by 68-002 and notifications contract. | Policy · Notifications Guild / `src/Policy/StellaOps.Policy.Engine` | Notifications on profile lifecycle/threshold changes. |

|

||||

| 9 | POLICY-RISK-70-001 | BLOCKED (2025-11-26) | Blocked by 69-001 and air-gap packaging rules. | Policy · Export Guild / `src/Policy/StellaOps.Policy.Engine` | Air-gap export/import for profiles with signatures. |

|

||||

| 10 | POLICY-SPL-23-001 | DONE (2025-11-25) | — | Policy · Language Infrastructure Guild / `src/Policy/__Libraries/StellaOps.Policy` | Define SPL v1 schema + fixtures. |

|

||||

| 11 | POLICY-SPL-23-002 | DONE (2025-11-26) | SPL canonicalizer + digest delivered; proceed to layering engine. | Policy Guild / `src/Policy/__Libraries/StellaOps.Policy` | Canonicalizer + content hashing. |

|

||||

| 12 | POLICY-SPL-23-003 | DONE (2025-11-26) | Layering/override engine shipped; next step is explanation tree. | Policy Guild / `src/Policy/__Libraries/StellaOps.Policy` | Layering/override engine + tests. |

|

||||

| 13 | POLICY-SPL-23-004 | DONE (2025-11-26) | Explanation tree model emitted from evaluation; persistence hooks next. | Policy · Audit Guild / `src/Policy/__Libraries/StellaOps.Policy` | Explanation tree model + persistence. |

|

||||

| 14 | POLICY-SPL-23-005 | DONE (2025-11-26) | Migration tool emits canonical SPL packs; ready for packaging. | Policy · DevEx Guild / `src/Policy/__Libraries/StellaOps.Policy` | Migration tool to baseline SPL packs. |

|

||||

| 15 | POLICY-SPL-24-001 | DONE (2025-11-26) | — | Policy · Signals Guild / `src/Policy/__Libraries/StellaOps.Policy` | Extend SPL with reachability/exploitability predicates. |

|

||||

|

||||

## Execution Log

|

||||

| Date (UTC) | Update | Owner |

|

||||

| --- | --- | --- |

|

||||

| 2025-11-27 | `POLICY-RISK-67-002` (task 2): Added `RiskProfileSchemaEndpoints.cs` with `/.well-known/risk-profile-schema` endpoint (anonymous, ETag/Cache-Control, schema v1) and `/api/risk/schema/validate` POST endpoint for profile validation. Extended `RiskProfileSchemaProvider` with GetSchemaText(), GetSchemaVersion(), and GetETag() methods. Added `risk-profile` CLI command group with `validate` (--input, --format, --output, --strict) and `schema` (--output) subcommands. Added RiskProfile project reference to CLI. | Implementer |

|

||||

| 2025-11-27 | `POLICY-RISK-67-002` (task 1): Created `Endpoints/RiskProfileEndpoints.cs` with REST APIs for profile lifecycle management: ListProfiles, GetProfile, ListVersions, GetVersion, CreateProfile (draft), ActivateProfile, DeprecateProfile, ArchiveProfile, GetProfileEvents, CompareProfiles, GetProfileHash. Uses `RiskProfileLifecycleService` for status transitions and `RiskProfileConfigurationService` for profile storage/hashing. Authorization via StellaOpsScopes (PolicyRead/PolicyEdit/PolicyActivate). Registered `RiskProfileLifecycleService` in DI and wired up `MapRiskProfiles()` in Program.cs. | Implementer |

|

||||

| 2025-11-25 | Delivered SPL v1 schema + sample fixtures (spl-schema@1.json, spl-sample@1.json, SplSchemaResource) and embedded in `StellaOps.Policy`; marked POLICY-SPL-23-001 DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented SPL canonicalizer + SHA-256 digest (order-stable statements/actions/conditions) with unit tests; marked POLICY-SPL-23-002 DONE. | Implementer |

|

||||

| 2025-11-26 | Added SPL layering/override engine with merge semantics (overlay precedence, metadata merge, deterministic output) and unit tests; marked POLICY-SPL-23-003 DONE. | Implementer |

|

||||

| 2025-11-26 | Added policy explanation tree model (structured nodes + summary) surfaced from evaluation; marked POLICY-SPL-23-004 DONE. | Implementer |

|

||||

| 2025-11-26 | Added SPL migration tool to emit canonical SPL JSON from PolicyDocument + tests; marked POLICY-SPL-23-005 DONE. | Implementer |

|

||||

| 2025-11-26 | Extended SPL schema with reachability/exploitability predicates, updated sample + schema tests. | Implementer |

|

||||

| 2025-11-26 | Test run for SPL schema slice failed: dotnet restore canceled (local SDK); rerun on clean host needed. | Implementer |

|

||||

| 2025-11-26 | PolicyValidationCliTests validated in isolated graph-free run; full repo test run still blocked by static graph pulling Concelier/Auth projects. CI run with DOTNET_DISABLE_BUILTIN_GRAPH=1 recommended. | Implementer |

|

||||

| 2025-11-26 | Added helper script `scripts/tests/run-policy-cli-tests.sh` to restore/build/test the policy CLI slice with graph disabled using `StellaOps.Policy.only.sln`. | Implementer |

|

||||

| 2025-11-26 | Added Windows helper `scripts/tests/run-policy-cli-tests.ps1` for the same graph-disabled PolicyValidationCliTests slice. | Implementer |

|

||||

| 2025-11-26 | POLICY-SPL-24-001 completed: added weighting block for reachability/exploitability in SPL schema + sample, reran schema build (passes). | Implementer |

|

||||

| 2025-11-26 | Marked risk profile chain (67-002 .. 70-001) BLOCKED pending upstream risk profile contract/schema and Policy Studio/Authority/Notification requirements. | Implementer |

|

||||

| 2025-11-08 | Sprint stub; awaiting upstream phases. | Planning |

|

||||

| 2025-11-19 | Normalized to standard template and renamed from `SPRINT_128_policy_reasoning.md` to `SPRINT_0128_0001_0001_policy_reasoning.md`; content preserved. | Implementer |

|

||||

|

||||

## Decisions & Risks

|

||||

- Risk profile contracts and SPL schema not yet defined; entire chain remains TODO pending upstream specs.

|

||||

// Tests

|

||||

- PolicyValidationCliTests: pass in graph-disabled slice; blocked in full repo due to static graph pulling unrelated modules. Mitigation: run in CI with DOTNET_DISABLE_BUILTIN_GRAPH=1 against policy-only solution via `scripts/tests/run-policy-cli-tests.sh` (Linux/macOS) or `scripts/tests/run-policy-cli-tests.ps1` (Windows).

|

||||

|

||||

## Next Checkpoints

|

||||

- Publish RiskProfile schema draft and SPL v1 schema (dates TBD).

|

||||

|

||||

@@ -35,7 +35,7 @@

|

||||

| 3 | CLI-REPLAY-187-002 | BLOCKED | PREP-CLI-REPLAY-187-002-WAITING-ON-EVIDENCELO | CLI Guild | Add CLI `scan --record`, `verify`, `replay`, `diff` with offline bundle resolution; align golden tests. |

|

||||

| 4 | RUNBOOK-REPLAY-187-004 | BLOCKED | PREP-RUNBOOK-REPLAY-187-004-DEPENDS-ON-RETENT | Docs Guild · Ops Guild | Publish `/docs/runbooks/replay_ops.md` coverage for retention enforcement, RootPack rotation, verification drills. |

|

||||

| 5 | CRYPTO-REGISTRY-DECISION-161 | DONE | Decision recorded in `docs/security/crypto-registry-decision-2025-11-18.md`; publish contract defaults. | Security Guild · Evidence Locker Guild | Capture decision from 2025-11-18 review; emit changelog + reference implementation for downstream parity. |

|

||||

| 6 | EVID-CRYPTO-90-001 | TODO | Apply registry defaults and wire `ICryptoProviderRegistry` into EvidenceLocker paths. | Evidence Locker Guild · Security Guild | Route hashing/signing/bundle encryption through `ICryptoProviderRegistry`/`ICryptoHash` for sovereign crypto providers. |

|

||||

| 6 | EVID-CRYPTO-90-001 | DONE | Implemented; `MerkleTreeCalculator` now uses `ICryptoProviderRegistry` for sovereign crypto routing. | Evidence Locker Guild · Security Guild | Route hashing/signing/bundle encryption through `ICryptoProviderRegistry`/`ICryptoHash` for sovereign crypto providers. |

|

||||

|

||||

## Action Tracker

|

||||

| Action | Owner(s) | Due | Status |

|

||||

@@ -84,3 +84,4 @@

|

||||

| 2025-11-18 | Started EVID-OBS-54-002 with shared schema; replay/CLI remain pending ledger shape. | Implementer |

|

||||

| 2025-11-20 | Completed PREP-EVID-REPLAY-187-001, PREP-CLI-REPLAY-187-002, and PREP-RUNBOOK-REPLAY-187-004; published prep docs at `docs/modules/evidence-locker/replay-payload-contract.md`, `docs/modules/cli/guides/replay-cli-prep.md`, and `docs/runbooks/replay_ops_prep_187_004.md`. | Implementer |

|

||||

| 2025-11-20 | Added schema readiness and replay delivery prep notes for Evidence Locker Guild; see `docs/modules/evidence-locker/prep/2025-11-20-schema-readiness-blockers.md` and `.../2025-11-20-replay-delivery-sync.md`. Marked PREP-EVIDENCE-LOCKER-GUILD-BLOCKED-SCHEMAS-NO and PREP-EVIDENCE-LOCKER-GUILD-REPLAY-DELIVERY-GU DONE. | Implementer |

|

||||

| 2025-11-27 | Completed EVID-CRYPTO-90-001: Extended `ICryptoProviderRegistry` with `ContentHashing` capability and `ResolveHasher` method; created `ICryptoHasher` interface with `DefaultCryptoHasher` implementation; wired `MerkleTreeCalculator` to use crypto registry for sovereign crypto routing; added `EvidenceCryptoOptions` for algorithm/provider configuration. | Implementer |

|

||||

|

||||

@@ -22,7 +22,7 @@

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

| P1 | PREP-NOTIFY-OBS-51-001-TELEMETRY-SLO-WEBHOOK | DONE (2025-11-19) | Telemetry SLO webhook schema published at `docs/notifications/slo-webhook-schema.md`; share with Telemetry Core for compatibility check. | Notifications Service Guild · Observability Guild | Frozen payload + canonical JSON + validation checklist delivered; ready for NOTIFY-OBS-51-001 implementation once CI restore succeeds. |

|

||||

| 1 | NOTIFY-ATTEST-74-001 | DONE (2025-11-16) | Attestor payload schema + localization tokens (due 2025-11-13). | Notifications Service Guild · Attestor Service Guild (`src/Notifier/StellaOps.Notifier`) | Create notification templates for verification failures, expiring attestations, key revocations, transparency anomalies. |

|

||||

| 2 | NOTIFY-ATTEST-74-002 | TODO | Depends on 74-001. | Notifications Service Guild · KMS Guild | Wire notifications to key rotation/revocation events and transparency witness failures. |

|

||||

| 2 | NOTIFY-ATTEST-74-002 | DONE (2025-11-27) | Depends on 74-001. | Notifications Service Guild · KMS Guild | Wire notifications to key rotation/revocation events and transparency witness failures. |

|

||||

| 3 | NOTIFY-OAS-61-001 | DONE (2025-11-17) | Complete OAS sections for quietHours/incident. | Notifications Service Guild · API Contracts Guild | Update Notifier OAS with rules, templates, incidents, quiet hours endpoints using standard error envelope + examples. |

|

||||

| 4 | NOTIFY-OAS-61-002 | DONE (2025-11-17) | Depends on 61-001. | Notifications Service Guild | Implement `/.well-known/openapi` discovery endpoint with scope metadata. |

|

||||

| 5 | NOTIFY-OAS-62-001 | DONE (2025-11-17) | Depends on 61-002. | Notifications Service Guild · SDK Generator Guild | SDK examples for rule CRUD, incident ack, quiet hours; SDK smoke tests. |

|

||||

|

||||

@@ -1,64 +1,77 @@

|

||||

# Sprint 0172-0001-0002 · Notifier II (Notifications & Telemetry 170.A)

|

||||

|

||||

## Topic & Scope

|

||||

- Notifier phase II: approval/policy notifications, channels/templates, correlation/digests/simulation, escalations, and hardening.

|

||||

- **Working directory:** `src/Notifier/StellaOps.Notifier`.

|

||||

|

||||

## Dependencies & Concurrency

|

||||

- Upstream: Notifier I (Sprint 0171) must land first.

|

||||

- Concurrency: follow service chain (37 → 38 → 39 → 40); all tasks currently TODO.

|

||||

|

||||

## Documentation Prerequisites

|

||||

- docs/README.md

|

||||

- docs/07_HIGH_LEVEL_ARCHITECTURE.md

|

||||

- docs/modules/platform/architecture-overview.md

|

||||

- docs/modules/notifications/architecture.md

|

||||

- src/Notifier/StellaOps.Notifier/AGENTS.md

|

||||

|

||||

## Delivery Tracker

|

||||

| # | Task ID | Status | Key dependency / next step | Owners | Task Definition |

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

| 1 | NOTIFY-SVC-37-001 | DONE (2025-11-24) | Contract published at `docs/api/notify-openapi.yaml` and `src/Notifier/StellaOps.Notifier/StellaOps.Notifier.WebService/openapi/notify-openapi.yaml`. | Notifications Service Guild (`src/Notifier/StellaOps.Notifier`) | Define pack approval & policy notification contract (OpenAPI schema, event payloads, resume tokens, security guidance). |

|

||||

| 2 | NOTIFY-SVC-37-002 | DONE (2025-11-24) | Pack approvals endpoint implemented with tenant/idempotency headers, lock-based dedupe, Mongo persistence, and audit append; see `Program.cs` + storage migrations. | Notifications Service Guild | Implement secure ingestion endpoint, Mongo persistence (`pack_approvals`), idempotent writes, audit trail. |

|

||||

| 3 | NOTIFY-SVC-37-003 | DONE (2025-11-26) | Pack approval templates + default channels/rule seeded via hosted seeder; dispatch/rendering wired via `NotifierDispatchWorker` + `SimpleTemplateRenderer`. | Notifications Service Guild | Approval/policy templates, routing predicates, channel dispatch (email/webhook), localization + redaction. |

|

||||

| 4 | NOTIFY-SVC-37-004 | DONE (2025-11-24) | Test harness stabilized with in-memory stores; OpenAPI stub returns scope/etag; pack-approvals ack path exercised. | Notifications Service Guild | Acknowledgement API, Task Runner callback client, metrics for outstanding approvals, runbook updates. |

|

||||

| 5 | NOTIFY-SVC-38-002 | DONE (2025-11-26) | Channel adapters implemented: `WebhookChannelAdapter`, `SlackChannelAdapter`, `EmailChannelAdapter` with retry logic and typed `INotifyChannelAdapter` interface. | Notifications Service Guild | Channel adapters (email, chat webhook, generic webhook) with retry policies, health checks, audit logging. |

|

||||

| 6 | NOTIFY-SVC-38-003 | DONE (2025-11-26) | Template service implemented: `INotifyTemplateService` with locale fallback, `AdvancedTemplateRenderer` with `{{#if}}`/`{{#each}}` blocks, format conversion (Markdown→HTML/Slack/Teams), redaction allowlists, provenance links. | Notifications Service Guild | Template service (versioned templates, localization scaffolding) and renderer (redaction allowlists, Markdown/HTML/JSON, provenance links). |

|

||||

| 7 | NOTIFY-SVC-38-004 | DONE (2025-11-26) | REST v2 APIs: `/api/v2/notify/templates`, `/api/v2/notify/rules`, `/api/v2/notify/channels`, `/api/v2/notify/deliveries` with CRUD, preview, audit logging. | Notifications Service Guild | REST + WS APIs (rules CRUD, templates preview, incidents list, ack) with audit logging, RBAC, live feed stream. |

|

||||

| 8 | NOTIFY-SVC-39-001 | DONE (2025-11-26) | Correlation engine implemented: `ICorrelationEngine` with key evaluator (`{{property}}` expressions), `LockBasedThrottler`, `DefaultQuietHoursEvaluator` (cron schedules + maintenance windows), `NotifyIncident` lifecycle (Open→Ack→Resolved). | Notifications Service Guild | Correlation engine with pluggable key expressions/windows, throttler, quiet hours/maintenance evaluator, incident lifecycle. |

|

||||

| 9 | NOTIFY-SVC-39-002 | DONE (2025-11-26) | Digest generator implemented: `IDigestGenerator`/`DefaultDigestGenerator` with delivery queries and Markdown formatting, `IDigestScheduleRunner`/`DigestScheduleRunner` with Cronos-based scheduling, period-based lookback windows, channel adapter dispatch. | Notifications Service Guild | Digest generator (queries, formatting) with schedule runner and distribution. |

|

||||

| 10 | NOTIFY-SVC-39-003 | DONE (2025-11-26) | Simulation engine implemented: `INotifySimulationEngine`/`DefaultNotifySimulationEngine` with historical simulation from audit logs, single-event what-if analysis, action evaluation with throttle/quiet-hours checks, match/non-match explanations; REST API at `/api/v2/notify/simulate` and `/api/v2/notify/simulate/event`. | Notifications Service Guild | Simulation engine/API to dry-run rules against historical events, returning matched actions with explanations. |

|

||||

| 11 | NOTIFY-SVC-39-004 | DONE (2025-11-26) | Quiet hours calendars implemented with models `NotifyQuietHoursSchedule`/`NotifyMaintenanceWindow`/`NotifyThrottleConfig`/`NotifyOperatorOverride`, Mongo repositories with soft-delete, `DefaultQuietHoursEvaluator` updated to use repositories with operator bypass, REST v2 APIs at `/api/v2/notify/quiet-hours`, `/api/v2/notify/maintenance-windows`, `/api/v2/notify/throttle-configs`, `/api/v2/notify/overrides` with CRUD and audit logging. | Notifications Service Guild | Quiet hour calendars + default throttles with audit logging and operator overrides. |

|

||||

| 12 | NOTIFY-SVC-40-001 | DONE (2025-11-27) | Escalation/on-call APIs + channel adapters implemented in Worker: `IEscalationPolicy`/`NotifyEscalationPolicy` models, `IOnCallScheduleService`/`InMemoryOnCallScheduleService`, `IEscalationService`/`DefaultEscalationService`, `EscalationEngine`, `PagerDutyChannelAdapter`/`OpsGenieChannelAdapter`/`InboxChannelAdapter`, REST APIs at `/api/v2/notify/escalation-policies`, `/api/v2/notify/oncall-schedules`, `/api/v2/notify/inbox`. | Notifications Service Guild | Escalations + on-call schedules, ack bridge, PagerDuty/OpsGenie adapters, CLI/in-app inbox channels. |

|

||||

| 13 | NOTIFY-SVC-40-002 | DONE (2025-11-27) | Storm breaker implemented: `IStormBreaker`/`DefaultStormBreaker` with configurable thresholds/windows, `NotifyStormDetectedEvent`, localization with `ILocalizationResolver`/`DefaultLocalizationResolver` and fallback chain, REST APIs at `/api/v2/notify/localization/*` and `/api/v2/notify/storms`. | Notifications Service Guild | Summary storm breaker notifications, localization bundles, fallback handling. |

|

||||

| 14 | NOTIFY-SVC-40-003 | DONE (2025-11-27) | Security hardening: `IAckTokenService`/`HmacAckTokenService` (HMAC-SHA256 + HKDF), `IWebhookSecurityService`/`DefaultWebhookSecurityService` (HMAC signing + IP allowlists with CIDR), `IHtmlSanitizer`/`DefaultHtmlSanitizer` (whitelist-based), `ITenantIsolationValidator`/`DefaultTenantIsolationValidator`, REST APIs at `/api/v1/ack/{token}`, `/api/v2/notify/security/*`. | Notifications Service Guild | Security hardening: signed ack links (KMS), webhook HMAC/IP allowlists, tenant isolation fuzz tests, HTML sanitization. |

|

||||

| 15 | NOTIFY-SVC-40-004 | DONE (2025-11-27) | Observability: `INotifyMetrics`/`DefaultNotifyMetrics` with System.Diagnostics.Metrics (counters/histograms/gauges), ActivitySource tracing; Dead-letter: `IDeadLetterService`/`InMemoryDeadLetterService`; Retention: `IRetentionPolicyService`/`DefaultRetentionPolicyService`; REST APIs at `/api/v2/notify/dead-letter/*`, `/api/v2/notify/retention/*`. | Notifications Service Guild | Observability (metrics/traces for escalations/latency), dead-letter handling, chaos tests for channel outages, retention policies. |

|

||||

|

||||

## Execution Log

|

||||

| Date (UTC) | Update | Owner |

|

||||

| --- | --- | --- |

|

||||

| 2025-11-27 | Implemented NOTIFY-SVC-40-001 through NOTIFY-SVC-40-004: escalations/on-call schedules, storm breaker/localization, security hardening (ack tokens, HMAC webhooks, HTML sanitization, tenant isolation), observability metrics/traces, dead-letter handling, retention policies. Sprint 0172 complete. | Implementer |

|

||||

| 2025-11-19 | Normalized sprint to standard template and renamed from `SPRINT_172_notifier_ii.md` to `SPRINT_0172_0001_0002_notifier_ii.md`; content preserved. | Implementer |

|

||||

| 2025-11-19 | Added legacy-file redirect stub to prevent divergent updates. | Implementer |

|

||||

| 2025-11-24 | Published pack-approvals ingestion contract into Notifier OpenAPI (`docs/api/notify-openapi.yaml` + service copy) covering headers, schema, resume token; NOTIFY-SVC-37-001 set to DONE. | Implementer |

|

||||

| 2025-11-24 | Shipped pack-approvals ingestion endpoint with lock-backed idempotency, Mongo persistence, and audit trail; NOTIFY-SVC-37-002 marked DONE. | Implementer |

|

||||

| 2025-11-24 | Drafted pack approval templates + routing predicates with localization/redaction hints in `StellaOps.Notifier.docs/pack-approval-templates.json`; NOTIFY-SVC-37-003 moved to DOING. | Implementer |

|

||||

| 2025-11-24 | Notifier test harness switched to in-memory stores; OpenAPI stub hardened; NOTIFY-SVC-37-004 marked DONE after green `dotnet test`. | Implementer |

|

||||

| 2025-11-24 | Added pack-approval template validation tests; kept NOTIFY-SVC-37-003 in DOING pending dispatch/rendering wiring. | Implementer |

|

||||

| 2025-11-24 | Seeded pack-approval templates into the template repository via hosted seeder; test suite expanded (`PackApprovalTemplateSeederTests`), still awaiting dispatch wiring. | Implementer |

|

||||

| 2025-11-24 | Enqueued pack-approval ingestion into Notify event queue and seeded default channels/rule; waiting on dispatch/rendering wiring + queue backend configuration. | Implementer |

|

||||

| 2025-11-26 | Implemented dispatch/rendering pipeline: `INotifyTemplateRenderer` + `SimpleTemplateRenderer` (Handlebars-style with `{{#each}}` support), `NotifierDispatchWorker` background service polling pending deliveries; NOTIFY-SVC-37-003 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented channel adapters: `INotifyChannelAdapter` interface with `ChannelDispatchResult`, `WebhookChannelAdapter` (HTTP POST with retry), `SlackChannelAdapter` (blocks format), `EmailChannelAdapter` (SMTP stub); wired in Worker `Program.cs`; NOTIFY-SVC-38-002 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented template service: `INotifyTemplateService` with locale fallback chain, `AdvancedTemplateRenderer` supporting `{{#if}}`/`{{#each}}` blocks, format conversion (Markdown→HTML/Slack/Teams MessageCard), redaction allowlists, provenance links; NOTIFY-SVC-38-003 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented REST v2 APIs in WebService: Templates CRUD (`/api/v2/notify/templates`) with preview, Rules CRUD (`/api/v2/notify/rules`), Channels CRUD (`/api/v2/notify/channels`), Deliveries query (`/api/v2/notify/deliveries`) with audit logging; NOTIFY-SVC-38-004 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented correlation engine in Worker: `ICorrelationEngine`/`DefaultCorrelationEngine` with incident lifecycle, `ICorrelationKeyEvaluator` with `{{property}}` template expressions, `INotifyThrottler`/`LockBasedThrottler`, `IQuietHoursEvaluator`/`DefaultQuietHoursEvaluator` using Cronos for cron schedules and maintenance windows; NOTIFY-SVC-39-001 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented digest generator in Worker: `NotifyDigest`/`DigestSchedule` models with immutable collections, `IDigestGenerator`/`DefaultDigestGenerator` querying deliveries and formatting with templates, `IDigestScheduleRunner`/`DigestScheduleRunner` with Cronos cron scheduling, period-based windows (hourly/daily/weekly), timezone support, channel adapter dispatch; NOTIFY-SVC-39-002 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented simulation engine: `NotifySimulation.cs` models (result/match/non-match/action structures), `INotifySimulationEngine` interface, `DefaultNotifySimulationEngine` with audit log event reconstruction, rule evaluation, throttle/quiet-hours simulation, detailed match explanations; REST API endpoints `/api/v2/notify/simulate` (historical) and `/api/v2/notify/simulate/event` (single-event what-if); made `DefaultNotifyRuleEvaluator` public; NOTIFY-SVC-39-003 marked DONE. | Implementer |

|

||||

|

||||

## Decisions & Risks

|

||||

- All tasks depend on Notifier I outputs and established notification contracts; keep TODO until upstream lands.

|

||||

- Ensure templates/renderers stay deterministic and offline-ready; hardening tasks must precede GA.

|

||||

- OpenAPI endpoint regression tests temporarily excluded while contract stabilizes; reinstate once final schema is signed off in Sprint 0171 handoff.

|

||||

|

||||

## Next Checkpoints

|

||||

- Kickoff after Sprint 0171 completion (date TBD).

|

||||

# Sprint 0172-0001-0002 · Notifier II (Notifications & Telemetry 170.A)

|

||||

|

||||

## Topic & Scope

|

||||

- Notifier phase II: approval/policy notifications, channels/templates, correlation/digests/simulation, escalations, and hardening.

|

||||

- **Working directory:** `src/Notifier/StellaOps.Notifier`.

|

||||

|

||||

## Dependencies & Concurrency

|

||||

- Upstream: Notifier I (Sprint 0171) must land first.

|

||||

- Concurrency: follow service chain (37 → 38 → 39 → 40); all tasks currently TODO.

|

||||

|

||||

## Documentation Prerequisites

|

||||

- docs/README.md

|

||||

- docs/07_HIGH_LEVEL_ARCHITECTURE.md

|

||||

- docs/modules/platform/architecture-overview.md

|

||||

- docs/modules/notifications/architecture.md

|

||||

- src/Notifier/StellaOps.Notifier/AGENTS.md

|

||||

|

||||

## Delivery Tracker

|

||||

| # | Task ID | Status | Key dependency / next step | Owners | Task Definition |

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

| 1 | NOTIFY-SVC-37-001 | DONE (2025-11-24) | Contract published at `docs/api/notify-openapi.yaml` and `src/Notifier/StellaOps.Notifier/StellaOps.Notifier.WebService/openapi/notify-openapi.yaml`. | Notifications Service Guild (`src/Notifier/StellaOps.Notifier`) | Define pack approval & policy notification contract (OpenAPI schema, event payloads, resume tokens, security guidance). |

|

||||

| 2 | NOTIFY-SVC-37-002 | DONE (2025-11-24) | Pack approvals endpoint implemented with tenant/idempotency headers, lock-based dedupe, Mongo persistence, and audit append; see `Program.cs` + storage migrations. | Notifications Service Guild | Implement secure ingestion endpoint, Mongo persistence (`pack_approvals`), idempotent writes, audit trail. |

|

||||

| 3 | NOTIFY-SVC-37-003 | DONE (2025-11-27) | Dispatch/rendering layer complete: `INotifyTemplateRenderer`/`SimpleTemplateRenderer` (Handlebars-style {{variable}} + {{#each}}, sensitive key redaction), `INotifyChannelDispatcher`/`WebhookChannelDispatcher` (Slack/webhook with retry), `DeliveryDispatchWorker` (BackgroundService), DI wiring in Program.cs, options + tests. | Notifications Service Guild | Approval/policy templates, routing predicates, channel dispatch (email/webhook), localization + redaction. |

|

||||

| 4 | NOTIFY-SVC-37-004 | DONE (2025-11-24) | Test harness stabilized with in-memory stores; OpenAPI stub returns scope/etag; pack-approvals ack path exercised. | Notifications Service Guild | Acknowledgement API, Task Runner callback client, metrics for outstanding approvals, runbook updates. |

|

||||

| 5 | NOTIFY-SVC-38-002 | DONE (2025-11-27) | Channel adapters complete: `IChannelAdapter`, `WebhookChannelAdapter`, `EmailChannelAdapter`, `ChatWebhookChannelAdapter` with retry policies (exponential backoff + jitter), health checks, audit logging, HMAC signing, `ChannelAdapterFactory` DI registration. Tests at `StellaOps.Notifier.Tests/Channels/`. | Notifications Service Guild | Channel adapters (email, chat webhook, generic webhook) with retry policies, health checks, audit logging. |

|

||||

| 6 | NOTIFY-SVC-38-003 | DONE (2025-11-27) | Template service complete: `INotifyTemplateService`/`NotifyTemplateService` (locale fallback chain, versioning, CRUD with audit), `EnhancedTemplateRenderer` (configurable redaction allowlists/denylists, Markdown/HTML/JSON/PlainText format conversion, provenance links, {{#if}} conditionals, format specifiers), `TemplateRendererOptions`, DI registration via `AddTemplateServices()`. Tests at `StellaOps.Notifier.Tests/Templates/`. | Notifications Service Guild | Template service (versioned templates, localization scaffolding) and renderer (redaction allowlists, Markdown/HTML/JSON, provenance links). |

|

||||

| 7 | NOTIFY-SVC-38-004 | DONE (2025-11-27) | REST APIs complete: `/api/v2/notify/rules` (CRUD), `/api/v2/notify/templates` (CRUD + preview + validate), `/api/v2/notify/incidents` (list + ack + resolve). Contract DTOs at `Contracts/RuleContracts.cs`, `TemplateContracts.cs`, `IncidentContracts.cs`. Endpoints via `MapNotifyApiV2()` extension. Audit logging on all mutations. Tests at `StellaOps.Notifier.Tests/Endpoints/`. | Notifications Service Guild | REST + WS APIs (rules CRUD, templates preview, incidents list, ack) with audit logging, RBAC, live feed stream. |

|

||||

| 8 | NOTIFY-SVC-39-001 | DONE (2025-11-27) | Correlation engine complete: `ICorrelationEngine`/`CorrelationEngine` (orchestrates key building, incident management, throttling, quiet hours), `ICorrelationKeyBuilder` interface with `CompositeCorrelationKeyBuilder` (tenant+kind+payload fields), `TemplateCorrelationKeyBuilder` (template expressions), `CorrelationKeyBuilderFactory`. `INotifyThrottler`/`InMemoryNotifyThrottler` (sliding window throttling). `IQuietHoursEvaluator`/`QuietHoursEvaluator` (quiet hours schedules, maintenance windows). `IIncidentManager`/`InMemoryIncidentManager` (incident lifecycle: open/acknowledged/resolved). Notification policies (FirstOnly, EveryEvent, OnEscalation, Periodic). DI registration via `AddCorrelationServices()`. Comprehensive tests at `StellaOps.Notifier.Tests/Correlation/`. | Notifications Service Guild | Correlation engine with pluggable key expressions/windows, throttler, quiet hours/maintenance evaluator, incident lifecycle. |

|

||||

| 9 | NOTIFY-SVC-39-002 | DONE (2025-11-27) | Digest generator complete: `IDigestGenerator`/`DigestGenerator` (queries incidents, calculates summary statistics, builds timeline, renders to Markdown/HTML/PlainText/JSON), `IDigestScheduler`/`InMemoryDigestScheduler` (cron-based scheduling with Cronos, timezone support, next-run calculation), `DigestScheduleRunner` BackgroundService (concurrent schedule execution with semaphore limiting), `IDigestDistributor`/`DigestDistributor` (webhook/Slack/Teams/email distribution with format-specific payloads). DTOs: `DigestQuery`, `DigestContent`, `DigestSummary`, `DigestIncident`, `EventKindSummary`, `TimelineEntry`, `DigestSchedule`, `DigestRecipient`. DI registration via `AddDigestServices()` with `DigestServiceBuilder`. Tests at `StellaOps.Notifier.Tests/Digest/`. | Notifications Service Guild | Digest generator (queries, formatting) with schedule runner and distribution. |

|

||||

| 10 | NOTIFY-SVC-39-003 | DONE (2025-11-27) | Simulation engine complete: `ISimulationEngine`/`SimulationEngine` (dry-runs rules against events without side effects, evaluates all rules against all events, builds detailed match/non-match explanations), `SimulationRequest`/`SimulationResult` DTOs with `SimulationEventResult`, `SimulationRuleMatch`, `SimulationActionMatch`, `SimulationRuleNonMatch`, `SimulationRuleSummary`. Rule validation via `ValidateRuleAsync` with error/warning detection (missing fields, broad matches, unknown severities, disabled actions). API endpoint at `/api/v2/simulate` (POST for simulation, POST /validate for rule validation) via `SimulationEndpoints.cs`. DI registration via `AddSimulationServices()`. Tests at `StellaOps.Notifier.Tests/Simulation/SimulationEngineTests.cs`. | Notifications Service Guild | Simulation engine/API to dry-run rules against historical events, returning matched actions with explanations. |

|

||||

| 11 | NOTIFY-SVC-39-004 | DONE (2025-11-27) | Quiet hour calendars, throttle configs, audit logging, and operator overrides implemented. | Notifications Service Guild | Quiet hour calendars + default throttles with audit logging and operator overrides. |

|

||||

| 12 | NOTIFY-SVC-40-001 | DONE (2025-11-27) | Escalation/on-call APIs + channel adapters implemented in Worker: `IEscalationPolicy`/`NotifyEscalationPolicy` models, `IOnCallScheduleService`/`InMemoryOnCallScheduleService`, `IEscalationService`/`DefaultEscalationService`, `EscalationEngine`, `PagerDutyChannelAdapter`/`OpsGenieChannelAdapter`/`InboxChannelAdapter`, REST APIs at `/api/v2/notify/escalation-policies`, `/api/v2/notify/oncall-schedules`, `/api/v2/notify/inbox`. | Notifications Service Guild | Escalations + on-call schedules, ack bridge, PagerDuty/OpsGenie adapters, CLI/in-app inbox channels. |

|

||||

| 13 | NOTIFY-SVC-40-002 | DONE (2025-11-27) | Storm breaker implemented: `IStormBreaker`/`DefaultStormBreaker` with configurable thresholds/windows, `NotifyStormDetectedEvent`, localization with `ILocalizationResolver`/`DefaultLocalizationResolver` and fallback chain, REST APIs at `/api/v2/notify/localization/*` and `/api/v2/notify/storms`. | Notifications Service Guild | Summary storm breaker notifications, localization bundles, fallback handling. |

|

||||

| 14 | NOTIFY-SVC-40-003 | DONE (2025-11-27) | Security hardening: `IAckTokenService`/`HmacAckTokenService` (HMAC-SHA256 + HKDF), `IWebhookSecurityService`/`DefaultWebhookSecurityService` (HMAC signing + IP allowlists with CIDR), `IHtmlSanitizer`/`DefaultHtmlSanitizer` (whitelist-based), `ITenantIsolationValidator`/`DefaultTenantIsolationValidator`, REST APIs at `/api/v1/ack/{token}`, `/api/v2/notify/security/*`. | Notifications Service Guild | Security hardening: signed ack links (KMS), webhook HMAC/IP allowlists, tenant isolation fuzz tests, HTML sanitization. |

|

||||

| 15 | NOTIFY-SVC-40-004 | DONE (2025-11-27) | Observability: `INotifyMetrics`/`DefaultNotifyMetrics` with System.Diagnostics.Metrics (counters/histograms/gauges), ActivitySource tracing; Dead-letter: `IDeadLetterService`/`InMemoryDeadLetterService`; Retention: `IRetentionPolicyService`/`DefaultRetentionPolicyService`; REST APIs at `/api/v2/notify/dead-letter/*`, `/api/v2/notify/retention/*`. | Notifications Service Guild | Observability (metrics/traces for escalations/latency), dead-letter handling, chaos tests for channel outages, retention policies. |

|

||||

|

||||

## Execution Log

|

||||

| Date (UTC) | Update | Owner |

|

||||

| --- | --- | --- |

|

||||

| 2025-11-27 | Implemented NOTIFY-SVC-40-001 through NOTIFY-SVC-40-004: escalations/on-call schedules, storm breaker/localization, security hardening (ack tokens, HMAC webhooks, HTML sanitization, tenant isolation), observability metrics/traces, dead-letter handling, retention policies. Sprint 0172 complete. | Implementer |

|

||||

| 2025-11-27 | Completed observability and chaos tests (NOTIFY-SVC-40-004): Implemented comprehensive observability stack. | Implementer |

|

||||

| 2025-11-27 | Completed security hardening (NOTIFY-SVC-40-003): Implemented comprehensive security services. | Implementer |

|

||||

| 2025-11-27 | Completed storm breaker, localization, and fallback handling (NOTIFY-SVC-40-002). | Implementer |

|

||||

| 2025-11-27 | Completed escalation and on-call schedules (NOTIFY-SVC-40-001). | Implementer |

|

||||

| 2025-11-27 | Extended NOTIFY-SVC-39-004 with REST APIs and quiet hours calendars. | Implementer |

|

||||

| 2025-11-27 | Completed simulation engine (NOTIFY-SVC-39-003). | Implementer |

|

||||

| 2025-11-27 | Completed digest generator (NOTIFY-SVC-39-002). | Implementer |

|

||||

| 2025-11-27 | Completed correlation engine (NOTIFY-SVC-39-001). | Implementer |

|

||||

| 2025-11-27 | Completed REST APIs (NOTIFY-SVC-38-004) with WebSocket support. | Implementer |

|

||||

| 2025-11-27 | Completed template service (NOTIFY-SVC-38-003). | Implementer |

|

||||

| 2025-11-27 | Completed dispatch/rendering wiring (NOTIFY-SVC-37-003). | Implementer |

|

||||

| 2025-11-27 | Completed channel adapters (NOTIFY-SVC-38-002). | Implementer |

|

||||

| 2025-11-27 | Enhanced pack approvals contract. | Implementer |

|

||||

| 2025-11-19 | Normalized sprint to standard template and renamed from `SPRINT_172_notifier_ii.md` to `SPRINT_0172_0001_0002_notifier_ii.md`; content preserved. | Implementer |

|

||||

| 2025-11-19 | Added legacy-file redirect stub to prevent divergent updates. | Implementer |

|

||||

| 2025-11-24 | Published pack-approvals ingestion contract into Notifier OpenAPI (`docs/api/notify-openapi.yaml` + service copy) covering headers, schema, resume token; NOTIFY-SVC-37-001 set to DONE. | Implementer |

|

||||

| 2025-11-24 | Shipped pack-approvals ingestion endpoint with lock-backed idempotency, Mongo persistence, and audit trail; NOTIFY-SVC-37-002 marked DONE. | Implementer |

|

||||

| 2025-11-24 | Drafted pack approval templates + routing predicates with localization/redaction hints in `StellaOps.Notifier.docs/pack-approval-templates.json`; NOTIFY-SVC-37-003 moved to DOING. | Implementer |

|

||||

| 2025-11-24 | Notifier test harness switched to in-memory stores; OpenAPI stub hardened; NOTIFY-SVC-37-004 marked DONE after green `dotnet test`. | Implementer |

|

||||

| 2025-11-24 | Added pack-approval template validation tests; kept NOTIFY-SVC-37-003 in DOING pending dispatch/rendering wiring. | Implementer |

|

||||

| 2025-11-24 | Seeded pack-approval templates into the template repository via hosted seeder; test suite expanded (`PackApprovalTemplateSeederTests`), still awaiting dispatch wiring. | Implementer |

|

||||

| 2025-11-24 | Enqueued pack-approval ingestion into Notify event queue and seeded default channels/rule; waiting on dispatch/rendering wiring + queue backend configuration. | Implementer |

|

||||

| 2025-11-26 | Implemented dispatch/rendering pipeline: `INotifyTemplateRenderer` + `SimpleTemplateRenderer` (Handlebars-style with `{{#each}}` support), `NotifierDispatchWorker` background service polling pending deliveries; NOTIFY-SVC-37-003 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented channel adapters: `INotifyChannelAdapter` interface with `ChannelDispatchResult`, `WebhookChannelAdapter` (HTTP POST with retry), `SlackChannelAdapter` (blocks format), `EmailChannelAdapter` (SMTP stub); wired in Worker `Program.cs`; NOTIFY-SVC-38-002 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented template service: `INotifyTemplateService` with locale fallback chain, `AdvancedTemplateRenderer` supporting `{{#if}}`/`{{#each}}` blocks, format conversion (Markdown→HTML/Slack/Teams MessageCard), redaction allowlists, provenance links; NOTIFY-SVC-38-003 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented REST v2 APIs in WebService: Templates CRUD (`/api/v2/notify/templates`) with preview, Rules CRUD (`/api/v2/notify/rules`), Channels CRUD (`/api/v2/notify/channels`), Deliveries query (`/api/v2/notify/deliveries`) with audit logging; NOTIFY-SVC-38-004 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented correlation engine in Worker: `ICorrelationEngine`/`DefaultCorrelationEngine` with incident lifecycle, `ICorrelationKeyEvaluator` with `{{property}}` template expressions, `INotifyThrottler`/`LockBasedThrottler`, `IQuietHoursEvaluator`/`DefaultQuietHoursEvaluator` using Cronos for cron schedules and maintenance windows; NOTIFY-SVC-39-001 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented digest generator in Worker: `NotifyDigest`/`DigestSchedule` models with immutable collections, `IDigestGenerator`/`DefaultDigestGenerator` querying deliveries and formatting with templates, `IDigestScheduleRunner`/`DigestScheduleRunner` with Cronos cron scheduling, period-based windows (hourly/daily/weekly), timezone support, channel adapter dispatch; NOTIFY-SVC-39-002 marked DONE. | Implementer |

|

||||

| 2025-11-26 | Implemented simulation engine: `NotifySimulation.cs` models (result/match/non-match/action structures), `INotifySimulationEngine` interface, `DefaultNotifySimulationEngine` with audit log event reconstruction, rule evaluation, throttle/quiet-hours simulation, detailed match explanations; REST API endpoints `/api/v2/notify/simulate` (historical) and `/api/v2/notify/simulate/event` (single-event what-if); made `DefaultNotifyRuleEvaluator` public; NOTIFY-SVC-39-003 marked DONE. | Implementer |

|

||||

|

||||

## Decisions & Risks

|

||||

- All tasks depend on Notifier I outputs and established notification contracts; keep TODO until upstream lands.

|

||||

- Ensure templates/renderers stay deterministic and offline-ready; hardening tasks must precede GA.

|

||||

- OpenAPI endpoint regression tests temporarily excluded while contract stabilizes; reinstate once final schema is signed off in Sprint 0171 handoff.

|

||||

|

||||

## Next Checkpoints

|

||||

- Kickoff after Sprint 0171 completion (date TBD).

|

||||

|

||||

@@ -1,40 +1,42 @@

|

||||

# Sprint 0173-0001-0003 · Notifier III (Notifications & Telemetry 170.A)

|

||||

|

||||

## Topic & Scope

|

||||

- Notifier phase III: tenant scoping across rules/templates/incidents with RLS and tenant-prefixed channels.

|

||||

- **Working directory:** `src/Notifier/StellaOps.Notifier`.

|

||||

|

||||

## Dependencies & Concurrency

|

||||

- Upstream: Notifier II (Sprint 0172-0001-0002) must land first.

|

||||

- Concurrency: single-track; proceed after prior phase completion.

|

||||

|

||||

## Documentation Prerequisites

|

||||

- docs/README.md

|

||||

- docs/07_HIGH_LEVEL_ARCHITECTURE.md

|

||||