Add Christmas advisories


@@ -0,0 +1,104 @@

Here’s a tight, practical blueprint for evolving Stella Ops’s policy engine into a **fully deterministic verdict engine**, so that the *same SBOM + VEX + reachability subgraph ⇒ the exact same, replayable verdict* every time, with auditor-grade trails and signed “delta verdicts.”

# Why this matters (quick)

* **Reproducibility:** auditors can replay any scan and get identical results.
* **Trust & scale:** cross-agent consensus via content-addressed inputs and signed outputs.
* **Operational clarity:** diffs between builds become crisp, machine-verifiable artifacts.

# Core principles

* **Determinism-first:** no wall-clock time, no random iteration order, no network during evaluation.
* **Content-addressing:** hash every *input* (SBOM, VEX docs, reachability subgraph, policy set, rule versions, feed snapshots).
* **Declarative state:** a compact **Scan Manifest** lists input hashes + policy bundle hash + engine version.
* **Pure evaluation:** the verdict function is referentially transparent: `Verdict = f(Manifest)`.
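
The content-addressing and manifest principles above can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the Stella Ops implementation: the `ScanManifest` record, its property names, and `ManifestHasher` are hypothetical, and the fixed-order line encoding is a stand-in for whatever canonical serialization the engine actually adopts.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

// Hypothetical manifest shape (an assumption for illustration only).
public sealed record ScanManifest(
    string SbomSha256,
    IReadOnlyList<string> VexSetSha256,
    string ReachSubgraphSha256,
    string FeedsSnapshotSha256,
    string PolicyBundleSha256,
    string EngineVersion);

public static class ManifestHasher
{
    // Derives a content ID from the manifest fields only: no clock, no randomness, no I/O.
    public static string ComputeId(ScanManifest m)
    {
        // Sort the VEX hashes ordinally so caller-supplied order cannot change the ID.
        var vex = m.VexSetSha256.OrderBy(h => h, StringComparer.Ordinal);

        // Fixed field order, one field per line.
        var lines = new[]
        {
            "sbom=" + m.SbomSha256,
            "vex=" + string.Join(",", vex),
            "reach=" + m.ReachSubgraphSha256,
            "feeds=" + m.FeedsSnapshotSha256,
            "policy=" + m.PolicyBundleSha256,
            "engine=" + m.EngineVersion,
        };

        byte[] bytes = Encoding.UTF8.GetBytes(string.Join("\n", lines));
        return Convert.ToHexString(SHA256.HashData(bytes)).ToLowerInvariant();
    }
}
```

Two manifests that list the same VEX hashes in a different order get the same ID, while changing any single field (for example the engine version) yields a different one. That is the property a replay endpoint would rely on.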

# Data artifacts

* **Scan Manifest (`manifest.jsonc`)**

  * `sbom_sha256`, `vex_set_sha256[]`, `reach_subgraph_sha256`, `feeds_snapshot_sha256`, `policy_bundle_sha256`, `engine_version`, `policy_semver`, `options_hash`
* **Verdict (`verdict.json`)**

  * canonical JSON (stable key order); includes:

    * `risk_score`, `status` (pass/warn/fail), `unknowns_count`
    * **evidence_refs:** content IDs for cited VEX statements, nodes/edges from reachability, CVE records, feature-flags, env-guards
    * **explanations:** stable, template-driven strings (+ machine reasons)
* **Delta Verdict (`delta.json`)**

  * computed between two manifests/verdicts:

    * `added_findings[]`, `removed_findings[]`, `severity_shift[]`, `unknowns_delta`, `policy_effects[]`
  * signed (DSSE/COSE/JWS), time-stamped, and linkable to both verdicts
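
A delta between two verdicts can be sketched as a plain set difference over finding IDs. The sketch below is illustrative only: `DeltaVerdict` and `DeltaCalculator` are hypothetical names, findings are assumed to be represented by stable string IDs, and `severity_shift[]` and `policy_effects[]` are left out for brevity.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, reduced delta shape (assumption): only added/removed findings and unknowns.
public sealed record DeltaVerdict(
    IReadOnlyList<string> AddedFindings,
    IReadOnlyList<string> RemovedFindings,
    int UnknownsDelta);

public static class DeltaCalculator
{
    public static DeltaVerdict Compute(
        IEnumerable<string> baseFindings, int baseUnknowns,
        IEnumerable<string> headFindings, int headUnknowns)
    {
        var baseSet = new HashSet<string>(baseFindings, StringComparer.Ordinal);
        var headSet = new HashSet<string>(headFindings, StringComparer.Ordinal);

        // Sort the results ordinally so the serialized delta does not depend on hash-set order.
        var added = headSet.Where(id => !baseSet.Contains(id))
                           .OrderBy(id => id, StringComparer.Ordinal)
                           .ToList();
        var removed = baseSet.Where(id => !headSet.Contains(id))
                             .OrderBy(id => id, StringComparer.Ordinal)
                             .ToList();

        return new DeltaVerdict(added, removed, headUnknowns - baseUnknowns);
    }
}
```

Sorting the output lists matters as much as the set arithmetic: without it, two engines could compute the same delta but serialize it differently, and their signatures would not match.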

# Engine architecture (deterministic path)

1. **Normalize inputs**

   * SBOM: sort by `packageUrl`/`name@version`; resolve aliases; freeze semver comparison rules.
   * VEX: normalize provider → `vex_id`, `product_ref`, `status` (`affected`, `not_affected`, …), *with* source trust score precomputed from a **trust registry** (strict, versioned).
   * Reachability: store subgraph as adjacency lists sorted by node ID; hash after topological stable ordering.
   * Feeds: lock to a **snapshot** (timestamp + commit/hash); no live calls.
2. **Policy bundle**

   * Declarative rules (e.g., lattice/merge semantics), compiled to a **canonical IR** (e.g., OPA-Rego → sorted DNF).
   * Merge precedence is explicit (e.g., `vendor > distro > internal` can be replaced by a lattice-merge table).
   * Unknowns policy baked in: e.g., `fail_if_unknowns > N in prod`.
3. **Evaluation**

   * Build a **finding set**: `(component, vuln, context)` tuples with deterministic IDs.
   * Apply **lattice-based VEX merge** (proof-carrying): each suppression must carry an evidence pointer (feature flag off, code path unreachable, patched-backport proof).
   * Compute final `status` and `risk_score` using fixed-precision math; rounding rules are part of the bundle.
4. **Emit**

   * Canonicalize verdict JSON; attach **evidence map** (content IDs only).
   * Sign verdict; attach as **OCI attestation** to image/digest.
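
Two pieces of the evaluation step, deterministic finding IDs and fixed-precision scoring, might look like the sketch below. It rests on assumptions: `EvaluationSketch` is a hypothetical name, the U+001F separator is assumed never to occur inside the tuple fields, and the rounding rule (two places, round-half-to-even) is only an example of a rule that the policy bundle would pin.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

public static class EvaluationSketch
{
    // Deterministic finding ID: SHA-256 over the (component, vuln, context) tuple,
    // joined with a separator (U+001F) assumed not to occur in the fields themselves.
    public static string FindingId(string component, string vuln, string context)
    {
        string payload = string.Join("\u001f", component, vuln, context);
        byte[] digest = SHA256.HashData(Encoding.UTF8.GetBytes(payload));
        return Convert.ToHexString(digest).ToLowerInvariant();
    }

    // Fixed-precision score: decimal arithmetic, inputs summed in sorted order,
    // and an explicit rounding mode instead of the runtime default.
    public static decimal RiskScore(IEnumerable<decimal> contributions)
    {
        decimal sum = 0m;
        foreach (decimal value in contributions.OrderBy(x => x))
            sum += value;
        return Math.Round(sum, 2, MidpointRounding.ToEven);
    }
}
```

Using `decimal` rather than `double` avoids binary floating-point surprises such as `0.1 + 0.2`, and spelling out the rounding mode keeps a midpoint such as `0.225` from rounding differently across implementations.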

# APIs (minimal but complete)

* `POST /evaluate` → returns `verdict.json` + attestation
* `POST /delta` with `{base_verdict, head_verdict}` → `delta.json` (signed)
* `GET /replay?manifest_sha=` → re-executes using cached snapshot bundles, returns the same `verdict_sha`
* `GET /evidence/:cid` → fetches immutable evidence blobs (offline-ready)

# Storage & indexing

* **CAS (content-addressable store):** `/evidence/<sha256>` for SBOM/VEX/graphs/feeds/policies.
* **Verdict registry:** keyed by `(image_digest, manifest_sha, engine_version)`.
* **Delta ledger:** append-only, signed; supports cross-agent consensus (multiple engines can co-sign identical deltas).

# UI slices (where it lives)

* **Run details → “Verdict” tab:** status, risk score, unknowns, top evidence links.
* **“Diff” tab:** render **Delta Verdict** (added/removed/changed), with drill-down to proofs.
* **“Replay” button:** shows the exact manifest & engine version; one-click re-evaluation (offline possible).
* **Audit export:** zip of `manifest.jsonc`, `verdict.json`, `delta.json` (if any), attestation, and referenced evidence.

# Testing & QA (must-have)

* **Golden tests:** fixtures of manifests → frozen verdict JSONs (byte-for-byte).
* **Chaos determinism tests:** vary thread counts, env vars, map iteration seeds; assert identical verdicts.
* **Cross-engine round-trips:** two independent builds of the engine produce the same verdict for the same manifest.
* **Time-travel tests:** replay older feed snapshots to ensure stability.

# Rollout plan

1. **Phase 1:** Introduce Manifest + canonical verdict format alongside existing policy engine (shadow mode).
2. **Phase 2:** Make verdicts the **first-class artifact** (OCI-attached); ship UI “Verdict/Diff”.
3. **Phase 3:** Enforce **delta-gates** in CI/CD (risk budgets + exception packs referenceable by content ID).
4. **Phase 4:** Open **consensus mode**: accept externally signed identical delta verdicts to strengthen trust.

# Notes for Stella modules

* **scanner.webservice:** keep lattice algorithms here (per your standing rule). Concelier/Excititor “preserve-prune source.”
* **Authority/Attestor:** handle DSSE signing, key management, regional crypto profiles (eIDAS/FIPS/GOST/SM).
* **Feedser/Vexer:** produce immutable **snapshot bundles**; never query live during evaluation.
* **Router/Scheduler:** schedule replay jobs; cache manifests to speed up audits.
* **Db:** Postgres as SoR; Valkey only for ephemeral queues/caches (per your BSD-only profile).

If you want, I can generate:

* a sample **Manifest + Verdict + Delta** trio,
* the **canonical JSON schema**,
* and a **.NET 10** reference evaluator (deterministic LINQ pipeline + fixed-precision math) you can drop into `scanner.webservice`.

@@ -0,0 +1,135 @@

Here’s a small but high-impact practice to make your hashes/signatures and “same inputs → same verdict” truly stable across services: **pick one canonicalization and enforce it at the resolver boundary.**

---

### Why this matters (in plain words)

Two JSON documents that *look* the same can serialize differently (key order, spacing, Unicode forms). If one producer emits slightly different bytes, your REG/verdict hash changes even though the meaning didn’t, breaking dedup, cache hits, attestations, and audits.

---

### The rule

**Adopt one canonicalization spec and apply it everywhere at ingress/egress of your resolver:**

* **Strings:** normalize to **UTF-8, Unicode NFC** (Normalization Form C).
* **JSON:** canonicalize with a deterministic scheme (e.g., **RFC 8785 JCS**: sorted keys, no insignificant whitespace, exact number formatting, escape rules).
* **Binary for hashing/signing:** always hash **the canonical bytes**, never ad-hoc serializer output.

---

### Minimal contract (put this in your CONTRIBUTING/AGREEMENTS.md)

1. Inputs may arrive in any well-formed JSON.
2. Resolver **normalizes strings (NFC)** and **re-emits JSON in JCS**.
3. **REG hash** is computed from **JCS-canonical UTF-8 bytes** only.
4. Any signature/attestation (DSSE/OCI) MUST cover those same bytes.
5. Any module that can’t speak JCS must pass raw data to the resolver; only the resolver serializes.

---

### Practical .NET 10 snippet (drop-in utility)

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;
using System.Text.Encodings.Web;
using System.Text.Json;

public static class Canon
{
    // Compact output without HTML/non-ASCII escaping. This approximates RFC 8785 (JCS):
    // escaping and number formatting are not guaranteed to match the RFC byte-for-byte,
    // so validate against the RFC's test vectors before relying on it for signatures.
    static readonly JsonWriterOptions WriterOptions = new()
    {
        Indented = false,
        Encoder = JavaScriptEncoder.UnsafeRelaxedJsonEscaping
    };

    // 1) Unicode → NFC
    public static string ToNfc(string s) => s.Normalize(NormalizationForm.FormC);

    // 2) Walk JSON tree: NFC all strings, emit object keys in sorted order
    static void WriteCanonical(JsonElement node, Utf8JsonWriter w)
    {
        switch (node.ValueKind)
        {
            case JsonValueKind.String:
                w.WriteStringValue(ToNfc(node.GetString()!));
                break;

            case JsonValueKind.Object:
            {
                // Ordinal comparison sorts by UTF-16 code units (JCS key order)
                var dict = new SortedDictionary<string, JsonElement>(StringComparer.Ordinal);
                foreach (var p in node.EnumerateObject())
                    dict[ToNfc(p.Name)] = p.Value; // duplicate names: last one wins (JCS assumes unique names)

                w.WriteStartObject();
                foreach (var kv in dict)
                {
                    w.WritePropertyName(kv.Key);
                    WriteCanonical(kv.Value, w);
                }
                w.WriteEndObject();
                break;
            }

            case JsonValueKind.Array:
                w.WriteStartArray();
                foreach (var v in node.EnumerateArray())
                    WriteCanonical(v, w); // array order is significant and is preserved
                w.WriteEndArray();
                break;

            default:
                // numbers/bools/null are re-emitted as parsed (no JCS number re-formatting here)
                node.WriteTo(w);
                break;
        }
    }

    static byte[] Serialize(JsonElement node)
    {
        using var buf = new MemoryStream();
        using (var w = new Utf8JsonWriter(buf, WriterOptions)) // disposing the writer flushes it
            WriteCanonical(node, w);
        return buf.ToArray();
    }

    // Returns the normalized tree as a detached element
    public static JsonElement NormalizeStrings(JsonElement node)
    {
        using var doc = JsonDocument.Parse(Serialize(node));
        return doc.RootElement.Clone(); // Clone so the element outlives the document
    }

    // 3) Canonical bytes for hashing/signing
    // (JsonDocument.Parse takes ReadOnlyMemory<byte>, not ReadOnlySpan<byte>)
    public static byte[] CanonicalizeUtf8(ReadOnlyMemory<byte> utf8Json)
    {
        using var doc = JsonDocument.Parse(utf8Json);
        return Serialize(doc.RootElement); // feed into SHA-256/DSSE
    }
}
```

**Usage (hash/sign):**

```csharp
var inputBytes = File.ReadAllBytes("input.json");
var canon = Canon.CanonicalizeUtf8(inputBytes);
var sha256 = System.Security.Cryptography.SHA256.HashData(canon);
// sign `canon` bytes; attach hash to verdict/attestation
```

---

### Drop-in checklist (pin on your wall)

* [ ] One canonicalization policy: **UTF-8 + NFC + JCS**.
* [ ] Resolver owns canonicalization (single choke-point).
* [ ] **REG hash/signatures always over canonical bytes.**
* [ ] CI gate: reject outputs that aren’t JCS; fuzz keys/order/whitespace in tests.
* [ ] Log both the pre-canonical and canonical SHA-256 for audits.
* [ ] Backward-compat path: migrate legacy verdicts by re-canonicalizing once, store “old_hash → new_hash” map.

---

If you want, I can wrap this into a tiny **`StellaOps.Canonicalizer`** NuGet (net10.0) and a Git pre-commit hook + CI check so your agents and services can’t drift.

@@ -0,0 +1,108 @@

Here’s a practical blueprint for linking what you *build* to what actually *runs*, and turning that into proof-grade security decisions.

# Static → Binary braid (build-time proof of “what functions are inside”)

**Goal:** Prove exactly which functions/offsets shipped in an artifact, without exposing full source.

* **What to store (per artifact):**

  * Minimal call-stack “entry→sink” traces for relevant code paths (e.g., public handlers → sensitive sinks).
  * Symbol map concordance: `{ function, file, address-range, Build-ID, debug-id }`.
  * Hashes per function-range (e.g., rolling BLAKE3 over `.text` subranges), plus overall `.text`/`.rodata` digests.
* **How to generate:**

  * During build, emit:

    * ELF/PE/Mach-O: capture Build-ID, section ranges, and DWARF/CodeView ↔ symbol table mapping.
    * Function-range hashing: disassemble to find prologue/epilogue (fallback to symbol boundaries), hash byte ranges.
    * Entry→sink traces: from static CFG or unit/integration tests with instrumentation; serialize as compact spans (start fn, end fn, edge list hash).
* **Proof object (tiny & replayable):**

  * `{ build_id, section_hashes, [ {func: name, addr: start..end, func_hash}, … ], [trace_hashes] }`
  * Sign with DSSE (in-toto envelope). Auditors can replay using the published Build-ID + debug symbols to verify function boundaries without your source.
* **Attach & ship:**

  * Publish as an OCI referrers artifact alongside the image (e.g., `application/vnd.stellaops.funcproof+json`), referenced from SBOM (CycloneDX `evidence` or SPDX `verificationCode` extension).
* **Why it matters:**

  * When a CVE names a *symbol* (not just a package version), you can prove whether that symbol (and exact byte-range) is present in your binary.
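
The function-range hashing step above can be sketched as below. Several things here are assumptions rather than part of the design: SHA-256 is swapped in for BLAKE3 because it ships with the .NET base library, ranges are given as offsets into an in-memory copy of `.text` (not virtual addresses), and `FuncRange` and `FuncRangeHasher` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;

// Hypothetical input: a symbol and its byte range, as offsets into the .text bytes.
public sealed record FuncRange(string Symbol, int Start, int EndExclusive);

public static class FuncRangeHasher
{
    // Hashes each function's byte range; output order is fixed by (start offset, symbol).
    public static List<(string Symbol, string Hash)> HashRanges(
        byte[] text, IEnumerable<FuncRange> ranges)
    {
        var result = new List<(string Symbol, string Hash)>();
        foreach (var r in ranges.OrderBy(x => x.Start)
                                .ThenBy(x => x.Symbol, StringComparer.Ordinal))
        {
            if (r.Start < 0 || r.EndExclusive > text.Length || r.Start >= r.EndExclusive)
                throw new ArgumentOutOfRangeException(nameof(ranges), $"Invalid range for {r.Symbol}");

            ReadOnlySpan<byte> slice = text.AsSpan(r.Start, r.EndExclusive - r.Start);
            string hash = Convert.ToHexString(SHA256.HashData(slice)).ToLowerInvariant();
            result.Add((r.Symbol, hash));
        }
        return result;
    }
}
```

An auditor holding the same binary and symbol boundaries can recompute these hashes and compare them against the signed proof object, without ever seeing the source.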

# Runtime → Build braid (production proof of “what code ran”)

**Goal:** Observe live stacks (cheaply), canonicalize to symbols, and correlate to SBOM components. If a vulnerable symbol appears *in hot paths*, automatically downgrade VEX posture.

* **Collection (Linux):**

  * eBPF sampling for targeted processes/containers; use `bpf_get_stackid` to record stack traces (user & kernel) in a `BPF_MAP_TYPE_STACK_TRACE` map with low overhead.
  * Collapse stacks (“frameA;frameB;… count”) à la flamegraph format; include PID, container image digest, Build-ID tuples.
* **Canonicalization:**

  * Resolve PCs → (Build-ID, function, offset) via `perf-map-agent`/`eu-stack`, or your own resolver using `.note.gnu.build-id` + symbol table (prefer `debuginfod` in lab; ship a slim symbol cache in prod).
  * Normalize language runtimes: Java/.NET/Python frames mapped to package+symbol via runtime metadata; native frames via ELF.
* **Correlate to SBOM:**

  * For each frame: map `(image-digest, Build-ID, function)` → SBOM component (pkg + version) and to your **Static→Binary proof** entry.
* **VEX policy reaction:**

  * If a CVE’s vulnerable symbol appears in observed stacks **and** matches your static proof:

    * Auto-emit a **VEX downgrade** (e.g., from `not_affected` to `affected`) with DSSE signatures, including runtime evidence:

      * Top stacks where the symbol was hot (counts/percentile),
      * Build-ID(s) observed,
      * Timestamp window and container IDs.
  * If symbol is present in build but never observed (and policy allows), maintain or upgrade to `not_affected(conditions: not_reachable_at_runtime)`, with time-boxed confidence.
* **Controls & SLOs:**

  * Sampling budget per workload (e.g., 49 Hz for N minutes per hour), P99 overhead <1%.
  * Privacy guardrails: hash short-lived arguments; only persist canonical frames + counts.

# How this lands in Stella Ops (concrete modules & evidence flow)

* **Sbomer**: add `funcproof` generator at build (ELF range hashing + entry→sink traces). Emit CycloneDX `components.evidence` link to funcproof artifact.
* **Attestor**: wrap funcproof in DSSE, push as OCI referrer; record in Proof-of-Integrity Graph.
* **Signals/Excititor**: eBPF sampler daemonset; push collapsed frames with `(image-digest, Build-ID)` to pipeline.
* **Concelier**: resolver service mapping frames → SBOM components + funcproof presence; maintain hot-symbol index.
* **Vexer/Policy Engine**: when hot vulnerable symbol is confirmed, produce signed VEX downgrade; route to **Authority** for policy-gated actions (quarantine, canary freeze, diff-aware release gate).
* **Timeline/Notify**: human-readable evidence pack: “CVE-2025-XXXX observed in `libfoo::parse_hdr` (Build-ID abc…), 17.3% of CPU in api-gw@prod between 12:00–14:00 UTC; VEX → affected.”

# Data shapes (keep them tiny)

* **FuncProof JSON (per binary):**

  ```json
  {
    "buildId": "ab12…",
    "sections": {".text": "hash", ".rodata": "hash"},
    "functions": [
      {"sym": "foo::bar", "start": "0x401120", "end": "0x4013af", "hash": "…"}
    ],
    "traces": ["hash(edge-list-1)", "hash(edge-list-2)"],
    "meta": {"compiler": "clang-18", "flags": "-O2 -fno-plt"}
  }
  ```
* **Runtime frame sample (collapsed):**

  ```
  api-gw@sha256:…;buildid=ab12…;foo::bar+0x3a;net/http::Serve;… 97
  ```
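
To make the “hot symbol” check concrete, here is a minimal sketch that reads collapsed stack lines in the shape shown above and computes the share of samples whose stack contains a given symbol. The parsing rules are assumptions about that format (frames separated by `;`, sample count after the last space, offsets written as `+0x…`), and `HotSymbolSketch` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

public static class HotSymbolSketch
{
    // Splits "frameA;frameB;... count" into frames and the trailing sample count.
    public static (string[] Frames, long Count) ParseLine(string line)
    {
        int sep = line.LastIndexOf(' ');
        if (sep < 0) throw new FormatException("Missing sample count.");
        long count = long.Parse(line.Substring(sep + 1), CultureInfo.InvariantCulture);
        return (line.Substring(0, sep).Split(';'), count);
    }

    // Drops a trailing "+0x..." offset so "foo::bar+0x3a" compares equal to "foo::bar".
    public static string StripOffset(string frame)
    {
        int i = frame.LastIndexOf("+0x", StringComparison.Ordinal);
        return i < 0 ? frame : frame.Substring(0, i);
    }

    // Fraction of samples whose stack contains the symbol, rounded to 4 places.
    public static decimal SampleShare(IEnumerable<string> collapsedLines, string symbol)
    {
        long total = 0, hits = 0;
        foreach (string line in collapsedLines)
        {
            var (frames, count) = ParseLine(line);
            total += count;
            if (frames.Any(f => StripOffset(f) == symbol)) hits += count;
        }
        return total == 0 ? 0m : Math.Round((decimal)hits / total, 4, MidpointRounding.ToEven);
    }
}
```

A policy could compare the returned share against a threshold before emitting a VEX change. The threshold itself would live in the policy bundle rather than in code.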

# Rollout plan (short and sweet)

1. **Phase 1: Build plumbing.** Implement function-range hashing + DSSE attestation; publish as OCI referrer; link from SBOM.
2. **Phase 2: Runtime sampler.** Ship eBPF agent with stack collapse + Build-ID resolution; store only canonical frames.
3. **Phase 3: Correlation & VEX.** Map frames ↔ SBOM ↔ funcproof; auto-downgrade VEX on hot vulnerable symbols; wire policy actions.
4. **Phase 4: Auditor replay.** `stella verify --image X` downloads funcproof + symbols and replays hashes and traces to prove presence/absence without source.

# Why this is a moat

* **Symbol-level truth**, not just package versions.
* **Runtime-aware VEX** that flips based on evidence, not assumptions.
* **Tiny proof objects** make audits fast and air-gap-friendly.
* **Deterministic replay**: “same inputs → same verdict,” signed.

If you want, I can draft:

* the DSSE schemas,
* the eBPF sampler config for Alpine/Debian/RHEL/SLES/Astra,
* and the exact CycloneDX/SPDX extensions to carry `funcproof` links.

@@ -0,0 +1,123 @@

Here’s a simple, practical way to make **release gates** that auto-decide if a build is “routine” or “risky” by comparing the *semantic delta* across SBOMs, VEX data, and dependency graphs, so product managers can approve (or defer) with evidence, not guesswork.

### What this means (quick background)

* **SBOM**: a bill of materials for your build (what components you ship).
* **VEX**: vendor statements about whether known CVEs actually affect a product/version.
* **Dependency graph**: how components link together at build/runtime.
* **Semantic delta**: not just “files changed,” but “risk-relevant meaning changed” (e.g., new reachable vuln path, new privileged capability, downgraded VEX confidence).

---

### The gate’s core signal (one line)

**Risk Verdict = f(ΔSBOM, ΔReachability, ΔVEX, ΔConfig/Capabilities, ΔExploitability)** → Routine | Review | Block

---

### Minimal data you need per release

* **SBOM (CycloneDX/SPDX)** for previous vs current release.
* **Reachability subgraph**: which vulnerable symbols/paths are actually callable (source, package, binary, or eBPF/runtime).
* **VEX claims** merged from vendors/distros/internal (with trust scores).
* **Policy knobs**: env tier (prod vs dev), allowed unknowns, max risk budget, critical assets list.
* **Exploit context**: EPSS/CISA KEV or your internal exploit sighting, if available.

---

### How to compute the semantic delta (fast path)

1. **Component delta**: new/removed/updated packages → tag each change with severity (critical/security-relevant vs cosmetic).
2. **Vulnerability delta**:

   * New CVEs introduced?
   * Old CVEs now mitigated (patch/backport) or declared **not-affected** via VEX?
   * Any VEX status regressions (e.g., “not-affected” → “under-investigation”)?
3. **Reachability delta**:

   * Any *new* call-paths to vulnerable functions?
   * Any risk removed (path eliminated via config/feature-flag/off by default)?
4. **Config/capabilities delta**:

   * New container perms (NET_ADMIN, SYS_ADMIN), new open ports, new outbound calls.
   * New data flows to sensitive stores.
5. **Exploitability delta**:

   * EPSS/KEV jumps; active exploitation signals.

---

### A tiny, useful scoring rubric (defaults you can ship)

* Start at 0. Add:

  * +6 if any **reachable** critical vuln (no valid VEX “not-affected”).
  * +4 if any **reachable** high vuln.
  * +3 if new sensitive capability added (e.g., NET_ADMIN) or new public port opened.
  * +2 if VEX status regressed (not-affected → under-investigation or affected).
  * +1 per unknown package origin or unsigned artifact (cap at +5).
* Subtract:

  * −3 per *proven* mitigation (valid VEX not-affected with trusted source + reachability proof).
  * −2 if vulnerable path is demonstrably gated off in target env (feature flag off + policy evidence).
* Verdict:

  * **0–3** → Routine (auto-approve)
  * **4–7** → Review (PM/Eng sign-off)
  * **≥8** → Block (require remediation/exception)

*(Tune thresholds per env: e.g., prod stricter than staging.)*
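
The rubric translates almost directly into code. The sketch below uses hypothetical names (`DeltaSignals`, `GateRubric`, `GateVerdict`) and makes one assumption the rubric does not state: a score that would go negative after mitigations is floored at 0, so it falls into the Routine band.

```csharp
using System;

public enum GateVerdict { Routine, Review, Block }

// Hypothetical input shape (assumption): one flag or counter per rubric line.
public sealed record DeltaSignals(
    bool ReachableCritical,
    bool ReachableHigh,
    bool NewSensitiveCapabilityOrPort,
    bool VexRegressed,
    int UnknownOrUnsignedCount,
    int ProvenMitigations,
    bool GatedOffInTargetEnv);

public static class GateRubric
{
    public static int Score(DeltaSignals s)
    {
        int score = 0;
        if (s.ReachableCritical) score += 6;
        if (s.ReachableHigh) score += 4;
        if (s.NewSensitiveCapabilityOrPort) score += 3;
        if (s.VexRegressed) score += 2;
        score += Math.Min(s.UnknownOrUnsignedCount, 5); // +1 each, capped at +5
        score -= 3 * s.ProvenMitigations;               // -3 per proven mitigation
        if (s.GatedOffInTargetEnv) score -= 2;
        return Math.Max(score, 0);                      // assumption: floor at 0
    }

    // Default thresholds from the rubric: 0-3 Routine, 4-7 Review, 8+ Block.
    public static GateVerdict Decide(int score) => score switch
    {
        >= 8 => GateVerdict.Block,
        >= 4 => GateVerdict.Review,
        _ => GateVerdict.Routine,
    };
}
```

For example, a build with a reachable critical vulnerability and a newly added capability scores 6 + 3 = 9 and is blocked, while a reachable high vulnerability offset by one proven mitigation scores 4 − 3 = 1 and passes as routine.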

---

### What PMs see (clean UI)

* **Badge**: Routine / Review / Block.
* **Why** (3–5 bullets):

  * “Added `libpng` 1.6.43 (new), CVE-XXXX reachable via `DecodePng()`”
  * “Vendor VEX for `libssl` says not-affected (function not built)”
  * “Container gained `CAP_NET_RAW`”
* **Evidence buttons**:

  * “Show reachability slice” (mini graph)
  * “Show VEX sources + trust”
  * “Show SBOM diff”
* **Call to action**:

  * “Auto-remediate to 1.6.44” / “Mark exception” / “Open fix PR”

---

### Exception workflow (auditable)

* Exception must include: scope, expiry, compensating controls, owner, and linked evidence (reachability/VEX).
* Gate re-evaluates each release; expired exceptions auto-fail the gate.

---

### How to wire it into CI/CD (quick recipe)

1. Generate SBOM + reachability slice for `prev` and `curr`.
2. Merge VEX from vendor/distro/internal with trust scoring.
3. Run **Delta Evaluator** → score + verdict + evidence bundle (JSON + attestation).
4. Gate policy checks score vs environment thresholds.
5. Publish an **OCI-attached attestation** (DSSE/in-toto) so auditors can replay: *same inputs → same verdict*.

---

### Starter policy you can copy

* **Prod**: block on any reachable Critical; review on any reachable High; unknowns ≤ 2; no new privileged caps without exception.
* **Pre-prod**: review on reachable High/Critical; unknowns ≤ 5.
* **Dev**: allow but flag; collect evidence.

---

### Why this helps immediately

* PMs get **evidence-backed** green/yellow/red, not CVE walls.
* Engineers get **actionable deltas** (what changed that matters).
* Auditors get **replayable proofs** (deterministic verdicts + inputs).

If you want, I can turn this into a ready-to-drop spec for Stella Ops (modules, JSON schemas, attestation format, and a tiny React panel mock) so your team can implement the gate this sprint.

@@ -0,0 +1,67 @@

|

|||||||

|

Here’s a practical, low‑friction way to modernize how you sign and verify build “verdicts” in CI/CD using Sigstore—no long‑lived keys, offline‑friendly, and easy to audit.

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### 1) Use **keyless** signing in CI

|

||||||

|

|

||||||

|

* In your pipeline, obtain an OIDC token (from your CI runner) and let **Fulcio** issue a short‑lived X.509 code‑signing cert (~10 minutes). You sign with the ephemeral key; cert + signature are logged to Rekor. ([Sigstore Blog][1])

|

||||||

|

|

||||||

|

**Why:** no key escrow in CI, nothing persistent to steal, and every signature is time‑bound + transparency‑logged.

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### 2) Keep one **hardware‑backed org key** only for special cases

|

||||||

|

|

||||||

|

* Reserve a physical HSM/YubiKey (or KMS) key for:

|

||||||

|

a) re‑signing monthly bundles (see §4), and

|

||||||

|

b) offline/air‑gapped verification workflows where a trust anchor is needed.

|

||||||

|

Cosign supports disconnected/offline verification patterns and mirroring the proof data. ([Sigstore][2])

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### 3) Make “verdicts” first‑class OCI attestations

|

||||||

|

|

||||||

|

* Emit DSSE/attestations (SBOM deltas, reachability graphs, policy results) as OCI‑attached artifacts and sign them with keyless in CI. (Cosign is designed to sign/verify arbitrary OCI artifacts alongside images.) ([Artifact Hub][3])

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### 4) Publish a **rotation & refresh policy**

|

||||||

|

|

||||||

|

* Every month, collect older attestations and **re‑sign into a long‑lived “bundle”** (plus timestamps) using the org key. This keeps proofs verifiable over years—even if the 10‑minute certs expire—because the bundle contains the cert chain, Rekor inclusion proof, and timestamps suitable for **offline** verification. ([Trustification][4])

|

||||||

|

|

||||||

|

**Suggested SLOs**

|

||||||

|

|

||||||

|

* CI keyless cert TTL: 10 minutes (Fulcio default). ([Sigstore][5])

|

||||||

|

* Bundle cadence: monthly (or per release); retain N=24 months.

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### 5) Offline / air‑gapped verification

|

||||||

|

|

||||||

|

* Mirror the image + attestation + Rekor proof (or bundle) into the disconnected registry. Verify with `cosign verify` using the mirrored materials—no internet needed. (Multiple guides show fully disconnected OpenShift/air‑gapped flows.) ([Red Hat Developer][6])

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### 6) Address common concerns (“myths”)

|

||||||

|

|

||||||

|

* “Short‑lived certs will break verification later.” → They don’t: you verify against the Rekor proof/bundle, not live cert validity. ([Trustification][4])

|

||||||

|

* “Keyless means less security.” → The opposite: no static secrets in CI; certs expire in ~10 minutes; identity bound via OIDC and logged. ([Chainguard][7])

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### Minimal rollout checklist

|

||||||

|

|

||||||

|

* [ ] Enable OIDC on your CI runners; test `cosign sign --identity-token ...`

|

||||||

|

* [ ] Enforce identity/issuer in policy: `--certificate-identity` + `--certificate-oidc-issuer` at verify time. ([Sigstore][2])

|

||||||

|

* [ ] Set up a monthly job to build **Sigstore bundles** from past attestations and re‑sign with the org key. ([Trustification][4])

|

||||||

|

* [ ] For offline sites: mirror images + attestations + bundles; verify with `cosign verify` entirely offline. ([Red Hat Developer][6])
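
As a rough illustration of the "enforce identity/issuer" item, the helper below assembles the argument list for a `cosign verify` call pinned to one identity and one issuer. Only the two flags named in the checklist are used; the helper name is hypothetical, and the identity and issuer values in the usage note are placeholders.

```python
def cosign_verify_args(image_ref: str, identity: str, issuer: str) -> list[str]:
    """Build the argv for a keyless `cosign verify` pinned to one identity and issuer."""
    # Refuse to build a command that would skip either check.
    if not identity or not issuer:
        raise ValueError("identity and issuer must both be set")
    return [
        "cosign", "verify",
        "--certificate-identity", identity,
        "--certificate-oidc-issuer", issuer,
        image_ref,
    ]
```

A gate script could pass the result to `subprocess.run(args, check=True)`, for example with `identity="https://ci.example.internal/pipelines/release"` and `issuer="https://oidc.example.internal"` (both made‑up values), and fail the pipeline on a non‑zero exit.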

|

||||||

|

|

||||||

|

Want me to draft the exact cosign commands and a GitLab/GitHub Actions snippet for your Stella Ops pipelines (keyless sign, verify gates, monthly bundling, and an offline verification playbook)?

|

||||||

|

|

||||||

|

[1]: https://blog.sigstore.dev/trusted-time/?utm_source=chatgpt.com "Trusted Time in Sigstore"

|

||||||

|

[2]: https://docs.sigstore.dev/cosign/verifying/verify/?utm_source=chatgpt.com "Verifying Signatures - Cosign"

|

||||||

|

[3]: https://artifacthub.io/packages/container/cosign/cosign/latest?utm_source=chatgpt.com "cosign latest · sigstore/cosign"

|

||||||

|

[4]: https://trustification.io/blog/?utm_source=chatgpt.com "Blog"

|

||||||

|

[5]: https://docs.sigstore.dev/certificate_authority/overview/?utm_source=chatgpt.com "Fulcio"

|

||||||

|

[6]: https://developers.redhat.com/articles/2025/08/27/how-verify-container-signatures-disconnected-openshift?utm_source=chatgpt.com "How to verify container signatures in disconnected OpenShift"

|

||||||

|

[7]: https://www.chainguard.dev/unchained/life-of-a-sigstore-signature?utm_source=chatgpt.com "Life of a Sigstore signature"

|

||||||

@@ -0,0 +1,61 @@

|

|||||||

|

I’m sharing this with you because your Stella Ops vision for vulnerability triage and supply‑chain context beats what many current tools actually deliver — and the differences highlight exactly where to push hard to out‑execute the incumbents.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

**Where competitors actually land today**

|

||||||

|

|

||||||

|

**Snyk — reachability + continuous context**

|

||||||

|

|

||||||

|

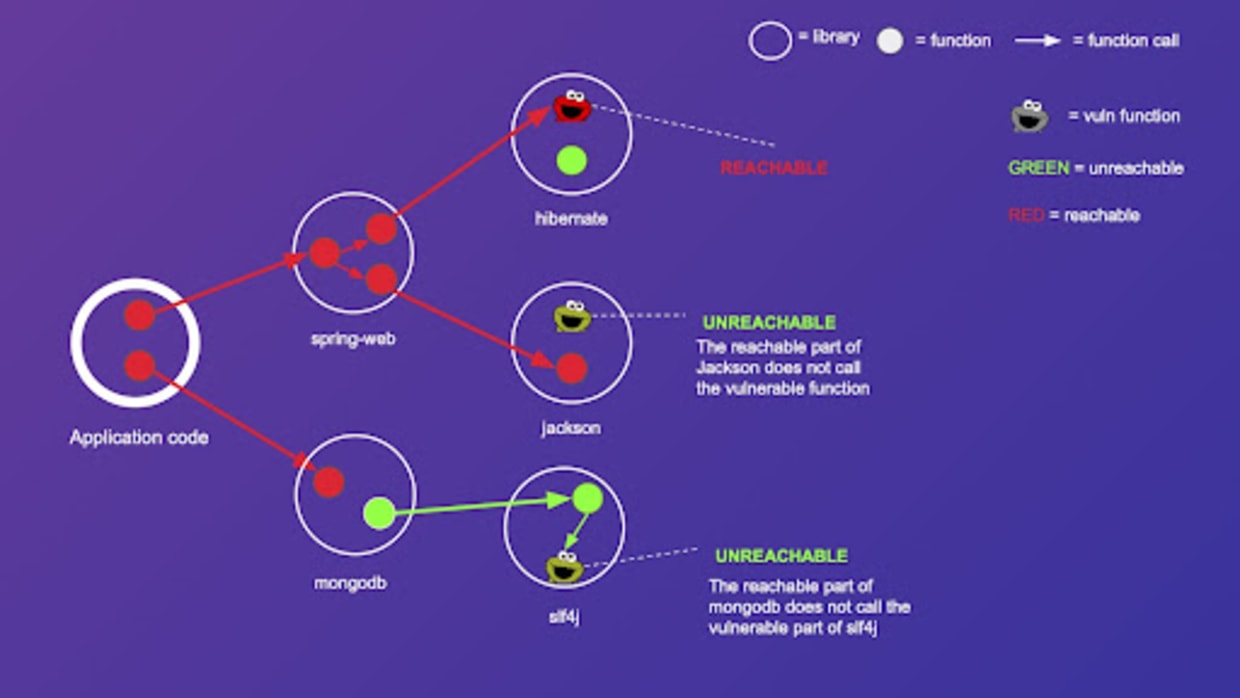

* Snyk now implements *reachability analysis* that builds a call graph to determine if vulnerable code *is actually reachable by your application*. This is factored into their risk and priority scores to help teams triage what matters most, beyond just severity numbers. ([Snyk Docs][1])

|

||||||

|

* Their model uses static program analysis combined with AI and expert curation for prioritization. ([Snyk Docs][1])

|

||||||

|

* For ongoing monitoring, Snyk *tracks issues over time* as projects are monitored and rescanned (e.g., via CLI or integrations), updating status as new CVEs are disclosed — without needing to re‑pull unchanged images. ([Snyk Docs][1])

|

||||||

|

|

||||||

|

**Anchore — vulnerability annotations & VEX export**

|

||||||

|

|

||||||

|

* Anchore Enterprise has shipped *vulnerability annotation workflows* where users or automation can label each finding with context (“not applicable”, “mitigated”, “under investigation”, etc.) via UI or API. ([Anchore Documentation][2])

|

||||||

|

* These annotations are exportable as *OpenVEX and CycloneDX VEX* formats so downstream consumers can consume authoritative exploitability state instead of raw scanner noise. ([Anchore][3])

|

||||||

|

* This means Anchore customers can generate SBOM + VEX outputs that carry their curated reasoning, reducing redundant triage across the supply chain.

|

||||||

|

|

||||||

|

**Prisma Cloud — runtime defense**

|

||||||

|

|

||||||

|

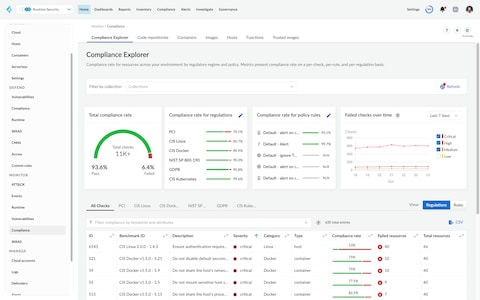

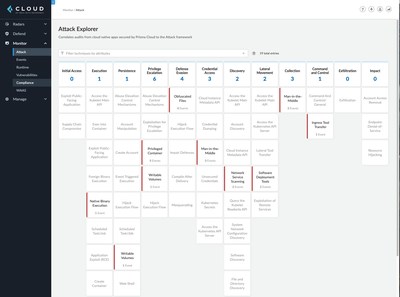

* Prisma Cloud’s *runtime defense* for containers continually profiles behavior and enforces *process, file, and network rules* for running workloads — using learning models to baseline expected behavior and block/alert on anomalies. ([Prisma Cloud][4])

|

||||||

|

* This gives security teams *runtime context* during operational incidents, not just pre‑deployment scan results — which can improve triage but is conceptually orthogonal to static SBOM/VEX artifacts.

|

||||||

|

|

||||||

|

**What Stella Ops should out‑execute**

|

||||||

|

|

||||||

|

Instead of disparate insights, Stella Ops can unify and elevate:

|

||||||

|

|

||||||

|

1. **One triage canvas with rich evidence**

|

||||||

|

|

||||||

|

* Combine static *reachability/evidence graphs* with call stacks and evidence traces — so users see *why* a finding matters, not just “reachable vs. not”.

|

||||||

|

* If you build this as a subgraph panel, teams can trace from SBOM → code paths → runtime indicators.

|

||||||

|

|

||||||

|

2. **VEX decisioning as first‑class**

|

||||||

|

|

||||||

|

* Treat VEX not as an export format but as *core policy objects*: policies that can *explain*, *override*, and *drive decisions*.

|

||||||

|

* This includes programmable policy rules driving whether something is actionable or suppressed in a given context — surfacing context alongside triage.
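
To make "VEX as a policy object" concrete, here is a small sketch of a rule that combines a VEX status with a reachability flag and returns both an outcome and its reason. The status strings follow the OpenVEX vocabulary (`not_affected`, `affected`, `fixed`, `under_investigation`); the `Decision` type, the outcome names, and the rule ordering are assumptions made for illustration, not Stella Ops behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    outcome: str  # "actionable", "suppressed", or "needs_review"
    reason: str   # human-readable explanation shown next to the finding


def decide(vex_status: Optional[str], reachable: bool) -> Decision:
    """Map a VEX status plus reachability to a triage outcome with an explanation."""
    if vex_status in ("not_affected", "fixed"):
        return Decision("suppressed", f"VEX status is {vex_status}")
    if vex_status == "under_investigation":
        return Decision("needs_review", "VEX status is under_investigation")
    if vex_status == "affected":
        return Decision("actionable", "VEX status is affected")
    # No VEX statement available: fall back to reachability evidence.
    if not reachable:
        return Decision("suppressed", "no reachable path to vulnerable code")
    return Decision("actionable", "reachable and not ruled out by VEX")
```

Because the function is pure and returns its reason, the same inputs always yield the same explained outcome, which is what lets the decision be replayed later.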

|

||||||

|

|

||||||

|

3. **Attestable exception objects**

|

||||||

|

|

||||||

|

* Model exceptions as *attestable contracts* with *expiries and audit trails* — not ad‑hoc labels. These become first‑class artifacts that can be cryptographically attested, shared, and verified across orgs.
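
One possible shape for such an exception object is sketched below: a frozen record with an expiry, serialized to canonical JSON so that its digest is stable and can be handed to whatever signing step is in use. The field names and the `ExceptionContract` type are hypothetical, and signing itself is out of scope here.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import date


@dataclass(frozen=True)
class ExceptionContract:
    finding_id: str     # e.g. a CVE plus component identifier
    justification: str
    approved_by: str
    expires_on: date

    def canonical_bytes(self) -> bytes:
        """Serialize with sorted keys and no whitespace so the bytes are reproducible."""
        body = asdict(self)
        body["expires_on"] = self.expires_on.isoformat()
        return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")

    def digest(self) -> str:
        """Content address of the exception; this is the value a signer would attest."""
        return "sha256:" + hashlib.sha256(self.canonical_bytes()).hexdigest()

    def is_active(self, on: date) -> bool:
        """True while the exception has not expired (expiry day inclusive)."""
        return on <= self.expires_on
```

Passing the evaluation date into `is_active` rather than reading the clock keeps the check replayable for audits.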

|

||||||

|

|

||||||

|

4. **Offline replay packs for air‑gapped parity**

|

||||||

|

|

||||||

|

* Build *offline replay packs* so the *same UI, interactions, and decisions* work identically in fully air‑gapped environments.

|

||||||

|

* This is critical for compliance/defense customers who cannot connect to external feeds but still need consistent triage and reasoning workflows.

|

||||||

|

|

||||||

|

In short, competitors give you pieces — reachability scores, VEX exports, or behavioral runtime signals — but Stella Ops can unify these into *a single, evidence‑rich, policy‑driven triage experience that works both online and offline*. You already have the architecture to do it; now it’s about integrating these signals into a coherent decision surface that beats siloed tools.

|

||||||

|

|

||||||

|

[1]: https://docs.snyk.io/manage-risk/prioritize-issues-for-fixing/reachability-analysis?utm_source=chatgpt.com "Reachability analysis | Snyk User Docs"

|

||||||

|

[2]: https://docs.anchore.com/current/docs/vulnerability_management/vuln_annotations/?utm_source=chatgpt.com "Vulnerability Annotations and VEX"

|

||||||

|

[3]: https://anchore.com/blog/anchore-enterprise-5-23-cyclonedx-vex-and-vdr-support/?utm_source=chatgpt.com "Anchore Enterprise 5.23: CycloneDX VEX and VDR Support"

|

||||||

|

[4]: https://docs.prismacloud.io/en/compute-edition/30/admin-guide/runtime-defense/runtime-defense-containers?utm_source=chatgpt.com "Runtime defense for containers - Prisma Cloud Documentation"

|

||||||

|

|

||||||

|

--

|

||||||

|

Note from the product manager: there is an AdvisoryAI module in the Stella Ops suite.

|

||||||

@@ -0,0 +1,56 @@

|

|||||||

|

Here’s a simple, high‑leverage UX pattern you can borrow from top observability tools: **treat every policy decision or reachability change as a visual diff.**

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### Why this helps

|

||||||

|

|

||||||

|

* Turns opaque “why is this verdict different?” moments into **quick, explainable triage**.

|

||||||

|

* Reduces back‑and‑forth between Security, Dev, and Audit—**everyone sees the same before/after evidence**.

|

||||||

|

|

||||||

|

### Core UI concept

|

||||||

|

|

||||||

|

* **Side‑by‑side panes**: **Before** (previous scan/policy) vs **After** (current).

|

||||||

|

* **Graph focus**: show the dependency/reachability subgraph; **highlight added/removed/changed nodes/edges**.

|

||||||

|

* **Evidence strip** (right rail): human‑readable facts used by the engine (e.g., *feature flag OFF*, *code path unreachable*, *kernel eBPF trace absent*).

|

||||||

|

* **Diff verdict header**: “Risk ↓ from *Medium → Low* (policy v1.8 → v1.9)”.

|

||||||

|

* **Filter chips**: Scope by component, package, CVE, policy rule, environment.

|

||||||

|

|

||||||

|

### Minimal data model (so UI is easy)

|

||||||

|

|

||||||

|

* `GraphSnapshot`: nodes, edges, metadata (component, version, tags).

|

||||||

|

* `PolicySnapshot`: version, rules hash, inputs (flags, env, VEX sources).

|

||||||

|

* `Delta`: `added/removed/changed` for nodes, edges, and rule outcomes.

|

||||||

|

* `EvidenceItems[]`: typed facts (trace hits, SBOM lines, VEX claims, config values) with source + timestamp.

|

||||||

|

* `SignedDeltaVerdict`: final status + signatures (who/what produced it).
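
The data model above can be sketched as a pair of snapshots and a delta builder. This is a simplified illustration that assumes nodes are keyed by a string id with a metadata dict and edges are `(from, to)` pairs; rule outcomes, evidence items, and signatures are left out, and the field names are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GraphSnapshot:
    nodes: dict[str, dict]              # node id -> metadata (component, version, tags)
    edges: frozenset[tuple[str, str]]   # directed (from, to) pairs


@dataclass(frozen=True)
class Delta:
    added_nodes: list[str]
    removed_nodes: list[str]
    changed_nodes: list[str]
    added_edges: list[tuple[str, str]]
    removed_edges: list[tuple[str, str]]


def build_delta(before: GraphSnapshot, after: GraphSnapshot) -> Delta:
    """Compare two snapshots; every list is sorted so the delta is reproducible."""
    b, a = before.nodes, after.nodes
    return Delta(
        added_nodes=sorted(a.keys() - b.keys()),
        removed_nodes=sorted(b.keys() - a.keys()),
        # A node counts as changed when it exists in both snapshots with different metadata.
        changed_nodes=sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
        added_edges=sorted(after.edges - before.edges),
        removed_edges=sorted(before.edges - after.edges),
    )
```

The UI would then only need to style nodes and edges by which list they fall into.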

|

||||||

|

|

||||||

|

### Micro‑interactions that matter

|

||||||

|

|

||||||

|

* Hover a changed node ⇒ **inline badge** explaining *why it changed* (e.g., “now gated by `--no-xml` runtime flag”).

|

||||||

|

* Click a rule change in the right rail ⇒ **spotlight** the exact subgraph it affected.

|

||||||

|

* Toggle **“explain like I’m new”** ⇒ expands jargon into plain language.

|

||||||

|

* One‑click **“copy audit bundle”** ⇒ exports the delta + evidence as an attachment.

|

||||||

|

|

||||||

|

### Where this belongs in your product

|

||||||

|

|

||||||

|

* **Primary**: in the **Triage** view for any new finding/regression.

|

||||||

|

* **Secondary**: in **Policy history** (compare vX vs vY) and **Release gates** (compare build A vs build B).

|

||||||

|

* **Inline surfaces**: small “diff pills” next to every verdict in tables; click opens the big side‑by‑side.

|

||||||

|

|

||||||

|

### Quick build checklist (dev & PM)

|

||||||

|

|

||||||

|

* Compute a stable **graph hash** per scan; store **snapshots**.

|

||||||

|

* Add a **delta builder** that outputs `added/removed/changed` at node/edge + rule outcome levels.

|

||||||

|

* Normalize **evidence items** (source, digest, excerpt) so the UI can render consistent cards.

|

||||||

|

* Ship a **Signed Delta Verdict** (OCI‑attached) so audits can replay the view from the artifact alone.

|

||||||

|

* Include **hotkeys**: `1` focus changes only, `2` show full graph, `E` expand evidence, `A` export audit.
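
For the first checklist item, one way to get a stable graph hash is to serialize nodes and edges in sorted order as compact JSON and hash the bytes. The sketch below assumes the same simplified graph shape (string node ids with JSON‑serializable metadata, `(from, to)` edge pairs); the `graph_hash` name and the `sha256:` prefix are illustrative choices.

```python
import hashlib
import json
from typing import Iterable


def graph_hash(nodes: dict[str, dict], edges: Iterable[tuple[str, str]]) -> str:
    """Hash a graph so that insertion order of nodes and edges does not matter."""
    canonical = {
        # Lists of pairs in sorted order, rather than relying on dict ordering.
        "nodes": [[node_id, nodes[node_id]] for node_id in sorted(nodes)],
        "edges": sorted([src, dst] for src, dst in edges),
    }
    payload = json.dumps(canonical, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return "sha256:" + hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Two scans that produce the same graph then share a hash, so the delta builder can skip them without a node‑by‑node comparison.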

|

||||||

|

|

||||||

|

### Empty state & failure modes

|

||||||

|

|

||||||

|

* If evidence is incomplete: show a **yellow “Unknowns present” ribbon** with a count and a button to collect missing traces.

|

||||||

|

* If graphs are huge: default to **“changed neighborhood only”** with a mini‑map to pan.
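
The "changed neighborhood only" default can be approximated by expanding a fixed number of hops outward from the changed nodes. The sketch below treats edges as undirected for display purposes; the function name and the one‑hop default are assumptions.

```python
from collections import defaultdict
from typing import Iterable


def changed_neighborhood(
    edges: Iterable[tuple[str, str]], changed: Iterable[str], hops: int = 1
) -> set[str]:
    """Return the changed nodes plus everything within `hops` edges of them."""
    adjacency: dict[str, set[str]] = defaultdict(set)
    for src, dst in edges:
        adjacency[src].add(dst)
        adjacency[dst].add(src)  # direction is ignored when choosing what to display

    visible = set(changed)
    frontier = set(changed)
    for _ in range(hops):
        # Step one hop outward, keeping only nodes not already visible.
        frontier = {nbr for node in frontier for nbr in adjacency[node]} - visible
        visible |= frontier
    return visible
```

The returned set is what the main pane would render, with the mini‑map still showing the full graph.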

|

||||||

|

|

||||||

|

### Success metric (simple)

|

||||||

|

|

||||||

|

* **Mean time to explain (MTTE)**: time from “why did this change?” to user clicking *“Understood”*. Track trend ↓.
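
Computing MTTE is a plain average over paired events. This sketch assumes each record is an `(opened_at, understood_at)` pair of timestamps in seconds; the event shape and function name are hypothetical.

```python
from statistics import mean


def mean_time_to_explain(events: list[tuple[float, float]]) -> float:
    """Average seconds between opening a diff and clicking "Understood"."""
    # Ignore records whose timestamps are out of order (e.g. clock skew or bad data).
    durations = [understood - opened for opened, understood in events if understood >= opened]
    if not durations:
        raise ValueError("no completed explanations to average")
    return mean(durations)
```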

|

||||||

|

|

||||||

|

If you want, I can sketch a quick wireframe (header, graph panes, evidence rail, and the export action) or generate a JSON schema for the `Delta` and `EvidenceItem` objects you can hand to your frontend.

|

||||||
