feat: Add archived advisories and implement smart-diff as a core evidence primitive

- Introduced new advisory documents for archived superseded advisories, including detailed descriptions of features already implemented or covered by existing sprints.
- Added "Smart-Diff as a Core Evidence Primitive" advisory outlining the treatment of SBOM diffs as first-class evidence objects, enhancing vulnerability verdicts with deterministic replayability.
- Created "Visual Diffs for Explainable Triage" advisory to improve user experience in understanding policy decisions and reachability changes through visual diffs.
- Implemented "Weighted Confidence for VEX Sources" advisory to rank conflicting vulnerability evidence based on freshness and confidence, facilitating better decision-making.
- Established a signer module charter detailing the mission, expectations, key components, and signing modes for cryptographic signing services in StellaOps.
- Consolidated overlapping concepts from triage UI, visual diffs, and risk budget visualization advisories into a unified specification for better clarity and implementation tracking.


@@ -0,0 +1,123 @@

|

||||

Here’s a simple, practical way to make **release gates** that auto‑decide if a build is “routine” or “risky” by comparing the *semantic delta* across SBOMs, VEX data, and dependency graphs—so product managers can approve (or defer) with evidence, not guesswork.

|

||||

|

||||

### What this means (quick background)

|

||||

|

||||

* **SBOM**: a bill of materials for your build (what components you ship).

|

||||

* **VEX**: vendor statements about whether known CVEs actually affect a product/version.

|

||||

* **Dependency graph**: how components link together at build/runtime.

|

||||

* **Semantic delta**: not just “files changed,” but “risk‑relevant meaning changed” (e.g., new reachable vuln path, new privileged capability, downgraded VEX confidence).

|

||||

|

||||

---

|

||||

|

||||

### The gate’s core signal (one line)

|

||||

|

||||

**Risk Verdict = f(ΔSBOM, ΔReachability, ΔVEX, ΔConfig/Capabilities, ΔExploitability)** → Routine | Review | Block

|

||||

|

||||

---

|

||||

|

||||

### Minimal data you need per release

|

||||

|

||||

* **SBOM (CycloneDX/SPDX)** for previous vs current release.

|

||||

* **Reachability subgraph**: which vulnerable symbols/paths are actually callable (source, package, binary, or eBPF/runtime).

|

||||

* **VEX claims** merged from vendors/distros/internal (with trust scores).

|

||||

* **Policy knobs**: env tier (prod vs dev), allowed unknowns, max risk budget, critical assets list.

|

||||

* **Exploit context**: EPSS/CISA KEV or your internal exploit sighting, if available.

|

||||

|

||||

---

|

||||

|

||||

### How to compute the semantic delta (fast path)

|

||||

|

||||

1. **Component delta**: new/removed/updated packages → tag each change with severity (critical/security‑relevant vs cosmetic).

|

||||

2. **Vulnerability delta**:

|

||||

|

||||

* New CVEs introduced?

|

||||

* Old CVEs now mitigated (patch/backport) or declared **not‑affected** via VEX?

|

||||

* Any VEX status regressions (e.g., “not‑affected” → “under‑investigation”)?

|

||||

3. **Reachability delta**:

|

||||

|

||||

* Any *new* call‑paths to vulnerable functions?

|

||||

* Any risk removed (path eliminated via config/feature‑flag/off by default)?

|

||||

4. **Config/capabilities delta**:

|

||||

|

||||

* New container perms (NET_ADMIN, SYS_ADMIN), new open ports, new outbound calls.

|

||||

* New data flows to sensitive stores.

|

||||

5. **Exploitability delta**:

|

||||

|

||||

* EPSS/KEV jumps; active exploitation signals.
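
Step 1 above (the component delta) can be sketched roughly as a comparison of two package-to-version maps. This is a minimal illustration, not the Stella Ops implementation: the function name `component_delta` and the flat `{name: version}` input are assumptions made for this sketch, and a real CycloneDX/SPDX document would have to be parsed into that shape first.

```python
from typing import Dict, List, Tuple


def component_delta(prev: Dict[str, str], curr: Dict[str, str]) -> Dict[str, list]:
    """Compare two {package: version} maps and report added/removed/updated."""
    added = sorted(name for name in curr if name not in prev)
    removed = sorted(name for name in prev if name not in curr)
    # Packages present in both releases whose version string changed.
    updated: List[Tuple[str, str, str]] = sorted(
        (name, prev[name], curr[name])
        for name in curr
        if name in prev and prev[name] != curr[name]
    )
    return {"added": added, "removed": removed, "updated": updated}


# Hypothetical package sets for two releases (illustrative values only).
prev_sbom = {"libssl": "3.0.12", "zlib": "1.3", "libyaml": "0.2.5"}
curr_sbom = {"libssl": "3.0.13", "zlib": "1.3", "libpng": "1.6.43"}

delta = component_delta(prev_sbom, curr_sbom)
print(delta)
```

Each entry in `added` or `updated` would then be tagged as security-relevant or cosmetic before it feeds the later vulnerability and reachability steps.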

|

||||

|

||||

---

|

||||

|

||||

### A tiny, useful scoring rubric (defaults you can ship)

|

||||

|

||||

* Start at 0. Add:

  * +6 if any **reachable** critical vuln (no valid VEX “not‑affected”).
  * +4 if any **reachable** high vuln.
  * +3 if new sensitive capability added (e.g., NET_ADMIN) or new public port opened.
  * +2 if VEX status regressed (“not‑affected” → “under‑investigation” or “affected”).
  * +1 per unknown package origin or unsigned artifact (cap at +5).
* Subtract:

  * −3 per *proven* mitigation (valid VEX “not‑affected” from a trusted source + reachability proof).
  * −2 if vulnerable path is demonstrably gated off in target env (feature flag off + policy evidence).
* Verdict:

  * **0–3** → Routine (auto‑approve)
  * **4–7** → Review (PM/Eng sign‑off)
  * **≥8** → Block (require remediation/exception)

|

||||

|

||||

*(Tune thresholds per env: e.g., prod stricter than staging.)*
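
The rubric above can be sketched in a few lines. This is an illustrative sketch only: the `DeltaSignals` field names and the `risk_score`/`verdict` helpers are hypothetical, and treating any score below the review threshold (including negative scores after subtractions) as Routine is an assumption the rubric does not spell out.

```python
from dataclasses import dataclass


@dataclass
class DeltaSignals:
    """Risk-relevant changes between two releases (field names are illustrative)."""
    reachable_critical: bool = False        # reachable critical vuln, no valid VEX not-affected
    reachable_high: bool = False            # reachable high vuln
    new_sensitive_capability: bool = False  # e.g. NET_ADMIN or a new public port
    vex_regressed: bool = False             # VEX status moved to a worse state
    unknown_or_unsigned: int = 0            # unknown-origin packages / unsigned artifacts
    proven_mitigations: int = 0             # trusted VEX not-affected + reachability proof
    path_gated_off: bool = False            # feature flag off + policy evidence


def risk_score(s: DeltaSignals) -> int:
    """Apply the additive/subtractive rubric to one set of signals."""
    total = 0
    if s.reachable_critical:
        total += 6
    if s.reachable_high:
        total += 4
    if s.new_sensitive_capability:
        total += 3
    if s.vex_regressed:
        total += 2
    total += min(s.unknown_or_unsigned, 5)  # +1 each, capped at +5
    total -= 3 * s.proven_mitigations
    if s.path_gated_off:
        total -= 2
    return total


def verdict(total: int, review_at: int = 4, block_at: int = 8) -> str:
    """Map a score to a verdict; thresholds are tunable per environment."""
    if total >= block_at:
        return "Block"
    if total >= review_at:
        return "Review"
    return "Routine"  # assumption: negative scores also count as Routine


signals = DeltaSignals(reachable_high=True, new_sensitive_capability=True,
                       unknown_or_unsigned=2)
print(risk_score(signals), verdict(risk_score(signals)))
```

A stricter environment could pass lower `review_at`/`block_at` values rather than changing the weights, which keeps scores comparable across environments.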

|

||||

|

||||

---

|

||||

|

||||

### What PMs see (clean UI)

|

||||

|

||||

* **Badge**: Routine / Review / Block.

|

||||

* **Why** (3–5 bullets):

|

||||

|

||||

* “Added `libpng` 1.6.43 (new), CVE‑XXXX reachable via `DecodePng()`”

|

||||

* “Vendor VEX for `libssl` says not‑affected (function not built)”

|

||||

* “Container gained `CAP_NET_RAW`”

|

||||

* **Evidence buttons**:

|

||||

|

||||

* “Show reachability slice” (mini graph)

|

||||

* “Show VEX sources + trust”

|

||||

* “Show SBOM diff”

|

||||

* **Call to action**:

|

||||

|

||||

* “Auto‑remediate to 1.6.44” / “Mark exception” / “Open fix PR”

|

||||

|

||||

---

|

||||

|

||||

### Exception workflow (auditable)

|

||||

|

||||

* Exception must include: scope, expiry, compensating controls, owner, and linked evidence (reachability/VEX).

|

||||

* Gate re‑evaluates each release; expired exceptions auto‑fail the gate.

|

||||

|

||||

---

|

||||

|

||||

### How to wire it into CI/CD (quick recipe)

|

||||

|

||||

1. Generate SBOM + reachability slice for `prev` and `curr`.

|

||||

2. Merge VEX from vendor/distro/internal with trust scoring.

|

||||

3. Run **Delta Evaluator** → score + verdict + evidence bundle (JSON + attestation).

|

||||

4. Gate policy checks score vs environment thresholds.

|

||||

5. Publish an **OCI‑attached attestation** (DSSE/in‑toto) so auditors can replay: *same inputs → same verdict*.

|

||||

|

||||

---

|

||||

|

||||

### Starter policy you can copy

|

||||

|

||||

* **Prod**: block on any reachable Critical; review on any reachable High; unknowns ≤ 2; no new privileged caps without exception.

|

||||

* **Pre‑prod**: review on reachable High/Critical; unknowns ≤ 5.

|

||||

* **Dev**: allow but flag; collect evidence.

|

||||

|

||||

---

|

||||

|

||||

### Why this helps immediately

|

||||

|

||||

* PMs get **evidence‑backed** green/yellow/red, not CVE walls.

|

||||

* Engineers get **actionable deltas** (what changed that matters).

|

||||

* Auditors get **replayable proofs** (deterministic verdicts + inputs).

|

||||

|

||||

If you want, I can turn this into a ready‑to‑drop spec for Stella Ops (modules, JSON schemas, attestation format, and a tiny React panel mock) so your team can implement the gate this sprint.

|

||||

@@ -0,0 +1,61 @@

|

||||

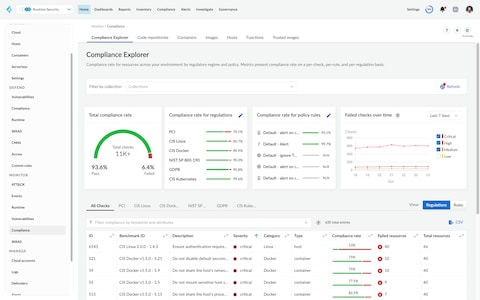

Here’s a tight, practical pattern you can lift for Stella Ops: **make exceptions first‑class, auditable objects** and **gate releases on risk deltas (diff‑aware checks)**—mirroring what top scanners do, but with stronger evidence and auto‑revalidation.

|

||||

|

||||

### 1) Exceptions as auditable objects

|

||||

|

||||

Competitor cues

|

||||

|

||||

* **Snyk** lets users ignore issues with a required reason and optional expiry (UI/CLI; `.snyk` policy). Ignored items can auto‑resurface when a fix exists. ([Snyk User Docs][1])

|

||||

* **Anchore** models **policy allowlists** (named sets of exceptions) applied during evaluation/mapping. ([Anchore Documentation][2])

|

||||

* **Prisma Cloud** supports vulnerability rules/CVE exceptions to soften or block findings. ([Prisma Cloud][3])

|

||||

|

||||

What to ship (Stella Ops)

|

||||

|

||||

* **Exception entity**: `{scope, subject(CVE/pkg/path), reason(text), evidenceRefs[], createdBy, createdAt, expiresAt?, policyBinding, signature}`

|

||||

* **Signed rationale + evidence**: require a justification plus **linked proofs** (attestation IDs, VEX note, reachability subgraph slice). Store as an **OCI‑attached attestation** to the SBOM/VEX artifact.

|

||||

* **Auto‑expiry & revalidation gates**: scheduler re‑tests on expiry or when feeds mark “fix available / EPSS ↑ / reachability ↑”; on failure, **flip gate to “needs re‑review”** and notify.

|

||||

* **Audit view**: timeline of exception lifecycle; show who/why, evidence, and re‑checks; exportable as an “audit pack.”

|

||||

* **Policy hooks**: “allow only if: reason ∧ evidence present ∧ max TTL ≤ X ∧ owner = team‑Y.”

|

||||

* **Inheritance**: repo→image→env scoping with explicit shadowing (surface conflicts).
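
To make the policy hook concrete, here is a minimal sketch of the "allow only if" check combined with expiry. The `ExceptionRecord` class, its `owner` field, and the `exception_allowed` helper are hypothetical names chosen for this example; they loosely mirror the entity sketched above but are not an existing Stella Ops API. Treating a missing expiry as a policy failure is also an assumption.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class ExceptionRecord:
    """Simplified exception object (illustrative subset of the entity fields)."""
    subject: str
    reason: str
    owner: str
    created_at: datetime
    expires_at: Optional[datetime]
    evidence_refs: List[str] = field(default_factory=list)


def exception_allowed(exc: ExceptionRecord, now: datetime,
                      max_ttl: timedelta, required_owner: str) -> bool:
    """Allow only if: reason AND evidence present AND TTL <= max AND owner matches."""
    if not exc.reason.strip():
        return False
    if not exc.evidence_refs:
        return False
    if exc.expires_at is None:
        return False  # assumption: an exception without expiry has unbounded TTL
    if exc.expires_at - exc.created_at > max_ttl:
        return False
    if exc.owner != required_owner:
        return False
    return now < exc.expires_at  # expired exceptions no longer apply


now = datetime(2025, 12, 26, tzinfo=timezone.utc)
exc = ExceptionRecord(
    subject="CVE-2025-1234",
    reason="Feature disabled",
    owner="team-payments",            # hypothetical team name
    created_at=now - timedelta(days=10),
    expires_at=now + timedelta(days=20),
    evidence_refs=["att:sha256:..."],  # elided digest, as in the CLI example
)
print(exception_allowed(exc, now, timedelta(days=30), "team-payments"))
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the check replayable with the same inputs.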

|

||||

|

||||

### 2) Diff‑aware release gates (“delta verdicts”)

|

||||

|

||||

Competitor cues

|

||||

|

||||

* **Snyk PR Checks** scan *changes* and gate merges with a severity threshold; results show issue diffs per PR. ([Snyk User Docs][4])

|

||||

|

||||

What to ship (Stella Ops)

|

||||

|

||||

* **Graph deltas**: on each commit/image, compute `Δ(SBOM graph, reachability graph, VEX claims)`.

|

||||

* **Delta verdict** (signed, replayable): `PASS | WARN | FAIL` + **proof links** to:

|

||||

|

||||

* attestation bundle (in‑toto/DSSE),

|

||||

* **reachability subgraph** showing new execution paths to vulnerable symbols,

|

||||

* policy evaluation trace.

|

||||

* **Side‑by‑side UI**: “before vs after” risks; highlight *newly reachable* vulns and *fixed/mitigated* ones; one‑click **Create Exception** (enforces reason+evidence+TTL).

|

||||

* **Enforcement knobs**: per‑branch/env risk budgets; fail if `unknowns > N` or if any exception lacks evidence/TTL.

|

||||

* **Supply chain scope**: run the same gate on base‑image bumps and dependency updates.

|

||||

|

||||

### Minimal data model (sketch)

|

||||

|

||||

* `Exception`: id, scope, subject, reason, evidenceRefs[], ttl, status, sig.

|

||||

* `DeltaVerdict`: id, baseRef, headRef, changes[], policyOutcome, proofs[], sig.

|

||||

* `Proof`: type(`attestation|reachability|vex|log`), uri, hash.

|

||||

|

||||

### CLI / API ergonomics (examples)

|

||||

|

||||

* `stella exception create --cve CVE-2025-1234 --scope image:repo/app:tag --reason "Feature disabled" --evidence att:sha256:… --ttl 30d`

|

||||

* `stella verify delta --from abc123 --to def456 --policy prod.json --print-proofs`

|

||||

|

||||

### Guardrails out of the box

|

||||

|

||||

* **No silent ignores**: exceptions are visible in results (action changes, not deletion)—same spirit as Anchore. ([Anchore Documentation][2])

|

||||

* **Resurface on fix**: if a fix exists, force re‑review (parity with Snyk behavior). ([Snyk User Docs][1])

|

||||

* **Rule‑based blocking**: allow “hard/soft fail” like Prisma enforcement. ([Prisma Cloud][5])

|

||||

|

||||

If you want, I can turn this into a short product spec (API + UI wireframe + policy snippets) tailored to your Stella Ops modules (Policy Engine, Vexer, Attestor).

|

||||

|

||||

[1]: https://docs.snyk.io/manage-risk/prioritize-issues-for-fixing/ignore-issues?utm_source=chatgpt.com "Ignore issues | Snyk User Docs"

|

||||

[2]: https://docs.anchore.com/current/docs/overview/concepts/policy/policies/?utm_source=chatgpt.com "Policies and Evaluation"

|

||||

[3]: https://docs.prismacloud.io/en/compute-edition/22-12/admin-guide/vulnerability-management/configure-vuln-management-rules?utm_source=chatgpt.com "Vulnerability management rules - Prisma Cloud Documentation"

|

||||

[4]: https://docs.snyk.io/scan-with-snyk/pull-requests/pull-request-checks?utm_source=chatgpt.com "Pull Request checks | Snyk User Docs"

|

||||

[5]: https://docs.prismacloud.io/en/enterprise-edition/content-collections/application-security/risk-management/monitor-and-manage-code-build/enforcement?utm_source=chatgpt.com "Enforcement - Prisma Cloud Documentation"

|

||||

@@ -0,0 +1,71 @@

|

||||

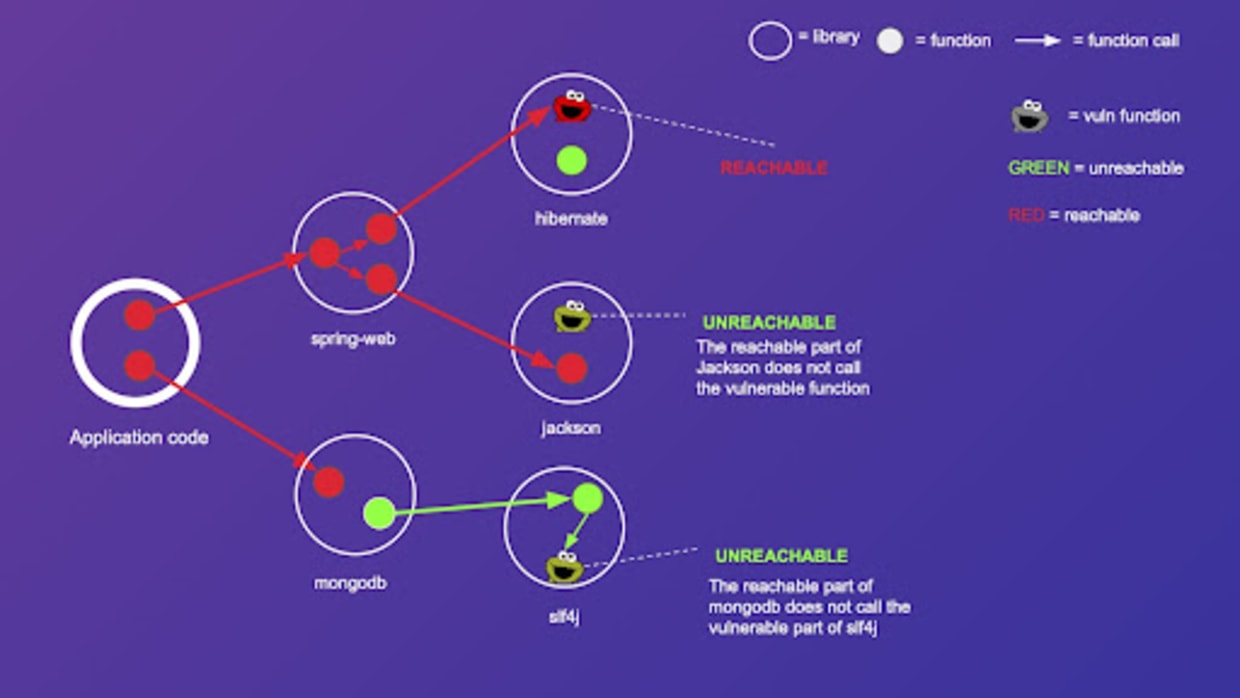

Here’s a crisp way to think about “reachability” that makes triage sane and auditable: **treat it like a cryptographic proof**—a minimal, reproducible chain that shows *why* a vuln can (or cannot) hit runtime.

|

||||

|

||||

### The idea (plain English)

|

||||

|

||||

* **Reachability** asks: “Could data flow from an attacker to the vulnerable code path during real execution?”

|

||||

* **Proof-carrying reachability** says: “Don’t just say yes/no—hand me a *proof chain* I can re-run.”

|

||||

Think: the shortest, lossless breadcrumb trail from entrypoint → sinks, with the exact build + policy context that made it true.

|

||||

|

||||

### What the “proof” contains

|

||||

|

||||

1. **Scope hash**: content digests for artifact(s) (image layers, SBOM nodes, commit IDs, compiler flags).

|

||||

2. **Policy hash**: the decision rules used (e.g., “prod disallows unknowns > 0”; “vendor VEX outranks distro unless backport tag present”).

|

||||

3. **Graph snippet**: the *minimal subgraph* (call/data/control edges) that connects:

|

||||

|

||||

* external entrypoint(s) → user-controlled sources → validators (if any) → vulnerable function(s)/sink(s).

|

||||

4. **Conditions**: feature flags, env vars, platform guards, version ranges, eBPF-observed edges (if present).

|

||||

5. **Verdict** (signed): A → {Affected | Not Affected | Under-Constrained} with reason codes.

|

||||

6. **Replay manifest**: the inputs needed to recompute the same verdict (feeds, rules, versions, hashes).

|

||||

|

||||

### Why this helps

|

||||

|

||||

* **Auditable**: Every “Not Affected” is defensible (no hand-wavy “scanner says so”).

|

||||

* **Deterministic**: Same inputs → same verdict (great for change control and regulators).

|

||||

* **Compact**: You store only the *minimal subgraph*, not the whole monolith.

|

||||

|

||||

### Minimal proof example (sketch)

|

||||

|

||||

* Artifact: `svc.payments:1.4.7` (image digest `sha256:…`)

|

||||

* CVE: `CVE-2024-XYZ` in `libyaml 0.2.5`

|

||||

* Entry: `POST /import`, body → `YamlDeserializer.Parse`

|

||||

* Guards: none (no schema/whitelist prior to parse)

|

||||

* Edge chain: `HttpBody → Parse(bytes) → LoadNode() → vulnerable_path()`

|

||||

* Condition: feature flag `BULK_IMPORT=true`

|

||||

* Verdict: **Affected**

|

||||

* Signed DSSE envelope over {scope hash, policy hash, graph snippet JSON, conditions, verdict}.

|

||||

|

||||

### How to build it (practical checklist)

|

||||

|

||||

* **During build**

|

||||

|

||||

* Emit SBOM (source & binary) with function/file symbols where possible.

|

||||

* Capture compiler/linker flags; normalize paths; include feature flags’ default state.

|

||||

* **During analysis**

|

||||

|

||||

* Static: slice the call graph to the *shortest* source→sink chain; attach type-state facts (e.g., “validated length”).

|

||||

* Deps: map CVEs to precise symbol/ABI surfaces (not just package names).

|

||||

* Backports: require explicit evidence (patch IDs, symbol presence) before downgrading severity.

|

||||

* **During runtime (optional but strong)**

|

||||

|

||||

* eBPF trace to confirm edges observed; store hashes of kprobes/uprobes programs and sampling window.

|

||||

* **During decisioning**

|

||||

|

||||

* Apply merge policy (vendor VEX, distro notes, internal tests) deterministically; hash the policy.

|

||||

* Emit one DSSE/attestation per verdict; include replay manifest.
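
The static-analysis step (slicing the call graph to the shortest source→sink chain) could look roughly like the breadth-first search below. This is a sketch under simplifying assumptions: the call graph is a plain adjacency dict, edges are unweighted, and guards or conditions are ignored. The function name `shortest_chain` and the extra nodes (`LogRequest`, `Validate`) are hypothetical; the main chain reuses the node names from the proof example above.

```python
from collections import deque
from typing import Dict, List, Optional


def shortest_chain(graph: Dict[str, List[str]], entry: str, sink: str) -> Optional[List[str]]:
    """Return the shortest entry -> sink path in a call graph, or None if unreachable."""
    if entry == sink:
        return [entry]
    parents: Dict[str, Optional[str]] = {entry: None}
    queue = deque([entry])
    while queue:
        node = queue.popleft()
        for callee in graph.get(node, []):
            if callee in parents:
                continue  # already reached via an equal or shorter path
            parents[callee] = node
            if callee == sink:
                # Walk parent links back to the entrypoint, then reverse.
                chain = [sink]
                while parents[chain[-1]] is not None:
                    chain.append(parents[chain[-1]])
                return chain[::-1]
            queue.append(callee)
    return None


call_graph = {
    "HttpBody": ["Parse", "LogRequest"],
    "Parse": ["LoadNode", "Validate"],
    "Validate": ["LoadNode"],
    "LoadNode": ["vulnerable_path"],
    "LogRequest": [],
}

chain = shortest_chain(call_graph, "HttpBody", "vulnerable_path")
print(" -> ".join(chain) if chain else "not reachable")
```

A `None` result only shows that this particular graph has no path; whether that is enough to justify a "Not Affected" verdict depends on how complete the graph is.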

|

||||

|

||||

### UI that won’t overwhelm

|

||||

|

||||

* **Default card**: Verdict + “Why?” (one-line chain) + “Replay” button.

|

||||

* **Expand**: shows the 5–10 edge subgraph, conditions, and signed envelope.

|

||||

* **Compare builds**: side-by-side proof deltas (edges added/removed, policy change, backport flip).

|

||||

|

||||

### Operating modes

|

||||

|

||||

* **Strict** (prod): Unknowns → fail-closed; proofs required for Not Affected.

|

||||

* **Lenient** (dev): Unknowns tolerated; proofs optional but encouraged; allow “Under-Constrained”.

|

||||

|

||||

### What to measure

|

||||

|

||||

* Proof generation rate, median proof size (KB), replay success %, proof dedup ratio, and “unknowns” burn-down.

|

||||

|

||||

If you want, I can turn this into a ready-to-ship spec for Stella Ops (attestation schema, JSON examples, API routes, and a tiny .NET verifier).

|

||||

@@ -0,0 +1,86 @@

|

||||

Here’s a crisp idea you can put to work right away: **treat SBOM diffs as a first‑class, signed evidence object**—not just “what components changed,” but also **VEX claim deltas** and **attestation (in‑toto/DSSE) deltas**. This makes vulnerability verdicts **deterministically replayable** and **audit‑ready** across release gates.

|

||||

|

||||

### Why this matters (plain speak)

|

||||

|

||||

* **Less noise, faster go/no‑go:** Only re‑triage what truly changed (package, reachability, config, or vendor stance), not the whole universe.

|

||||

* **Deterministic audits:** Same inputs → same verdict. Auditors can replay checks exactly.

|

||||

* **Tighter release gates:** Policies evaluate the *delta verdict*, not raw scans.

|

||||

|

||||

### Evidence model (minimal but complete)

|

||||

|

||||

* **Subject:** OCI digest of image/artifact.

|

||||

* **Baseline:** SBOM‑G (graph hash), VEX set hash, policy + rules hash, feed snapshots (CVE JSON digests), toolchain + config hashes.

|

||||

* **Delta:**

|

||||

|

||||

* `components_added/removed/updated` (with semver + source/distro origin)

|

||||

* `reachability_delta` (edges added/removed in call/file/path graph)

|

||||

* `settings_delta` (flags, env, CAPs, eBPF signals)

|

||||

* `vex_delta` (per‑CVE claim transitions: *affected → not_affected → fixed*, with reason codes)

|

||||

* `attestation_delta` (build‑provenance step or signer changes)

|

||||

* **Verdict:** Signed “delta verdict” (allow/block/risk_budget_consume) with rationale pointers into the deltas.

|

||||

* **Provenance:** DSSE envelope, in‑toto link to baseline + new inputs.
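
As an illustration of the `vex_delta` entry in the model above, per-CVE claim transitions can be derived by comparing two status maps. This is a hedged sketch: the `vex_delta` function and the `{cve: status}` input shape are assumptions for this example, and all CVE IDs are placeholders. Reason codes and evidence references are omitted.

```python
from typing import Dict, List, Optional


def vex_delta(baseline: Dict[str, str], current: Dict[str, str]) -> List[dict]:
    """List per-CVE status transitions between a baseline and a current VEX set."""
    transitions = []
    # Sort CVE IDs so the output order is stable across runs.
    for cve in sorted(set(baseline) | set(current)):
        before: Optional[str] = baseline.get(cve)  # None = no claim in baseline
        after: Optional[str] = current.get(cve)    # None = claim withdrawn
        if before != after:
            transitions.append({"cve": cve, "from": before, "to": after})
    return transitions


baseline_vex = {"CVE-2025-1234": "affected", "CVE-2025-0001": "not_affected"}
current_vex = {
    "CVE-2025-1234": "not_affected",
    "CVE-2025-0001": "not_affected",
    "CVE-2025-9999": "under_investigation",
}

changes = vex_delta(baseline_vex, current_vex)
for change in changes:
    print(change)
```

Unchanged claims are left out on purpose, so the delta stays small and only the transitions need re-triage.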

|

||||

|

||||

### Deterministic replay contract

|

||||

|

||||

Pin and record:

|

||||

|

||||

* Feed snapshots (CVE/VEX advisories) + hashes

|

||||

* Scanner versions + rule packs + lattice/policy version

|

||||

* SBOM generator version + mode (CycloneDX 1.6 / SPDX 3.0.1)

|

||||

* Reachability engine settings (language analyzers, eBPF taps)

|

||||

* Merge semantics ID (see below)

|

||||

|

||||

Replayer re‑hydrates these **exact** inputs and must reproduce the same verdict bit‑for‑bit.

|

||||

|

||||

### Merge semantics (stop “vendor > distro > internal” naïveté)

|

||||

|

||||

Define a policy‑controlled lattice for claims, e.g.:

|

||||

|

||||

* **Orderings:** `exploit_observed > affected > under_investigation > fixed > not_affected`

|

||||

* **Source weights:** vendor, distro, internal SCA, runtime sensor, pentest

|

||||

* **Conflict rules:** tie‑breaks, quorum, freshness windows, required evidence hooks (e.g., “not_affected because feature flag X=off, proven by config attestation Y”)
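
One possible reading of such a lattice, sketched below, is "highest source weight wins, and ties resolve toward the more severe status". Both that rule and the numeric weights are assumptions chosen for illustration; the advisory leaves the actual conflict rules (quorum, freshness windows, evidence hooks) to policy, and none of them are modeled here.

```python
from typing import Dict, List

# Least to most severe, following the ordering listed above.
STATUS_ORDER = ["not_affected", "fixed", "under_investigation", "affected", "exploit_observed"]
RANK: Dict[str, int] = {status: i for i, status in enumerate(STATUS_ORDER)}

# Hypothetical source weights; a real deployment would load these from policy.
SOURCE_WEIGHT: Dict[str, int] = {
    "vendor": 3,
    "distro": 2,
    "internal_sca": 2,
    "runtime_sensor": 4,
    "pentest": 4,
}


def merge_claims(claims: List[dict]) -> dict:
    """Pick the winning claim: highest source weight, then most severe status."""
    if not claims:
        raise ValueError("no claims to merge")
    return max(claims, key=lambda c: (SOURCE_WEIGHT[c["source"]], RANK[c["status"]]))


claims = [
    {"source": "vendor", "status": "not_affected"},
    {"source": "distro", "status": "affected"},
]
print(merge_claims(claims))

# A runtime observation outranks both under these example weights.
claims_with_runtime = claims + [{"source": "runtime_sensor", "status": "exploit_observed"}]
print(merge_claims(claims_with_runtime))
```

Because the ordering and weights are plain data, they can be hashed together with the rest of the policy, which is what the merge semantics ID in the replay contract is meant to pin.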

|

||||

|

||||

### Where it lives in the product

|

||||

|

||||

* **UI:** “Diff & Verdict” panel on each PR/build → shows SBOM/VEX/attestation deltas and the signed delta verdict; one‑click export of the DSSE envelope.

|

||||

* **API/Artifact:** Publish as an **OCI‑attached attestation** (`application/vnd.stella.delta-verdict+json`) alongside SBOM + VEX.

|

||||

* **Pipelines:** Release gate consumes only the delta verdict (fast path); full scan can run asynchronously for deep telemetry.

|

||||

|

||||

### Minimal schema sketch (JSON)

|

||||

|

||||

```json
{
  "subject": {"ociDigest": "sha256:..."},
  "inputs": {
    "feeds": [{"type":"cve","digest":"sha256:..."},{"type":"vex","digest":"sha256:..."}],
    "tools": {"sbomer":"1.6.3","reach":"0.9.0","policy":"lattice-2025.12"},
    "baseline": {"sbomG":"sha256:...","vexSet":"sha256:..."}
  },
  "delta": {
    "components": {"added":[...],"removed":[...],"updated":[...]},
    "reachability": {"edgesAdded":[...],"edgesRemoved":[...]},
    "settings": {"changed":[...]},
    "vex": [{"cve":"CVE-2025-1234","from":"affected","to":"not_affected","reason":"config_flag_off","evidenceRef":"att#cfg-42"}],
    "attestations": {"changed":[...]}
  },
  "verdict": {"decision":"allow","riskBudgetUsed":2,"policyId":"lattice-2025.12","explanationRefs":["vex[0]","reachability.edgesRemoved[3]"]},
  "signing": {"dsse":"...","signer":"stella-authority"}
}
```

|

||||

|

||||

### Roll‑out checklist (Stella Ops framing)

|

||||

|

||||

* **Sbomer:** emit **graph‑hash** (stable canonicalization) and diff vs previous SBOM‑G.

|

||||

* **Vexer:** compute VEX claim deltas + reason codes; apply lattice merge; expose `vexDelta[]`.

|

||||

* **Attestor:** snapshot feed digests, tool/rule versions, and config; produce DSSE bundle.

|

||||

* **Policy Engine:** evaluate deltas → produce **delta verdict** with strict replay semantics.

|

||||

* **Router/Timeline:** store delta verdicts as auditable objects; enable “replay build N” button.

|

||||

* **CLI/CI:** `stella delta-verify --subject <digest> --envelope delta.json.dsse` → must return identical verdict.

|

||||

|

||||

### Guardrails

|

||||

|

||||

* Canonicalize and sort everything before hashing.

|

||||

* Record unknowns explicitly and let policy act on them (e.g., “fail if unknowns > N in prod”).

|

||||

* No network during replay except to fetch pinned digests.
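
The first guardrail (canonicalize and sort before hashing) can be sketched with the standard library alone. This is a simplified illustration, not a full canonicalization scheme such as RFC 8785: it relies on `json.dumps` with sorted keys and compact separators, and the helper name `canonical_digest` is an assumption for this example.

```python
import hashlib
import json
from typing import Any


def canonical_digest(obj: Any) -> str:
    """Hash a JSON-serializable object in a key-order-independent way."""
    payload = json.dumps(
        obj,
        sort_keys=True,          # dict key order no longer affects the bytes
        separators=(",", ":"),   # no insignificant whitespace
        ensure_ascii=False,
    ).encode("utf-8")
    return "sha256:" + hashlib.sha256(payload).hexdigest()


# Same content, different key order: digests match.
a = {"tools": {"sbomer": "1.6.3", "reach": "0.9.0"}, "policy": "lattice-2025.12"}
b = {"policy": "lattice-2025.12", "tools": {"reach": "0.9.0", "sbomer": "1.6.3"}}
print(canonical_digest(a) == canonical_digest(b))

# Lists keep their order, so unordered collections must be sorted by the caller.
feeds_1 = {"feeds": sorted(["vex", "cve"])}
feeds_2 = {"feeds": sorted(["cve", "vex"])}
print(canonical_digest(feeds_1) == canonical_digest(feeds_2))
```

Note that `sort_keys` only normalizes object keys. Arrays whose order carries no meaning (component lists, edge sets) still need an explicit sort before hashing.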

|

||||

|

||||

If you want, I can draft the precise CycloneDX extension fields + an OCI media type registration, plus .NET 10 interfaces for Sbomer/Vexer/Attestor to emit/consume this today.

|

||||

@@ -0,0 +1,79 @@

|

||||

# Archived Superseded Advisories

|

||||

|

||||

**Archived:** 2025-12-26

|

||||

**Reason:** Concepts already implemented or covered by existing sprints

|

||||

|

||||

## Advisory Status

|

||||

|

||||

These advisories described features that are **already substantially implemented** in the codebase or covered by existing sprint files.

|

||||

|

||||

| Advisory | Status | Superseded By |
|----------|--------|---------------|
| `25-Dec-2025 - Implementing Diff‑Aware Release Gates.md` | SUPERSEDED | SPRINT_20251226_001_BE through 006_DOCS |
| `26-Dec-2026 - Diff‑Aware Releases and Auditable Exceptions.md` | SUPERSEDED | SPRINT_20251226_003_BE_exception_approval.md |
| `26-Dec-2026 - Smart‑Diff as a Core Evidence Primitive.md` | SUPERSEDED | Existing DeltaVerdict library |
| `26-Dec-2026 - Reachability as Cryptographic Proof.md` | SUPERSEDED | Existing ProofChain library + SPRINT_007/009/010/011 |

|

||||

|

||||

## Existing Implementation

|

||||

|

||||

The following components already implement the advisory concepts:

|

||||

|

||||

### DeltaVerdict & DeltaComputer

|

||||

- `src/Policy/__Libraries/StellaOps.Policy/Deltas/DeltaVerdict.cs`

|

||||

- `src/Policy/__Libraries/StellaOps.Policy/Deltas/DeltaComputer.cs`

|

||||

- `src/__Libraries/StellaOps.DeltaVerdict/` (complete library)

|

||||

|

||||

### Exception Management

|

||||

- `src/Policy/__Libraries/StellaOps.Policy.Storage.Postgres/Models/ExceptionEntity.cs`

|

||||

- `src/Policy/StellaOps.Policy.Engine/Adapters/ExceptionAdapter.cs`

|

||||

- `src/Policy/__Libraries/StellaOps.Policy.Exceptions/` (complete library)

|

||||

|

||||

### ProofChain & Reachability Proofs

|

||||

- `src/Attestor/__Libraries/StellaOps.Attestor.ProofChain/` (complete library):
  - `Statements/ReachabilityWitnessStatement.cs` - Entry→sink proof chains
  - `Statements/ReachabilitySubgraphStatement.cs` - Minimal subgraph attestation
  - `Statements/ProofSpineStatement.cs` - Merkle-aggregated proof bundles
  - `Predicates/ReachabilitySubgraphPredicate.cs` - Subgraph predicate
  - `Identifiers/ContentAddressedIdGenerator.cs` - Content-addressed IDs
  - `Merkle/DeterministicMerkleTreeBuilder.cs` - Merkle tree construction
  - `Signing/ProofChainSigner.cs` - DSSE signing
  - `Verification/VerificationPipeline.cs` - Proof verification

|

||||

- `src/__Libraries/StellaOps.Replay.Core/ReplayManifest.cs` - Replay manifests

|

||||

|

||||

### Covering Sprints

|

||||

- `docs/implplan/SPRINT_20251226_001_BE_cicd_gate_integration.md` - Gate endpoints, CI/CD

|

||||

- `docs/implplan/SPRINT_20251226_002_BE_budget_enforcement.md` - Risk budget automation

|

||||

- `docs/implplan/SPRINT_20251226_003_BE_exception_approval.md` - Exception workflows (21 tasks)

|

||||

- `docs/implplan/SPRINT_20251226_004_FE_risk_dashboard.md` - Side-by-side UI

|

||||

- `docs/implplan/SPRINT_20251226_005_SCANNER_reachability_extractors.md` - Language extractors

|

||||

- `docs/implplan/SPRINT_20251226_006_DOCS_advisory_consolidation.md` - Documentation

|

||||

- `docs/implplan/SPRINT_20251226_007_BE_determinism_gaps.md` - Determinism gaps, metrics (25 tasks)

|

||||

- `docs/implplan/SPRINT_20251226_009_SCANNER_funcproof.md` - FuncProof generation (18 tasks)

|

||||

- `docs/implplan/SPRINT_20251226_010_SIGNALS_runtime_stack.md` - eBPF stack capture (17 tasks)

|

||||

- `docs/implplan/SPRINT_20251226_011_BE_auto_vex_downgrade.md` - Auto-VEX from runtime (16 tasks)

|

||||

|

||||

## Remaining Gaps Added to Sprints

|

||||

|

||||

Minor gaps from these advisories were added to existing sprints:

|

||||

|

||||

**Added to SPRINT_20251226_003_BE_exception_approval.md:**

|

||||

- EXCEPT-16: Auto-revalidation job

|

||||

- EXCEPT-17: Re-review gate flip on failure

|

||||

- EXCEPT-18: Exception inheritance (repo→image→env)

|

||||

- EXCEPT-19: Conflict surfacing for shadowed exceptions

|

||||

- EXCEPT-20: OCI-attached exception attestation

|

||||

- EXCEPT-21: CLI export command

|

||||

|

||||

**Added to SPRINT_20251226_007_BE_determinism_gaps.md:**

|

||||

- DET-GAP-21: Proof generation rate metric

|

||||

- DET-GAP-22: Median proof size metric

|

||||

- DET-GAP-23: Replay success rate metric

|

||||

- DET-GAP-24: Proof dedup ratio metric

|

||||

- DET-GAP-25: "Unknowns" burn-down tracking

|

||||

|

||||

## Cross-References

|

||||

|

||||

If you arrived here via a broken link, see:

|

||||

- `docs/implplan/SPRINT_20251226_*.md` for implementation tasks

|

||||

- `src/Policy/__Libraries/StellaOps.Policy/Deltas/` for delta computation

|

||||

- `src/__Libraries/StellaOps.DeltaVerdict/` for verdict models

|

||||

@@ -0,0 +1,61 @@

|

||||

I’m sharing this with you because your Stella Ops vision for vulnerability triage and supply‑chain context beats what many current tools actually deliver — and the differences highlight exactly where to push hard to out‑execute the incumbents.

|

||||


**Where competitors actually land today**

|

||||

|

||||

**Snyk — reachability + continuous context**

|

||||

|

||||

* Snyk now implements *reachability analysis* that builds a call graph to determine if vulnerable code *is actually reachable by your application*. This is factored into their risk and priority scores to help teams triage what matters most, beyond just severity numbers. ([Snyk Docs][1])

|

||||

* Their model uses static program analysis combined with AI and expert curation for prioritization. ([Snyk Docs][1])

|

||||

* For ongoing monitoring, Snyk *tracks issues over time* as projects are monitored and rescanned (e.g., via CLI or integrations), updating status as new CVEs are disclosed — without needing to re‑pull unchanged images. ([Snyk Docs][1])

|

||||

|

||||

**Anchore — vulnerability annotations & VEX export**

|

||||

|

||||

* Anchore Enterprise has shipped *vulnerability annotation workflows* where users or automation can label each finding with context (“not applicable”, “mitigated”, “under investigation”, etc.) via UI or API. ([Anchore Documentation][2])

|

||||

* These annotations are exportable as *OpenVEX and CycloneDX VEX* formats so downstream consumers can consume authoritative exploitability state instead of raw scanner noise. ([Anchore][3])

|

||||

* This means Anchore customers can generate SBOM + VEX outputs that carry your curated reasoning, reducing redundant triage across the supply chain.

|

||||

|

||||

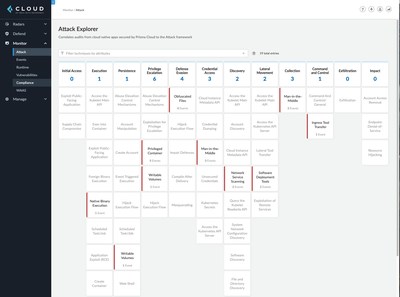

**Prisma Cloud — runtime defense**

|

||||

|

||||

* Prisma Cloud’s *runtime defense* for containers continually profiles behavior and enforces *process, file, and network rules* for running workloads — using learning models to baseline expected behavior and block/alert on anomalies. ([Prisma Cloud][4])

|

||||

* This gives security teams *runtime context* during operational incidents, not just pre‑deployment scan results — which can improve triage but is conceptually orthogonal to static SBOM/VEX artifacts.

|

||||

|

||||

**What Stella Ops should out‑execute**

|

||||

|

||||

Instead of disparate insights, Stella Ops can unify and elevate:

|

||||

|

||||

1. **One triage canvas with rich evidence**

|

||||

|

||||

* Combine static *reachability/evidence graphs* with call stacks and evidence traces — so users see *why* a finding matters, not just “reachable vs. not”.

|

||||

* If you build this as a subgraph panel, teams can trace from SBOM → code paths → runtime indicators.

|

||||

|

||||

2. **VEX decisioning as first‑class**

|

||||

|

||||

* Treat VEX not as an export format but as *core policy objects*: policies that can *explain*, *override*, and *drive decisions*.

|

||||

* This includes programmable policy rules driving whether something is actionable or suppressed in a given context — surfacing context alongside triage.

|

||||

|

||||

3. **Attestable exception objects**

|

||||

|

||||

* Model exceptions as *attestable contracts* with *expiries and audit trails* — not ad‑hoc labels. These become first‑class artifacts that can be cryptographically attested, shared, and verified across orgs.

|

||||

|

||||

4. **Offline replay packs for air‑gapped parity**

|

||||

|

||||

* Build *offline replay packs* so the *same UI, interactions, and decisions* work identically in fully air‑gapped environments.

|

||||

* This is critical for compliance/defense customers who cannot connect to external feeds but still need consistent triage and reasoning workflows.

|

||||

|

||||

In short, competitors give you pieces — reachability scores, VEX exports, or behavioral runtime signals — but Stella Ops can unify these into *a single, evidence‑rich, policy‑driven triage experience that works both online and offline*. You already have the architecture to do it; now it’s about integrating these signals into a coherent decision surface that beats siloed tools.

|

||||

|

||||

[1]: https://docs.snyk.io/manage-risk/prioritize-issues-for-fixing/reachability-analysis?utm_source=chatgpt.com "Reachability analysis | Snyk User Docs"

|

||||

[2]: https://docs.anchore.com/current/docs/vulnerability_management/vuln_annotations/?utm_source=chatgpt.com "Vulnerability Annotations and VEX"

|

||||

[3]: https://anchore.com/blog/anchore-enterprise-5-23-cyclonedx-vex-and-vdr-support/?utm_source=chatgpt.com "Anchore Enterprise 5.23: CycloneDX VEX and VDR Support"

|

||||

[4]: https://docs.prismacloud.io/en/compute-edition/30/admin-guide/runtime-defense/runtime-defense-containers?utm_source=chatgpt.com "Runtime defense for containers - Prisma Cloud Documentation"

|

||||

|

||||

--

|

||||

Note from the product manager: there is an AdvisoryAI module in the Stella Ops suite.

|

||||

@@ -0,0 +1,105 @@

|

||||

# Visual Diffs for Explainable Triage

|

||||

|

||||

> **Status:** PLANNED - Enhancement sprint created
> **Date:** 2025-12-25
> **Updated:** 2025-12-26
> **Implementation:** ~75-80% complete in existing infrastructure
> **Sprint:** [`SPRINT_20251226_010_FE_visual_diff_enhancements.md`](../implplan/SPRINT_20251226_010_FE_visual_diff_enhancements.md)

|

||||

|

||||

---

|

||||

|

||||

## Implementation Status

|

||||

|

||||

Most features proposed in this advisory are **already implemented**:

|

||||

|

||||

| Feature | Status | Location |
|---------|--------|----------|
| Three-pane layout (Categories/Items/Proof) | COMPLETE | `smart-diff-ui-architecture.md` |
| Delta summary strip | COMPLETE | `DeltaSummaryStripComponent` |
| Evidence rail with witness paths | COMPLETE | `ProofPaneComponent`, `WitnessPathComponent` |
| VEX merge explanations | COMPLETE | `VexMergeExplanationComponent` |
| Role-based views (Dev/Security/Audit) | COMPLETE | `CompareViewComponent` |
| Keyboard shortcuts | COMPLETE | `TriageShortcutsService` |
| Audit bundle export | COMPLETE | `ExportActionsComponent` |
| Delta computation engine | COMPLETE | `StellaOps.DeltaVerdict.Engine` |
| Signed delta verdicts | COMPLETE | `DeltaSigningService` |
| **Visual graph diff with highlights** | TODO | Sprint VD-ENH-01..04 |
| **"Explain like I'm new" toggle** | TODO | Sprint VD-ENH-05..07 |
| **Graph export (SVG/PNG)** | TODO | Sprint VD-ENH-08 |

|

||||

|

||||

### Existing Infrastructure

|

||||

|

||||

- `TriageWorkspaceComponent` (1042 lines) - Full triage workspace

|

||||

- `ProofTreeComponent` (1009 lines) - Merkle tree visualization

|

||||

- `docs/modules/web/smart-diff-ui-architecture.md` - Architecture specification

|

||||

- `StellaOps.DeltaVerdict` library - Backend delta computation

|

||||

|

||||

---

|

||||

|

||||

## Advisory Content

|

||||

|

||||

Here's a simple, high-leverage UX pattern you can borrow from top observability tools: **treat every policy decision or reachability change as a visual diff.**

|

||||

|

||||

---

|

||||

|

||||

### Why this helps

|

||||

|

||||

* Turns opaque "why is this verdict different?" moments into **quick, explainable triage**.

|

||||

* Reduces back-and-forth between Security, Dev, and Audit—**everyone sees the same before/after evidence**.

|

||||

|

||||

### Core UI concept

|

||||

|

||||

* **Side-by-side panes**: **Before** (previous scan/policy) vs **After** (current).

|

||||

* **Graph focus**: show the dependency/reachability subgraph; **highlight added/removed/changed nodes/edges**.

|

||||

* **Evidence strip** (right rail): human-readable facts used by the engine (e.g., *feature flag OFF*, *code path unreachable*, *kernel eBPF trace absent*).

|

||||

* **Diff verdict header**: "Risk ↓ from *Medium → Low* (policy v1.8 → v1.9)".

|

||||

* **Filter chips**: Scope by component, package, CVE, policy rule, environment.

|

||||

|

||||

### Minimal data model (so UI is easy)

|

||||

|

||||

* `GraphSnapshot`: nodes, edges, metadata (component, version, tags).

|

||||

* `PolicySnapshot`: version, rules hash, inputs (flags, env, VEX sources).

|

||||

* `Delta`: `added/removed/changed` for nodes, edges, and rule outcomes.

|

||||

* `EvidenceItems[]`: typed facts (trace hits, SBOM lines, VEX claims, config values) with source + timestamp.

|

||||

* `SignedDeltaVerdict`: final status + signatures (who/what produced it).
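
To make the `GraphSnapshot` and `Delta` shapes above concrete, here is a minimal sketch of a delta builder. The field names (`nodes`, `edges`, `added_nodes`, and so on) and the function `compute_delta` are illustrative assumptions; they are not the actual `StellaOps.DeltaVerdict` types. Rule outcomes are left out to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class GraphSnapshot:
    """Hypothetical shape: node id -> metadata, plus a set of directed edges."""
    nodes: dict[str, dict]
    edges: frozenset[tuple[str, str]]


@dataclass
class Delta:
    """added/removed/changed buckets for nodes and edges."""
    added_nodes: list[str] = field(default_factory=list)
    removed_nodes: list[str] = field(default_factory=list)
    changed_nodes: list[str] = field(default_factory=list)
    added_edges: list[tuple[str, str]] = field(default_factory=list)
    removed_edges: list[tuple[str, str]] = field(default_factory=list)


def compute_delta(before: GraphSnapshot, after: GraphSnapshot) -> Delta:
    """Compare two snapshots; output is sorted so the result is deterministic."""
    b, a = set(before.nodes), set(after.nodes)
    return Delta(
        added_nodes=sorted(a - b),
        removed_nodes=sorted(b - a),
        # A node is "changed" when it exists on both sides with different metadata.
        changed_nodes=sorted(n for n in a & b if before.nodes[n] != after.nodes[n]),
        added_edges=sorted(after.edges - before.edges),
        removed_edges=sorted(before.edges - after.edges),
    )
```

Sorting every bucket keeps the output stable across runs, which matters if the delta is later hashed or signed.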

|

||||

|

||||

### Micro-interactions that matter

|

||||

|

||||

* Hover a changed node ⇒ **inline badge** explaining *why it changed* (e.g., "now gated by `--no-xml` runtime flag").

|

||||

* Click a rule change in the right rail ⇒ **spotlight** the exact subgraph it affected.

|

||||

* Toggle **"explain like I'm new"** ⇒ expands jargon into plain language.

|

||||

* One-click **"copy audit bundle"** ⇒ exports the delta + evidence as an attachment.

|

||||

|

||||

### Where this belongs in your product

|

||||

|

||||

* **Primary**: in the **Triage** view for any new finding/regression.

|

||||

* **Secondary**: in **Policy history** (compare vX vs vY) and **Release gates** (compare build A vs build B).

|

||||

* **Inline surfaces**: small "diff pills" next to every verdict in tables; click opens the big side-by-side.

|

||||

|

||||

### Quick build checklist (dev & PM)

|

||||

|

||||

* [x] Compute a stable **graph hash** per scan; store **snapshots**. *(Implemented: `DeltaComputationEngine`)*

|

||||

* [x] Add a **delta builder** that outputs `added/removed/changed` at node/edge + rule outcome levels. *(Implemented: `DeltaVerdictBuilder`)*

|

||||

* [x] Normalize **evidence items** (source, digest, excerpt) so the UI can render consistent cards. *(Implemented: `ProofTreeComponent`)*

|

||||

* [x] Ship a **Signed Delta Verdict** (OCI-attached) so audits can replay the view from the artifact alone. *(Implemented: `DeltaSigningService`)*

|

||||

* [ ] Include **hotkeys**: `1` focus changes only, `2` show full graph, `E` expand evidence, `A` export audit. *(Partial: general shortcuts exist)*
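
The first checklist item asks for a stable graph hash per scan. One way to get stability is to hash a canonical serialization, so that node or edge ordering never changes the digest. The sketch below is an assumption about how that could look; `graph_hash` is a hypothetical helper, not the `DeltaComputationEngine` API.

```python
import hashlib
import json


def graph_hash(nodes: dict[str, dict], edges: list[tuple[str, str]]) -> str:
    """SHA-256 over a canonical JSON form of the graph."""
    canonical = {
        "nodes": nodes,
        # Edges are sorted so that insertion order does not affect the hash.
        "edges": sorted([src, dst] for src, dst in edges),
    }
    # sort_keys orders every dict (including nested metadata); compact
    # separators avoid whitespace differences between serializers.
    payload = json.dumps(canonical, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Two scans that produce the same components and links in a different order then share one hash, while any version or edge change yields a new one.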

|

||||

|

||||

### Empty state & failure modes

|

||||

|

||||

* If evidence is incomplete: show a **yellow "Unknowns present" ribbon** with a count and a button to collect missing traces.

|

||||

* If graphs are huge: default to **"changed neighborhood only"** with a mini-map to pan.

|

||||

|

||||

### Success metric (simple)

|

||||

|

||||

* **Mean time to explain (MTTE)**: time from "why did this change?" to user clicking *"Understood"*. Track trend ↓.

|

||||

|

||||

---

|

||||

|

||||

## Related Advisories

|

||||

|

||||

| Advisory | Relationship |
|----------|--------------|
| [Triage UI Lessons from Competitors](./25-Dec-2025%20-%20Triage%20UI%20Lessons%20from%20Competitors.md) | Complementary - competitive UX patterns |
| [Implementing Diff-Aware Release Gates](./25-Dec-2025%20-%20Implementing%20Diff%E2%80%91Aware%20Release%20Gates.md) | Backend - IMPLEMENTED |
| [Smart-Diff as Core Evidence Primitive](./26-Dec-2026%20-%20Smart%E2%80%91Diff%20as%20a%20Core%20Evidence%20Primitive.md) | Data model - IMPLEMENTED |
| [Visualizing the Risk Budget](./26-Dec-2026%20-%20Visualizing%20the%20Risk%20Budget.md) | Related - Risk visualization |

|

||||

@@ -0,0 +1,58 @@

|

||||

Here’s a simple way to make “risk budget” feel like a real, live dashboard rather than a dusty policy—plus the one visualization that best explains “budget burn” to PMs.

|

||||

|

||||

### First, quick background (plain English)

|

||||

|

||||

* **Risk budget** = how much unresolved risk we’re willing to carry for a release (e.g., 100 “risk points”).

|

||||

* **Burn** = how fast we consume that budget as unknowns/alerts pop up, minus how much we “pay back” by fixing/mitigating.

|

||||

|

||||

### What to show on the dashboard

|

||||

|

||||

1. **Heatmap of Unknowns (Where are we blind?)**

|

||||

|

||||

* Rows = components/services; columns = risk categories (vulns, compliance, perf, data, supply-chain).

|

||||

* Cell value = *unknowns count × severity weight* (unknown ≠ unimportant; it’s the most dangerous).

|

||||

* Click-through reveals: last evidence timestamp, owners, next probe.
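
As a rough sketch of the heatmap cell value, the function below sums a severity weight over every unknown issue per component and category, which equals *unknowns count × severity weight* when all unknowns in a cell share one severity. The weights are placeholder assumptions, and the field names follow the schema listed under "Minimal artifacts to ship".

```python
from collections import defaultdict

# Hypothetical severity weights; the advisory does not fix these values.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 5, "critical": 8}


def unknowns_heatmap(issues: list[dict]) -> dict[str, dict[str, int]]:
    """Return component -> category -> summed severity weight of unknown issues."""
    grid: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for issue in issues:
        if issue["is_unknown"]:
            weight = SEVERITY_WEIGHT[issue["severity"]]
            grid[issue["component"]][issue["category"]] += weight
    # Convert to plain dicts so callers do not create cells by accident.
    return {component: dict(row) for component, row in grid.items()}
```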

|

||||

|

||||

2. **Delta Table (Risk Decay per Release)**

|

||||

|

||||

* Each release row compares **Before vs After**: total risk, unknowns, known-high, accepted, deferred.

|

||||

* Include a **“risk retired”** column (points dropped due to fixes/mitigations) and **“risk shifted”** (moved to exceptions).

|

||||

|

||||

3. **Exception Ledger (Auditable)**

|

||||

|

||||

* Every accepted risk has an ID, owner, expiry, evidence note, and auto-reminder.

|

||||

|

||||

### The best single chart for PMs: **Risk Budget Burn-Up**

|

||||

|

||||

*(This is the one slide they’ll get immediately.)*

|

||||

|

||||

* **X-axis:** calendar dates up to code freeze.

|

||||

* **Y-axis:** risk points.

|

||||

* **Two lines:**

  * **Budget (flat or stepped)** = allowable risk over time (e.g., 100 pts until T‑2, then 60).
  * **Actual Risk (cumulative)** = unknowns + knowns − mitigations (daily snapshot).
* **Shaded area** between lines = **Headroom** (green) or **Overrun** (red).

|

||||

* Add **vertical markers** for major changes (feature freeze, pen-test start, dependency bump).

|

||||

* Add **burn targets** (dotted) to show where you must be each week to land inside budget.
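
The two lines of the burn-up chart reduce to a per-day subtraction. The sketch below assumes a stepped budget expressed as `(start_date, points)` pairs and a daily snapshot of actual risk; both shapes and the function names are illustrative, not an existing Stella Ops API.

```python
from datetime import date


def budget_on(day: date, steps: list[tuple[date, int]]) -> int:
    """Stepped budget: the latest step whose start date is <= day applies."""
    applicable = [points for start, points in sorted(steps) if start <= day]
    if not applicable:
        raise ValueError("no budget step covers this day")
    return applicable[-1]


def headroom_series(
    actual: dict[date, int], steps: list[tuple[date, int]]
) -> list[tuple[date, int, str]]:
    """Per-day headroom (budget minus actual); a negative value is an overrun."""
    series = []
    for day in sorted(actual):
        headroom = budget_on(day, steps) - actual[day]
        label = "headroom" if headroom >= 0 else "overrun"
        series.append((day, headroom, label))
    return series
```

With a budget of 100 points that steps down to 60, an actual risk of 72 shows 28 points of headroom before the step and a 12-point overrun after it, which is the green-to-red flip the shaded area is meant to show.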

|

||||

|

||||

### How to compute the numbers (lightweight)

|

||||

|

||||

* **Risk points** = Σ(issue_severity_weight × exposure_factor × evidence_freshness_penalty).

|

||||

* **Unknown penalty**: if no evidence ≤ N days, apply multiplier (e.g., ×1.5).

|

||||

* **Decay**: when a fix lands *and* evidence is refreshed, subtract points that day.

|

||||

* **Guardrail**: fail gate if **unknowns > K** *or* **Actual Risk > Budget** within T days of release.
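
The formulas above can be sketched in a few lines. Everything numeric here is an assumption for illustration: the severity weights, the staleness threshold standing in for N, and the rule that only issues with status `open` contribute points (one simple way to model decay once a fix lands). The "within T days of release" window is left to the caller.

```python
from datetime import date

SEVERITY_WEIGHT = {"low": 1.0, "medium": 3.0, "high": 5.0, "critical": 8.0}  # assumed
STALE_AFTER_DAYS = 14    # "N" in the text; assumed value
STALE_MULTIPLIER = 1.5   # example multiplier from the text


def risk_points(issues: list[dict], today: date) -> float:
    """Sum severity_weight * exposure * freshness_penalty over open issues."""
    total = 0.0
    for issue in issues:
        if issue["status"] != "open":
            continue  # fixed or mitigated issues no longer burn budget
        age_days = (today - issue["evidence_date"]).days
        penalty = STALE_MULTIPLIER if age_days > STALE_AFTER_DAYS else 1.0
        total += SEVERITY_WEIGHT[issue["severity"]] * issue["exposure"] * penalty
    return total


def gate_passes(issues: list[dict], today: date, budget: float, max_unknowns: int) -> bool:
    """Fail when unknowns exceed K or actual risk exceeds the budget."""
    unknowns = sum(1 for i in issues if i["is_unknown"] and i["status"] == "open")
    return unknowns <= max_unknowns and risk_points(issues, today) <= budget
```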

|

||||

|

||||

### Minimal artifacts to ship

|

||||

|

||||

* **Schema:** `issue_id, component, category, severity, is_unknown, exposure, evidence_date, status, owner`.

|

||||

* **Daily snapshot job:** materialize totals + unknowns + mitigations per component.

|

||||

* **One chart, one table, one heatmap** (don’t overdo it).

|

||||

|

||||

### Copy‑paste labels for the board

|

||||

|

||||

* **Top-left KPI:** “Headroom: 28 pts (green)”

|

||||

* **Badges:** “Unknowns↑ +6 (24h)”, “Risk retired −18 (7d)”, “Exceptions expiring: 3”

|

||||

* **Callout:** “At current burn, overrun in 5 days—pull forward libX fix or scope‑cut Y.”

|

||||

|

||||

If you want, I can mock this with sample data (CSV → chart) so your team sees exactly how it looks.

|

||||

@@ -0,0 +1,33 @@

|

||||

# Archived Triage/Visualization Advisories

|

||||

|

||||

**Archived:** 2025-12-26

|

||||

**Reason:** Consolidated into unified specification

|

||||

|

||||

## Advisory Consolidation

|

||||

|

||||

These 3 advisories contained overlapping concepts about triage UI, visual diffs, and risk budget visualization. They have been consolidated into a single authoritative specification:

|

||||

|

||||

**Consolidated Into:** `docs/modules/web/unified-triage-specification.md`

|

||||

|

||||

## Archived Advisories

|

||||

|

||||

| Advisory | Primary Concepts | Preserved In |
|----------|------------------|--------------|
| `25-Dec-2025 - Triage UI Lessons from Competitors.md` | Snyk/Anchore/Prisma analysis, 4 recommendations | Section 2: Competitive Landscape |
| `25-Dec-2025 - Visual Diffs for Explainable Triage.md` | Side-by-side panes, evidence strip, micro-interactions | Section 3: Core UI Concepts |
| `26-Dec-2026 - Visualizing the Risk Budget.md` | Burn-up charts, heatmaps, exception ledger | Section 4: Risk Budget Visualization |

|

||||

|

||||

## Implementation Sprints

|

||||

|

||||

The consolidated specification is implemented by:

|

||||

|

||||

- `SPRINT_20251226_004_FE_risk_dashboard.md` - Risk budget visualization

|

||||

- `SPRINT_20251226_012_FE_smart_diff_compare.md` - Smart-Diff Compare View

|

||||

- `SPRINT_20251226_013_FE_triage_canvas.md` - Unified Triage Canvas

|

||||

- `SPRINT_20251226_014_DOCS_triage_consolidation.md` - Documentation tasks

|

||||

|

||||

## Cross-References

|

||||

|

||||

If you arrived here via a broken link, the content you're looking for is now in:

|

||||

- `docs/modules/web/unified-triage-specification.md`

|

||||

- `docs/modules/web/smart-diff-ui-architecture.md`

|

||||

@@ -0,0 +1,90 @@

|

||||

Here’s a compact, practical way to rank conflicting vulnerability evidence (VEX) by **freshness vs. confidence**—so your system picks the best truth without hand‑holding.

|

||||

|

||||

---

|

||||

|

||||

# A scoring lattice for VEX sources

|

||||

|

||||

**Goal:** Given multiple signals (VEX statements, advisories, bug trackers, scanner detections), compute a single verdict with a transparent score and a proof trail.

|

||||

|

||||

## 1) Normalize inputs → “evidence atoms”

|

||||

|

||||

For every item, extract:

|

||||

|

||||

* **scope** (package@version, image@digest, file hash)

|

||||

* **claim** (affected, not_affected, under_investigation, fixed)

|

||||

* **reason** (reachable?, feature flag off, vulnerable code not present, platform not impacted)

|

||||

* **provenance** (who said it, how it’s signed)

|

||||

* **when** (issued_at, observed_at, expires_at)

|

||||

* **supporting artifacts** (SBOM ref, in‑toto link, CVE IDs, PoC link)

|

||||

|

||||

## 2) Confidence (C) and Freshness (F)

|

||||

|

||||

**Confidence C (0–1)** (sum the weights of the factors that apply; cap at 1):

|

||||

|

||||

* **Signature strength:** DSSE + Sigstore/Rekor inclusion (0.35), plus hardware‑backed key or org OIDC (0.15)

|

||||

* **Source reputation:** NVD (0.20), major distro PSIRT (0.20), upstream vendor (0.20), reputable CERT (0.15), small vendor (0.10)

|

||||

* **Evidence quality:** reachability proof / test (0.25), code diff linking (0.20), deterministic build link (0.15), “reason” present (0.10)

|

||||

* **Consensus bonus:** ≥2 independent concurring sources (+0.10)
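
A minimal sketch of the confidence calculation follows. It takes the weights from the list above and follows the worked example in section 5, which adds the weights of the factors that apply and caps the total at 1. The dictionary keys and the `confidence` function are hypothetical names chosen for this example.

```python
SIGNATURE = {"dsse_rekor": 0.35, "hardware_or_oidc": 0.15}
REPUTATION = {
    "nvd": 0.20,
    "distro_psirt": 0.20,
    "upstream_vendor": 0.20,
    "cert": 0.15,
    "small_vendor": 0.10,
}
EVIDENCE = {
    "reachability_proof": 0.25,
    "code_diff": 0.20,
    "deterministic_build": 0.15,
    "reason_present": 0.10,
}
CONSENSUS_BONUS = 0.10


def confidence(
    signature: list[str],
    source: str,
    evidence: list[str],
    concurring_sources: int = 0,
) -> float:
    """Add the weights of every factor that applies, then cap at 1.0."""
    total = sum(SIGNATURE[s] for s in signature)
    total += REPUTATION[source]
    total += sum(EVIDENCE[e] for e in evidence)
    if concurring_sources >= 2:  # two or more independent concurring sources
        total += CONSENSUS_BONUS
    return min(1.0, total)
```

For instance, a distro PSIRT statement with both signature factors and a linked code diff comes to roughly 0.90, and adding every evidence factor hits the cap.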

|

||||

|

||||

**Freshness F (0–1)** (monotone decay):

|

||||

|

||||

* F = exp(−Δdays / τ) with τ tuned per source class (e.g., **τ=30** vendor VEX, **τ=90** NVD, **τ=14** exploit‑active feeds).

|

||||

* **Update reset:** new attestation with same subject resets Δdays.

|

||||

* **Expiry clamp:** if `now > expires_at`, set F=0.

|

||||

|

||||

## 3) Claim strength (S_claim)

|

||||

|

||||

Map claim → base weight:

|

||||

|

||||

* not_affected (0.9), fixed (0.8), affected (0.7), under_investigation (0.4)

|

||||

* **Reason bonuses (added to the base weight; cap S_claim at 1):** reachable? (+0.15 to “affected”), “feature flag off” (+0.10 to “not_affected”), platform mismatch (+0.10), backport patch note (+0.10 if patch commit hash provided)

|

||||

|

||||

## 4) Overall score & lattice merge

|

||||

|

||||

Per evidence `e`:

|

||||

**Score(e) = C(e) × F(e) × S_claim(e)**

|

||||

|

||||

Then, merge in a **distributive lattice** ordered by:

|

||||

|

||||

1. **Claim precedence** (not_affected > fixed > affected > under_investigation)

|

||||

2. Break ties by **Score(e)**

|

||||

3. If competing top claims within ε (e.g., 0.05), **escalate to “disputed”** and surface both with proofs.
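
The merge rule can be read in more than one way, since the text does not say exactly how precedence and score interact. The sketch below takes the literal reading: rank by claim precedence, break ties by score, and escalate to "disputed" when evidence for a different claim scores within ε of the winner. The `Evidence` type, the `merge` function, and the scores in any example are hypothetical.

```python
from dataclasses import dataclass

# Claim precedence from the text, strongest first.
PRECEDENCE = ["not_affected", "fixed", "affected", "under_investigation"]


@dataclass(frozen=True)
class Evidence:
    source: str
    claim: str
    score: float  # Score(e) = C(e) * F(e) * S_claim(e)


def merge(evidence: list[Evidence], epsilon: float = 0.05) -> tuple[str, list[Evidence]]:
    """Return (verdict, supporting evidence); verdict may be 'disputed'."""
    if not evidence:
        raise ValueError("no evidence to merge")
    # Rank by precedence first, then by descending score within a claim.
    ranked = sorted(evidence, key=lambda e: (PRECEDENCE.index(e.claim), -e.score))
    winner = ranked[0]
    rivals = [
        e for e in ranked[1:]
        if e.claim != winner.claim and abs(e.score - winner.score) <= epsilon
    ]
    if rivals:
        # Surface both sides with their proofs instead of picking one.
        return "disputed", [winner, *rivals]
    return winner.claim, [winner]
```

Under this reading a high-precedence claim wins even with a low score, so a real policy might also want to escalate when a lower-precedence claim clearly outscores the winner; that choice belongs in the policy hooks.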

|

||||

|

||||

**Policy hooks:** allow org‑level overrides (e.g., “prod must treat ‘under_investigation’ as affected unless reachability=false proof present”).

|

||||

|

||||

## 5) Worked example: small‑vendor Sigstore VEX vs 6‑month‑old NVD note

|

||||

|

||||

* **Small vendor VEX (signed, Sigstore, reason: code path unreachable, issued 7 days ago):**
  C ≈ signature (0.35) + small‑vendor (0.10) + reason (0.10) + evidence (reachability +0.25) = 0.80
  F = exp(−7/30) ≈ 0.79
  S_claim (not_affected + reason) = 0.9 + 0.10 = 1.0 (cap at 1)
  **Score ≈ 0.80 × 0.79 × 1.0 = 0.63**

|

||||

|

||||

* **NVD entry (affected; no extra reasoning; last updated 180 days ago):**
  C ≈ NVD (0.20) = 0.20
  F = exp(−180/90) ≈ 0.14
  S_claim (affected) = 0.7
  **Score ≈ 0.20 × 0.14 × 0.7 = 0.02**

|

||||

|

||||

**Outcome:** vendor VEX decisively wins; lattice yields **not_affected** with linked proofs. If NVD updates tomorrow, its F jumps and the lattice may flip—deterministically.

|

||||

|

||||

## 6) Implementation notes (fits Stella Ops modules)

|

||||

|

||||

* **Where:** run in **scanner.webservice** (per your standing rule), keep Concelier/Excitors as preserve‑prune pipes.

|

||||

* **Storage:** Postgres as SoR; Valkey as cache for score shards.

|

||||

* **Inputs:** CycloneDX/SPDX IDs, in‑toto attestations, Rekor proofs, feed timestamps.

|

||||

* **Outputs:**

  * **Signed “verdict attestation”** (OCI‑attached) with inputs’ hashes + chosen path in lattice.
  * **Delta verdicts** when any input changes (freshness decay counts as change).

|

||||

* **UI:** “Trust Algebra” panel showing (C,F,S_claim), decay timeline, and “why this won.”

|

||||

|

||||

## 7) Guardrails & ops

|

||||

|

||||

* **Replayability:** include τ values, weights, and source catalog in the attested policy so anyone can recompute the same score.

|

||||

* **Backports:** add a “patch‑aware” booster only if commit hash maps to shipped build (prove via diff or package changelog).

|

||||

* **Air‑gapped:** mirror Rekor; cache trust anchors; freeze decay at scan time but recompute at policy‑evaluation time.

|

||||

|

||||

---

|

||||

|

||||

If you want, I can drop this into a ready‑to‑run JSON/YAML policy bundle (with τ/weights defaults) and a tiny C# evaluator stub you can wire into **Policy Engine → Vexer** right away.

|

||||

@@ -0,0 +1,59 @@

|

||||

# Archived VEX Scoring Advisory

|

||||

|

||||

**Archived:** 2025-12-26

|

||||

**Reason:** Concepts substantially implemented in VexLens module

|

||||

|

||||

## Advisory Status

|

||||

|

||||

The "Weighted Confidence for VEX Sources" advisory described a scoring lattice for VEX sources using the formula `Score(e) = C(e) × F(e) × S_claim(e)`. This is **substantially implemented** in the existing codebase.

|

||||

|

||||

## Existing Implementation

|

||||

|

||||

### SourceTrustScoreCalculator (VexLens)

|

||||

Location: `src/VexLens/StellaOps.VexLens/Trust/SourceTrust/SourceTrustScoreCalculator.cs`

|

||||

|

||||

Implements multi-dimensional trust scoring:

|

||||

- **Authority Score** - Source category (Vendor, Distributor, Community, Internal, Aggregator), trust tier, official source bonus

|

||||

- **Accuracy Score** - Confirmation rate, false positive rate, revocation rate, consistency

|

||||

- **Timeliness Score** - Response time, update frequency, freshness

|

||||

- **Coverage Score** - CVE coverage ratio, product breadth, completeness

|

||||

- **Verification Score** - Signature validity, provenance integrity, issuer verification

|

||||

|

||||

### TrustDecayService (VexLens)

|

||||

Location: `src/VexLens/StellaOps.VexLens/Trust/SourceTrust/TrustDecayService.cs`

|

||||

|

||||

Implements freshness decay with configurable τ values per source class.

|

||||

|

||||

### TrustLatticeEngine (Policy)

|

||||

Location: `src/Policy/__Libraries/StellaOps.Policy/TrustLattice/TrustLatticeEngine.cs`

|

||||

|

||||

Implements:

|

||||

- K4 lattice with security atoms (Present, Applies, Reachable, Mitigated, Fixed, Misattributed)

|

||||

- VEX normalization (CycloneDX, OpenVEX, CSAF)

|

||||

- Proof bundle generation with decision traces

|

||||

- Lattice merge with claim precedence

|

||||

|

||||

### VexConsensusEngine (VexLens)

|

||||

Location: `src/VexLens/StellaOps.VexLens/Consensus/VexConsensusEngine.cs`

|

||||

|

||||

Handles multi-source VEX consensus with configurable merge policies.

|

||||

|

||||

## Advisory Concepts → Implementation Mapping

|

||||

|

||||

| Advisory Concept | Implementation |
|------------------|----------------|
| Confidence C(e) factors | `SourceTrustScoreCalculator` with 5 component scores |
| Freshness F(e) decay | `TrustDecayService` with configurable τ per source |
| Claim strength S_claim | `TrustLatticeEngine` security atoms + disposition selector |
| Lattice merge ordering | `K4Lattice` with precedence rules |
| Signature strength | `VerificationScoreCalculator` with signature/provenance scoring |
| Consensus bonus | `VexConsensusEngine` with multi-source corroboration |
| Policy hooks | `PolicyBundle` with override rules |
| Replay/determinism | `ProofBundle` with decision traces |

|

||||

|

||||

## Cross-References

|

||||

|

||||

If you arrived here via a broken link, see:

|

||||

- `src/VexLens/StellaOps.VexLens/Trust/` for trust scoring

|

||||

- `src/Policy/__Libraries/StellaOps.Policy/TrustLattice/` for lattice logic

|

||||

- `docs/modules/vexlens/` for VexLens documentation

|

||||
