archive advisories

This commit is contained in:

@@ -0,0 +1,224 @@

|

||||

# DSSE‑Signed Offline Scanner Updates — Developer Guidelines

|

||||

|

||||

> **Date:** 2025-12-01

|

||||

> **Status:** Advisory draft (from user-provided guidance)

|

||||

> **Scope:** Offline vulnerability DB bundles (Scanner), DSSE+Rekor v2 verification, offline kit alignment, activation rules, ops playbook.

|

||||

|

||||

Here’s a tight, practical pattern to make your scanner’s vuln‑DB updates rock‑solid even when feeds hiccup:

|

||||

|

||||

## Offline, verifiable update bundles (DSSE + Rekor v2)

|

||||

|

||||

**Idea:** distribute DB updates as offline tarballs. Each tarball ships with:

|

||||

|

||||

* a **DSSE‑signed** statement (e.g., in‑toto style) over the bundle hash

|

||||

* a **Rekor v2 receipt** proving the signature/statement was logged

|

||||

* a small **manifest.json** (version, created_at, content hashes)

|

||||

|

||||

**Startup flow (happy path):**

|

||||

|

||||

1. Load latest tarball from your local `updates/` cache.

|

||||

2. Verify DSSE signature against your trusted public keys.

|

||||

3. Verify Rekor v2 receipt (inclusion proof) matches the DSSE payload hash.

|

||||

4. If both pass, unpack/activate; record the bundle’s **trust_id** (e.g., statement digest).

|

||||

5. If anything fails, **keep using the last good bundle**. No service disruption.

|

||||

|

||||

**Why this helps**

|

||||

|

||||

* **Air‑gap friendly:** no live network needed at activation time.

|

||||

* **Tamper‑evident:** DSSE + Rekor receipt proves provenance and transparency.

|

||||

* **Operational stability:** feed outages become non‑events—scanner just keeps the last good state.

|

||||

|

||||

---

|

||||

|

||||

## File layout inside each bundle

|

||||

|

||||

```

|

||||

/bundle-2025-11-29/

|

||||

manifest.json # { version, created_at, entries[], sha256s }

|

||||

payload.tar.zst # the actual DB/indices

|

||||

payload.tar.zst.sha256

|

||||

statement.dsse.json # DSSE-wrapped statement over payload hash

|

||||

rekor-receipt.json # Rekor v2 inclusion/verification material

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## Acceptance/Activation rules

|

||||

|

||||

* **Trust root:** pin one (or more) publisher public keys; rotate via separate, out‑of‑band process.

|

||||

* **Monotonicity:** only activate if `manifest.version > current.version` (or if trust policy explicitly allows replay for rollback testing).

|

||||

* **Atomic switch:** unpack to `db/staging/`, validate, then symlink‑flip to `db/active/`.

|

||||

* **Quarantine on failure:** move bad bundles to `updates/quarantine/` with a reason code.

|

||||

|

||||

---

|

||||

|

||||

## Minimal .NET 10 verifier sketch (C#)

|

||||

|

||||

```csharp

|

||||

public sealed record BundlePaths(string Dir) {

|

||||

public string Manifest => Path.Combine(Dir, "manifest.json");

|

||||

public string Payload => Path.Combine(Dir, "payload.tar.zst");

|

||||

public string Dsse => Path.Combine(Dir, "statement.dsse.json");

|

||||

public string Receipt => Path.Combine(Dir, "rekor-receipt.json");

|

||||

}

|

||||

|

||||

public async Task<bool> ActivateBundleAsync(BundlePaths b, TrustConfig trust, string activeDir) {

|

||||

var manifest = await Manifest.LoadAsync(b.Manifest);

|

||||

if (!await Hashes.VerifyAsync(b.Payload, manifest.PayloadSha256)) return false;

|

||||

|

||||

// 1) DSSE verify (publisher keys pinned in trust)

|

||||

var (okSig, dssePayloadDigest) = await Dsse.VerifyAsync(b.Dsse, trust.PublisherKeys);

|

||||

if (!okSig || dssePayloadDigest != manifest.PayloadSha256) return false;

|

||||

|

||||

// 2) Rekor v2 receipt verify (inclusion + statement digest == dssePayloadDigest)

|

||||

if (!await RekorV2.VerifyReceiptAsync(b.Receipt, dssePayloadDigest, trust.RekorPub)) return false;

|

||||

|

||||

// 3) Stage, validate, then atomically flip

|

||||

var staging = Path.Combine(activeDir, "..", "staging");

|

||||

DirUtil.Empty(staging);

|

||||

await TarZstd.ExtractAsync(b.Payload, staging);

|

||||

if (!await LocalDbSelfCheck.RunAsync(staging)) return false;

|

||||

|

||||

SymlinkUtil.AtomicSwap(source: staging, target: activeDir);

|

||||

State.WriteLastGood(manifest.Version, dssePayloadDigest);

|

||||

return true;

|

||||

}

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## Operational playbook

|

||||

|

||||

* **On boot & daily at HH:MM:** try `ActivateBundleAsync()` on the newest bundle; on failure, log and continue.

|

||||

* **Telemetry (no PII):** reason codes (SIG_FAIL, RECEIPT_FAIL, HASH_MISMATCH, SELFTEST_FAIL), versions, last_good.

|

||||

* **Keys & rotation:** keep `publisher.pub` and `rekor.pub` in a root‑owned, read‑only path; rotate via a separate signed “trust bundle”.

|

||||

* **Defense‑in‑depth:** verify both the **payload hash** and each file’s hash listed in `manifest.entries[]`.

|

||||

* **Rollback:** allow `--force-activate <bundle>` for emergency testing, but mark as **non‑monotonic** in state.

|

||||

|

||||

---

|

||||

|

||||

## What to hand your release team

|

||||

|

||||

* A Make/CI target that:

|

||||

1. Builds `payload.tar.zst` and computes hashes

|

||||

2. Generates `manifest.json`

|

||||

3. Creates and signs the **DSSE statement**

|

||||

4. Submits to Rekor (or your mirror) and saves the **v2 receipt**

|

||||

5. Packages the bundle folder and publishes to your offline repo

|

||||

* A checksum file (`*.sha256sum`) for ops to verify out‑of‑band.

|

||||

|

||||

---

|

||||

|

||||

If you want, I can turn this into a Stella Ops spec page (`docs/modules/scanner/offline-bundles.md`) plus a small reference implementation (C# library + CLI) that drops right into your Scanner service.

|

||||

|

||||

---

|

||||

|

||||

# Drop‑in Stella Ops Dev Guide (seed for `docs/modules/scanner/development/dsse-offline-updates.md`)

|

||||

|

||||

> **Audience**

|

||||

> Scanner, Export Center, Attestor, CLI, and DevOps engineers implementing DSSE‑signed offline vulnerability updates and integrating them into the Offline Update Kit (OUK).

|

||||

>

|

||||

> **Context**

|

||||

>

|

||||

> * OUK already ships **signed, atomic offline update bundles** with merged vulnerability feeds, container images, and an attested manifest.

|

||||

> * DSSE + Rekor is already used for **scan evidence** (SBOM attestations, Rekor proofs).

|

||||

> * Sprints 160/162 add **attestation bundles** with manifest, checksums, DSSE signature, and optional transparency log segments, and integrate them into OUK and CLI flows.

|

||||

|

||||

These guidelines tell you how to **wire all of that together** for “offline scanner updates” (feeds, rules, packs) in a way that matches Stella Ops’ determinism + sovereignty promises.

|

||||

|

||||

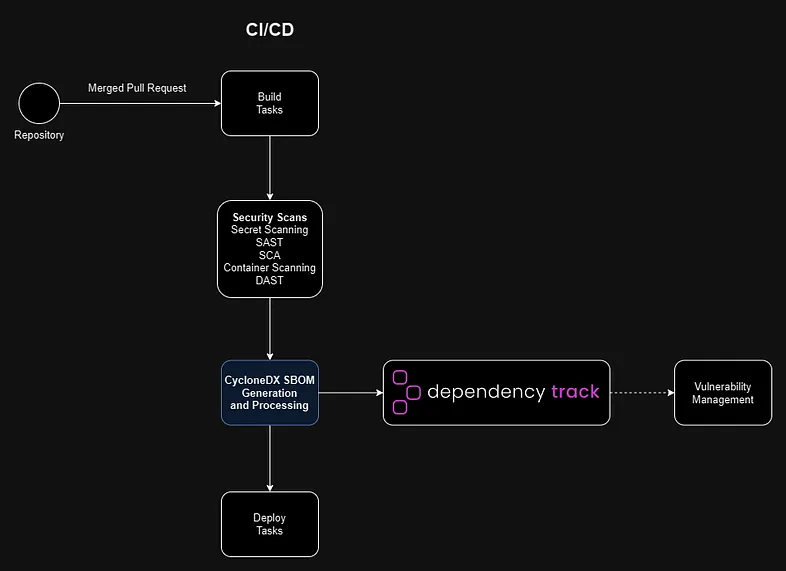

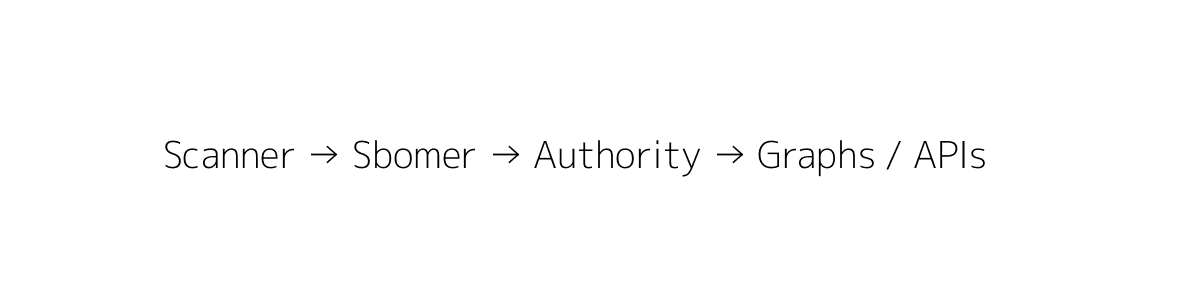

## 0. Mental model

|

||||

|

||||

```text

|

||||

Advisory mirrors / Feeds builders

|

||||

│

|

||||

▼

|

||||

ExportCenter.AttestationBundles

|

||||

(creates DSSE + Rekor evidence

|

||||

for each offline update snapshot)

|

||||

│

|

||||

▼

|

||||

Offline Update Kit (OUK) builder

|

||||

(adds feeds + evidence to kit tarball)

|

||||

│

|

||||

▼

|

||||

stella offline kit import / admin CLI

|

||||

(verifies Cosign + DSSE + Rekor segments,

|

||||

then atomically swaps scanner feeds)

|

||||

```

|

||||

|

||||

Online, Rekor is live; offline, you rely on **bundled Rekor segments / snapshots** and the existing OUK mechanics (import is atomic, old feeds kept until new bundle is fully verified).

|

||||

|

||||

## 1. Goals & non‑goals

|

||||

|

||||

### Goals

|

||||

|

||||

1. **Authentic offline snapshots**: every offline scanner update (OUK or delta) must be verifiably tied to a DSSE envelope, a certificate chain, and a Rekor v2 inclusion proof or bundled log segment.

|

||||

2. **Deterministic replay**: given a kit + its DSSE attestation bundle, every verifier reaches the same verdict online or air‑gapped.

|

||||

3. **Separation of concerns**: Export Center builds attestations; Scanner verifies/imports; Signer/Attestor handle DSSE/Rekor.

|

||||

4. **Operational safety**: imports remain atomic/idempotent; old feeds stay live until full verification.

|

||||

|

||||

### Non‑goals

|

||||

|

||||

* Designing new crypto or log formats.

|

||||

* Per‑feed DSSE envelopes (minimum contract is bundle‑level attestation).

|

||||

|

||||

## 2. Bundle contract for DSSE‑signed offline updates

|

||||

|

||||

**Files to ship** (inside each offline kit or delta):

|

||||

|

||||

```

|

||||

/attestations/

|

||||

offline-update.dsse.json # DSSE envelope

|

||||

offline-update.rekor.json # Rekor entry + inclusion proof (or segment descriptor)

|

||||

/manifest/

|

||||

offline-manifest.json # existing manifest

|

||||

offline-manifest.json.jws # existing detached JWS

|

||||

/feeds/

|

||||

... # existing feed payloads

|

||||

```

|

||||

|

||||

**DSSE payload (minimum):** subject = kit name + tarball sha256; predicate fields include `offline_manifest_sha256`, feed entries, builder ID/commit, created_at UTC, and channel.

|

||||

|

||||

**Rekor material:** submit DSSE to Rekor v2, store UUID/logIndex/inclusion proof as `offline-update.rekor.json`; for offline, embed a minimal log segment or rely on mirrored snapshots.

|

||||

|

||||

## 3. Implementation by module

|

||||

|

||||

### Export Center — attestation bundles

|

||||

* Compose attestation job: build DSSE payload, sign via Signer, submit to Rekor via Attestor, persist `offline-update.dsse.json` and `.rekor.json` (+ segments).

|

||||

* Integrate into offline kit packaging: place attestation files under `/attestations/`; list them in `offline-manifest.json` with sha256/size/capturedAt.

|

||||

* Define JSON schema for `offline-update.rekor.json`; version it and validate.

|

||||

|

||||

### Offline Update Kit builder

|

||||

* Preserve idempotent, atomic imports; include DSSE/Rekor files in kit staging tree.

|

||||

* Keep manifests deterministic: ordered file lists, UTC timestamps.

|

||||

* For delta kits, attest the resulting snapshot state (not just diffs).

|

||||

|

||||

### Scanner — import & activation

|

||||

* Verification sequence: Cosign tarball → manifest JWS → file digests (incl. attestation files) → DSSE verify → Rekor verify (online or offline segment) → atomic swap.

|

||||

* Config surface: `requireDsse`, `rekorOfflineMode`, `attestationVerifier` (env-var mirrored); allow observe→enforce rollout.

|

||||

* Failure behavior: keep old feeds; log structured failure fields; expose ProblemDetails.

|

||||

|

||||

### Signer & Attestor

|

||||

* Add predicate type/schema for offline updates; submit DSSE to Rekor; emit verification routines usable by CLI/Scanner with offline snapshot support.

|

||||

|

||||

### CLI & UI

|

||||

* CLI verbs to verify/import bundles with Rekor key; UI shows Cosign/JWS + DSSE/Rekor status and kit freshness.

|

||||

|

||||

## 4. Determinism & offline‑safety rules

|

||||

* No hidden network dependencies; offline must succeed with kit + Rekor snapshot.

|

||||

* Stable JSON serialization; UTC timestamps.

|

||||

* Replayable imports (idempotent); DSSE payload for a snapshot must be immutable.

|

||||

* Explainable failures with precise mismatch points.

|

||||

|

||||

## 5. Testing & CI expectations

|

||||

* Unit/integration: happy path, tampering cases (manifest/DSSE/Rekor), offline mode, rollback logic.

|

||||

* Metrics: `offlinekit_import_total{status}`, `attestation_verify_latency_seconds`, Rekor success/retry counts.

|

||||

* Golden fixtures: deterministic bundle + snapshot tests.

|

||||

|

||||

## 6. Developer checklist (TL;DR)

|

||||

1. Read operator guides: `docs/modules/scanner/operations/dsse-rekor-operator-guide.md`, `docs/24_OFFLINE_KIT.md`, relevant sprints.

|

||||

2. Implement: generate DSSE in Export Center; include attestation in kit; Scanner verifies before swap.

|

||||

3. Test: bundle composition, import rollback, determinism.

|

||||

4. Telemetry: counters + latency; log digests/UUIDs.

|

||||

5. Document: update Export Center and Scanner architecture docs, OUK docs when flows/contracts change.

|

||||

|

||||

@@ -0,0 +1,130 @@

|

||||

# Proof-Linked VEX UI Developer Guidelines

|

||||

|

||||

Compiled: 2025-12-01 (UTC)

|

||||

|

||||

## Purpose

|

||||

Any VEX-influenced verdict a user sees (Findings, Advisories & VEX, Vuln Explorer, etc.) must be directly traceable to concrete evidence: normalized VEX claims, their DSSE/signatures, and the policy explain trace. Every "Not Affected" badge is a verifiable link to the proof.

|

||||

|

||||

## What this solves (in one line)

|

||||

Every "Not Affected" badge becomes a verifiable link to why it is safe.

|

||||

|

||||

## UX pattern (at a glance)

|

||||

- Badge: `Not Affected` (green pill) always renders as a link.

|

||||

- On click: open a right-side drawer with three tabs:

|

||||

1. Evidence (DSSE / in-toto / Sigstore)

|

||||

2. Attestation (predicate details + signer)

|

||||

3. Reasoning Graph (the node + edges that justify the verdict)

|

||||

- Hover state: mini popover showing proof types available (e.g., "DSSE, SLSA attestation, Graph node").

|

||||

|

||||

## Data model (API & DB)

|

||||

Canonical object returned by VEX API for each finding:

|

||||

|

||||

```json

|

||||

{

|

||||

"findingId": "vuln:CVE-2024-12345@pkg:docker/alpine@3.19",

|

||||

"status": "not_affected",

|

||||

"justificationCode": "vex:not_present",

|

||||

"proof": {

|

||||

"dsse": {

|

||||

"envelopeDigest": "sha256-…",

|

||||

"rekorEntryId": "e3f1…",

|

||||

"downloadUrl": "https://…/dsse/e3f1…",

|

||||

"signer": { "name": "StellaOps Authority", "keyId": "SHA256:…" }

|

||||

},

|

||||

"attestation": {

|

||||

"predicateType": "slsa/v1",

|

||||

"attestationId": "att:01H…",

|

||||

"downloadUrl": "https://…/att/01H…"

|

||||

},

|

||||

"graph": {

|

||||

"nodeId": "gx:NA-78f…",

|

||||

"revision": "gx-r:2025-11-30T12:01:22Z",

|

||||

"explainUrl": "https://…/graph?rev=gx-r:…&node=NA-78f…"

|

||||

}

|

||||

},

|

||||

"receipt": {

|

||||

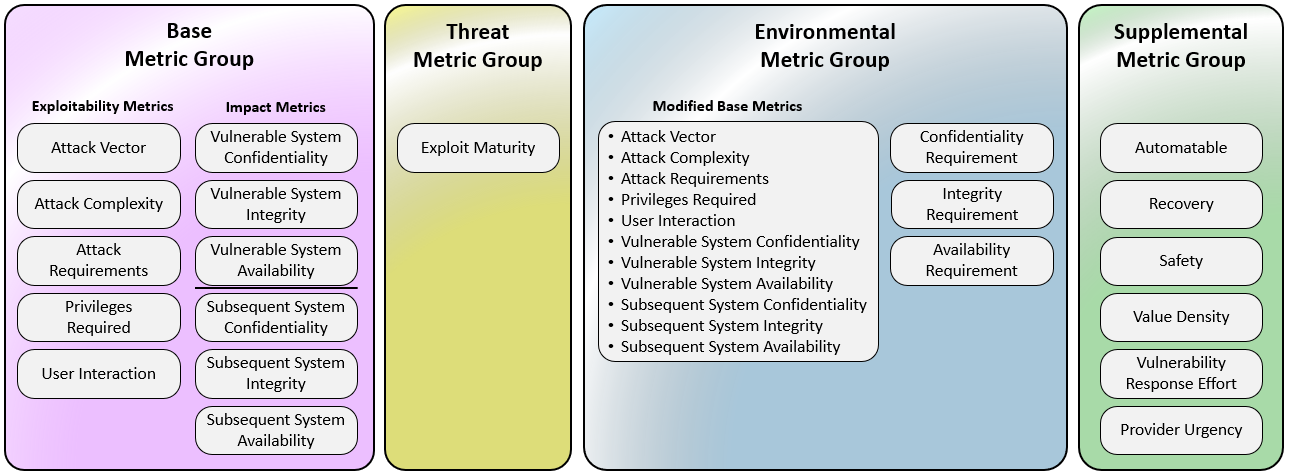

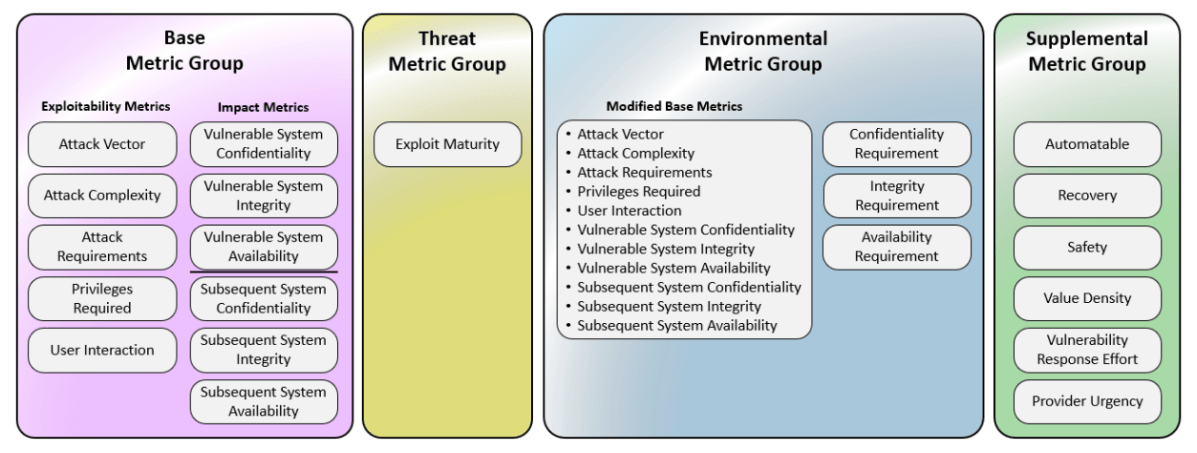

"algorithm": "CVSS:4.0",

|

||||

"inputsHash": "sha256-…",

|

||||

"computedAt": "2025-11-30T12:01:22Z"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

Suggested Postgres tables:

|

||||

- vex_findings(finding_id, status, justification_code, graph_node_id, graph_rev, dsse_digest, rekor_id, attestation_id, created_at, updated_at)

|

||||

- proof_artifacts(id, type, digest, url, signer_keyid, meta jsonb)

|

||||

- graph_revisions(revision_id, created_at, root_hash)

|

||||

|

||||

## API contract (minimal)

|

||||

- GET /vex/findings/:id -> returns the object above.

|

||||

- GET /proofs/:type/:id -> streams artifact (with Content-Disposition: attachment).

|

||||

- GET /graph/explain?rev=…&node=… -> returns a JSON justification subgraph.

|

||||

- Security headers: Content-SHA256, Digest, X-Proof-Root (graph root hash), and X-Signer-KeyId.

|

||||

|

||||

## Angular UI spec (drop-in)

|

||||

Component: FindingStatusBadge

|

||||

|

||||

```html

|

||||

<button class="badge badge--ok" (click)="drawer.open(finding.proof)">

|

||||

Not Affected

|

||||

</button>

|

||||

```

|

||||

|

||||

Drawer layout (3 tabs):

|

||||

1) Evidence

|

||||

- DSSE digest (copy button)

|

||||

- Rekor entry (open in new tab)

|

||||

- "Download envelope"

|

||||

2) Attestation

|

||||

- Predicate type

|

||||

- Attestation ID

|

||||

- "Download attestation"

|

||||

3) Reasoning Graph

|

||||

- Node ID + Revision

|

||||

- Inline explainer ("Why safe?" bullets)

|

||||

- "Open full graph" (routes to /graph?rev=…&node=…)

|

||||

|

||||

Micro-interactions:

|

||||

- Copy-to-clipboard with toast ("Digest copied").

|

||||

- If any artifact missing, show a yellow "Partial Proof" ribbon listing what is absent.

|

||||

|

||||

Visual language:

|

||||

- Badges: Not Affected = solid green; Partial Proof = olive with warning dot; No Proof = gray outline (still clickable, explains absence).

|

||||

- Proof chips: small caps labels `DSSE`, `ATTESTATION`, `GRAPH`; each chip opens its subsection.

|

||||

|

||||

Validation (trust & integrity):

|

||||

- On drawer open, the UI calls HEAD /proofs/... to fetch Digest header and X-Proof-Root; compare to stored digests. If mismatch, show red "Integrity Mismatch" banner with retry and report.

|

||||

|

||||

Telemetry (debugging):

|

||||

- Emit events: proof_drawer_opened, proof_artifact_downloaded, graph_explain_viewed (include findingId, artifactType, latencyMs, integrityStatus).

|

||||

|

||||

Developer checklist:

|

||||

- Every not_affected status must include at least one proof artifact.

|

||||

- Badge is never a dead label; always clickable.

|

||||

- Drawer validates artifact digests before rendering contents.

|

||||

- "Open full graph" deep-links with rev + node (stable and shareable).

|

||||

- Graceful partials: show what is present and what is missing.

|

||||

- Accessibility: focus trap in drawer, aria-labels for chips, keyboard nav.

|

||||

|

||||

Test cases (quick):

|

||||

1) Happy path: all three proofs present; digests match; downloads work.

|

||||

2) Partial proof: DSSE present, attestation missing; drawer shows warning ribbon.

|

||||

3) Integrity fail: server returns different digest; red banner appears; badge stays clickable.

|

||||

4) Graph only: reasoning node present; DSSE/attestation absent; explains rationale clearly.

|

||||

|

||||

Optional nice-to-haves:

|

||||

- Permalinks: copyable URL that re-opens the drawer to the same tab.

|

||||

- QR export: downloadable "proof card" PNG with digests + signer (for audit packets).

|

||||

- Offline kit: bundle DSSE, attestation, and a compact graph slice in a .tar.zst for air-gapped review.

|

||||

|

||||

If needed, this can be turned into:

|

||||

- A small Angular module (ProofDrawerModule) + styles.

|

||||

- A .NET 10 controller stub with integrity headers.

|

||||

- Fixture JSON so teams can wire it up quickly.

|

||||

|

||||

## Context links (from source conversation)

|

||||

- docs/ui/console-overview.md

|

||||

- docs/ui/advisories-and-vex.md

|

||||

- docs/ui/findings.md

|

||||

- src/VexLens/StellaOps.VexLens/AGENTS.md and TASKS.md

|

||||

- docs/policy/overview.md

|

||||

@@ -0,0 +1,95 @@

|

||||

# 01-Dec-2025 - Storage Blueprint for PostgreSQL Modules

|

||||

|

||||

## Summary

|

||||

A crisp, opinionated storage blueprint for StellaOps modules with zero-downtime conversion tactics. Covers module-to-store mapping, PostgreSQL patterns, JSONB/RLS scaffolds, materialized views, temporal tables, CAS usage for SBOM/VEX blobs, and phased cutover guidance.

|

||||

|

||||

## Module → Store Map (deterministic by default)

|

||||

- **Authority / OAuth / Accounts & Audit**: PostgreSQL source of truth; tables `users`, `clients`, `oauth_tokens`, `roles`, `grants`, `audit_log`; RLS on `users`, `grants`, `audit_log`; strict FK/CHECK; immutable UUID PKs; audit table with actor/action/entity/diff and timestamptz default now().

|

||||

- **VEX & Vulnerability Writes**: PostgreSQL with JSONB facts plus relational decisions; tables `vuln_fact(jsonb)`, `vex_decision(package_id, vuln_id, status, rationale, proof_ref, updated_at)`; materialized views like `mv_triage_hotset` refreshed on commit or schedule.

|

||||

- **Routing / Feature Flags / Rate-limits**: PostgreSQL truth plus Redis cache; tables `feature_flag(key, rules jsonb, version)`, `route(domain, service, instance_id, last_heartbeat)`, `rate_limiter(bucket, quota, interval)`; Redis keys `flag:{key}:{version}`, `route:{domain}`, `rl:{bucket}` with short TTLs.

|

||||

- **Unknowns Registry**: PostgreSQL with temporal tables (bitemporal via `valid_from/valid_to`, `sys_from/ sys_to`); view `unknowns_current` for open rows.

|

||||

- **Artifacts / SBOM / VEX files**: OCI-compatible CAS (e.g., self-hosted registry or MinIO) keyed by digest; metadata in Postgres `artifact_index(digest, media_type, size, signatures)`.

|

||||

|

||||

## PostgreSQL Implementation Essentials

|

||||

- **RLS scaffold (Authority)**

|

||||

```sql

|

||||

alter table audit_log enable row level security;

|

||||

create policy p_audit_read_self

|

||||

on audit_log for select

|

||||

using (

|

||||

actor_id = current_setting('app.user_id')::uuid

|

||||

or exists (

|

||||

select 1 from grants g

|

||||

where g.user_id = current_setting('app.user_id')::uuid

|

||||

and g.role = 'auditor'

|

||||

)

|

||||

);

|

||||

```

|

||||

|

||||

- **JSONB facts + relational decisions**

|

||||

```sql

|

||||

create table vuln_fact (

|

||||

id uuid primary key default gen_random_uuid(),

|

||||

source text not null,

|

||||

payload jsonb not null,

|

||||

received_at timestamptz default now()

|

||||

);

|

||||

|

||||

create table vex_decision (

|

||||

package_id uuid not null,

|

||||

vuln_id text not null,

|

||||

status text check (status in ('not_affected','affected','fixed','under_investigation')),

|

||||

rationale text,

|

||||

proof_ref text,

|

||||

decided_at timestamptz default now(),

|

||||

primary key (package_id, vuln_id)

|

||||

);

|

||||

```

|

||||

|

||||

- **Materialized view for triage**

|

||||

```sql

|

||||

create materialized view mv_triage_hotset as

|

||||

select v.id as fact_id, v.payload->>'vuln' as vuln, v.received_at

|

||||

from vuln_fact v

|

||||

where (now() - v.received_at) < interval '7 days';

|

||||

-- refresh concurrently via job

|

||||

```

|

||||

|

||||

- **Temporal pattern (Unknowns)**

|

||||

```sql

|

||||

create table unknowns (

|

||||

id uuid primary key default gen_random_uuid(),

|

||||

subject_hash text not null,

|

||||

kind text not null,

|

||||

context jsonb not null,

|

||||

valid_from timestamptz not null default now(),

|

||||

valid_to timestamptz,

|

||||

sys_from timestamptz not null default now(),

|

||||

sys_to timestamptz

|

||||

);

|

||||

|

||||

create view unknowns_current as

|

||||

select * from unknowns where valid_to is null;

|

||||

```

|

||||

|

||||

## Conversion (not migration): zero-downtime, prototype-friendly

|

||||

1. Encode Mongo-shaped docs into JSONB with versioned schemas and forward-compatible projection views.

|

||||

2. Outbox pattern for exactly-once side-effects (`outbox(id, topic, key, payload jsonb, created_at, dispatched bool default false)`).

|

||||

3. Parallel read adapters behind feature flags with read-diff monitoring.

|

||||

4. CDC for analytics without coupling (logical replication).

|

||||

5. Materialized views with fixed refresh cadence; cold-path analytics in separate schemas.

|

||||

6. Phased cutover playbook: Dark Read → Shadow Serve → Authoritative → Retire (with auto-rollback).

|

||||

|

||||

## Rate-limits & Flags: single truth, fast edges

|

||||

- Truth in Postgres with versioned flag docs; history table for changes; Redis edge cache keyed by version; rate-limit quotas in Postgres, counters in Redis with reconciliation jobs.

|

||||

|

||||

## CAS for SBOM/VEX/attestations

|

||||

- Store blobs in OCI/MinIO by digest; keep pointer metadata in Postgres `artifact_index`; benefits: immutability, dedup, easy offline mirroring.

|

||||

|

||||

## Guardrails

|

||||

- Wrap multi-table writes in single transactions; prefer `jsonb_path_query` for targeted reads; enforce RLS and least-privilege roles; version everything (schemas, flags, materialized views); expose observability for statements, MV refresh latency, outbox lag, Redis hit ratio, and RLS hits.

|

||||

|

||||

## Optional Next Steps (from advisory)

|

||||

- Generate ready-to-run EF Core 10 migrations.

|

||||

- Add `/docs/architecture/store-map.md` summarizing these patterns.

|

||||

- Provide a small Docker-based dev seed with sample data.

|

||||

@@ -0,0 +1,38 @@

|

||||

# Verifiable Proof Spine: Receipts + Benchmarks

|

||||

|

||||

Compiled: 2025-12-01 (UTC)

|

||||

|

||||

## Why this matters

|

||||

Move from “trust the scanner” to “prove every verdict.” Each finding and every “not affected” claim must carry cryptographic, replayable evidence that anyone can verify offline or online.

|

||||

|

||||

## Differentiators to build in

|

||||

- **Graph Revision ID on every verdict**: stable Merkle root over SBOM nodes/edges, policies, feeds, scan params, and tool versions. Any data change → new graph hash → new revisioned verdicts; surface the ID in UI/API.

|

||||

- **Machine-verifiable receipts (DSSE)**: emit a DSSE-wrapped in-toto statement per verdict (predicate `stellaops.dev/verdict@v1`) including graphRevisionId, artifact digests, rule id/version, inputs, and timestamps; sign with Authority keys (offline mode supported) and keep receipts queryable/exportable; mirror to Rekor-compatible ledger when online.

|

||||

- **Reachability evidence**: attach call-stack slices (entry→sink, symbols, file:line) for code-level cases; for binaries, include symbol presence proofs (bitmap/offsets) hashed and referenced from DSSE payloads.

|

||||

- **Deterministic replay manifests**: publish `replay.manifest.json` with inputs, feeds, rule/tool/container digests so auditors can recompute the same graph hash and verdicts offline.

|

||||

|

||||

## Benchmarks to publish (headline KPIs)

|

||||

- **False-positive reduction vs baseline scanners**: run public corpus across 3–4 popular scanners; label ground truth once; report mean and p95 FP reduction.

|

||||

- **Proof coverage**: percentage of findings/VEX items carrying valid DSSE receipts; break out reachable vs unreachable and “not affected.”

|

||||

- **Triage time saved**: analyst minutes from alert to final disposition with receipts visible vs hidden; publish p50/p95 deltas.

|

||||

- **Determinism stability**: re-run identical scans across nodes; publish % identical graph hashes and explain drift causes when different.

|

||||

|

||||

## Minimal implementation plan (week-by-week)

|

||||

- **Week 1 – Primitives**: add Graph Revision ID generator in scanner.webservice (Merkle over normalized SBOM+edges+policies+toolVersions); define `VerdictReceipt` schema (protobuf/JSON) and DSSE envelope types.

|

||||

- **Week 2 – Signing + storage**: wire DSSE signing via Authority with offline key support/rotation; persist receipts in `Receipts` table keyed by (graphRevisionId, verdictId); enable JSONL export and ledger mirror.

|

||||

- **Week 3 – Reachability proofs**: capture call-stack slices in reachability engine; serialize and hash; add binary symbol proof module (ELF/PE bitmap + digest) and reference from receipts.

|

||||

- **Week 4 – Replay + UX**: emit replay.manifest per scan (inputs, tool digests); UI shows “Verified” badge, graph hash, signature issuer, and one-click “Copy receipt”; API: `GET /verdicts/{id}/receipt`, `GET /graphs/{rev}/replay`.

|

||||

- **Week 5 – Benchmarks harness**: create `bench/` fixtures and runner with baseline scanner adapters, ground-truth labels, metrics export for FP%, proof coverage, triage time capture hooks.

|

||||

|

||||

## Developer guardrails (non-negotiable)

|

||||

- **No receipt, no ship**: any surfaced verdict must carry a DSSE receipt; fail closed otherwise.

|

||||

- **Schema freeze windows**: changes to rule inputs or policy logic must bump rule version and therefore graph hash.

|

||||

- **Replay-first CI**: PRs touching scanning/rules must pass a replay test that reproduces prior graph hashes on gold fixtures.

|

||||

- **Clock safety**: use monotonic time for receipts; include UTC wall-time separately.

|

||||

|

||||

## What to show buyers/auditors

|

||||

- Audit kit: sample container + receipts + replay manifest + one command to reproduce the same graph hash.

|

||||

- One-page benchmark readout: FP reduction, proof coverage, triage time saved (p50/p95), corpus description.

|

||||

|

||||

## Optional follow-ons

|

||||

- Provide DSSE predicate schema, Postgres DDL for `Receipts` and `Graphs`, and a minimal .NET verification CLI (`stellaops-verify`) that replays a manifest and validates signatures.

|

||||

@@ -0,0 +1,944 @@

|

||||

Here’s a clean, action‑ready blueprint for a **public reachability benchmark** you can stand up quickly and grow over time.

|

||||

|

||||

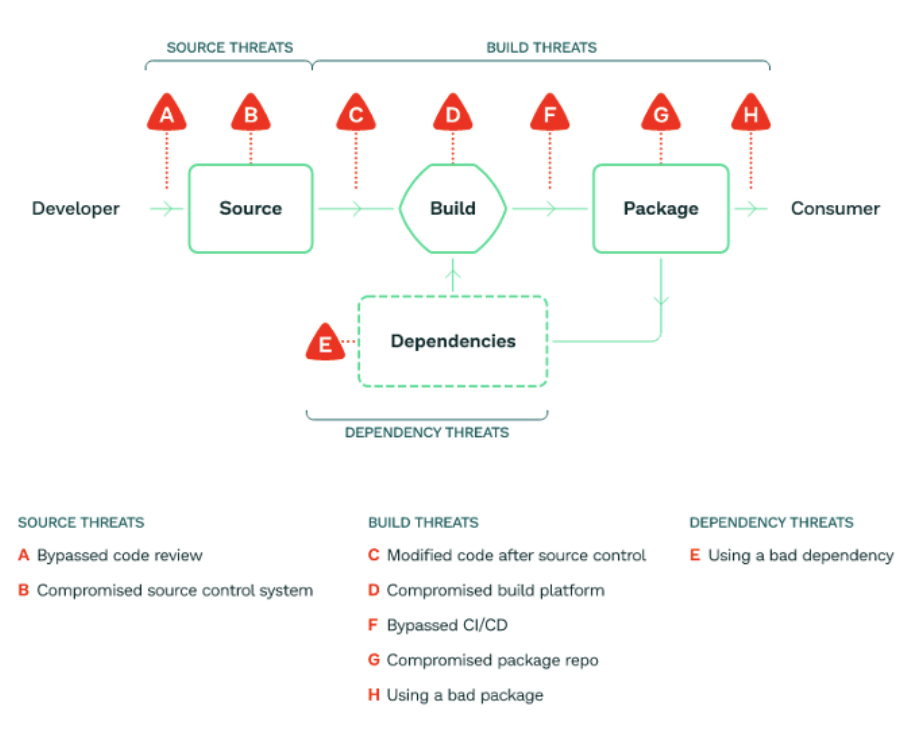

# Why this matters (quick)

|

||||

|

||||

“Reachability” asks: *is a flagged vulnerability actually executable from real entry points in this codebase/container?* A public, reproducible benchmark lets you compare tools apples‑to‑apples, drive research, and keep vendors honest.

|

||||

|

||||

# What to collect (dataset design)

|

||||

|

||||

* **Projects & languages**

|

||||

|

||||

* Polyglot mix: **C/C++ (ELF/PE/Mach‑O)**, **Java/Kotlin**, **C#/.NET**, **Python**, **JavaScript/TypeScript**, **PHP**, **Go**, **Rust**.

|

||||

* For each project: small (≤5k LOC), medium (5–100k), large (100k+).

|

||||

* **Ground‑truth artifacts**

|

||||

|

||||

* **Seed CVEs** with known sinks (e.g., deserializers, command exec, SS RF) and **neutral projects** with *no* reachable path (negatives).

|

||||

* **Exploit oracles**: minimal PoCs or unit tests that (1) reach the sink and (2) toggle reachability via feature flags.

|

||||

* **Build outputs (deterministic)**

|

||||

|

||||

* **Reproducible binaries/bytecode** (strip timestamps; fixed seeds; SOURCE_DATE_EPOCH).

|

||||

* **SBOM** (CycloneDX/SPDX) + **PURLs** + **Build‑ID** (ELF .note.gnu.build‑id / PE Authentihash / Mach‑O UUID).

|

||||

* **Attestations**: in‑toto/DSSE envelopes recording toolchain versions, flags, hashes.

|

||||

* **Execution traces (for truth)**

|

||||

|

||||

* **CI traces**: call‑graph dumps from compilers/analyzers; unit‑test coverage; optional **dynamic traces** (eBPF/.NET ETW/Java Flight Recorder).

|

||||

* **Entry‑point manifests**: HTTP routes, CLI commands, cron/queue consumers.

|

||||

* **Metadata**

|

||||

|

||||

* Language, framework, package manager, compiler versions, OS/container image, optimization level, stripping info, license.

|

||||

|

||||

# How to label ground truth

|

||||

|

||||

* **Per‑vuln case**: `(component, version, sink_id)` with label **reachable / unreachable / unknown**.

|

||||

* **Evidence bundle**: pointer to (a) static call path, (b) dynamic hit (trace/coverage), or (c) rationale for negative.

|

||||

* **Confidence**: high (static+dynamic agree), medium (one source), low (heuristic only).

|

||||

|

||||

# Scoring (simple + fair)

|

||||

|

||||

* **Binary classification** on cases:

|

||||

|

||||

* Precision, Recall, F1. Report **AU‑PR** if you output probabilities.

|

||||

* **Path quality**

|

||||

|

||||

* **Explainability score (0–3)**:

|

||||

|

||||

* 0: “vuln reachable” w/o context

|

||||

* 1: names only (entry→…→sink)

|

||||

* 2: full interprocedural path w/ locations

|

||||

* 3: plus **inputs/guards** (taint/constraints, env flags)

|

||||

* **Runtime cost**

|

||||

|

||||

* Wall‑clock, peak RAM, image size; normalized by KLOC.

|

||||

* **Determinism**

|

||||

|

||||

* Re‑run variance (≤1% is “A”, 1–5% “B”, >5% “C”).

|

||||

|

||||

# Avoiding overfitting

|

||||

|

||||

* **Train/Dev/Test** splits per language; **hidden test** projects rotated quarterly.

|

||||

* **Case churn**: introduce **isomorphic variants** (rename symbols, reorder files) to punish memorization.

|

||||

* **Poisoned controls**: include decoy sinks and unreachable dead‑code traps.

|

||||

* **Submission rules**: require **attestations** of tool versions & flags; limit per‑case hints.

|

||||

|

||||

# Reference baselines (to run out‑of‑the‑box)

|

||||

|

||||

* **Snyk Code/Reachability** (JS/Java/Python, SaaS/CLI).

|

||||

* **Semgrep + Pro Engine** (rules + reachability mode).

|

||||

* **CodeQL** (multi‑lang, LGTM‑style queries).

|

||||

* **Joern** (C/C++/JVM code property graphs).

|

||||

* **angr** (binary symbolic exec; selective for native samples).

|

||||

* **Language‑specific**: pip‑audit w/ import graphs, npm with lock‑tree + route discovery, Maven + call‑graph (Soot/WALA).

|

||||

|

||||

# Submission format (one JSON per tool run)

|

||||

|

||||

```json

|

||||

{

|

||||

"tool": {"name": "YourTool", "version": "1.2.3"},

|

||||

"run": {

|

||||

"commit": "…",

|

||||

"platform": "ubuntu:24.04",

|

||||

"time_s": 182.4, "peak_mb": 3072

|

||||

},

|

||||

"cases": [

|

||||

{

|

||||

"id": "php-shop:fastjson@1.2.68:Sink#deserialize",

|

||||

"prediction": "reachable",

|

||||

"confidence": 0.88,

|

||||

"explain": {

|

||||

"entry": "POST /api/orders",

|

||||

"path": [

|

||||

"OrdersController::create",

|

||||

"Serializer::deserialize",

|

||||

"Fastjson::parseObject"

|

||||

],

|

||||

"guards": ["feature.flag.json_enabled==true"]

|

||||

}

|

||||

}

|

||||

],

|

||||

"artifacts": {

|

||||

"sbom": "sha256:…", "attestation": "sha256:…"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

# Folder layout (repo)

|

||||

|

||||

```

|

||||

/benchmark

|

||||

/cases/<lang>/<project>/<case_id>/

|

||||

case.yaml # component@version, sink, labels, evidence refs

|

||||

entrypoints.yaml # routes/CLIs/cron

|

||||

build/ # Dockerfiles, lockfiles, pinned toolchains

|

||||

outputs/ # SBOMs, binaries, traces (checksummed)

|

||||

/splits/{train,dev,test}.txt

|

||||

/schemas/{case.json,submission.json}

|

||||

/scripts/{build.sh, run_tests.sh, score.py}

|

||||

/docs/ (how-to, FAQs, T&Cs)

|

||||

```

|

||||

|

||||

# Minimal **v1** (4–6 weeks of work)

|

||||

|

||||

1. **Languages**: JS/TS, Python, Java, C (ELF).

|

||||

2. **20–30 cases**: mix of reachable/unreachable with PoC unit tests.

|

||||

3. **Deterministic builds** in containers; publish SBOM+attestations.

|

||||

4. **Scorer**: precision/recall/F1 + explainability, runtime, determinism.

|

||||

5. **Baselines**: run CodeQL + Semgrep across all; Snyk where feasible; angr for 3 native cases.

|

||||

6. **Website**: static leaderboard (per‑lang, per‑size), download links, submission guide.

|

||||

|

||||

# V2+ (quarterly)

|

||||

|

||||

* Add **.NET, PHP, Go, Rust**; broaden binary focus (PE/Mach‑O).

|

||||

* Add **dynamic traces** (eBPF/ETW/JFR) and **taint oracles**.

|

||||

* Introduce **config‑gated reachability** (feature flags, env, k8s secrets).

|

||||

* Add **dataset cards** per case (threat model, CWE, false‑positive traps).

|

||||

|

||||

# Publishing & governance

|

||||

|

||||

* License: **CC‑BY‑SA** for metadata, **source‑compatible OSS** for code, binaries under original licenses.

|

||||

* **Repro packs**: `benchmark-kit.tgz` with container recipes, hashes, and attestations.

|

||||

* **Disclosure**: CVE hygiene, responsible use, opt‑out path for upstreams.

|

||||

* **Stewards**: small TAC (you + two external reviewers) to approve new cases and adjudicate disputes.

|

||||

|

||||

# Immediate next steps (checklist)

|

||||

|

||||

* Lock the **schemas** (case + submission + attestation fields).

|

||||

* Pick 8 seed projects (2 per language tiered by size).

|

||||

* Draft 12 sink‑cases (6 reachable, 6 unreachable) with unit‑test oracles.

|

||||

* Script deterministic builds and **hash‑locked SBOMs**.

|

||||

* Implement the scorer; publish a **starter leaderboard** with 2 baselines.

|

||||

* Ship **v1 website/docs** and open submissions.

|

||||

|

||||

If you want, I can generate the repo scaffold (folders, YAML/JSON schemas, Dockerfiles, scorer script) so your team can `git clone` and start adding cases immediately.

|

||||

Cool, let’s turn the blueprint into a concrete, developer‑friendly implementation plan.

|

||||

|

||||

I’ll assume **v1 scope** is:

|

||||

|

||||

* Languages: **JavaScript/TypeScript (Node)**, **Python**, **Java**, **C (ELF)**

|

||||

* ~**20–30 cases** total (reachable/unreachable mix)

|

||||

* Baselines: **CodeQL**, **Semgrep**, maybe **Snyk** where licenses allow, and **angr** for a few native cases

|

||||

|

||||

You can expand later, but this plan is enough to get v1 shipped.

|

||||

|

||||

---

|

||||

|

||||

## 0. Overall project structure & ownership

|

||||

|

||||

**Owners**

|

||||

|

||||

* **Tech Lead** – owns architecture & final decisions

|

||||

* **Benchmark Core** – 2–3 devs building schemas, scorer, infra

|

||||

* **Language Tracks** – 1 dev per language (JS, Python, Java, C)

|

||||

* **Website/Docs** – 1 dev

|

||||

|

||||

**Repo layout (target)**

|

||||

|

||||

```text

|

||||

reachability-benchmark/

|

||||

README.md

|

||||

LICENSE

|

||||

CONTRIBUTING.md

|

||||

CODE_OF_CONDUCT.md

|

||||

|

||||

benchmark/

|

||||

cases/

|

||||

js/

|

||||

express-blog/

|

||||

case-001/

|

||||

case.yaml

|

||||

entrypoints.yaml

|

||||

build/

|

||||

Dockerfile

|

||||

build.sh

|

||||

src/ # project source (or submodule)

|

||||

tests/ # unit tests as oracles

|

||||

outputs/

|

||||

sbom.cdx.json

|

||||

binary.tar.gz

|

||||

coverage.json

|

||||

traces/ # optional dynamic traces

|

||||

py/

|

||||

flask-api/...

|

||||

java/

|

||||

spring-app/...

|

||||

c/

|

||||

httpd-like/...

|

||||

schemas/

|

||||

case.schema.yaml

|

||||

entrypoints.schema.yaml

|

||||

truth.schema.yaml

|

||||

submission.schema.json

|

||||

tools/

|

||||

scorer/

|

||||

rb_score/

|

||||

__init__.py

|

||||

cli.py

|

||||

metrics.py

|

||||

loader.py

|

||||

explainability.py

|

||||

pyproject.toml

|

||||

tests/

|

||||

build/

|

||||

build_all.py

|

||||

validate_builds.py

|

||||

|

||||

baselines/

|

||||

codeql/

|

||||

run_case.sh

|

||||

config/

|

||||

semgrep/

|

||||

run_case.sh

|

||||

rules/

|

||||

snyk/

|

||||

run_case.sh

|

||||

angr/

|

||||

run_case.sh

|

||||

|

||||

ci/

|

||||

github/

|

||||

benchmark.yml

|

||||

|

||||

website/

|

||||

# static site / leaderboard

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## 1. Phase 1 – Repo & infra setup

|

||||

|

||||

### Task 1.1 – Create repository

|

||||

|

||||

**Developer:** Tech Lead

|

||||

**Deliverables:**

|

||||

|

||||

* Repo created (`reachability-benchmark` or similar)

|

||||

* `LICENSE` (e.g., Apache-2.0 or MIT)

|

||||

* Basic `README.md` describing:

|

||||

|

||||

* Purpose (public reachability benchmark)

|

||||

* High‑level design

|

||||

* v1 scope (langs, #cases)

|

||||

|

||||

### Task 1.2 – Bootstrap structure

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

Create directory skeleton as above (without filling everything yet).

|

||||

|

||||

Add:

|

||||

|

||||

```bash

|

||||

# benchmark/Makefile

|

||||

.PHONY: test lint build

|

||||

test:

|

||||

\tpytest benchmark/tools/scorer/tests

|

||||

|

||||

lint:

|

||||

\tblack benchmark/tools/scorer

|

||||

\tflake8 benchmark/tools/scorer

|

||||

|

||||

build:

|

||||

\tpython benchmark/tools/build/build_all.py

|

||||

```

|

||||

|

||||

### Task 1.3 – Coding standards & tooling

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

* Add `.editorconfig`, `.gitignore`, and Python tool configs (`ruff`, `black`, or `flake8`).

|

||||

* Define minimal **PR checklist** in `CONTRIBUTING.md`:

|

||||

|

||||

* Tests pass

|

||||

* Lint passes

|

||||

* New schemas have JSON schema or YAML schema and tests

|

||||

* New cases come with oracles (tests/coverage)

|

||||

|

||||

---

|

||||

|

||||

## 2. Phase 2 – Case & submission schemas

|

||||

|

||||

### Task 2.1 – Define case metadata format

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

Create `benchmark/schemas/case.schema.yaml` and an example `case.yaml`.

|

||||

|

||||

**Example `case.yaml`**

|

||||

|

||||

```yaml

|

||||

id: "js-express-blog:001"

|

||||

language: "javascript"

|

||||

framework: "express"

|

||||

size: "small" # small | medium | large

|

||||

component:

|

||||

name: "express-blog"

|

||||

version: "1.0.0-bench"

|

||||

vulnerability:

|

||||

cve: "CVE-XXXX-YYYY"

|

||||

cwe: "CWE-502"

|

||||

description: "Unsafe deserialization via user-controlled JSON."

|

||||

sink_id: "Deserializer::parse"

|

||||

ground_truth:

|

||||

label: "reachable" # reachable | unreachable | unknown

|

||||

confidence: "high" # high | medium | low

|

||||

evidence_files:

|

||||

- "truth.yaml"

|

||||

notes: >

|

||||

Unit test test_reachable_deserialization triggers the sink.

|

||||

build:

|

||||

dockerfile: "build/Dockerfile"

|

||||

build_script: "build/build.sh"

|

||||

output:

|

||||

artifact_path: "outputs/binary.tar.gz"

|

||||

sbom_path: "outputs/sbom.cdx.json"

|

||||

coverage_path: "outputs/coverage.json"

|

||||

traces_dir: "outputs/traces"

|

||||

environment:

|

||||

os_image: "ubuntu:24.04"

|

||||

compiler: null

|

||||

runtime:

|

||||

node: "20.11.0"

|

||||

source_date_epoch: 1730000000

|

||||

```

|

||||

|

||||

**Acceptance criteria**

|

||||

|

||||

* Schema validates sample `case.yaml` with a Python script:

|

||||

|

||||

* `benchmark/tools/build/validate_schema.py` using `jsonschema` or `pykwalify`.

|

||||

|

||||

---

|

||||

|

||||

### Task 2.2 – Entry points schema

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

`benchmark/schemas/entrypoints.schema.yaml`

|

||||

|

||||

**Example `entrypoints.yaml`**

|

||||

|

||||

```yaml

|

||||

entries:

|

||||

http:

|

||||

- id: "POST /api/posts"

|

||||

route: "/api/posts"

|

||||

method: "POST"

|

||||

handler: "PostsController.create"

|

||||

cli:

|

||||

- id: "generate-report"

|

||||

command: "node cli.js generate-report"

|

||||

description: "Generates summary report."

|

||||

scheduled:

|

||||

- id: "daily-cleanup"

|

||||

schedule: "0 3 * * *"

|

||||

handler: "CleanupJob.run"

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

### Task 2.3 – Ground truth / truth schema

|

||||

|

||||

**Developer:** Benchmark Core + Language Tracks

|

||||

|

||||

`benchmark/schemas/truth.schema.yaml`

|

||||

|

||||

**Example `truth.yaml`**

|

||||

|

||||

```yaml

|

||||

id: "js-express-blog:001"

|

||||

cases:

|

||||

- sink_id: "Deserializer::parse"

|

||||

label: "reachable"

|

||||

dynamic_evidence:

|

||||

covered_by_tests:

|

||||

- "tests/test_reachable_deserialization.js::should_reach_sink"

|

||||

coverage_files:

|

||||

- "outputs/coverage.json"

|

||||

static_evidence:

|

||||

call_path:

|

||||

- "POST /api/posts"

|

||||

- "PostsController.create"

|

||||

- "PostsService.createFromJson"

|

||||

- "Deserializer.parse"

|

||||

config_conditions:

|

||||

- "process.env.FEATURE_JSON_ENABLED == 'true'"

|

||||

notes: "If FEATURE_JSON_ENABLED=false, path is unreachable."

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

### Task 2.4 – Submission schema

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

`benchmark/schemas/submission.schema.json`

|

||||

|

||||

**Shape**

|

||||

|

||||

```json

|

||||

{

|

||||

"tool": { "name": "YourTool", "version": "1.2.3" },

|

||||

"run": {

|

||||

"commit": "abcd1234",

|

||||

"platform": "ubuntu:24.04",

|

||||

"time_s": 182.4,

|

||||

"peak_mb": 3072

|

||||

},

|

||||

"cases": [

|

||||

{

|

||||

"id": "js-express-blog:001",

|

||||

"prediction": "reachable",

|

||||

"confidence": 0.88,

|

||||

"explain": {

|

||||

"entry": "POST /api/posts",

|

||||

"path": [

|

||||

"PostsController.create",

|

||||

"PostsService.createFromJson",

|

||||

"Deserializer.parse"

|

||||

],

|

||||

"guards": [

|

||||

"process.env.FEATURE_JSON_ENABLED === 'true'"

|

||||

]

|

||||

}

|

||||

}

|

||||

],

|

||||

"artifacts": {

|

||||

"sbom": "sha256:...",

|

||||

"attestation": "sha256:..."

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

Write Python validation utility:

|

||||

|

||||

```bash

|

||||

python benchmark/tools/scorer/validate_submission.py submission.json

|

||||

```

|

||||

|

||||

**Acceptance criteria**

|

||||

|

||||

* Validation fails on missing fields / wrong enum values.

|

||||

* At least two sample submissions pass validation (e.g., “perfect” and “random baseline”).

|

||||

|

||||

---

|

||||

|

||||

## 3. Phase 3 – Reference projects & deterministic builds

|

||||

|

||||

### Task 3.1 – Select and vendor v1 projects

|

||||

|

||||

**Developer:** Tech Lead + Language Tracks

|

||||

|

||||

For each language, choose:

|

||||

|

||||

* 1 small toy app (simple web or CLI)

|

||||

* 1 medium app (more routes, multiple modules)

|

||||

* Optional: 1 large (for performance stress tests)

|

||||

|

||||

Add them under `benchmark/cases/<lang>/<project>/src/`

|

||||

(or as git submodules if you want to track upstream).

|

||||

|

||||

---

|

||||

|

||||

### Task 3.2 – Deterministic Docker build per project

|

||||

|

||||

**Developer:** Language Tracks

|

||||

|

||||

For each project:

|

||||

|

||||

* Create `build/Dockerfile`

|

||||

* Create `build/build.sh` that:

|

||||

|

||||

* Builds the app

|

||||

* Produces artifacts

|

||||

* Generates SBOM and attestation

|

||||

|

||||

**Example `build/Dockerfile` (Node)**

|

||||

|

||||

```dockerfile

|

||||

FROM node:20.11-slim

|

||||

|

||||

ENV NODE_ENV=production

|

||||

ENV SOURCE_DATE_EPOCH=1730000000

|

||||

|

||||

WORKDIR /app

|

||||

COPY src/ /app

|

||||

COPY package.json package-lock.json /app/

|

||||

|

||||

RUN npm ci --ignore-scripts && \

|

||||

npm run build || true

|

||||

|

||||

CMD ["node", "server.js"]

|

||||

```

|

||||

|

||||

**Example `build.sh`**

|

||||

|

||||

```bash

|

||||

#!/usr/bin/env bash

|

||||

set -euo pipefail

|

||||

|

||||

ROOT_DIR="$(dirname "$(readlink -f "$0")")/.."

|

||||

OUT_DIR="$ROOT_DIR/outputs"

|

||||

mkdir -p "$OUT_DIR"

|

||||

|

||||

IMAGE_TAG="rb-js-express-blog:1"

|

||||

|

||||

docker build -t "$IMAGE_TAG" "$ROOT_DIR/build"

|

||||

|

||||

# Export image as tarball (binary artifact)

|

||||

docker save "$IMAGE_TAG" | gzip > "$OUT_DIR/binary.tar.gz"

|

||||

|

||||

# Generate SBOM (e.g. via syft) – can be optional stub for v1

|

||||

syft packages "docker:$IMAGE_TAG" -o cyclonedx-json > "$OUT_DIR/sbom.cdx.json"

|

||||

|

||||

# In future: generate in-toto attestations

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

### Task 3.3 – Determinism checker

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

`benchmark/tools/build/validate_builds.py`:

|

||||

|

||||

* For each case:

|

||||

|

||||

* Run `build.sh` twice

|

||||

* Compare hashes of `outputs/binary.tar.gz` and `outputs/sbom.cdx.json`

|

||||

* Fail if hashes differ.

|

||||

|

||||

**Acceptance criteria**

|

||||

|

||||

* All v1 cases produce identical artifacts across two builds on CI.

|

||||

|

||||

---

|

||||

|

||||

## 4. Phase 4 – Ground truth oracles (tests & traces)

|

||||

|

||||

### Task 4.1 – Add unit/integration tests for reachable cases

|

||||

|

||||

**Developer:** Language Tracks

|

||||

|

||||

For each **reachable** case:

|

||||

|

||||

* Add `tests/` under the project to:

|

||||

|

||||

* Start the app (if necessary)

|

||||

* Send a request/trigger that reaches the vulnerable sink

|

||||

* Assert that a sentinel side effect occurs (e.g. log or marker file) instead of real exploitation.

|

||||

|

||||

Example for Node using Jest:

|

||||

|

||||

```js

|

||||

test("should reach deserialization sink", async () => {

|

||||

const res = await request(app)

|

||||

.post("/api/posts")

|

||||

.send({ title: "x", body: '{"__proto__":{}}' });

|

||||

|

||||

expect(res.statusCode).toBe(200);

|

||||

// Sink logs "REACH_SINK" – we check log or variable

|

||||

expect(sinkWasReached()).toBe(true);

|

||||

});

|

||||

```

|

||||

|

||||

### Task 4.2 – Instrument coverage

|

||||

|

||||

**Developer:** Language Tracks

|

||||

|

||||

* For each language, pick a coverage tool:

|

||||

|

||||

* JS: `nyc` + `istanbul`

|

||||

* Python: `coverage.py`

|

||||

* Java: `jacoco`

|

||||

* C: `gcov`/`llvm-cov` (optional for v1)

|

||||

|

||||

* Ensure running tests produces `outputs/coverage.json` or `.xml` that we then convert to a simple JSON format:

|

||||

|

||||

```json

|

||||

{

|

||||

"files": {

|

||||

"src/controllers/posts.js": {

|

||||

"lines_covered": [12, 13, 14, 27],

|

||||

"lines_total": 40

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

Create a small converter script if needed.

|

||||

|

||||

### Task 4.3 – Optional dynamic traces

|

||||

|

||||

If you want richer evidence:

|

||||

|

||||

* JS: add middleware that logs `(entry_id, handler, sink)` triples to `outputs/traces/traces.json`

|

||||

* Python: similar using decorators

|

||||

* C/Java: out of scope for v1 unless you want to invest extra time.

|

||||

|

||||

---

|

||||

|

||||

## 5. Phase 5 – Scoring tool (CLI)

|

||||

|

||||

### Task 5.1 – Implement `rb-score` library + CLI

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

Create `benchmark/tools/scorer/rb_score/` with:

|

||||

|

||||

* `loader.py`

|

||||

|

||||

* Load all `case.yaml`, `truth.yaml` into memory.

|

||||

* Provide functions: `load_cases() -> Dict[case_id, Case]`.

|

||||

|

||||

* `metrics.py`

|

||||

|

||||

* Implement:

|

||||

|

||||

* `compute_precision_recall(truth, predictions)`

|

||||

* `compute_path_quality_score(explain_block)` (0–3)

|

||||

* `compute_runtime_stats(run_block)`

|

||||

|

||||

* `cli.py`

|

||||

|

||||

* CLI:

|

||||

|

||||

```bash

|

||||

rb-score \

|

||||

--cases-root benchmark/cases \

|

||||

--submission submissions/mytool.json \

|

||||

--output results/mytool_results.json

|

||||

```

|

||||

|

||||

**Pseudo-code for core scoring**

|

||||

|

||||

```python

|

||||

def score_submission(truth, submission):

|

||||

y_true = []

|

||||

y_pred = []

|

||||

per_case_scores = {}

|

||||

|

||||

for case in truth:

|

||||

gt = truth[case.id].label # reachable/unreachable

|

||||

pred_case = find_pred_case(submission.cases, case.id)

|

||||

pred_label = pred_case.prediction if pred_case else "unreachable"

|

||||

|

||||

y_true.append(gt == "reachable")

|

||||

y_pred.append(pred_label == "reachable")

|

||||

|

||||

explain_score = explainability(pred_case.explain if pred_case else None)

|

||||

|

||||

per_case_scores[case.id] = {

|

||||

"gt": gt,

|

||||

"pred": pred_label,

|

||||

"explainability": explain_score,

|

||||

}

|

||||

|

||||

precision, recall, f1 = compute_prf(y_true, y_pred)

|

||||

|

||||

return {

|

||||

"summary": {

|

||||

"precision": precision,

|

||||

"recall": recall,

|

||||

"f1": f1,

|

||||

"num_cases": len(truth),

|

||||

},

|

||||

"cases": per_case_scores,

|

||||

}

|

||||

```

|

||||

|

||||

### Task 5.2 – Explainability scoring rules

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

Implement `explainability(explain)`:

|

||||

|

||||

* 0 – `explain` missing or `path` empty

|

||||

* 1 – `path` present with at least 2 nodes (sink + one function)

|

||||

* 2 – `path` contains:

|

||||

|

||||

* Entry label (HTTP route/CLI id)

|

||||

* ≥3 nodes (entry → … → sink)

|

||||

* 3 – Level 2 plus `guards` list non-empty

|

||||

|

||||

Unit tests for at least 4 scenarios.

|

||||

|

||||

### Task 5.3 – Regression tests for scoring

|

||||

|

||||

Add small test fixture:

|

||||

|

||||

* Tiny synthetic benchmark: 3 cases, 2 reachable, 1 unreachable.

|

||||

* 3 submissions:

|

||||

|

||||

* Perfect

|

||||

* All reachable

|

||||

* All unreachable

|

||||

|

||||

Assertions:

|

||||

|

||||

* Perfect: `precision=1, recall=1`

|

||||

* All reachable: `recall=1, precision<1`

|

||||

* All unreachable: `precision=1 (trivially on negatives), recall=0`

|

||||

|

||||

---

|

||||

|

||||

## 6. Phase 6 – Baseline integrations

|

||||

|

||||

### Task 6.1 – Semgrep baseline

|

||||

|

||||

**Developer:** Benchmark Core (with Semgrep experience)

|

||||

|

||||

* `baselines/semgrep/run_case.sh`:

|

||||

|

||||

* Inputs: `case_id`, `cases_root`, `output_path`

|

||||

* Steps:

|

||||

|

||||

* Find `src/` for case

|

||||

* Run `semgrep --config auto` or curated rules

|

||||

* Convert Semgrep findings into benchmark submission format:

|

||||

|

||||

* Map Semgrep rules → vulnerability types → candidate sinks

|

||||

* Heuristically guess reachability (for v1, maybe always “reachable” if sink in code path)

|

||||

* Output: `output_path` JSON conforming to `submission.schema.json`.

|

||||

|

||||

### Task 6.2 – CodeQL baseline

|

||||

|

||||

* Create CodeQL databases for each project (likely via `codeql database create`).

|

||||

* Create queries targeting known sinks (e.g., `Deserialization`, `CommandInjection`).

|

||||

* `baselines/codeql/run_case.sh`:

|

||||

|

||||

* Build DB (or reuse)

|

||||

* Run queries

|

||||

* Translate results into our submission format (again as heuristic reachability).

|

||||

|

||||

### Task 6.3 – Optional Snyk / angr baselines

|

||||

|

||||

* Snyk:

|

||||

|

||||

* Use `snyk test` on the project

|

||||

* Map results to dependencies & known CVEs

|

||||

* For v1, just mark as `reachable` if Snyk reports a reachable path (if available).

|

||||

* angr:

|

||||

|

||||

* For 1–2 small C samples, configure simple analysis script.

|

||||

|

||||

**Acceptance criteria**

|

||||

|

||||

* For at least 5 cases (across languages), the baselines produce valid submission JSON.

|

||||

* `rb-score` runs and yields metrics without errors.

|

||||

|

||||

---

|

||||

|

||||

## 7. Phase 7 – CI/CD

|

||||

|

||||

### Task 7.1 – GitHub Actions workflow

|

||||

|

||||

**Developer:** Benchmark Core

|

||||

|

||||

`ci/github/benchmark.yml`:

|

||||

|

||||

Jobs:

|

||||

|

||||

1. `lint-and-test`

|

||||

|

||||

* `python -m pip install -e benchmark/tools/scorer[dev]`

|

||||

* `make lint`

|

||||

* `make test`

|

||||

|

||||

2. `build-cases`

|

||||

|

||||

* `python benchmark/tools/build/build_all.py`

|

||||

* Run `validate_builds.py`

|

||||

|

||||

3. `smoke-baselines`

|

||||

|

||||

* For 2–3 cases, run Semgrep/CodeQL wrappers and ensure they emit valid submissions.

|

||||

|

||||

### Task 7.2 – Artifact upload

|

||||

|

||||

* Upload `outputs/` tarball from `build-cases` as workflow artifacts.

|

||||

* Upload `results/*.json` from scoring runs.

|

||||

|

||||

---

|

||||

|

||||

## 8. Phase 8 – Website & leaderboard

|

||||

|

||||

### Task 8.1 – Define results JSON format

|

||||

|

||||

**Developer:** Benchmark Core + Website dev

|

||||

|

||||

`results/leaderboard.json`:

|

||||

|

||||

```json

|

||||

{

|

||||

"tools": [

|

||||

{

|

||||

"name": "Semgrep",

|

||||

"version": "1.60.0",

|

||||

"summary": {

|

||||

"precision": 0.72,

|

||||

"recall": 0.48,

|

||||

"f1": 0.58

|

||||

},

|

||||

"by_language": {

|

||||

"javascript": {"precision": 0.80, "recall": 0.50, "f1": 0.62},

|

||||

"python": {"precision": 0.65, "recall": 0.45, "f1": 0.53}

|

||||

}

|

||||

}

|

||||

]

|

||||

}

|

||||

```

|

||||

|

||||

CLI option to generate this:

|

||||

|

||||

```bash

|

||||

rb-score compare \

|

||||

--cases-root benchmark/cases \

|

||||

--submissions submissions/*.json \

|

||||

--output results/leaderboard.json

|

||||

```

|

||||

|

||||

### Task 8.2 – Static site

|

||||

|

||||

**Developer:** Website dev

|

||||

|

||||

Tech choice: any static framework (Next.js, Astro, Docusaurus, or even pure HTML+JS).

|

||||

|

||||

Pages:

|

||||

|

||||

* **Home**

|

||||

|

||||

* What is reachability?

|

||||

* Summary of benchmark

|

||||

|

||||

* **Leaderboard**

|

||||

|

||||

* Renders `leaderboard.json`

|

||||

* Filters: language, case size

|

||||

|

||||

* **Docs**

|

||||

|

||||

* How to run benchmark locally

|

||||

* How to prepare a submission

|

||||

|

||||

Add a simple script to copy `results/leaderboard.json` into `website/public/` for publishing.

|

||||

|

||||

---

|

||||

|

||||

## 9. Phase 9 – Docs, governance, and contribution flow

|

||||

|

||||

### Task 9.1 – CONTRIBUTING.md

|

||||

|

||||

Include:

|

||||

|

||||

* How to add a new case:

|

||||

|

||||

* Step‑by‑step:

|

||||

|

||||

1. Create project folder under `benchmark/cases/<lang>/<project>/case-XXX/`

|

||||

2. Add `case.yaml`, `entrypoints.yaml`, `truth.yaml`

|

||||

3. Add oracles (tests, coverage)

|

||||

4. Add deterministic `build/` assets

|

||||

5. Run local tooling:

|

||||

|

||||

* `validate_schema.py`

|

||||

* `validate_builds.py --case <id>`

|

||||

* Example PR description template.

|

||||

|

||||

### Task 9.2 – Governance doc

|

||||

|

||||

* Define **Technical Advisory Committee (TAC)** roles:

|

||||

|

||||

* Approve new cases

|

||||

* Approve schema changes

|

||||