Add reference architecture and testing strategy documentation

- Created a new document for the Stella Ops Reference Architecture outlining the system's topology, trust boundaries, artifact association, and interfaces.
- Developed a comprehensive Testing Strategy document detailing the importance of offline readiness, interoperability, determinism, and operational guardrails.
- Introduced a README for the Testing Strategy, summarizing processing details and key concepts implemented.
- Added guidance for AI agents and developers in the tests directory, including directory structure, test categories, key patterns, and rules for test development.


@@ -0,0 +1,81 @@

# Archived Advisory: Mapping Evidence Within Compiled Binaries

**Original Advisory:** `21-Dec-2025 - Mapping Evidence Within Compiled Binaries.md`

**Archived:** 2025-12-21

**Status:** Converted to Implementation Plan

---

## Summary

This advisory proposed building a **Vulnerable Binaries Database** that detects vulnerable code at the binary level, independent of package metadata.

## Implementation Artifacts Created

### Architecture Documentation

- `docs/modules/binaryindex/architecture.md` - Full module architecture
- `docs/db/schemas/binaries_schema_specification.md` - Database schema

### Sprint Files

**Summary:**

- `docs/implplan/SPRINT_6000_SUMMARY.md` - MVP roadmap overview

**MVP 1: Known-Build Binary Catalog (Sprint 6000.0001)**

- `SPRINT_6000_0001_0001_binaries_schema.md` - PostgreSQL schema
- `SPRINT_6000_0001_0002_binary_identity_service.md` - Identity extraction
- `SPRINT_6000_0001_0003_debian_corpus_connector.md` - Debian/Ubuntu ingestion

**MVP 2: Patch-Aware Backport Handling (Sprint 6000.0002)**

- `SPRINT_6000_0002_0001_fix_evidence_parser.md` - Changelog/patch parsing

**MVP 3: Binary Fingerprint Factory (Sprint 6000.0003)**

- `SPRINT_6000_0003_0001_fingerprint_storage.md` - Fingerprint storage

**MVP 4: Scanner Integration (Sprint 6000.0004)**

- `SPRINT_6000_0004_0001_scanner_integration.md` - Scanner.Worker integration

## Key Decisions

| Decision | Rationale |
|----------|-----------|
| New `BinaryIndex` module | The binary vulnerability DB is a distinct concern from Scanner |
| Build-ID as primary key | Most deterministic identifier for ELF binaries |
| `binaries` PostgreSQL schema | Aligns with the existing per-module schema pattern |
| Three-tier lookup | Assertions → Build-ID → Fingerprints, for precision |
| Patch-aware fix index | Handles distro backports correctly |
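
The three-tier lookup can be sketched as a fall-through chain in which the most precise evidence answers first. This is a minimal Python sketch under stated assumptions: in-memory dictionaries stand in for the `binaries` tables, every name (`three_tier_lookup`, `LookupResult`, the store arguments) is hypothetical rather than the BinaryIndex API, and an "assertion" is assumed to be a per-Build-ID vulnerable/not-vulnerable verdict.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LookupResult:
    tier: str         # "assertion", "build-id", or "fingerprint"
    vulnerable: bool
    detail: str       # Build-ID or CVE id that decided the result


def three_tier_lookup(
    build_id: str,
    fingerprints: set[str],
    assertions: dict[str, bool],          # hypothetical: Build-ID -> asserted vulnerable?
    vulnerable_buildids: dict[str, str],  # hypothetical: Build-ID -> CVE id
    vulnerable_fps: dict[str, str],       # hypothetical: fingerprint -> CVE id
) -> Optional[LookupResult]:
    """Return the answer from the most precise tier that has one, else None."""
    # Tier 1: an explicit assertion about this exact binary wins outright.
    if build_id in assertions:
        return LookupResult("assertion", assertions[build_id], build_id)
    # Tier 2: exact Build-ID match against known vulnerable builds.
    if build_id in vulnerable_buildids:
        return LookupResult("build-id", True, vulnerable_buildids[build_id])
    # Tier 3: function-fingerprint match; iterate in sorted order so the
    # reported CVE is deterministic when several fingerprints match.
    for fp in sorted(fingerprints):
        if fp in vulnerable_fps:
            return LookupResult("fingerprint", True, vulnerable_fps[fp])
    return None
```

In this sketch a negative assertion short-circuits any Build-ID or fingerprint match, which is one way to keep a more precise verdict from being overridden by a coarser signal.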

## Module Structure

```
src/BinaryIndex/
├── StellaOps.BinaryIndex.WebService/
├── StellaOps.BinaryIndex.Worker/
├── __Libraries/
│   ├── StellaOps.BinaryIndex.Core/
│   ├── StellaOps.BinaryIndex.Persistence/
│   ├── StellaOps.BinaryIndex.Corpus/
│   ├── StellaOps.BinaryIndex.Corpus.Debian/
│   ├── StellaOps.BinaryIndex.FixIndex/
│   └── StellaOps.BinaryIndex.Fingerprints/
└── __Tests/
```

## Database Tables

| Table | Purpose |
|-------|---------|
| `binaries.binary_identity` | Known binary identities |
| `binaries.binary_package_map` | Binary → package mapping |
| `binaries.vulnerable_buildids` | Vulnerable Build-IDs |
| `binaries.cve_fix_index` | Patch-aware fix status |
| `binaries.vulnerable_fingerprints` | Function fingerprints |
| `binaries.fingerprint_matches` | Scan match results |

## References

- Original advisory: this folder
- Architecture: `docs/modules/binaryindex/architecture.md`
- Schema: `docs/db/schemas/binaries_schema_specification.md`
- Sprints: `docs/implplan/SPRINT_6000_*.md`

@@ -0,0 +1,606 @@

# CVSS and Competitive Analysis Technical Reference

**Source Advisories**:

- 29-Nov-2025 - CVSS v4.0 Momentum in Vulnerability Management
- 30-Nov-2025 - Comparative Evidence Patterns for Stella Ops
- 03-Dec-2025 - Next‑Gen Scanner Differentiators and Evidence Moat

**Last Updated**: 2025-12-14

---

## 1. CVSS V4.0 INTEGRATION

### 1.1 Requirements

- Vendors (NVD, GitHub, Microsoft, Snyk) are shipping CVSS v4 signals
- Receipt schemas, reporting, and the UI must be aligned with those signals

### 1.2 Determinism & Offline

- Keep CVSS vector parsing deterministic
- Pin scoring library versions in receipts
- Avoid live API dependencies
- Rely on mirrored NVD feeds or frozen samples

### 1.3 Schema Mapping

- Map CVSS v4 impacts to receipt schemas
- Identify UI/reporting deltas for transparency
- Record CVSS receipt changes in the sprint's Decisions & Risks section

### 1.4 CVSS v4.0 MacroVector Scoring System

CVSS v4.0 uses a **MacroVector-based scoring system** instead of the direct formula computation used in v2/v3. The MacroVector is a 6-digit string derived from the base metrics, and it keys into a precomputed score table (the MacroVector lookup table).

**MacroVector Structure**:

```
MacroVector = EQ1 + EQ2 + EQ3 + EQ4 + EQ5 + EQ6    (the six digits concatenated)
Example: "001100" -> Base Score = 8.2
```

**Equivalence Classes (EQ1-EQ6)**:

| EQ | Metrics Used | Values | Meaning |
|----|--------------|--------|---------|
| EQ1 | Attack Vector + Privileges Required | 0-2 | Network reachability and auth barrier |
| EQ2 | Attack Complexity + User Interaction | 0-1 | Attack prerequisites |
| EQ3 | Vulnerable System CIA | 0-2 | Impact on vulnerable system |
| EQ4 | Subsequent System CIA | 0-2 | Impact on downstream systems |
| EQ5 | Attack Requirements | 0-1 | Preconditions needed |
| EQ6 | Combined Impact Pattern | 0-2 | Multi-impact severity |

**EQ1 (Attack Vector + Privileges Required)**:

- AV=Network + PR=None -> 0 (worst case: remote, no auth)
- AV=Network + PR=Low/High -> 1
- AV=Adjacent + PR=None -> 1
- AV=Adjacent + PR=Low/High -> 2
- AV=Local or Physical -> 2 (requires local access)

**EQ2 (Attack Complexity + User Interaction)**:

- AC=Low + UI=None -> 0 (easiest to exploit)
- AC=Low + UI=Passive/Active -> 1
- AC=High + any UI -> 1 (harder to exploit)

**EQ3 (Vulnerable System CIA)**:

- Any High in VC/VI/VA -> 0 (severe impact)
- Otherwise, any Low in VC/VI/VA -> 1 (moderate impact)
- All None -> 2 (no impact)

**EQ4 (Subsequent System CIA)**:

- Any High in SC/SI/SA -> 0 (cascading impact)
- Otherwise, any Low in SC/SI/SA -> 1
- All None -> 2

**EQ5 (Attack Requirements)**:

- AT=None -> 0 (no preconditions)
- AT=Present -> 1 (needs specific setup)

**EQ6 (Combined Impact Pattern)**:

- ≥2 High values across VC/VI/VA/SC/SI/SA -> 0 (severe multi-impact)
- Exactly 1 High -> 1
- No High -> 2

**Scoring Algorithm**:

1. Parse base metrics from the vector string
2. Compute EQ1-EQ6 from the metrics
3. Build the MacroVector string: "{EQ1}{EQ2}{EQ3}{EQ4}{EQ5}{EQ6}"
4. Look up the base score in the MacroVectorLookup table
5. Round up to nearest 0.1 (per FIRST spec)
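
Steps 1-3 can be sketched as follows. This minimal Python illustration encodes the equivalence rules exactly as listed in this section; it omits the lookup table, and the function names are hypothetical. Those rules should be checked against the FIRST CVSS v4.0 specification and `CvssV4Engine.cs` before this sketch is relied on, because the official specification groups some metrics differently (for example, it bases EQ5 on Exploit Maturity).

```python
BASE_KEYS = ("AV", "AC", "AT", "PR", "UI", "VC", "VI", "VA", "SC", "SI", "SA")


def parse_vector(vector: str) -> dict[str, str]:
    """Step 1: parse 'CVSS:4.0/AV:N/...' into {'AV': 'N', ...}; reject bad input."""
    prefix, _, body = vector.partition("/")
    if prefix != "CVSS:4.0":
        raise ValueError(f"not a CVSS v4.0 vector: {vector!r}")
    metrics: dict[str, str] = {}
    for part in body.split("/"):
        key, sep, value = part.partition(":")
        if not sep or key in metrics:
            raise ValueError(f"malformed or duplicate metric: {part!r}")
        metrics[key] = value
    missing = [k for k in BASE_KEYS if k not in metrics]
    if missing:
        raise ValueError(f"missing base metrics: {missing}")
    return metrics


def _impact_level(values: list[str]) -> int:
    # EQ3/EQ4 rule: 0 if any High, otherwise 1 if any Low, otherwise 2.
    if "H" in values:
        return 0
    if "L" in values:
        return 1
    return 2


def macro_vector(m: dict[str, str]) -> str:
    """Steps 2-3: compute EQ1..EQ6 with the rules above and concatenate them."""
    av, pr = m["AV"], m["PR"]
    if av == "N":
        eq1 = 0 if pr == "N" else 1
    elif av == "A":
        eq1 = 1 if pr == "N" else 2
    else:  # Local or Physical
        eq1 = 2
    eq2 = 0 if (m["AC"] == "L" and m["UI"] == "N") else 1
    vuln = [m["VC"], m["VI"], m["VA"]]
    sub = [m["SC"], m["SI"], m["SA"]]
    eq3 = _impact_level(vuln)
    eq4 = _impact_level(sub)
    eq5 = 0 if m["AT"] == "N" else 1
    highs = (vuln + sub).count("H")
    eq6 = 0 if highs >= 2 else (1 if highs == 1 else 2)
    return f"{eq1}{eq2}{eq3}{eq4}{eq5}{eq6}"
```

For the vector used as the example in section 1.5, `macro_vector(parse_vector(...))` returns `"000200"` under these rules; extra metrics such as `E:A` are parsed but ignored at this step.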

**Implementation**: `src/Policy/StellaOps.Policy.Scoring/Engine/CvssV4Engine.cs:262-359`

### 1.5 Threat Metrics and Exploit Maturity

CVSS v4.0 uses **Threat Metrics** (the successor to the v3.x Temporal group) to adjust scores based on real-world exploit intelligence. The primary metric is **Exploit Maturity (E)**, which StellaOps applies as a multiplier to the base score.

**Exploit Maturity Values**:

| Value | Code | Multiplier | Description |
|-------|------|------------|-------------|
| Attacked | A | **1.00** | Active exploitation in the wild |
| Proof of Concept | P | **0.94** | Public PoC exists but no active exploitation |
| Unreported | U | **0.91** | No known exploit activity |
| Not Defined | X | 1.00 | Default (assume worst case) |

**Score Computation (CVSS-BT)**:

```
Threat Score = Base Score x Threat Multiplier

Example:
  Base Score       = 9.1
  Exploit Maturity = Unreported (U)
  Threat Score     = 9.1 x 0.91 = 8.281 -> 8.3 (rounded up)
```
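
The CVSS-BT computation, including the round-up to one decimal, can be sketched with Python's `decimal` module so that results do not depend on binary floating-point artifacts. This illustrates the formula in this section and is not the `CvssV4Engine` code; the function name is hypothetical.

```python
from decimal import ROUND_CEILING, Decimal

# Exploit Maturity multipliers from the table above.
THREAT_MULTIPLIER = {
    "A": Decimal("1.00"),  # Attacked
    "P": Decimal("0.94"),  # Proof of Concept
    "U": Decimal("0.91"),  # Unreported
    "X": Decimal("1.00"),  # Not Defined: assume worst case
}


def threat_score(base_score: float, exploit_maturity: str = "X") -> Decimal:
    """Threat Score = Base Score x multiplier, rounded up to the nearest 0.1."""
    base = Decimal(str(base_score))  # str() keeps 9.1 as exactly 9.1
    raw = base * THREAT_MULTIPLIER[exploit_maturity]
    return raw.quantize(Decimal("0.1"), rounding=ROUND_CEILING)
```

With these values, `threat_score(9.1, "U")` computes 9.1 x 0.91 = 8.281 and returns `Decimal('8.3')`, matching the example.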

**Threat Metrics in Vector String**:

```
CVSS:4.0/AV:N/AC:L/AT:N/PR:N/UI:N/VC:H/VI:H/VA:H/SC:N/SI:N/SA:N/E:A
                                                                ^^^ Exploit Maturity
```

**Why Threat Metrics Matter**:

- Reduces noise: an unreported vulnerability scores 9% lower (multiplier 0.91)
- Prioritizes real threats: actively exploited vulnerabilities keep their full score
- Evidence-based: integrates with KEV, EPSS, and internal threat feeds

**Implementation**: `src/Policy/StellaOps.Policy.Scoring/Engine/CvssV4Engine.cs:365-375`

### 1.6 Environmental Score Modifiers

**Security Requirements Multipliers**:

| Requirement | Low | Medium | High |
|-------------|-----|--------|------|
| Confidentiality (CR) | 0.5 | 1.0 | 1.5 |
| Integrity (IR) | 0.5 | 1.0 | 1.5 |
| Availability (AR) | 0.5 | 1.0 | 1.5 |

**Modified Base Metrics** (each can override the corresponding base metric):

- MAV (Modified Attack Vector)
- MAC (Modified Attack Complexity)
- MAT (Modified Attack Requirements)
- MPR (Modified Privileges Required)
- MUI (Modified User Interaction)
- MVC/MVI/MVA (Modified Vulnerable System CIA)
- MSC/MSI/MSA (Modified Subsequent System CIA)

**Score Computation (CVSS-BE)**:

1. Apply modified metrics to base metrics (where defined)
2. Compute the modified MacroVector
3. Look up the modified base score
4. Multiply by the average of the Security Requirements multipliers
5. Clamp to [0, 10]

```
Environmental Score = Modified Base x (CR + IR + AR) / 3
```
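
A sketch of steps 4-5 of the CVSS-BE arithmetic as written in this section; it takes an already looked-up modified base score. The requirement values come from the multiplier table. Rounding the result up to one decimal is an assumption carried over from the base-score algorithm, since the environmental computation does not specify rounding. The function and parameter names are hypothetical.

```python
from decimal import ROUND_CEILING, Decimal

# Security Requirements multipliers from the table above (Low / Medium / High).
REQUIREMENT = {"L": Decimal("0.5"), "M": Decimal("1.0"), "H": Decimal("1.5")}


def environmental_score(modified_base: float, cr: str = "M", ir: str = "M", ar: str = "M") -> Decimal:
    """Modified Base x (CR + IR + AR) / 3, clamped to [0, 10]."""
    avg = (REQUIREMENT[cr] + REQUIREMENT[ir] + REQUIREMENT[ar]) / 3
    raw = Decimal(str(modified_base)) * avg
    clamped = min(max(raw, Decimal("0")), Decimal("10"))
    # Assumption: round up to one decimal, as in the base-score algorithm.
    return clamped.quantize(Decimal("0.1"), rounding=ROUND_CEILING)
```

Because High requirements multiply by 1.5, the clamp matters in practice: a modified base of 8.0 with CR/IR/AR all High computes 12.0 and is clamped to 10.0.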

### 1.7 Supplemental Metrics (Non-Scoring)

CVSS v4.0 introduces supplemental metrics that provide context but **do not affect the score**:

| Metric | Values | Purpose |
|--------|--------|---------|
| Safety (S) | Negligible/Present | Safety impact (ICS/OT systems) |
| Automatable (AU) | No/Yes | Can the attack be automated? |
| Recovery (R) | Automatic/User/Irrecoverable | System recovery difficulty |
| Value Density (V) | Diffuse/Concentrated | Target value concentration |
| Vulnerability Response Effort (RE) | Low/Moderate/High | Effort to respond |
| Provider Urgency (U) | Clear/Green/Amber/Red | Vendor urgency rating |

**Use Cases**:

- **Safety**: Critical for ICS/SCADA vulnerability prioritization
- **Automatable**: Indicates wormable vulnerabilities
- **Provider Urgency**: Vendor-supplied priority signal

## 2. SCANNER DISCREPANCIES ANALYSIS

### 2.1 Trivy vs Grype Comparative Study (927 images)

**Findings**:

- The tools disagreed on both total vulnerability counts and specific CVE IDs
- Grype: ~603,259 vulns; Trivy: ~473,661 vulns
- Exact match in only 9.2% of cases (80 out of 865 vulnerable images)
- Even when counts matched, the specific vulnerability IDs differed

**Root Causes**:

- Divergent vulnerability databases
- Differing matching logic
- Incomplete visibility

### 2.2 VEX Tools Consistency Study (2025)

**Tools Tested**:

- Trivy
- Grype
- OWASP DepScan
- Docker Scout
- Snyk CLI
- OSV-Scanner
- Vexy

**Results**:

- Low consistency/similarity across container scanners
- DepScan: 18,680 vulns; Vexy: 191 vulns (about two orders of magnitude apart)
- Pairwise Jaccard indices very low (near 0)
- The 4 most consistent tools shared only ~18% common vulnerabilities
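
The Jaccard index used in these studies is the size of the intersection of two tools' finding sets divided by the size of their union. A minimal sketch for comparing scanners' CVE sets follows; the function names are hypothetical, and treating two empty sets as identical (index 1.0) is a convention chosen here.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """|A ∩ B| / |A ∪ B|; defined as 1.0 when both sets are empty."""
    union = a | b
    if not union:
        return 1.0
    return len(a & b) / len(union)


def pairwise_jaccard(results: dict[str, set[str]]) -> dict[tuple[str, str], float]:
    """Jaccard index for every unordered pair of tools, keyed in sorted order."""
    return {
        (x, y): jaccard(results[x], results[y])
        for x, y in combinations(sorted(results), 2)
    }
```

For example, finding sets {A, B, C} and {B, C, D} share 2 of 4 distinct IDs, giving 0.5. Real scanner output needs ID normalization first (for example, GHSA versus CVE aliases), otherwise the index understates agreement.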

### 2.3 Implications for StellaOps

**Moats Needed**:

- Golden-fixture benchmarks (container images with known, audited vulnerabilities)
- Deterministic, replayable scans
- Cryptographic integrity
- VEX/SBOM proofs

**Metrics**:

- **Closure rate**: Time from flagged to confirmed exploitable
- **Proof coverage**: % of dependencies with valid SBOM/VEX proofs
- **Differential-closure**: Impact of database updates or policy changes on prior scan results

### 2.4 Deterministic Receipt System

Every CVSS scoring decision in StellaOps is captured in a **deterministic receipt** that enables audit-grade reproducibility.

**Receipt Schema**:

```json
{
  "receiptId": "uuid",
  "inputHash": "sha256:...",
  "baseMetrics": { ... },
  "threatMetrics": { ... },
  "environmentalMetrics": { ... },
  "supplementalMetrics": { ... },
  "scores": {
    "baseScore": 9.1,
    "threatScore": 8.3,
    "environmentalScore": null,
    "fullScore": null,
    "effectiveScore": 8.3,
    "effectiveScoreType": "threat"
  },
  "policyRef": "policy/cvss-v4-default@v1.2.0",
  "policyDigest": "sha256:...",
  "evidence": [ ... ],
  "attestationRefs": [ ... ],
  "createdAt": "2025-12-14T00:00:00Z"
}
```

**InputHash Computation**:

```
inputHash = SHA256(canonicalize({
    baseMetrics,
    threatMetrics,
    environmentalMetrics,
    supplementalMetrics,
    policyRef,
    policyDigest
}))
```
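
A minimal Python sketch of the `inputHash` computation. The document does not define `canonicalize`; this sketch assumes a simple canonical JSON form (sorted keys, no insignificant whitespace, UTF-8), which is close to but not the same as RFC 8785 (JCS), for example in number formatting. The field list follows the formula; the helper names are hypothetical.

```python
import hashlib
import json
from typing import Any

HASHED_FIELDS = (
    "baseMetrics", "threatMetrics", "environmentalMetrics",
    "supplementalMetrics", "policyRef", "policyDigest",
)


def canonicalize(obj: Any) -> bytes:
    # Assumption: sorted keys + compact separators approximates canonical JSON.
    return json.dumps(obj, sort_keys=True, separators=(",", ":"), ensure_ascii=False).encode("utf-8")


def input_hash(receipt_inputs: dict[str, Any]) -> str:
    """SHA-256 over the canonicalized scoring inputs, prefixed like the receipt schema."""
    # Only the hashed fields participate; a missing field is hashed as null.
    payload = {field: receipt_inputs.get(field) for field in HASHED_FIELDS}
    return "sha256:" + hashlib.sha256(canonicalize(payload)).hexdigest()
```

Because only the listed fields are hashed, metadata such as `receiptId` or `createdAt` can differ between two receipts while their `inputHash` stays identical.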

**Determinism Guarantees**:

- Same inputs -> same `inputHash` -> same scores
- Receipts are immutable once created
- Amendments create new receipts with a `supersedes` reference
- Optional DSSE signatures for cryptographic binding

**Implementation**: `src/Policy/StellaOps.Policy.Scoring/Receipts/ReceiptBuilder.cs`

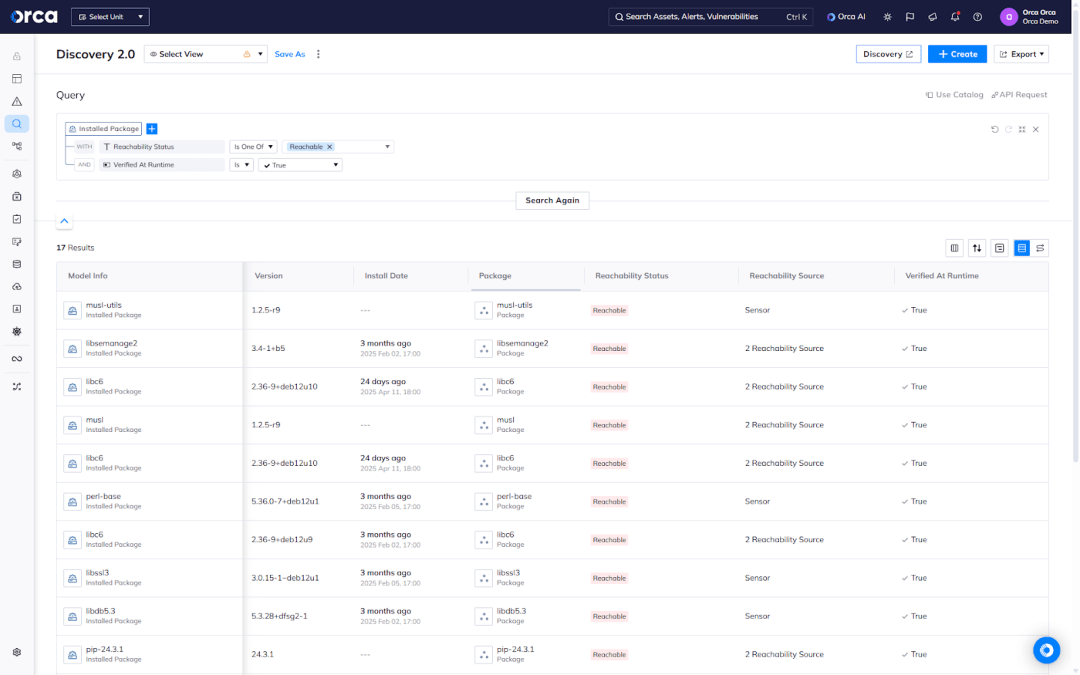

## 3. RUNTIME REACHABILITY APPROACHES

### 3.1 Runtime-Aware Vulnerability Prioritization

**Approach**:

- Monitor container workloads at runtime to determine which vulnerable components are actually used
- Use eBPF-based monitors, dynamic tracers, or built-in profiling
- Construct a runtime call graph or dependency graph
- Map vulnerabilities to code entities (functions/modules)
- If the execution trace covers an entity, the vulnerability is "reachable"

**Findings**: ~85% of critical vulnerabilities in containers are in inactive code (Sysdig)

### 3.2 Reachability Analysis Techniques

**Static**:

- Call-graph analysis (Snyk reachability, CodeQL)
- Covers all possible paths

**Dynamic**:

- Runtime observation (loaded modules, invoked functions)
- Covers actual runtime paths

**Granularity Levels**:

- Function-level (precise, limited languages: Java, .NET)
- Package/module-level (broader, coarse)

**Hybrid Approach**: Combine static (all possible paths) + dynamic (actual runtime paths)
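
The hybrid approach can be sketched as two independent signals about the same vulnerable symbol: a static breadth-first search from entrypoints over a call graph (every possible path) and a set of symbols observed at runtime (actual paths). This is a minimal Python sketch; the graph shape, symbol names, and the three labels are illustrative assumptions, not StellaOps reachability classes.

```python
from collections import deque
from typing import Optional


def static_hops(call_graph: dict[str, list[str]], entrypoints: list[str], target: str) -> Optional[int]:
    """Shortest number of call edges from any entrypoint to target (BFS), or None."""
    seen = set(entrypoints)
    queue = deque((e, 0) for e in entrypoints)
    while queue:
        node, hops = queue.popleft()
        if node == target:
            return hops
        for callee in call_graph.get(node, []):
            if callee not in seen:
                seen.add(callee)
                queue.append((callee, hops + 1))
    return None


def classify(call_graph: dict[str, list[str]], entrypoints: list[str],
             target: str, runtime_symbols: set[str]) -> str:
    """Combine dynamic (observed) and static (possible) evidence for one symbol."""
    # Runtime observation wins even when the static graph misses the path
    # (reflection, dynamic dispatch, or an incomplete call graph).
    if target in runtime_symbols:
        return "runtime-observed"
    if static_hops(call_graph, entrypoints, target) is not None:
        return "statically-reachable"  # a path exists but was not observed
    return "unreachable"
```

The BFS hop count is the same quantity the hop-bucket reachability scoring consumes, so one traversal can feed both classification and scoring.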

## 4. CONTAINER PROVENANCE & SUPPLY CHAIN

### 4.1 In-Toto/DSSE Framework (NDSS 2024)

**Purpose**:

- Track chain of custody in software builds
- Signed metadata (attestations) for each step
- DSSE: Dead Simple Signing Envelope for standardized signing
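
DSSE signs a pre-authentication encoding (PAE) of the payload type and payload rather than the raw payload, which binds the type to the signature. A minimal Python sketch of PAE and envelope construction follows. HMAC-SHA256 stands in for a real asymmetric signature (such as Ed25519 or ECDSA) only to keep the example standard-library-only; the key, key ID, and helper names are placeholders.

```python
import base64
import hashlib
import hmac


def pae(payload_type: str, payload: bytes) -> bytes:
    """DSSE v1 pre-authentication encoding: 'DSSEv1 <len> <type> <len> <body>'."""
    t = payload_type.encode("utf-8")
    return b"DSSEv1 %d %s %d %s" % (len(t), t, len(payload), payload)


def sign_envelope(payload_type: str, payload: bytes, key: bytes, keyid: str) -> dict:
    # Stand-in signer: real DSSE uses asymmetric keys (e.g., Ed25519, ECDSA).
    sig = hmac.new(key, pae(payload_type, payload), hashlib.sha256).digest()
    return {
        "payloadType": payload_type,
        "payload": base64.b64encode(payload).decode("ascii"),
        "signatures": [{"keyid": keyid, "sig": base64.b64encode(sig).decode("ascii")}],
    }


def verify_envelope(env: dict, key: bytes) -> bool:
    """Recompute PAE from the envelope and check any signature against it."""
    payload = base64.b64decode(env["payload"])
    expected = hmac.new(key, pae(env["payloadType"], payload), hashlib.sha256).digest()
    return any(
        hmac.compare_digest(base64.b64decode(s["sig"]), expected)
        for s in env["signatures"]
    )
```

Because the type is inside the signed bytes, relabeling an in-toto statement (`application/vnd.in-toto+json`) as a different payload type invalidates the signature.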

### 4.2 Scudo System

**Features**:

- Combines in-toto with Uptane
- Verifies both the build process and the final image
- Full verification on the client is inefficient, so in-toto verification happens upstream and the client trusts the verified summary
- Client checks final signature + hash only

### 4.3 Supply Chain Verification

**Signers**:

- Developer key signs code commit
- CI key signs build attestation
- Scanner key signs vulnerability attestation
- Release key signs container image

**Verification Optimization**: Repository verifies in-toto attestations; client verifies final metadata only

## 5. VENDOR EVIDENCE PATTERNS

### 5.1 Snyk

**Evidence Handling**:

- Runtime insights integration (Nov 2025)
- Evolution from static-scan noise to prioritized workflow
- Deployment context awareness

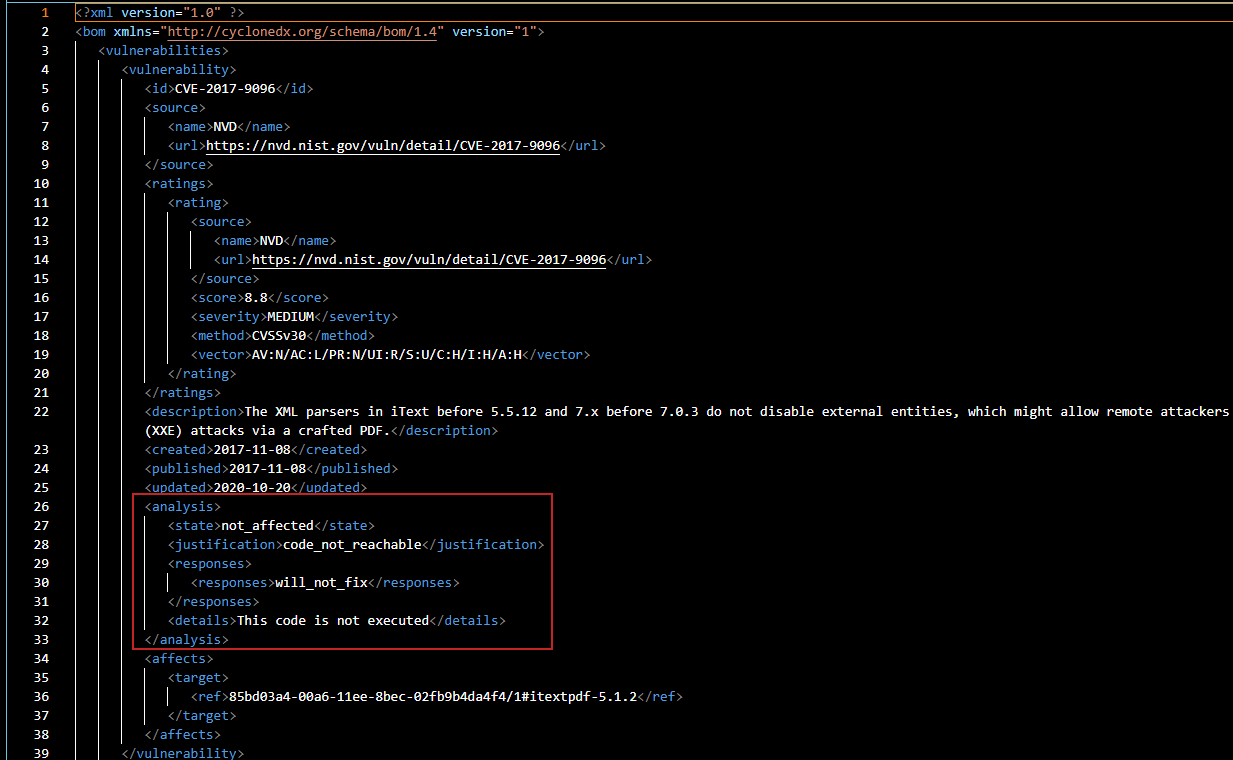

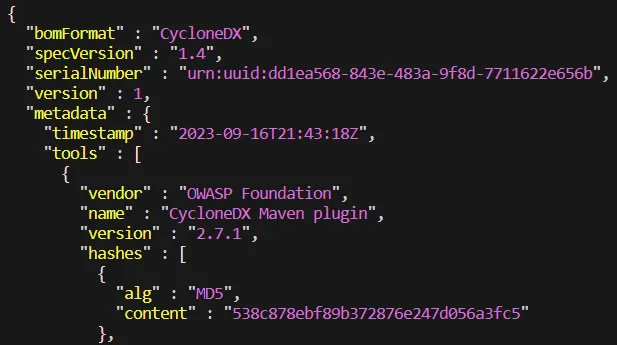

**VEX Support**:

- CycloneDX VEX format
- Reachability-aware suppression

### 5.2 GitHub Advanced Security

**Features**:

- CodeQL for static analysis
- Dependency graph
- Dependabot alerts
- Security advisories

**Evidence**:

- SARIF output
- SBOM generation (SPDX)

### 5.3 Aqua Security

**Approach**:

- Runtime protection
- Image scanning
- Kubernetes security

**Evidence**:

- Dynamic runtime traces
- Network policy violations

### 5.4 Anchore/Grype

**Features**:

- Open-source scanner
- Policy-based compliance
- SBOM generation

**Evidence**:

- CycloneDX/SPDX SBOM
- Vulnerability reports (JSON)

### 5.5 Prisma Cloud

**Features**:

- Cloud-native security
- Runtime defense
- Compliance monitoring

**Evidence**:

- Multi-cloud attestations
- Compliance reports

## 6. STELLAOPS DIFFERENTIATORS

### 6.1 Reachability-with-Evidence

**Why it Matters**:

- Snyk Container integrating runtime insights as a "signal" (Nov 2025)
- Evolution from static-scan noise to prioritized, actionable workflow
- Deployment context: what's running, what's reachable, what's exploitable

**Implication**: Container security triage relies on runtime/context signals

### 6.2 Proof-First Architecture

**Advantages**:

- Every claim backed by DSSE-signed attestations
- Cryptographic integrity
- Audit trail
- Offline verification

### 6.3 Deterministic Scanning

**Advantages**:

- Reproducible results
- Bit-identical outputs given same inputs
- Replay manifests
- Golden fixture benchmarks

### 6.4 VEX-First Decisioning

**Advantages**:

- Exploitability modeled in OpenVEX
- Lattice logic for stable outcomes
- Evidence-linked justifications

### 6.5 Offline/Air-Gap First

**Advantages**:

- No hidden network dependencies
- Bundled feeds, keys, Rekor snapshots
- Verifiable without internet access

### 6.6 CVSS + KEV Risk Signal Combination

StellaOps combines CVSS scores with KEV (Known Exploited Vulnerabilities) data using a deterministic formula:

**Risk Formula**:

```
risk_score = clamp01((cvss / 10) + kevBonus)

where:
  kevBonus = 0.2 if the vulnerability is in the CISA KEV catalog
  kevBonus = 0.0 otherwise
```
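
A minimal sketch of the CVSS + KEV formula. It uses `Decimal` rather than binary floats so that, for example, CVSS 7.5 with KEV yields exactly 0.95 on every platform. The function name is hypothetical; the provider that implements this in StellaOps is `CvssKevProvider.cs`.

```python
from decimal import Decimal

KEV_BONUS = Decimal("0.2")


def cvss_kev_risk(cvss: float, in_kev: bool) -> Decimal:
    """clamp01(cvss / 10 + kevBonus), computed in decimal arithmetic."""
    raw = Decimal(str(cvss)) / 10 + (KEV_BONUS if in_kev else Decimal("0"))
    return min(max(raw, Decimal("0")), Decimal("1"))
```

Running it over the CVSS/KEV pairs in the Example Calculations table reproduces every Risk Score there, including the clamp of 9.0 + KEV (1.1) down to 1.0.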

**Example Calculations**:

| CVSS Score | KEV Flag | Risk Score |
|------------|----------|------------|
| 9.0 | No | 0.90 |
| 9.0 | Yes | 1.00 (clamped) |
| 7.5 | No | 0.75 |
| 7.5 | Yes | 0.95 |
| 5.0 | No | 0.50 |
| 5.0 | Yes | 0.70 |

**Rationale**:

- KEV inclusion indicates active exploitation
- The fixed +0.2 bonus (20 percentage points on the 0-1 scale) prioritizes known-exploited vulnerabilities over theoretical risks
- Clamping prevents scores > 1.0
- Deterministic formula enables reproducible prioritization

**Implementation**: `src/RiskEngine/StellaOps.RiskEngine/StellaOps.RiskEngine.Core/Providers/CvssKevProvider.cs`

## 7. COMPETITIVE POSITIONING

### 7.1 Market Segments

| Vendor | Strength | Weakness vs StellaOps |
|--------|----------|----------------------|
| Snyk | Developer experience | Less deterministic, SaaS-only |
| Aqua | Runtime protection | Less reachability precision |
| Anchore | Open-source, SBOM | Less proof infrastructure |
| Prisma Cloud | Cloud-native breadth | Less offline/air-gap support |
| GitHub | Integration with dev workflow | Less cryptographic proof chain |

### 7.2 StellaOps Unique Value

1. **Deterministic + Provable**: Bit-identical scans with cryptographic proofs
2. **Reachability + Runtime**: Hybrid static/dynamic analysis
3. **Offline/Sovereign**: Air-gap operation with regional crypto (FIPS/GOST/eIDAS/SM)
4. **VEX-First**: Evidence-backed decisioning, not just alerting
5. **AGPL-3.0**: Self-hostable, no vendor lock-in

## 8. MOAT METRICS

### 8.1 Proof Coverage

```
proof_coverage = findings_with_valid_receipts / total_findings
Target: ≥95%
```

### 8.2 Closure Rate

```
closure_rate = time_from_flagged_to_confirmed_exploitable
Target: P95 < 24 hours
```

### 8.3 Differential-Closure Impact

```
differential_impact = findings_changed_after_db_update / total_findings
Target: <5% (non-code changes)
```

### 8.4 False Positive Reduction

```
fp_reduction = (baseline_fp_rate - stella_fp_rate) / baseline_fp_rate
Target: ≥50% vs baseline scanner
```

### 8.5 Reachability Accuracy

```
reachability_accuracy = correct_r0_r1_r2_r3_classifications / total_classifications
Target: ≥90%
```
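
The moat metrics reduce to simple ratios plus one percentile. A minimal Python sketch that evaluates them against the stated targets follows. The function names and inputs are hypothetical, and the P95 uses the nearest-rank method, which is an assumption because the closure-rate target does not name a percentile definition.

```python
from datetime import timedelta


def ratio(part: int, whole: int) -> float:
    """Shared helper for proof coverage, differential impact, and reachability accuracy."""
    if whole <= 0:
        raise ValueError("denominator must be positive")
    return part / whole


def fp_reduction(baseline_fp_rate: float, stella_fp_rate: float) -> float:
    return (baseline_fp_rate - stella_fp_rate) / baseline_fp_rate


def p95_nearest_rank(durations: list[timedelta]) -> timedelta:
    """95th percentile by nearest rank: the ceil(0.95 * n)-th smallest value."""
    if not durations:
        raise ValueError("no durations")
    ordered = sorted(durations)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), no float rounding
    return ordered[rank - 1]


def meets_targets(proof_cov: float, closure_p95: timedelta, diff_impact: float,
                  fp_red: float, reach_acc: float) -> dict[str, bool]:
    return {
        "proof_coverage": proof_cov >= 0.95,
        "closure_rate": closure_p95 < timedelta(hours=24),
        "differential_impact": diff_impact < 0.05,
        "fp_reduction": fp_red >= 0.50,
        "reachability_accuracy": reach_acc >= 0.90,
    }
```

Computing the percentile rank with integers keeps the metric itself deterministic, in line with the rest of the receipt pipeline.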

## 9. COMPETITIVE INTELLIGENCE TRACKING

### 9.1 Feature Parity Matrix

| Feature | Snyk | Aqua | Anchore | Prisma | StellaOps |
|---------|------|------|---------|--------|-----------|
| SBOM Generation | ✓ | ✓ | ✓ | ✓ | ✓ |
| VEX Support | ✓ | ✗ | Partial | ✗ | ✓ |
| Reachability Analysis | ✓ | ✗ | ✗ | ✗ | ✓ |
| Runtime Evidence | ✓ | ✓ | ✗ | ✓ | ✓ |
| Cryptographic Proofs | ✗ | ✗ | ✗ | ✗ | ✓ |
| Deterministic Scans | ✗ | ✗ | ✗ | ✗ | ✓ |
| Offline/Air-Gap | ✗ | Partial | ✗ | ✗ | ✓ |
| Regional Crypto | ✗ | ✗ | ✗ | ✗ | ✓ |

### 9.2 Monitoring Strategy

- Track vendor release notes
- Monitor GitHub repos for feature announcements
- Participate in security conferences
- Engage with customer feedback
- Update the competitive matrix quarterly

## 10. MESSAGING FRAMEWORK

### 10.1 Core Message

"StellaOps provides deterministic, proof-backed vulnerability management with reachability analysis for offline/air-gapped environments."

### 10.2 Key Differentiators (Elevator Pitch)

1. **Deterministic**: Same inputs → same outputs, every time
2. **Provable**: Cryptographic proof chains for every decision
3. **Reachable**: Static + runtime analysis, not just presence
4. **Sovereign**: Offline operation, regional crypto compliance
5. **Open**: AGPL-3.0, self-hostable, no lock-in

### 10.3 Target Personas

- **Security Engineers**: Need proof-backed decisions for audits
- **DevOps Teams**: Need deterministic scans in CI/CD
- **Compliance Officers**: Need offline/air-gap for regulated environments
- **Platform Engineers**: Need self-hostable, sovereign solution

## 11. BENCHMARKING PROTOCOL

### 11.1 Comparative Test Suite

**Images**:

- 50 representative production images
- Known vulnerabilities labeled
- Reachability ground truth established

**Metrics**:

- Precision (TP / (TP + FP))
- Recall (TP / (TP + FN))
- F1 score (harmonic mean of precision and recall)
- Scan time (P50, P95)
- Determinism (identical outputs over 10 runs)
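
The accuracy and determinism metrics can be computed per image from a tool's finding IDs and the labeled ground truth. A minimal Python sketch with hypothetical helper names follows; returning 0.0 on an empty denominator is a convention chosen here, and real tool outputs need ID normalization (for example, GHSA versus CVE aliases) before the set comparison is meaningful.

```python
import hashlib


def confusion(found: set[str], truth: set[str]) -> tuple[int, int, int]:
    """(TP, FP, FN) for one image, comparing finding IDs against ground truth."""
    return len(found & truth), len(found - truth), len(truth - found)


def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn) if tp + fn else 0.0


def f1(tp: int, fp: int, fn: int) -> float:
    # Algebraically equal to 2PR / (P + R), without intermediate float rounding.
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def is_deterministic(run_outputs: list[bytes]) -> bool:
    """True when every run produced byte-identical output (e.g., 10 runs)."""
    digests = {hashlib.sha256(out).hexdigest() for out in run_outputs}
    return len(digests) == 1
```

Hashing whole outputs makes the determinism check strict: any reordering or timestamp in the report counts as a difference, which is the intended bit-identical standard.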

### 11.2 Test Execution

```bash
# Run StellaOps scan
stellaops scan --image test-image:v1 --output stella-results.json

# Run competitor scans
trivy image --format json test-image:v1 > trivy-results.json
grype test-image:v1 -o json > grype-results.json
snyk container test test-image:v1 --json > snyk-results.json

# Compare results
stellaops benchmark compare \
  --ground-truth ground-truth.json \
  --stella stella-results.json \
  --trivy trivy-results.json \
  --grype grype-results.json \
  --snyk snyk-results.json
```

### 11.3 Results Publication

- Publish benchmarks quarterly
- Open-source test images and ground truth
- Invite community contributions
- Document methodology transparently

---

**Document Version**: 1.0

**Target Platform**: .NET 10, PostgreSQL ≥16, Angular v17

@@ -0,0 +1,817 @@

# Determinism and Reproducibility Technical Reference

**Source Advisories**:

- 07-Dec-2025 - Designing Deterministic Vulnerability Scores
- 12-Dec-2025 - Designing a Deterministic Vulnerability Scoring Matrix
- 12-Dec-2025 - Replay Fidelity as a Proof Metric
- 01-Dec-2025 - Benchmarks for a Testable Security Moat
- 02-Dec-2025 - Benchmarking a Testable Security Moat

**Last Updated**: 2025-12-14

---

## 1. SCORE FORMULA (BASIS POINTS)

**Total Score:**

```
riskScore = (wB*B + wR*R + wE*E + wP*P) / 10000
```

With subscores B, R, E, and P on a 0-100 scale and weights summing to 10000, `riskScore` is also on a 0-100 scale.

**Default Weights (basis points, sum = 10000):**

- `wB=1000` (10%) - Base Severity
- `wR=4500` (45%) - Reachability
- `wE=3000` (30%) - Evidence
- `wP=1500` (15%) - Provenance
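
Because the weights are integer basis points and the subscores are integers in 0-100, the total can be computed without floating point. A minimal Python sketch with a hypothetical function name; the final rounding rule (half up to the nearest integer) is an assumption, chosen because it reproduces the `riskScore` of the ScoreResult example in section 4.2 from that example's subscores, whereas truncating division would not.

```python
DEFAULT_WEIGHTS_BPS = {"B": 1000, "R": 4500, "E": 3000, "P": 1500}  # sum = 10000


def weighted_risk_score(b: int, r: int, e: int, p: int,
                        weights: dict[str, int] = DEFAULT_WEIGHTS_BPS) -> int:
    """riskScore = (wB*B + wR*R + wE*E + wP*P) / 10000, using integers only."""
    if sum(weights.values()) != 10000:
        raise ValueError("weights must sum to 10000 bps")
    for name, value in (("B", b), ("R", r), ("E", e), ("P", p)):
        if not 0 <= value <= 100:
            raise ValueError(f"subscore {name} out of range: {value}")
    total = weights["B"] * b + weights["R"] * r + weights["E"] * e + weights["P"] * p
    # Assumption: round half up to the nearest integer.
    return (total + 5000) // 10000
```

For instance, B=75, R=85, E=60, P=60 gives a weighted total of 727500, which is 72.75 and rounds to 73.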

## 2. SUBSCORE DEFINITIONS (0-100 integers)

### 2.1 BaseSeverity (B)

```
B = round(CVSS * 10)   // CVSS 0.0-10.0 → 0-100
```

### 2.2 Reachability (R)

Hop Buckets:

```
0-2 hops:     100
3 hops:       85
4 hops:       70
5 hops:       55
6 hops:       45
7 hops:       35
8+ hops:      20
unreachable:  0
```

Gate Multipliers (in basis points):

```
behind feature flag:  ×7000
auth required:        ×8000
admin only:           ×8500
non-default config:   ×7500
```

Final R:

```
R = bucketScore * gateMultiplier / 10000
```
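
A minimal Python sketch of the reachability subscore using the default hop buckets and gate multipliers. Three points are assumptions rather than documented behavior: no gate means a multiplier of 10000, several gates are applied one after another, and each step uses integer floor division. Gates are applied in sorted order because floor division makes the result order-sensitive, so a fixed order keeps it deterministic. Function names are hypothetical.

```python
from typing import Iterable, Optional

# Defaults from this section (score.v1).
HOP_BUCKETS = [(2, 100), (3, 85), (4, 70), (5, 55), (6, 45), (7, 35)]
FAR_SCORE = 20  # 8+ hops
GATE_BPS = {"featureFlag": 7000, "authRequired": 8000, "adminOnly": 8500, "nonDefaultConfig": 7500}


def bucket_score(hops: Optional[int]) -> int:
    if hops is None:  # unreachable
        return 0
    for max_hops, score in HOP_BUCKETS:
        if hops <= max_hops:
            return score
    return FAR_SCORE


def reachability(hops: Optional[int], gates: Iterable[str] = ()) -> int:
    """R = bucketScore * gateMultiplier / 10000, one gate at a time (assumed)."""
    r = bucket_score(hops)
    for gate in sorted(gates):  # fixed order: floor division is order-sensitive
        r = r * GATE_BPS[gate] // 10000
    return r
```

For example, a path 3 hops deep behind an authentication check scores 85 x 8000 / 10000 = 68.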

### 2.3 Evidence (E)

Points:

```
runtime trace:           +60
DAST/integration test:   +30
SAST precise sink:       +20
SCA presence only:       +10
```

Freshness Multiplier (basis points):

```
≤ 7 days:     ×10000
≤ 30 days:    ×9000
≤ 90 days:    ×7500
≤ 180 days:   ×6000
≤ 365 days:   ×4000
> 365 days:   ×2000
```

Final E:

```
E = min(100, sum(points)) * freshness / 10000
```
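
A minimal Python sketch of the evidence subscore with the default points and freshness buckets. It assumes each evidence kind counts once and that a single age (in days) applies to the whole evidence set; both assumptions, and the function names, are illustrative.

```python
EVIDENCE_POINTS = {"runtime": 60, "dast": 30, "sast": 20, "sca": 10}
FRESHNESS_BPS = [(7, 10000), (30, 9000), (90, 7500), (180, 6000), (365, 4000)]
STALE_BPS = 2000  # > 365 days


def freshness_bps(age_days: int) -> int:
    for max_age, bps in FRESHNESS_BPS:
        if age_days <= max_age:
            return bps
    return STALE_BPS


def evidence(kinds: set[str], age_days: int) -> int:
    """E = min(100, sum(points)) * freshness / 10000, in integer arithmetic."""
    points = min(100, sum(EVIDENCE_POINTS[k] for k in kinds))
    return points * freshness_bps(age_days) // 10000
```

A 20-day-old runtime trace therefore scores 60 x 9000 / 10000 = 54, while runtime + DAST + SAST evidence (110 points) is capped at 100 before freshness applies.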

### 2.4 Provenance (P)

```
unsigned/unknown:                    0
signed image:                        30
signed + SBOM hash-linked:           60
signed + SBOM + DSSE attestations:   80
above + reproducible build match:    100
```

## 3. SCORE POLICY YAML SCHEMA

```yaml
policyVersion: score.v1

weightsBps:
  baseSeverity: 1000
  reachability: 4500
  evidence: 3000
  provenance: 1500

reachability:
  hopBuckets:
    - { maxHops: 2, score: 100 }
    - { maxHops: 3, score: 85 }
    - { maxHops: 4, score: 70 }
    - { maxHops: 5, score: 55 }
    - { maxHops: 6, score: 45 }
    - { maxHops: 7, score: 35 }
    - { maxHops: 9999, score: 20 }
  unreachableScore: 0
  gateMultipliersBps:
    featureFlag: 7000
    authRequired: 8000
    adminOnly: 8500
    nonDefaultConfig: 7500

evidence:
  points:
    runtime: 60
    dast: 30
    sast: 20
    sca: 10
  freshnessBuckets:
    - { maxAgeDays: 7, multiplierBps: 10000 }
    - { maxAgeDays: 30, multiplierBps: 9000 }
    - { maxAgeDays: 90, multiplierBps: 7500 }
    - { maxAgeDays: 180, multiplierBps: 6000 }
    - { maxAgeDays: 365, multiplierBps: 4000 }
    - { maxAgeDays: 99999, multiplierBps: 2000 }

provenance:
  levels:
    unsigned: 0
    signed: 30
    signedWithSbom: 60
    signedWithSbomAndAttestations: 80
    reproducible: 100

overrides:
  - name: knownExploitedAndReachable
    when:
      flags:
        knownExploited: true
      minReachability: 70
    setScore: 95

  - name: unreachableAndOnlySca
    when:
      maxReachability: 0
      maxEvidence: 10
    clampMaxScore: 25
```
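
The two `overrides` entries can be read as post-processing rules on the computed score. A minimal Python sketch of one plausible reading follows. The policy does not say whether several overrides may apply to one finding, so evaluating them in declaration order with every match applying is an assumption, as are the dataclass, field, and function names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Override:
    name: str
    required_flags: frozenset = frozenset()   # flags that must all be set
    min_reachability: Optional[int] = None
    max_reachability: Optional[int] = None
    max_evidence: Optional[int] = None
    set_score: Optional[int] = None           # replace the score outright
    clamp_max_score: Optional[int] = None     # cap the score


OVERRIDES = (
    Override("knownExploitedAndReachable", frozenset({"knownExploited"}),
             min_reachability=70, set_score=95),
    Override("unreachableAndOnlySca", max_reachability=0, max_evidence=10,
             clamp_max_score=25),
)


def matches(o: Override, r: int, e: int, flags: set) -> bool:
    if not o.required_flags <= flags:
        return False
    if o.min_reachability is not None and r < o.min_reachability:
        return False
    if o.max_reachability is not None and r > o.max_reachability:
        return False
    if o.max_evidence is not None and e > o.max_evidence:
        return False
    return True


def apply_overrides(score: int, r: int, e: int, flags: set) -> int:
    """Apply every matching override in declaration order (assumed semantics)."""
    for o in OVERRIDES:
        if matches(o, r, e, flags):
            if o.set_score is not None:
                score = o.set_score
            if o.clamp_max_score is not None:
                score = min(score, o.clamp_max_score)
    return score
```

Under this reading `setScore` can raise or lower a score, while `clampMaxScore` only ever lowers it.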

## 4. SCORE DATA CONTRACTS

### 4.1 ScoreInput

```json
{
  "asOf": "2025-12-14T10:20:30Z",
  "policyVersion": "score.v1",
  "reachabilityDigest": "sha256:...",
  "evidenceDigest": "sha256:...",
  "provenanceDigest": "sha256:...",
  "baseSeverityDigest": "sha256:..."
}
```

### 4.2 ScoreResult

```json
{
  "scoreId": "score_...",
  "riskScore": 73,
  "subscores": {
    "baseSeverity": 75,
    "reachability": 85,
    "evidence": 60,
    "provenance": 60
  },
  "cvss": {
    "v": "3.1",
    "base": 7.5,
    "environmental": 5.3,
    "vector": "CVSS:3.1/AV:N/AC:L/..."
  },
  "inputsRef": ["evidence_sha256:...", "env_sha256:..."],
  "policyVersion": "score.v1",
  "policyDigest": "sha256:...",
  "engineVersion": "stella-scorer@1.8.2",
  "computedAt": "2025-12-09T10:20:30Z",
  "resultDigest": "sha256:...",
  "explain": [
    {"factor": "reachability", "value": 85, "reason": "3 hops from HTTP endpoint"},
    {"factor": "evidence", "value": 60, "reason": "Runtime trace (60pts), 20 days old (×90%)"}
  ]
}
```
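
The `riskScore` in this example can be reproduced from `subscores` and `weightsBps` using integer arithmetic only. The sketch below is hypothetical. It assumes subscores are already whole points, that overrides are checked in the order listed in score.v1 with the first match winning, and that `knownExploited` arrives as a boolean flag.

```csharp
using System;

// Hypothetical combiner for score.v1: weighted sum in basis points, then overrides.
public static class RiskScoreSketch
{
    // weightsBps from score.v1; they sum to 10000 (100%).
    private const long WBase = 1000, WReach = 4500, WEvidence = 3000, WProvenance = 1500;

    // Subscores are whole points in 0..100. Returns the final 0..100 risk score.
    public static int Compute(int baseSeverity, int reachability, int evidence, int provenance, bool knownExploited)
    {
        // Weighted sum stays in "points x bps" units; no floating point involved.
        long weighted = baseSeverity * WBase + reachability * WReach
                      + evidence * WEvidence + provenance * WProvenance;

        // Round half up to whole points only at the end (weighted is never negative).
        int score = (int)((weighted + 5_000) / 10_000);

        // Override knownExploitedAndReachable: setScore 95.
        if (knownExploited && reachability >= 70)
            return 95;

        // Override unreachableAndOnlySca: clampMaxScore 25.
        if (reachability <= 0 && evidence <= 10)
            return Math.Min(score, 25);

        return score;
    }
}
```

For the example above, 75×1000 + 85×4500 + 60×3000 + 60×1500 = 727,500. That is 72.75 points, which rounds half-up to the reported 73.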

### 4.3 ReachabilityReport

```json
{
  "artifactDigest": "sha256:...",
  "graphDigest": "sha256:...",
  "vulnId": "CVE-2024-1234",
  "vulnerableSymbol": "org.example.VulnClass.vulnMethod",
  "entrypoints": ["POST /api/upload"],
  "shortestPath": {
    "hops": 3,
    "nodes": [
      {"symbol": "UploadController.handleUpload", "file": "Controller.cs", "line": 42},
      {"symbol": "ProcessorService.process", "file": "Service.cs", "line": 18},
      {"symbol": "org.example.VulnClass.vulnMethod", "file": null, "line": null}
    ]
  },
  "gates": [
    {"type": "authRequired", "detail": "Requires JWT token"},
    {"type": "featureFlag", "detail": "FEATURE_UPLOAD_V2=true"}
  ],
  "computedAt": "2025-12-14T10:15:30Z",
  "toolVersion": "reachability-analyzer@2.1.0"
}
```
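
`shortestPath.hops` can be computed with a breadth-first search over the call graph. This sketch assumes `hops` counts call edges starting from the entrypoint node (here `POST /api/upload`). Under that reading, the three listed nodes give `hops: 3`. The adjacency-list representation and the names are hypothetical. Callees are visited in ordinal order, so when several shortest paths exist, the same one is reported on every run.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical BFS over a call graph given as caller -> callees.
public static class ShortestPath
{
    // Returns the node sequence from entrypoint to target, or null if unreachable.
    public static List<string>? Find(
        IReadOnlyDictionary<string, string[]> callGraph, string entrypoint, string target)
    {
        var previous = new Dictionary<string, string?> { [entrypoint] = null };
        var queue = new Queue<string>();
        queue.Enqueue(entrypoint);

        while (queue.Count > 0)
        {
            var current = queue.Dequeue();
            if (current == target)
            {
                // Walk predecessors back to the entrypoint, then reverse.
                var path = new List<string>();
                for (string? n = current; n is not null; n = previous[n])
                    path.Add(n);
                path.Reverse();
                return path;
            }

            if (!callGraph.TryGetValue(current, out var callees))
                continue;

            // Ordinal order makes tie-breaking between equal-length paths deterministic.
            var sorted = (string[])callees.Clone();
            Array.Sort(sorted, StringComparer.Ordinal);
            foreach (var callee in sorted)
            {
                if (previous.ContainsKey(callee)) continue; // already discovered
                previous[callee] = current;
                queue.Enqueue(callee);
            }
        }

        return null; // no path: unreachable
    }
}
```

The hop count is `path.Count - 1`. A `null` result maps to `unreachableScore` in the policy.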

### 4.4 EvidenceBundle

```json
{
  "evidenceId": "sha256:...",
  "artifactDigest": "sha256:...",
  "vulnId": "CVE-2024-1234",
  "type": "RUNTIME",
  "tool": "runtime-tracer@1.0.0",
  "timestamp": "2025-12-10T14:30:00Z",
  "confidence": 95,
  "subject": "org.example.VulnClass.vulnMethod",
  "payloadDigest": "sha256:..."
}
```
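
This is one possible way to turn evidence bundles into the `evidence` subscore, using `points` and `freshnessBuckets` from score.v1. Age is measured from the explicit `asOf` (section 5.3). The result is in centipoints (100 = 1 point), so the 90% multiplier stays exact. All of the following are hypothetical assumptions: ages are whole days truncated, evidence newer than `asOf` is rejected, and points from several items are summed and capped at 100.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical evidence scorer for score.v1 (names are illustrative).
public static class EvidenceScore
{
    private static readonly Dictionary<string, int> Points = new()
    {
        ["RUNTIME"] = 60, ["DAST"] = 30, ["SAST"] = 20, ["SCA"] = 10,
    };

    // (maxAgeDays, multiplierBps) ordered by ascending age; the last bucket is a catch-all.
    private static readonly (int MaxAgeDays, int MultiplierBps)[] Freshness =
    {
        (7, 10000), (30, 9000), (90, 7500), (180, 6000), (365, 4000), (99999, 2000),
    };

    // Returns centipoints (100 = 1 point), capped at 100 points.
    public static long ComputeCentipoints(
        DateTimeOffset asOf, IEnumerable<(string Type, DateTimeOffset Timestamp)> items)
    {
        long total = 0;
        foreach (var (type, timestamp) in items)
        {
            if (!Points.TryGetValue(type, out var points))
                continue; // unknown evidence types contribute nothing

            long ageTicks = (asOf - timestamp).Ticks;
            if (ageTicks < 0)
                throw new ArgumentException($"Evidence timestamp {timestamp:O} is after asOf {asOf:O}.");

            long ageDays = ageTicks / TimeSpan.TicksPerDay; // whole days, integer math
            int multiplierBps = Freshness.First(b => ageDays <= b.MaxAgeDays).MultiplierBps;
            total += points * (long)multiplierBps / 100;
        }

        return Math.Min(total, 100 * 100L);
    }
}
```

Take the bundle above (`RUNTIME`, 2025-12-10T14:30:00Z) with the `asOf` of 2025-12-14T10:20:30Z from 4.1. The age is 3 days, so the full 60 points apply. A 20-day-old runtime trace would yield 5400 centipoints (54 points).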

### 4.5 ProvenanceReport

```json
{
  "artifactDigest": "sha256:...",
  "signatureChecks": [
    {"signer": "CI-KEY-1", "algorithm": "ECDSA-P256", "result": "VALID"}
  ],
  "sbomDigest": "sha256:...",
  "sbomType": "cyclonedx-1.6",
  "attestations": ["sha256:...", "sha256:..."],
  "transparencyLogRefs": ["rekor://..."],
  "reproducibleMatch": true,
  "computedAt": "2025-12-14T10:15:30Z",
  "toolVersion": "provenance-verifier@1.0.0"
}
```
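
Mapping a ProvenanceReport onto the cumulative provenance levels could look like the sketch below. The record types mirror only the report fields used here and are hypothetical. The rule that a single `VALID` signature check counts as signed is also an assumption. Checking that the SBOM is actually hash-linked is assumed to happen upstream.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical projections of the ProvenanceReport fields used for scoring.
public sealed record SignatureCheck(string Signer, string Algorithm, string Result);

public sealed record ProvenanceFacts(
    IReadOnlyList<SignatureCheck> SignatureChecks,
    string? SbomDigest,
    IReadOnlyList<string> Attestations,
    bool ReproducibleMatch);

public static class ProvenanceLevel
{
    // Levels are cumulative: each one requires everything below it.
    public static int Score(ProvenanceFacts r)
    {
        bool signed = r.SignatureChecks.Any(c => c.Result == "VALID");
        bool hasSbom = !string.IsNullOrEmpty(r.SbomDigest);
        bool attested = r.Attestations.Count > 0;

        if (!signed) return 0;               // unsigned
        if (!hasSbom) return 30;             // signed
        if (!attested) return 60;            // signedWithSbom
        if (!r.ReproducibleMatch) return 80; // signedWithSbomAndAttestations
        return 100;                          // reproducible
    }
}
```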

## 5. DETERMINISM CONSTRAINTS

### 5.1 Fixed-Point Math

- Use integer basis points (100% = 10,000 bps)
- No floating point in scoring math
- Round only at final display

### 5.2 Canonical Serialization

- Canonical JSON per RFC 8785 (JSON Canonicalization Scheme, JCS)
- Sort object keys as JCS specifies. JCS preserves array order, so sort arrays deterministically before serializing wherever their order carries no meaning
- Stable ordering for explanation lists by `(factorId, contributingObjectDigest)`
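
Sorting explanation entries by `(factorId, contributingObjectDigest)` with an ordinal comparer makes the output independent of both the current culture and the input order. `ExplainEntry` is a hypothetical shape. LINQ's `OrderBy`/`ThenBy` performs a stable sort.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical explanation entry keyed by (FactorId, ContributingObjectDigest).
public sealed record ExplainEntry(string FactorId, string ContributingObjectDigest, int Value, string Reason);

public static class ExplainOrdering
{
    // Ordinal comparison: byte-stable across machines and cultures.
    public static IReadOnlyList<ExplainEntry> Sort(IEnumerable<ExplainEntry> entries) =>
        entries
            .OrderBy(e => e.FactorId, StringComparer.Ordinal)
            .ThenBy(e => e.ContributingObjectDigest, StringComparer.Ordinal)
            .ToList();
}
```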

### 5.3 Time Handling

- No implicit time: scoring never reads the wall clock
- `asOf` is an explicit input
- Freshness = `asOf - evidence.timestamp`
- Use a monotonic clock only to measure internal durations (e.g., stage timings), never to produce values that feed a score

## 6. FIDELITY METRICS

### 6.1 Bitwise Fidelity (BF)

```
BF = identical_outputs / total_replays
Target: ≥ 0.98
```
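
Below is a sketch of computing BF from replay output digests. It is expressed in basis points so that it stays with the integer convention from 5.1. The method name is hypothetical, and so is the choice to compare digests of the full output.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class BitwiseFidelity
{
    // BF in basis points (10000 = 1.0): share of replays whose output digest equals the baseline.
    public static int ComputeBps(string baselineDigest, IReadOnlyCollection<string> replayDigests)
    {
        if (replayDigests.Count == 0)
            throw new ArgumentException("At least one replay is required.", nameof(replayDigests));

        int identical = replayDigests.Count(d => string.Equals(d, baselineDigest, StringComparison.Ordinal));
        return (int)(identical * 10_000L / replayDigests.Count);
    }
}
```

Forty-nine identical replays out of 50 give 9800 bps, which is exactly the 0.98 target.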

### 6.2 Semantic Fidelity (SF)

- Normalized object comparison (same packages, versions, CVEs, severities, verdicts)
- Allows formatting differences
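
One way to implement the normalized comparison is to project each finding onto the compared fields, normalize those fields, and compare the results as sets. The normalization rules are assumptions for illustration: trimming, case-folding package names, severities and verdicts, and upper-casing CVE IDs. A set comparison also ignores duplicate findings.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical projection of a finding onto the fields SF compares.
public readonly record struct NormalizedFinding(string Package, string Version, string Cve, string Severity, string Verdict);

public static class SemanticFidelity
{
    // True when both runs report the same findings, ignoring order and formatting.
    public static bool Matches(IEnumerable<NormalizedFinding> a, IEnumerable<NormalizedFinding> b) =>
        new HashSet<NormalizedFinding>(a.Select(Normalize)).SetEquals(b.Select(Normalize));

    // Assumed normalization: trim whitespace and case-fold labels; versions are kept verbatim.
    private static NormalizedFinding Normalize(NormalizedFinding f) => new(
        f.Package.Trim().ToLowerInvariant(),
        f.Version.Trim(),
        f.Cve.Trim().ToUpperInvariant(),
        f.Severity.Trim().ToLowerInvariant(),
        f.Verdict.Trim().ToLowerInvariant());
}
```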

### 6.3 Policy Fidelity (PF)

- Final policy decision (pass/fail + reason codes) matches

## 7. SCAN MANIFEST SCHEMA

```json
{
  "manifest_version": "1.0",
  "scan_id": "scan_123",
  "created_at": "2025-12-12T10:15:30Z",

  "input": {
    "type": "oci_image",
    "image_ref": "registry/app@sha256:...",
    "layers": ["sha256:...", "sha256:..."],
    "source_provenance": {"repo_sha": "abc123", "build_id": "ci-999"}
  },

  "scanner": {
    "engine": "stella",
    "scanner_image_digest": "sha256:...",
    "scanner_version": "2025.12.0",
    "config_digest": "sha256:...",
    "flags": ["--deep", "--vex"]
  },

  "feeds": {
    "vuln_feed_bundle_digest": "sha256:...",
    "license_db_digest": "sha256:..."
  },

  "policy": {
    "policy_bundle_digest": "sha256:...",
    "policy_set": "prod-default"
  },

  "environment": {
    "arch": "amd64",
    "os": "linux",
    "tz": "UTC",
    "locale": "C",
    "network": "disabled",
    "clock_mode": "frozen",
    "clock_value": "2025-12-12T10:15:30Z"
  },

  "normalization": {
    "canonicalizer_version": "1.2.0",
    "sbom_schema": "cyclonedx-1.6",
    "vex_schema": "cyclonedx-vex-1.0"
  }
}
```

## 8. MISMATCH CLASSIFICATION TAXONOMY

```
- Feed drift
- Policy drift
- Runtime drift
- Scanner drift
- Nondeterminism (ordering, concurrency, RNG, time-based logic)
- External IO
```
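
When a replay diverges, the manifest digests help narrow down the cause. In this hypothetical sketch, each pinned input that differs between the original manifest and the replay manifest is reported as drift. If every pinned input matches, the divergence is attributed to the engine itself. Telling External IO apart from other nondeterminism needs evidence the manifest does not carry, such as a record of network access. The sketch therefore reports both as `Nondeterminism`. It also assumes the caller invokes it only after the outputs were found to differ.

```csharp
using System;
using System.Collections.Generic;

public enum MismatchCause { FeedDrift, PolicyDrift, RuntimeDrift, ScannerDrift, Nondeterminism }

// Hypothetical: the manifest digests that pin each input class (see section 7).
public sealed record PinnedInputs(string FeedBundleDigest, string PolicyBundleDigest, string ScannerImageDigest, string EnvironmentDigest);

public static class MismatchClassifier
{
    public static IReadOnlyList<MismatchCause> Classify(PinnedInputs original, PinnedInputs replay)
    {
        var causes = new List<MismatchCause>();
        if (original.FeedBundleDigest != replay.FeedBundleDigest) causes.Add(MismatchCause.FeedDrift);
        if (original.PolicyBundleDigest != replay.PolicyBundleDigest) causes.Add(MismatchCause.PolicyDrift);
        if (original.ScannerImageDigest != replay.ScannerImageDigest) causes.Add(MismatchCause.ScannerDrift);
        if (original.EnvironmentDigest != replay.EnvironmentDigest) causes.Add(MismatchCause.RuntimeDrift);

        // All pinned inputs matched but outputs differ: ordering, concurrency, RNG,
        // time-based logic, or unpinned external IO inside the engine.
        if (causes.Count == 0) causes.Add(MismatchCause.Nondeterminism);
        return causes;
    }
}
```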

## 9. POSTGRESQL SCHEMA

```sql
CREATE TABLE scan_manifest (
  manifest_id UUID PRIMARY KEY,
  created_at TIMESTAMPTZ NOT NULL DEFAULT NOW(),

  artifact_digest TEXT NOT NULL,
  feeds_merkle_root TEXT NOT NULL,
  engine_build_hash TEXT NOT NULL,
  policy_lattice_hash TEXT NOT NULL,

  ruleset_hash TEXT NOT NULL,
  config_flags JSONB NOT NULL,

  environment_fingerprint JSONB NOT NULL,

  raw_manifest JSONB NOT NULL,
  raw_manifest_sha256 TEXT NOT NULL
);

CREATE TABLE scan_execution (
  execution_id UUID PRIMARY KEY,
  manifest_id UUID NOT NULL REFERENCES scan_manifest(manifest_id) ON DELETE CASCADE,

  started_at TIMESTAMPTZ NOT NULL,
  finished_at TIMESTAMPTZ NOT NULL,

  t_ingest_ms INT NOT NULL,
  t_analyze_ms INT NOT NULL,
  t_reachability_ms INT NOT NULL,
  t_vex_ms INT NOT NULL,
  t_sign_ms INT NOT NULL,
  t_publish_ms INT NOT NULL,

  proof_bundle_sha256 TEXT NOT NULL,
  findings_sha256 TEXT NOT NULL,
  vex_bundle_sha256 TEXT NOT NULL,

  replay_mode BOOLEAN NOT NULL DEFAULT FALSE
);

CREATE TABLE classification_history (
  id BIGSERIAL PRIMARY KEY,
  artifact_digest TEXT NOT NULL,
  manifest_id UUID NOT NULL REFERENCES scan_manifest(manifest_id) ON DELETE CASCADE,
  execution_id UUID NOT NULL REFERENCES scan_execution(execution_id) ON DELETE CASCADE,

  previous_status TEXT NOT NULL,
  new_status TEXT NOT NULL,
  cause TEXT NOT NULL,

  changed_at TIMESTAMPTZ NOT NULL DEFAULT NOW()
);

CREATE VIEW scan_tte AS
SELECT
  execution_id,
  manifest_id,
  (finished_at - started_at) AS tte_interval
FROM scan_execution;

-- Bucket by UTC day: date_trunc on a TIMESTAMPTZ otherwise truncates in the
-- session's TimeZone setting, so results would vary between sessions.
CREATE MATERIALIZED VIEW fn_drift_stats AS
SELECT
  date_trunc('day', changed_at AT TIME ZONE 'UTC') AS day_bucket,
  COUNT(*) FILTER (WHERE new_status = 'affected') AS affected_count,
  COUNT(*) AS total_reclassified,
  ROUND(
    (COUNT(*) FILTER (WHERE new_status = 'affected')::numeric /
     NULLIF(COUNT(*), 0)) * 100, 4
  ) AS drift_percent
FROM classification_history
GROUP BY 1;
```

## 10. C# CANONICAL DATA STRUCTURES

```csharp
public sealed record CanonicalScanManifest
{
    public required string ArtifactDigest { get; init; }
    public required string FeedsMerkleRoot { get; init; }
    public required string EngineBuildHash { get; init; }
    public required string PolicyLatticeHash { get; init; }
    public required string RulesetHash { get; init; }

    public required IReadOnlyDictionary<string, string> ConfigFlags { get; init; }
    public required EnvironmentFingerprint Environment { get; init; }
}

public sealed record EnvironmentFingerprint
{
    public required string CpuModel { get; init; }
    public required string RuntimeVersion { get; init; }
    public required string Os { get; init; }
    public required IReadOnlyDictionary<string, string> Extra { get; init; }
}

public sealed record ScanExecutionMetrics
{
    public required int IngestMs { get; init; }
    public required int AnalyzeMs { get; init; }
    public required int ReachabilityMs { get; init; }
    public required int VexMs { get; init; }
    public required int SignMs { get; init; }
    public required int PublishMs { get; init; }
}
```

## 11. CANONICALIZATION IMPLEMENTATION

```csharp
using System.IO;
using System.Text.Json;

internal static class CanonicalJson
{
    private static readonly JsonSerializerOptions Options = new()
    {
        WriteIndented = false,
        PropertyNamingPolicy = JsonNamingPolicy.CamelCase
    };

    public static string Serialize(object obj)
    {
        using var stream = new MemoryStream();
        using (var writer = new Utf8JsonWriter(stream, new JsonWriterOptions
        {
            Indented = false,
            SkipValidation = false
        }))
        {
            JsonSerializer.Serialize(writer, obj, obj.GetType(), Options);
        } // disposing the writer flushes it into the stream

        var bytes = stream.ToArray();

        // JsonCanonicalizer is not part of System.Text.Json; it stands in for an
        // RFC 8785 (JCS) implementation that returns the canonical JSON text.
        var canonical = JsonCanonicalizer.Canonicalize(bytes);

        return canonical;
    }
}
```

### 11.1 Full Canonical JSON with Sorted Keys

> **Added**: 2025-12-17 from "Building a Deeper Moat Beyond Reachability" advisory

```csharp
using System;
using System.IO;
using System.Linq;
using System.Security.Cryptography;
using System.Text;
using System.Text.Json;

public static class CanonJson
{
    private static readonly JsonSerializerOptions Options = new()
    {
        WriteIndented = false,
        PropertyNamingPolicy = JsonNamingPolicy.CamelCase
    };

    // Serializes obj, then rewrites it with object keys sorted ordinally (by UTF-16
    // code units, the order RFC 8785 uses). Arrays keep their order. Number formatting
    // and string escaping follow System.Text.Json defaults (for example, non-ASCII
    // characters are escaped as \u sequences), which can differ from RFC 8785 output.
    public static byte[] Canonicalize<T>(T obj)
    {
        var json = JsonSerializer.SerializeToUtf8Bytes(obj, Options);

        using var doc = JsonDocument.Parse(json);
        using var ms = new MemoryStream();
        using var writer = new Utf8JsonWriter(ms, new JsonWriterOptions { Indented = false });

        WriteElementSorted(doc.RootElement, writer);
        writer.Flush();
        return ms.ToArray();
    }

    private static void WriteElementSorted(JsonElement el, Utf8JsonWriter w)
    {
        switch (el.ValueKind)
        {
            case JsonValueKind.Object:
                w.WriteStartObject();
                foreach (var prop in el.EnumerateObject().OrderBy(p => p.Name, StringComparer.Ordinal))
                {
                    w.WritePropertyName(prop.Name);
                    WriteElementSorted(prop.Value, w);
                }
                w.WriteEndObject();
                break;

            case JsonValueKind.Array:
                w.WriteStartArray();
                foreach (var item in el.EnumerateArray())
                    WriteElementSorted(item, w);
                w.WriteEndArray();
                break;

            default:
                el.WriteTo(w);
                break;
        }
    }

    public static string Sha256Hex(ReadOnlySpan<byte> bytes)
        => Convert.ToHexString(SHA256.HashData(bytes)).ToLowerInvariant();
}
```

## 11.2 SCORE PROOF LEDGER

> **Added**: 2025-12-17 from "Building a Deeper Moat Beyond Reachability" advisory

The Score Proof Ledger provides an append-only trail of scoring decisions with per-node hashing.

### Proof Node Types

```csharp
public enum ProofNodeKind { Input, Transform, Delta, Score }

public sealed record ProofNode(
    string Id,
    ProofNodeKind Kind,
    string RuleId,
    string[] ParentIds,
    string[] EvidenceRefs,   // digests / refs inside bundle
    double Delta,            // 0 for non-Delta nodes
    double Total,            // running total at this node
    string Actor,            // module name
    DateTimeOffset TsUtc,
    byte[] Seed,
    string NodeHash          // sha256 over canonical node (excluding NodeHash)
);
```

### Proof Hashing

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ProofHashing
{
    public static ProofNode WithHash(ProofNode n)
    {
        // Hash every field except NodeHash itself; Seed is Base64-encoded for JSON.
        var canonical = CanonJson.Canonicalize(new
        {
            n.Id, n.Kind, n.RuleId, n.ParentIds, n.EvidenceRefs, n.Delta, n.Total,
            n.Actor, n.TsUtc, Seed = Convert.ToBase64String(n.Seed)
        });

        return n with { NodeHash = "sha256:" + CanonJson.Sha256Hex(canonical) };
    }

    public static string ComputeRootHash(IEnumerable<ProofNode> nodesInOrder)
    {
        // Deterministic: root hash over canonical JSON array of node hashes in order.
        var arr = nodesInOrder.Select(n => n.NodeHash).ToArray();
        var bytes = CanonJson.Canonicalize(arr);
        return "sha256:" + CanonJson.Sha256Hex(bytes);
    }
}
```

### Minimal Ledger

```csharp
public sealed class ProofLedger
{
    private readonly List<ProofNode> _nodes = new();
    public IReadOnlyList<ProofNode> Nodes => _nodes;

    public void Append(ProofNode node)
    {
        _nodes.Add(ProofHashing.WithHash(node));
    }

    public string RootHash() => ProofHashing.ComputeRootHash(_nodes);
}
```

### Score Replay Invariant

The score replay must produce identical ledger root hashes given:

- Same manifest (artifact, snapshots, policy)
- Same seed
- Same timestamp (or frozen clock)

```csharp
// ScoreInputs, ReachabilityClass and RiskScoring are defined elsewhere (not shown here).
public class DeterminismTests
{
    [Fact]
    public void Score_Replay_IsBitIdentical()
    {
        var seed = Enumerable.Repeat((byte)7, 32).ToArray();
        var inputs = new ScoreInputs(9.0, 0.50, false, ReachabilityClass.Unknown, new("enforced", "ro"));

        var (s1, l1) = RiskScoring.Score(inputs, "scanA", seed, DateTimeOffset.Parse("2025-01-01T00:00:00Z"));
        var (s2, l2) = RiskScoring.Score(inputs, "scanA", seed, DateTimeOffset.Parse("2025-01-01T00:00:00Z"));

        // Exact equality: a bit-identical replay must not need a precision tolerance.
        Assert.Equal(s1, s2);
        Assert.Equal(l1.RootHash(), l2.RootHash());

        // Zip stops at the shorter sequence, so compare lengths before comparing nodes.
        Assert.Equal(l1.Nodes.Count, l2.Nodes.Count);
        Assert.True(l1.Nodes.Zip(l2.Nodes).All(z => z.First.NodeHash == z.Second.NodeHash));
    }
}
```

## 12. REPLAY RUNNER

```csharp
using System;
using System.Text;

public static class ReplayRunner
{
    public static ReplayResult Replay(Guid manifestId, IScannerEngine engine)
    {
        var manifest = ManifestRepository.Load(manifestId);
        var canonical = CanonicalJson.Serialize(manifest.RawObject);
        var canonicalHash = CanonJson.Sha256Hex(Encoding.UTF8.GetBytes(canonical));

        if (!string.Equals(canonicalHash, manifest.RawManifestSHA256, StringComparison.Ordinal))
            throw new InvalidOperationException("Manifest integrity violation.");

        // Recorded output hashes live in scan_execution, not scan_manifest (section 9).
        // ExecutionRepository.LoadOriginal is a hypothetical accessor for the original (non-replay) run.
        var original = ExecutionRepository.LoadOriginal(manifestId);

        using var feeds = FeedSnapshotResolver.Open(manifest.FeedsMerkleRoot);

        var exec = engine.Scan(new ScanRequest
        {
            ArtifactDigest = manifest.ArtifactDigest,
            Feeds = feeds,
            LatticeHash = manifest.PolicyLatticeHash,
            EngineBuildHash = manifest.EngineBuildHash,
            CanonicalManifest = canonical
        });

        return new ReplayResult(
            exec.FindingsHash == original.FindingsSHA256,
            exec.VexBundleHash == original.VexBundleSHA256,
            exec.ProofBundleHash == original.ProofBundleSHA256,
            exec
        );
    }
}
```

## 13. BENCHMARK METRICS

### 13.1 Time-to-Evidence (TTE)

**Definition:**

```
TTE = t(proof_ready) - t(artifact_ingested)
```

**Targets:**

- P50 < 2 min for typical containers (≤ 500 MB)
- P95 < 5 min, including cold-start/offline-bundle mode

**Stage Breakdown:**

- `t_ingest_ms`
- `t_analyze_ms`
- `t_reachability_ms`
- `t_vex_ms`
- `t_sign_ms`
- `t_publish_ms`
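
Checking the TTE targets requires a definition of percentile. This sketch uses the nearest-rank method over millisecond samples, for example `tte_interval` from the `scan_tte` view converted to milliseconds. The class name is hypothetical. For simplicity, `MeetsTargets` applies both thresholds to one sample set. In practice, the caller is assumed to pass samples matching each target's scope: ≤ 500 MB containers for P50, and cold-start and offline-bundle runs included for P95.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TtePercentiles
{
    // Nearest-rank percentile (p in 1..100) over TTE samples in milliseconds.
    public static long NearestRank(IReadOnlyCollection<long> samplesMs, int p)
    {
        if (samplesMs.Count == 0) throw new ArgumentException("No samples.", nameof(samplesMs));
        if (p < 1 || p > 100) throw new ArgumentOutOfRangeException(nameof(p));

        var sorted = samplesMs.OrderBy(x => x).ToArray();
        // rank = ceil(p / 100 * n), computed with integers only.
        int rank = (int)((p * (long)sorted.Length + 99) / 100);
        return sorted[rank - 1];
    }

    // P50 < 2 min and P95 < 5 min.
    public static bool MeetsTargets(IReadOnlyCollection<long> samplesMs) =>
        NearestRank(samplesMs, 50) < 2 * 60_000 && NearestRank(samplesMs, 95) < 5 * 60_000;
}
```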

### 13.2 False-Negative Drift Rate (FN-DRIFT)

**Definition (rolling 30d window):**

```
FN-Drift = (# artifacts re-classified from {unaffected/unknown} → affected) / (total artifacts re-evaluated)
```

**Stratification:**

- feed delta
- rule delta
- lattice/policy delta
- reachability delta

**Targets:**

- Engine-caused FN-Drift ≈ 0
- Feed-caused FN-Drift: faster is better
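
Below is a sketch of FN-Drift over the rolling 30-day window, in which each artifact is counted at most once. `Reclassification` mirrors `classification_history` (section 9) and is hypothetical. The status strings `unaffected`, `unknown` and `affected` are also assumptions. The denominator comes from the caller, because re-evaluations that produce no status change do not appear in that table.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical row shape mirroring classification_history.
public sealed record Reclassification(string ArtifactDigest, string PreviousStatus, string NewStatus, DateTimeOffset ChangedAt);

public static class FnDrift
{
    // FN-Drift in basis points over the 30 days ending at asOf.
    // reEvaluatedArtifacts: distinct artifacts re-evaluated in the same window.
    public static int ComputeBps(IEnumerable<Reclassification> rows, int reEvaluatedArtifacts, DateTimeOffset asOf)
    {
        if (reEvaluatedArtifacts <= 0) return 0; // nothing re-evaluated: report zero drift
        var windowStart = asOf.AddDays(-30);

        int drifted = rows
            .Where(r => r.ChangedAt > windowStart && r.ChangedAt <= asOf)
            .Where(r => (r.PreviousStatus == "unaffected" || r.PreviousStatus == "unknown")
                        && r.NewStatus == "affected")
            .Select(r => r.ArtifactDigest)
            .Distinct(StringComparer.Ordinal)
            .Count();

        return (int)(drifted * 10_000L / reEvaluatedArtifacts);
    }
}
```

Stratifying by feed, rule, lattice/policy or reachability delta amounts to filtering the rows on the recorded `cause` before counting.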

### 13.3 Deterministic Reproducibility

**Proof Object:**

```json
{
  "artifact_digest": "sha256:...",
  "scan_manifest_hash": "sha256:...",
  "feeds_merkle_root": "sha256:...",
  "engine_build_hash": "sha256:...",
  "policy_lattice_hash": "sha256:...",
  "findings_sha256": "sha256:...",
  "vex_bundle_sha256": "sha256:...",
  "proof_bundle_sha256": "sha256:..."
}
```

**Metric:**

```
Repro rate = identical_outputs / total_replays
Target: 100%
```

### 13.4 Detection Metrics

```
true_positive_count (TP)
false_positive_count (FP)
false_negative_count (FN)

precision = TP / (TP + FP)
recall = TP / (TP + FN)

fp_reduction = (baseline_fp_rate - stella_fp_rate) / baseline_fp_rate
```
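
These formulas divide by zero on an empty corpus, so a sketch that returns no value in that case may be useful. Floating point is used here on the assumption that these are benchmark statistics rather than scoring math, which section 5.1 keeps integer-only. The method names are hypothetical.

```csharp
using System;

public static class DetectionMetrics
{
    // Each metric returns null instead of dividing by zero.
    public static double? Precision(int tp, int fp) =>
        tp + fp == 0 ? (double?)null : (double)tp / (tp + fp);

    public static double? Recall(int tp, int fn) =>
        tp + fn == 0 ? (double?)null : (double)tp / (tp + fn);

    // Relative FP reduction versus a baseline scanner on the same corpus.
    public static double? FpReduction(double baselineFpRate, double stellaFpRate) =>
        baselineFpRate == 0 ? (double?)null : (baselineFpRate - stellaFpRate) / baselineFpRate;
}
```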

### 13.5 Proof Coverage

```
proof_coverage_all = findings_with_valid_receipts / total_findings

proof_coverage_vex = vex_items_with_valid_receipts / total_vex_items

proof_coverage_reachable = reachable_findings_with_proofs / total_reachable_findings
```

## 14. SLO THRESHOLDS

**Fidelity:**

- BF ≥ 0.98 (general)
- BF ≥ 0.95 (regulated projects)
- PF ≈ 1.0 (unless policy changed intentionally)

**Alerts:**

- BF drops ≥2% week-over-week → warn
- BF < 0.90 overall → page/block release
- Regulated BF < 0.95 → page/block release

## 15. DETERMINISTIC PACKAGING (BUNDLES)

Determinism applies to *packaging*, not only algorithms.

Rules for proof bundles and offline kits:

- Prefer `tar` with deterministic ordering; avoid formats that inject timestamps by default.
- Canonical file order: lexicographic path sort; include an `index.json` listing files and their digests in the same order.
- Normalize file metadata: fixed uid/gid, fixed mtime, stable permissions; record the chosen policy in the manifest.
- Compression must be reproducible (fixed level/settings; no embedded timestamps).
- Bundle hash is computed over the canonical archive bytes and must be DSSE-signed.
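
The tar rules can be sketched with `System.Formats.Tar` (.NET 7 and later). Entries are written in ordinal path order after an `index.json` that lists each path with its SHA-256. Every header carries the same mtime, uid/gid and mode. The ustar format is used, and its headers carry no access or change times. The fixed metadata values and placing `index.json` first are illustrative choices. Compression and DSSE signing of the bundle hash are not shown.

```csharp
using System;
using System.Collections.Generic;
using System.Formats.Tar;
using System.IO;
using System.Linq;
using System.Security.Cryptography;
using System.Text.Json;

public static class DeterministicBundle
{
    // Illustrative fixed metadata policy; record the chosen values in the manifest.
    private static readonly DateTimeOffset FixedMtime = DateTimeOffset.UnixEpoch;
    private const UnixFileMode FixedMode =
        UnixFileMode.UserRead | UnixFileMode.UserWrite | UnixFileMode.GroupRead | UnixFileMode.OtherRead; // 0644

    // Builds an uncompressed ustar archive: index.json first, then files in ordinal path order.
    public static byte[] Build(IReadOnlyDictionary<string, byte[]> files)
    {
        if (files.ContainsKey("index.json"))
            throw new ArgumentException("index.json is reserved for the generated index.", nameof(files));

        var paths = files.Keys.OrderBy(p => p, StringComparer.Ordinal).ToList();

        // index.json lists every file and its digest, in archive order.
        var index = JsonSerializer.SerializeToUtf8Bytes(
            paths.Select(p => new { path = p, sha256 = Sha256Hex(files[p]) }).ToArray());

        using var output = new MemoryStream();
        using (var writer = new TarWriter(output, TarEntryFormat.Ustar, leaveOpen: true))
        {
            WriteEntry(writer, "index.json", index);
            foreach (var path in paths)
                WriteEntry(writer, path, files[path]);
        } // disposing the writer appends the end-of-archive marker

        return output.ToArray();
    }

    private static void WriteEntry(TarWriter writer, string name, byte[] content)
    {
        var entry = new UstarTarEntry(TarEntryType.RegularFile, name)
        {
            ModificationTime = FixedMtime,
            Uid = 0,
            Gid = 0,
            Mode = FixedMode,
            DataStream = new MemoryStream(content, writable: false),
        };
        writer.WriteEntry(entry);
    }

    public static string Sha256Hex(byte[] data) =>
        Convert.ToHexString(SHA256.HashData(data)).ToLowerInvariant();
}
```

Building the same file set twice, in any insertion order, should yield byte-identical archives and therefore the same bundle hash to sign.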

## 16. BENCHMARK HARNESS (MOAT METRICS)

Use the repo benchmark harness as the single place where moat metrics are measured and enforced:

- Harness root: `bench/README.md` (layout, verifiers, comparison tools).
- Evidence contracts: `docs/benchmarks/vex-evidence-playbook.md` and `docs/replay/DETERMINISTIC_REPLAY.md`.

Developer rules:

- No feature touching scans/policy/proofs ships without at least one benchmark scenario or an extension of an existing one.
- If golden outputs change intentionally, record a short “why” note (which metric improved, which contract changed) and keep artifacts deterministic.
- Bench runs must record and validate `graphRevisionId` and per-verdict receipts (see `docs/product-advisories/14-Dec-2025 - Proof and Evidence Chain Technical Reference.md`).

---

**Document Version**: 1.0

**Target Platform**: .NET 10, PostgreSQL ≥16, Angular v17

@@ -0,0 +1,474 @@

# Developer Onboarding Technical Reference

**Source Advisories**:
- 01-Dec-2025 - Common Developers guides
- 29-Nov-2025 - StellaOps – Mid-Level .NET Onboarding (Quick Start)
- 30-Nov-2025 - Implementor Guidelines for Stella Ops
- 30-Nov-2025 - Standup Sprint Kickstarters

**Last Updated**: 2025-12-14

**Revision**: 1.1 (Corrected to match actual implementation)

---

## 0. WHERE TO START (IN-REPO)

- `docs/README.md` (doc map and module dossiers)
- `docs/07_HIGH_LEVEL_ARCHITECTURE.md` (end-to-end system model)
- `docs/18_CODING_STANDARDS.md` (C# conventions, repo rules, gates)
- `docs/19_TEST_SUITE_OVERVIEW.md` (test layers, CI expectations)
- `docs/technical/development/README.md` (developer tooling and workflows)
- `docs/10_PLUGIN_SDK_GUIDE.md` (plugin SDK + packaging)
- `LICENSE` (AGPL-3.0-or-later obligations)

## 1. CORE ENGINEERING PRINCIPLES