feat: add entropy policy banner and policy gate indicator components

Some checks failed

Docs CI / lint-and-preview (push) Has been cancelled

- Implemented EntropyPolicyBannerComponent with configuration for entropy policies, including thresholds, current scores, and mitigation steps.
- Created PolicyGateIndicatorComponent to display the status of policy gates, including passed, failed, and warning gates, with detailed views for determinism and entropy gates.
- Added HTML and SCSS for both components to ensure proper styling and layout.
- Introduced computed properties and signals for reactive state management in Angular.
- Included remediation hints and actions for user interaction within the policy gate indicator.

@@ -0,0 +1,684 @@

|

||||

Here’s a practical, first‑time‑friendly guide to using VEX in Stella Ops, plus a concrete .NET pattern you can drop in today.

|

||||

|

||||

---

|

||||

|

||||

# VEX in a nutshell

|

||||

|

||||

* **VEX (Vulnerability Exploitability eXchange)**: a small JSON document that says whether specific CVEs *actually* affect a product/version.

|

||||

* **OpenVEX**: SBOM‑agnostic; references products/components directly (URIs, PURLs, hashes). Great for canonical internal models.

|

||||

* **CycloneDX VEX / SPDX VEX**: tie VEX statements closely to a specific SBOM instance (component BOM ref IDs). Great when the BOM is your source of truth.

|

||||

|

||||

**Our strategy:**

|

||||

|

||||

* **Store VEX separately** from SBOMs (deterministic, easier air‑gap bundling).

|

||||

* **Link by strong references** (PURLs + content hashes + optional SBOM component IDs).

|

||||

* **Translate on ingest** between OpenVEX ↔ CycloneDX VEX as needed so downstream tools stay happy.

|

||||

|

||||

---

|

||||

|

||||

# Translation model (OpenVEX ↔ CycloneDX VEX)

|

||||

|

||||

1. **Identity mapping**

|

||||

|

||||

* Prefer **PURL** for packages; fallback to **SHA256 (or SHA512)** of artifact; optionally include **SBOM `bom-ref`** if known.

|

||||

2. **Product scope**

|

||||

|

||||

* OpenVEX “product” → CycloneDX `affects` with `bom-ref` (if available) or a synthetic ref derived from PURL/hash.

|

||||

3. **Status mapping**

|

||||

|

||||

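* `affected | not_affected | under_investigation | fixed` map 1:1 onto the internal model. When emitting CycloneDX, translate them to its `analysis.state` vocabulary (`exploitable`, `not_affected`, `in_triage`, `resolved`).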

|

||||

* Keep **timestamps**, **justification**, **impact statement**, and **origin**.

|

||||

4. **Evidence**

|

||||

|

||||

* Preserve links to advisories, commits, tests; attach as CycloneDX `analysis/evidence` notes (or OpenVEX `metadata/notes`).
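
The status mapping in step 3 can be sketched as a small lookup. This is a minimal illustration, not the project's implementation: the helper name `ToCycloneDxState` is hypothetical, and the CycloneDX state names are assumed from the CycloneDX `analysis.state` vocabulary, so check them against the spec version you target.

```csharp
using System;
using System.Collections.Generic;

// OpenVEX status -> CycloneDX analysis.state (state names are an assumption, see above).
var openVexToCycloneDx = new Dictionary<string, string>(StringComparer.Ordinal)
{
    ["affected"] = "exploitable",
    ["not_affected"] = "not_affected",
    ["under_investigation"] = "in_triage",
    ["fixed"] = "resolved",
};

// Translate, failing loudly on anything outside the four known statuses.
string ToCycloneDxState(string openVexStatus) =>
    openVexToCycloneDx.TryGetValue(openVexStatus, out var state)
        ? state
        : throw new ArgumentException($"Unknown OpenVEX status: {openVexStatus}");

Console.WriteLine(ToCycloneDxState("under_investigation")); // in_triage
```

The reverse direction is lossier, because CycloneDX defines additional states (for example `false_positive`) that have no dedicated OpenVEX status; a translator has to decide how to collapse them.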

|

||||

|

||||

**Collision rules (deterministic):**

|

||||

|

||||

* New statement wins if:

|

||||

|

||||

* Newer `timestamp` **and**

|

||||

* Higher **provenance trust** (signed by vendor/Authority) or equal with a lexicographic tiebreak (issuer keyID).

|

||||

|

||||

---

|

||||

|

||||

# Storage model (MongoDB‑friendly)

|

||||

|

||||

* **Collections**

|

||||

|

||||

* `vex.documents` – one doc per VEX file (OpenVEX or CycloneDX VEX).

|

||||

* `vex.statements` – *flattened*, one per (product/component, vuln).

|

||||

* `artifacts` – canonical component index (PURL, hashes, optional SBOM refs).

|

||||

* **Reference keys**

|

||||

|

||||

* `artifactKey = purl || sha256 || (id:version for .NET/NuGet)`

|

||||

* `vulnKey = cveId || ghsaId || internalId`

|

||||

* **Deterministic IDs**

|

||||

|

||||

* `_id = sha256(canonicalize(statement-json-without-signature))`

|

||||

* **Signatures**

|

||||

|

||||

* Keep DSSE/Sigstore envelopes in `vex.documents.signatures[]` for audit & replay.
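
The `||` fallbacks in the reference keys above amount to "first usable identifier wins". A minimal sketch, assuming empty or whitespace-only values count as missing and that hashes are stored in lowercase hex; the helper names `FirstNonEmpty`, `ArtifactKey`, and `VulnKey` are hypothetical.

```csharp
using System;

// First non-empty candidate wins; mirrors `purl || sha256 || ...` above.
string FirstNonEmpty(params string?[] candidates)
{
    foreach (var c in candidates)
        if (!string.IsNullOrWhiteSpace(c)) return c;
    throw new ArgumentException("No usable identifier was supplied.");
}

// Lowercasing the hash is an assumption so that the same digest always yields the same key.
string ArtifactKey(string? purl, string? sha256, string? coordinates) =>
    FirstNonEmpty(purl, sha256?.ToLowerInvariant(), coordinates);

string VulnKey(string? cveId, string? ghsaId, string? internalId) =>
    FirstNonEmpty(cveId, ghsaId, internalId);

Console.WriteLine(ArtifactKey(null, "ABCD", null)); // abcd
```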

|

||||

|

||||

---

|

||||

|

||||

# Air‑gap bundling

|

||||

|

||||

Package **SBOMs + VEX + artifacts index + trust roots** as a single tarball:

|

||||

|

||||

```
/bundle/
  sboms/*.json
  vex/*.json                  # OpenVEX & CycloneDX VEX allowed
  index/artifacts.jsonl       # purl, hashes, bom-ref map
  trust/rekor.merkle.roots
  trust/fulcio.certs.pem
  trust/keys/*.pub
  manifest.json               # content list + sha256 + issuedAt
```

|

||||

|

||||

* **Deterministic replay:** re‑ingest is pure function of bundle bytes → identical DB state.

|

||||

|

||||

---

|

||||

|

||||

# .NET 10 implementation (C#) – deterministic ingestion

|

||||

|

||||

### Core models

|

||||

|

||||

```csharp
public record ArtifactRef(
    string? Purl,
    string? Sha256,
    string? BomRef);

public enum VexStatus { Affected, NotAffected, UnderInvestigation, Fixed }

public record VexStatement(
    string StatementId,        // sha256 of canonical payload
    ArtifactRef Artifact,
    string VulnId,             // e.g., "CVE-2024-1234"
    VexStatus Status,
    string? Justification,
    string? ImpactStatement,
    DateTimeOffset Timestamp,
    string IssuerKeyId,        // from DSSE/Signing
    int ProvenanceScore);      // Authority policy
```

|

||||

|

||||

### Canonicalizer (stable order, no env fields)

|

||||

|

||||

```csharp
using System.Text; // NormalizationForm

static string Canonicalize(VexStatement s)
{
    // Normalize string values *before* serializing: the default encoder escapes
    // non-ASCII characters and newlines, so normalizing the finished JSON is a no-op.
    static string? N(string? v) =>
        v?.Normalize(NormalizationForm.FormKC).Replace("\r\n", "\n");

    // Property order follows declaration order here (in practice, with System.Text.Json).
    var payload = new {
        artifact = new { Purl = N(s.Artifact.Purl), Sha256 = N(s.Artifact.Sha256), BomRef = N(s.Artifact.BomRef) },
        vulnId = N(s.VulnId),
        status = s.Status.ToString(),
        justification = N(s.Justification),
        impact = N(s.ImpactStatement),
        timestamp = s.Timestamp.UtcDateTime
    };
    var opts = new System.Text.Json.JsonSerializerOptions {
        WriteIndented = false
    };
    return System.Text.Json.JsonSerializer.Serialize(payload, opts);
}

static string Sha256(string s)
{
    using var sha = System.Security.Cryptography.SHA256.Create();
    var bytes = sha.ComputeHash(System.Text.Encoding.UTF8.GetBytes(s));
    return Convert.ToHexString(bytes).ToLowerInvariant();
}
```

|

||||

|

||||

### Ingest pipeline

|

||||

|

||||

```csharp
public sealed class VexIngestor
{
    readonly IVexParser _parser;          // OpenVEX & CycloneDX adapters
    readonly IArtifactIndex _artifacts;
    readonly IVexRepo _repo;              // Mongo-backed
    readonly IPolicy _policy;             // tie-break rules

    public async Task IngestAsync(Stream vexJson, SignatureEnvelope? sig)
    {
        var doc = await _parser.ParseAsync(vexJson); // yields normalized statements
        var issuer = sig?.KeyId ?? "unknown";
        foreach (var st in doc.Statements)
        {
            var canon = Canonicalize(st);
            var id = Sha256(canon);
            var withMeta = st with {
                StatementId = id,
                IssuerKeyId = issuer,
                ProvenanceScore = _policy.Score(sig, st)
            };

            // Upsert artifact (purl/hash/bomRef)
            await _artifacts.UpsertAsync(withMeta.Artifact);

            // Deterministic merge
            var existing = await _repo.GetAsync(id)
                ?? await _repo.FindByKeysAsync(withMeta.Artifact, st.VulnId);
            if (existing is null || _policy.IsNewerAndStronger(existing, withMeta))
                await _repo.UpsertAsync(withMeta);
        }

        if (sig is not null) await _repo.AttachSignatureAsync(doc.DocumentId, sig);
    }
}
```

|

||||

|

||||

### Parsers (adapters)

|

||||

|

||||

* `OpenVexParser` – reads OpenVEX; emits `VexStatement` with `ArtifactRef(PURL/hash)`

|

||||

* `CycloneDxVexParser` – resolves `bom-ref` → look up PURL/hash via `IArtifactIndex` (if SBOM present); if not, store bom‑ref and mark artifact unresolved for later backfill.

|

||||

|

||||

---

|

||||

|

||||

# Why this works for Stella Ops

|

||||

|

||||

* **SBOM‑agnostic core** (OpenVEX‑first) maps cleanly to your MongoDB canonical stores and `.NET 10` services.

|

||||

* **SBOM‑aware edges** (CycloneDX VEX) are still supported via adapters and `bom-ref` backfill.

|

||||

* **Deterministic everything**: canonical JSON → SHA256 IDs → reproducible merges → perfect for audits and offline environments.

|

||||

* **Air‑gap ready**: single bundle with trust roots, replayable on any node.

|

||||

|

||||

---

|

||||

|

||||

# Next steps (plug‑and‑play)

|

||||

|

||||

1. Implement the two parsers (`OpenVexParser`, `CycloneDxVexParser`).

|

||||

2. Add the repo/index interfaces to your `StellaOps.Vexer` service:

|

||||

|

||||

* `IVexRepo` (Mongo collections `vex.documents`, `vex.statements`)

|

||||

* `IArtifactIndex` (your canonical PURL/hash map)

|

||||

3. Wire `Policy` to Authority to score signatures and apply tie‑breaks.

|

||||

4. Add a `bundle ingest` CLI: `vexer ingest /bundle/manifest.json`.

|

||||

5. Expose GraphQL (HotChocolate) queries:

|

||||

|

||||

* `vexStatements(artifactKey, vulnId)`, `vexStatus(artifactKey)`, `evidence(...)`.

|

||||

|

||||

If you want, I can generate the exact Mongo schemas, HotChocolate types, and a minimal test bundle to validate the ingest end‑to‑end.

|

||||

Below is a complete, developer-ready implementation plan for the **VEX ingestion, translation, canonicalization, storage, and merge-policy pipeline** inside **Stella Ops.Vexer**, aligned with your architecture, deterministic requirements, MongoDB model, DSSE/Authority workflow, and `.NET 10` standards.

|

||||

|

||||

This is structured so an average developer can follow it step-by-step without ambiguity.

|

||||

It is broken into phases, each with clear tasks, acceptance criteria, failure modes, interfaces, and code pointers.

|

||||

|

||||

---

|

||||

|

||||

# Stella Ops.Vexer

|

||||

|

||||

## Full Implementation Plan (Developer-Executable)

|

||||

|

||||

---

|

||||

|

||||

# 1. Core Objectives

|

||||

|

||||

Develop a deterministic, replayable, SBOM-agnostic but SBOM-compatible VEX subsystem supporting:

|

||||

|

||||

* OpenVEX and CycloneDX VEX ingestion.

|

||||

* Canonicalization → SHA-256 identity.

|

||||

* Cross-linking to artifacts (purl, hash, bom-ref).

|

||||

* Merge policies driven by Authority trust/lattice rules.

|

||||

* Complete offline reproducibility.

|

||||

* MongoDB canonical storage.

|

||||

* Exposed through gRPC/REST/GraphQL.

|

||||

|

||||

---

|

||||

|

||||

# 2. Module Structure (to be implemented)

|

||||

|

||||

```
src/StellaOps.Vexer/
  Application/
    Commands/
    Queries/
    Ingest/
    Translation/
    Merge/
    Policies/
  Domain/
    Entities/
    ValueObjects/
    Services/
  Infrastructure/
    Mongo/
    AuthorityClient/
    Hashing/
    Signature/
    BlobStore/
  Presentation/
    GraphQL/
    REST/
    gRPC/
```

|

||||

|

||||

Every subfolder must compile in strict mode (treat warnings as errors).

|

||||

|

||||

---

|

||||

|

||||

# 3. Data Model (MongoDB)

|

||||

|

||||

## 3.1 `vex.statements` collection

|

||||

|

||||

Document schema:

|

||||

|

||||

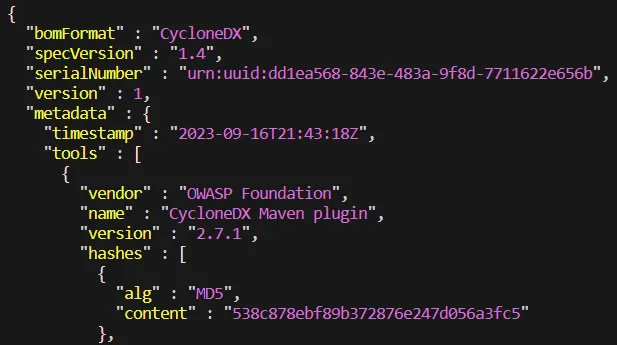

```json

|

||||

{

|

||||

"_id": "sha256(canonical-json)",

|

||||

"artifact": {

|

||||

"purl": "pkg:nuget/... or null",

|

||||

"sha256": "hex or null",

|

||||

"bomRef": "optional ref",

|

||||

"resolved": true | false

|

||||

},

|

||||

"vulnId": "CVE-XXXX-YYYY",

|

||||

"status": "affected | not_affected | under_investigation | fixed",

|

||||

"justification": "...",

|

||||

"impact": "...",

|

||||

"timestamp": "2024-01-01T12:34:56Z",

|

||||

"issuerKeyId": "FULCIO-KEY-ID",

|

||||

"provenanceScore": 0–100,

|

||||

"documentId": "UUID of vex.documents entry",

|

||||

"sourceFormat": "openvex|cyclonedx",

|

||||

"createdAt": "...",

|

||||

"updatedAt": "..."

|

||||

}

|

||||

```

|

||||

|

||||

## 3.2 `vex.documents` collection

|

||||

|

||||

```
{
  "_id": "<uuid>",
  "format": "openvex|cyclonedx",
  "rawBlobId": "<blob-id in blobstore>",
  "signatures": [
    {
      "type": "dsse",
      "verified": true,
      "issuerKeyId": "F-123...",
      "timestamp": "...",
      "bundleEvidence": {...}
    }
  ],
  "ingestedAt": "...",
  "statementIds": ["sha256-1", "sha256-2", ...]
}
```

|

||||

|

||||

---

|

||||

|

||||

# 4. Components to Implement

|

||||

|

||||

## 4.1 Parsing Layer

|

||||

|

||||

### Interfaces

|

||||

|

||||

```csharp
public interface IVexParser
{
    ValueTask<ParsedVexDocument> ParseAsync(Stream jsonStream);
}

public sealed record ParsedVexDocument(
    string DocumentId,
    string Format,
    IReadOnlyList<ParsedVexStatement> Statements);
```

|

||||

|

||||

### Tasks

|

||||

|

||||

1. Implement `OpenVexParser`.

|

||||

|

||||

* Use System.Text.Json source generators.

|

||||

* Validate OpenVEX schema version.

|

||||

* Extract product → component mapping.

|

||||

* Map to internal `ArtifactRef`.

|

||||

|

||||

2. Implement `CycloneDxVexParser`.

|

||||

|

||||

* Support 1.5+ “vex” extension.

|

||||

* bom-ref resolution through `IArtifactIndex`.

|

||||

* Mark unresolved `bom-ref` but store them.

|

||||

|

||||

### Acceptance Criteria

|

||||

|

||||

* Both parsers produce identical internal representation of statements.

|

||||

* Unknown fields must not corrupt canonicalization.

|

||||

* 100% deterministic mapping for same input.

|

||||

|

||||

---

|

||||

|

||||

## 4.2 Canonicalizer

|

||||

|

||||

Implement deterministic property ordering, Unicode normalization with UTF-8 output, and stable JSON.

|

||||

|

||||

### Tasks

|

||||

|

||||

1. Create `Canonicalizer` class.

|

||||

2. Apply:

|

||||

|

||||

* Property order: artifact, vulnId, status, justification, impact, timestamp.

|

||||

* Remove optional metadata (issuerKeyId, provenance).

|

||||

* Normalize Unicode → NFKC.

|

||||

* Replace CRLF → LF.

|

||||

3. Generate SHA-256.

|

||||

|

||||

### Interface

|

||||

|

||||

```csharp
public interface IVexCanonicalizer
{
    string Canonicalize(VexStatement s);
    string ComputeId(string canonicalJson);
}
```

|

||||

|

||||

### Acceptance Criteria

|

||||

|

||||

* Hash is identical regardless of OS, wall-clock time, locale, or machine.

|

||||

* Replaying the same bundle yields same `_id`.

|

||||

|

||||

---

|

||||

|

||||

## 4.3 Authority / Signature Verification

|

||||

|

||||

### Tasks

|

||||

|

||||

1. Implement DSSE envelope reader.

|

||||

2. Integrate Authority client:

|

||||

|

||||

* Verify certificate chain (Fulcio/GOST/eIDAS etc).

|

||||

* Obtain trust lattice score.

|

||||

* Produce `ProvenanceScore`: int.

|

||||

|

||||

### Interface

|

||||

|

||||

```csharp
public interface ISignatureVerifier
{
    ValueTask<SignatureVerificationResult> VerifyAsync(Stream payload, Stream envelope);
}
```

|

||||

|

||||

### Acceptance Criteria

|

||||

|

||||

* If verification fails → Vexer stores document but flags signature invalid.

|

||||

* Scores map to priority in merge policy.

|

||||

|

||||

---

|

||||

|

||||

## 4.4 Merge Policies

|

||||

|

||||

### Implement Default Policy

|

||||

|

||||

1. Newer timestamp wins.

|

||||

2. If timestamps equal:

|

||||

|

||||

* Higher provenance score wins.

|

||||

* If both equal, lexicographically smaller issuerKeyId wins.

|

||||

|

||||

### Interface

|

||||

|

||||

```csharp
public interface IVexMergePolicy
{
    bool ShouldReplace(VexStatement existing, VexStatement incoming);
}
```
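
The default policy above (timestamp, then provenance score, then issuer key ID) can be sketched as a single comparison. This is an illustrative sketch rather than the project's implementation: it uses plain tuples instead of `VexStatement` so that it runs on its own, and it assumes ordinal string comparison for the final tie-break.

```csharp
using System;

// Returns true when `incoming` should replace `existing` under the default policy.
bool ShouldReplace(
    (DateTimeOffset Timestamp, int ProvenanceScore, string IssuerKeyId) existing,
    (DateTimeOffset Timestamp, int ProvenanceScore, string IssuerKeyId) incoming)
{
    // 1. Newer timestamp wins (DateTimeOffset compares the underlying UTC instant).
    int byTime = incoming.Timestamp.CompareTo(existing.Timestamp);
    if (byTime != 0) return byTime > 0;

    // 2a. Same instant: higher provenance score wins.
    if (incoming.ProvenanceScore != existing.ProvenanceScore)
        return incoming.ProvenanceScore > existing.ProvenanceScore;

    // 2b. Still tied: lexicographically smaller issuer key ID wins (ordinal, culture-free).
    return string.CompareOrdinal(incoming.IssuerKeyId, existing.IssuerKeyId) < 0;
}
```

Because every branch is a total ordering on plain values, two nodes that see the same pair of statements reach the same decision, and a statement never replaces an identical copy of itself.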

|

||||

|

||||

### Acceptance Criteria

|

||||

|

||||

* Merge decisions reproducible.

|

||||

* Deterministic ordering even when values equal.

|

||||

|

||||

---

|

||||

|

||||

## 4.5 Ingestion Pipeline

|

||||

|

||||

### Steps

|

||||

|

||||

1. Accept `multipart/form-data` or referenced blob ID.

|

||||

2. Parse via correct parser.

|

||||

3. Verify signature (optional).

|

||||

4. For each statement:

|

||||

|

||||

* Canonicalize.

|

||||

* Compute `_id`.

|

||||

* Upsert artifact into `artifacts` (via `IArtifactIndex`).

|

||||

* Resolve bom-ref (if CycloneDX).

|

||||

* Existing statement? Apply merge policy.

|

||||

* Insert or update.

|

||||

5. Create `vex.documents` entry.

|

||||

|

||||

### Class

|

||||

|

||||

`VexIngestService`

|

||||

|

||||

### Required Methods

|

||||

|

||||

```csharp
public Task<IngestResult> IngestAsync(VexIngestRequest request);
```

|

||||

|

||||

### Acceptance Tests

|

||||

|

||||

* Idempotent: ingesting the same VEX document repeatedly leaves the DB unchanged.

|

||||

* Deterministic under concurrency.

|

||||

* Air-gap replay produces identical DB state.

|

||||

|

||||

---

|

||||

|

||||

## 4.6 Translation Layer

|

||||

|

||||

### Implement two converters:

|

||||

|

||||

* `OpenVexToCycloneDxTranslator`

|

||||

* `CycloneDxToOpenVexTranslator`

|

||||

|

||||

### Rules

|

||||

|

||||

* Prefer PURL → hash → synthetic bom-ref.

|

||||

* Single VEX statement → one CycloneDX “analysis” entry.

|

||||

* Preserve justification, impact, notes.

|

||||

|

||||

### Acceptance Criteria

|

||||

|

||||

* Round-trip OpenVEX → CycloneDX → OpenVEX produces equal canonical hashes (except format markers).

|

||||

|

||||

---

|

||||

|

||||

## 4.7 Artifact Index Backfill

|

||||

|

||||

### Reason

|

||||

|

||||

CycloneDX VEX may refer to bom-refs not yet known at ingestion.

|

||||

|

||||

### Tasks

|

||||

|

||||

1. Store unresolved artifacts.

|

||||

2. Create background `BackfillWorker`:

|

||||

|

||||

* Watches `sboms.documents` ingestion events.

|

||||

* Matches bom-refs.

|

||||

* Updates statements with resolved PURL/hashes.

|

||||

* Recomputes canonical JSON + SHA-256 (new version stored as new ID).

|

||||

3. Marks old unresolved statement as superseded.

|

||||

|

||||

### Acceptance Criteria

|

||||

|

||||

* Backfilling is monotonic: no overwriting original.

|

||||

* Deterministic after backfill: same SBOM yields same final ID.

|

||||

|

||||

---

|

||||

|

||||

## 4.8 Bundle Ingestion (Air-Gap Mode)

|

||||

|

||||

### Structure

|

||||

|

||||

```
bundle/
  sboms/*.json
  vex/*.json
  index/artifacts.jsonl
  trust/*
  manifest.json
```

|

||||

|

||||

### Tasks

|

||||

|

||||

1. Implement `BundleIngestService`.

|

||||

2. Stages:

|

||||

|

||||

* Validate manifest + hashes.

|

||||

* Import trust roots (local only).

|

||||

* Ingest SBOMs first.

|

||||

* Ingest VEX documents.

|

||||

3. Reproduce same IDs on all nodes.
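
The "validate manifest + hashes" stage can be sketched as follows. The manifest shape used here (a map from relative path to expected SHA-256 hex) is an assumption, since the actual `manifest.json` schema is not specified above, and `FindMismatches` is a hypothetical helper name. File contents are passed in as byte arrays so the sketch runs without touching disk.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;
using System.Text;

// Lowercase hex SHA-256 of a byte array.
string Sha256Hex(byte[] data) =>
    Convert.ToHexString(SHA256.HashData(data)).ToLowerInvariant();

// Returns the paths whose content is missing or does not match the manifest.
List<string> FindMismatches(
    IReadOnlyDictionary<string, string> manifest,     // path -> expected sha256 (assumed shape)
    IReadOnlyDictionary<string, byte[]> bundleFiles)  // path -> file bytes
{
    var bad = new List<string>();
    foreach (var (path, expected) in manifest)
    {
        if (!bundleFiles.TryGetValue(path, out var bytes) ||
            !string.Equals(Sha256Hex(bytes), expected, StringComparison.OrdinalIgnoreCase))
            bad.Add(path);
    }
    bad.Sort(StringComparer.Ordinal); // report order must not depend on dictionary order
    return bad;
}

var files = new Dictionary<string, byte[]> { ["vex/a.json"] = Encoding.UTF8.GetBytes("{}") };
var manifest = new Dictionary<string, string> { ["vex/a.json"] = Sha256Hex(files["vex/a.json"]) };
Console.WriteLine(FindMismatches(manifest, files).Count); // 0
```

Rejecting the whole bundle when the returned list is non-empty keeps ingestion all-or-nothing, which is what makes a replay on another node comparable.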

|

||||

|

||||

### Acceptance Criteria

|

||||

|

||||

* Byte-identical bundle → byte-identical DB.

|

||||

* Works offline completely.

|

||||

|

||||

---

|

||||

|

||||

# 5. Interfaces for GraphQL/REST/gRPC

|

||||

|

||||

Expose:

|

||||

|

||||

## Queries

|

||||

|

||||

* `vexStatement(id)`

|

||||

* `vexStatementsByArtifact(purl/hash)`

|

||||

* `vexStatus(purl)` → latest merged status

|

||||

* `vexDocument(id)`

|

||||

* `affectedComponents(vulnId)`

|

||||

|

||||

## Mutations

|

||||

|

||||

* `ingestVexDocument`

|

||||

* `translateVex(format)`

|

||||

* `exportVexDocument(id, targetFormat)`

|

||||

* `replayBundle(bundleId)`

|

||||

|

||||

All responses must include deterministic IDs.

|

||||

|

||||

---

|

||||

|

||||

# 6. Detailed Developer Tasks by Sprint

|

||||

|

||||

## Sprint 1: Foundation

|

||||

|

||||

1. Create solution structure.

|

||||

2. Add MongoDB contexts.

|

||||

3. Implement data entities.

|

||||

4. Implement hashing + canonicalizer.

|

||||

5. Implement IVexParser interface.

|

||||

|

||||

## Sprint 2: Parsers

|

||||

|

||||

1. Implement OpenVexParser.

|

||||

2. Implement CycloneDxVexParser.

|

||||

3. Develop strong unit tests for JSON normalization.

|

||||

|

||||

## Sprint 3: Signature & Authority

|

||||

|

||||

1. DSSE envelope reader.

|

||||

2. Call Authority to verify signatures.

|

||||

3. Produce provenance scores.

|

||||

|

||||

## Sprint 4: Merge Policy Engine

|

||||

|

||||

1. Implement deterministic lattice merge.

|

||||

2. Unit tests: 20+ collision scenarios.

|

||||

|

||||

## Sprint 5: Ingestion Pipeline

|

||||

|

||||

1. Implement ingest service end-to-end.

|

||||

2. Insert/update logic.

|

||||

3. Add GraphQL endpoints.

|

||||

|

||||

## Sprint 6: Translation Layer

|

||||

|

||||

1. OpenVEX↔CycloneDX converter.

|

||||

2. Tests for round-trip.

|

||||

|

||||

## Sprint 7: Backfill System

|

||||

|

||||

1. Bom-ref resolver worker.

|

||||

2. Rehashing logic for updated artifacts.

|

||||

3. Events linking SBOM ingestion to backfill.

|

||||

|

||||

## Sprint 8: Air-Gap Bundle

|

||||

|

||||

1. BundleIngestService.

|

||||

2. Manifest verification.

|

||||

3. Trust root local loading.

|

||||

|

||||

## Sprint 9: Hardening

|

||||

|

||||

1. Fuzz parsers.

|

||||

2. Deterministic stress tests.

|

||||

3. Concurrency validation.

|

||||

4. Storage compaction.

|

||||

|

||||

---

|

||||

|

||||

# 7. Failure Handling Matrix

|

||||

|

||||

| Failure             | Action                                 | Logged? | Retries |
| ------------------- | -------------------------------------- | ------- | ------- |
| Invalid JSON        | Reject document                        | Yes     | 0       |
| Invalid schema      | Reject                                 | Yes     | 0       |
| Signature invalid   | Store document, mark signature invalid | Yes     | 0       |
| Artifact unresolved | Store unresolved, enqueue backfill     | Yes     | 3       |
| Merge conflict      | Apply policy                           | Yes     | 0       |
| Canonical mismatch  | Hard fail                              | Yes     | 0       |

|

||||

|

||||

---

|

||||

|

||||

# 8. Developer Unit Test Checklist

|

||||

|

||||

### Must have tests for:

|

||||

|

||||

* Canonicalization stability (100 samples).

|

||||

* Identical input twice → identical `_id`.

|

||||

* Parsing OpenVEX with multi-product definitions.

|

||||

* Parsing CycloneDX with missing bom-refs.

|

||||

* Merge policy tie-breakers.

|

||||

* Air-gap replay reproducibility.

|

||||

* Translation equivalence.

|

||||

|

||||

---

|

||||

|

||||

# 9. Deliverables for Developers

|

||||

|

||||

They must produce:

|

||||

|

||||

1. Interfaces + DTOs + document schemas.

|

||||

2. Canonicalizer with 100% deterministic output.

|

||||

3. Two production-grade parsers.

|

||||

4. Signature verification pipeline.

|

||||

5. Merge policies aligned with Authority trust model.

|

||||

6. End-to-end ingestion service.

|

||||

7. Translation layer.

|

||||

8. Backfill worker.

|

||||

9. Air-gap bundle script + service.

|

||||

10. GraphQL APIs.

|

||||

|

||||

---

|

||||

|

||||

If you want, I can next produce:

|

||||

|

||||

* A full **developer handbook** (60–90 pages).

|

||||

* Full **technical architecture ADRs**.

|

||||

* A concrete **scaffold** with compiles-clean `.NET 10` project.

|

||||

* Complete **test suite specification**.

|

||||

* A **README.md** for new joiners.

|

||||

@@ -0,0 +1,754 @@

|

||||

Here’s a practical way to make a cross‑platform, hash‑stable JSON “fingerprint” for things like a `graph_revision_id`, so your hashes don’t change between OS/locale settings.

|

||||

|

||||

---

|

||||

|

||||

### What “canonical JSON” means (in plain terms)

|

||||

|

||||

* **Deterministic order:** Always write object properties in a fixed order (e.g., lexicographic).

|

||||

* **Stable numbers:** Serialize numbers the same way everywhere (no locale, no extra zeros).

|

||||

* **Normalized text:** Normalize all strings to Unicode **NFC** so accented/combined characters don’t vary.

|

||||

* **Consistent bytes:** Encode as **UTF‑8** with **LF** (`\n`) newlines only.

|

||||

|

||||

These ideas match the JSON Canonicalization Scheme (RFC 8785)—use it as your north star for stable hashing.
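
Two of the properties above are easy to see in isolation. The snippet below is only a demonstration of why normalization and ordinal ordering matter; it is not part of any canonicalizer.

```csharp
using System;
using System.Linq;
using System.Text;

// "é" as one code point vs. "e" + combining acute accent: same glyph, different bytes.
string composed = "\u00E9";
string decomposed = "e\u0301";

Console.WriteLine(composed == decomposed);                                    // False
Console.WriteLine(composed == decomposed.Normalize(NormalizationForm.FormC)); // True

// Ordinal sorting compares UTF-16 code units and ignores the current culture.
var keys = new[] { "b", "a", "B" }.OrderBy(k => k, StringComparer.Ordinal);
Console.WriteLine(string.Join(",", keys)); // B,a,b
```

Without the normalization step the two spellings of "é" would hash differently, and a culture-sensitive sort could order the keys differently from one machine to the next.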

|

||||

|

||||

---

|

||||

|

||||

### Drop‑in C# helper (targets .NET 8/10)

|

||||

|

||||

This gives you a canonical UTF‑8 byte[] and a SHA‑256 hex hash. It:

|

||||

|

||||

* Recursively sorts object properties,

|

||||

* Emits numbers with invariant formatting,

|

||||

* Normalizes all string values to **NFC**,

|

||||

* Uses `\n` endings,

|

||||

* Produces a SHA‑256 for `graph_revision_id`.

|

||||

|

||||

```csharp
using System;
using System.Globalization;
using System.Linq;
using System.Security.Cryptography;
using System.Text;
using System.Text.Json;
using System.Text.Json.Nodes;
using System.Text.Json.Serialization; // JsonNumberHandling

public static class CanonJson
{
    // Entry point: produce canonical UTF-8 bytes
    public static byte[] ToCanonicalUtf8(object? value)
    {
        // 1) Serialize once to JsonNode to work with types safely
        var initialJson = JsonSerializer.SerializeToNode(
            value,
            new JsonSerializerOptions
            {
                NumberHandling = JsonNumberHandling.AllowReadingFromString,
                Encoder = System.Text.Encodings.Web.JavaScriptEncoder.UnsafeRelaxedJsonEscaping // we will control escaping
            });

        // 2) Canonicalize (sort keys, normalize strings, normalize numbers)
        var canonNode = CanonicalizeNode(initialJson);

        // 3) Write in a deterministic manner
        var sb = new StringBuilder(4096);
        WriteCanonical(canonNode!, sb);

        // 4) Ensure LF only
        var lf = sb.ToString().Replace("\r\n", "\n").Replace("\r", "\n");

        // 5) UTF-8 bytes
        return Encoding.UTF8.GetBytes(lf);
    }

    // Convenience: compute SHA-256 hex for graph_revision_id
    public static string ComputeGraphRevisionId(object? value)
    {
        var bytes = ToCanonicalUtf8(value);
        using var sha = SHA256.Create();
        var hash = sha.ComputeHash(bytes);
        var sb = new StringBuilder(hash.Length * 2);
        foreach (var b in hash) sb.Append(b.ToString("x2"));
        return sb.ToString();
    }

    // --- Internals ---

    private static JsonNode? CanonicalizeNode(JsonNode? node)
    {
        if (node is null) return null;

        switch (node)
        {
            case JsonValue v:
                if (v.TryGetValue<string>(out var s))
                {
                    // Normalize strings to NFC
                    var nfc = s.Normalize(NormalizationForm.FormC);
                    return JsonValue.Create(nfc);
                }
                // Check integers before doubles so large integers keep full precision
                if (v.TryGetValue<long>(out var l))
                {
                    return JsonValue.Create(l);
                }
                if (v.TryGetValue<double>(out var d))
                {
                    // RFC-like minimal form: Invariant, no thousand sep; handle -0 => 0
                    if (d == 0) d = 0; // squash -0
                    return JsonValue.Create(d);
                }
                if (v.TryGetValue<bool>(out var b))
                {
                    return JsonValue.Create(b);
                }
                // Fallback: clone, because a node that still has a parent
                // cannot be attached to the new tree (DeepClone needs .NET 8+)
                return v.DeepClone();

            case JsonArray arr:
                var outArr = new JsonArray();
                foreach (var elem in arr)
                    outArr.Add(CanonicalizeNode(elem));
                return outArr;

            case JsonObject obj:
                // Sort keys lexicographically (RFC 8785 uses code unit order)
                var sorted = new JsonObject();
                foreach (var kvp in obj.OrderBy(k => k.Key, StringComparer.Ordinal))
                    sorted[kvp.Key] = CanonicalizeNode(kvp.Value);
                return sorted;

            default:
                return node.DeepClone();
        }
    }

    // Deterministic writer matching our canonical rules
    private static void WriteCanonical(JsonNode node, StringBuilder sb)
    {
        switch (node)
        {
            case JsonObject obj:
                sb.Append('{');
                bool first = true;
                foreach (var kvp in obj)
                {
                    if (!first) sb.Append(',');
                    first = false;
                    WriteString(kvp.Key, sb); // property name
                    sb.Append(':');
                    WriteCanonical(kvp.Value!, sb);
                }
                sb.Append('}');
                break;

            case JsonArray arr:
                sb.Append('[');
                for (int i = 0; i < arr.Count; i++)
                {
                    if (i > 0) sb.Append(',');
                    WriteCanonical(arr[i]!, sb);
                }
                sb.Append(']');
                break;

            case JsonValue val:
                if (val.TryGetValue<string>(out var s))
                {
                    WriteString(s, sb);
                }
                else if (val.TryGetValue<long>(out var l))
                {
                    sb.Append(l.ToString(CultureInfo.InvariantCulture));
                }
                else if (val.TryGetValue<double>(out var d))
                {
                    // Minimal form close to RFC 8785 guidance:
                    // - No NaN/Infinity in JSON
                    // - Invariant culture, shortest round-trippable digits
                    //   (exponent formatting may still differ from RFC 8785)
                    if (double.IsNaN(d) || double.IsInfinity(d))
                        throw new InvalidOperationException("Non-finite numbers are not valid in canonical JSON.");
                    if (d == 0) d = 0; // squash -0
                    sb.Append(d.ToString("R", CultureInfo.InvariantCulture));
                }
                else
                {
                    // bool / null
                    if (val.TryGetValue<bool>(out var b))
                        sb.Append(b ? "true" : "false");
                    else
                        sb.Append("null");
                }
                break;

            default:
                // Also reached for JSON null (a null node matches no type pattern)
                sb.Append("null");
                break;
        }
    }

    private static void WriteString(string s, StringBuilder sb)
    {
        sb.Append('"');
        foreach (var ch in s)
        {
            switch (ch)
            {
                case '\"': sb.Append("\\\""); break;
                case '\\': sb.Append("\\\\"); break;
                case '\b': sb.Append("\\b"); break;
                case '\f': sb.Append("\\f"); break;
                case '\n': sb.Append("\\n"); break;
                case '\r': sb.Append("\\r"); break;
                case '\t': sb.Append("\\t"); break;
                default:
                    if (char.IsControl(ch))
                    {
                        sb.Append("\\u");
                        sb.Append(((int)ch).ToString("x4"));
                    }
                    else
                    {
                        sb.Append(ch);
                    }
                    break;
            }
        }
        sb.Append('"');
    }
}
```

|

||||

|

||||

**Usage in your code (e.g., Stella Ops):**

|

||||

|

||||

```csharp
var payload = new {
    graphId = "core-vuln-edges",
    version = 3,
    edges = new[]{ new { from = "pkg:nuget/Newtonsoft.Json@13.0.3", to = "pkg:nuget/System.Text.Json@8.0.4" } },
    // Use a timestamp that belongs to the graph revision itself; hashing
    // DateTime.UtcNow here would yield a different graph_revision_id on every run.
    meta = new { generatedAt = "2024-01-01T00:00:00Z" }
};

// Canonical bytes (UTF-8 + LF) for storage/attestation:
var canon = CanonJson.ToCanonicalUtf8(payload);

// Stable revision id (SHA-256 hex):
var graphRevisionId = CanonJson.ComputeGraphRevisionId(payload);
Console.WriteLine(graphRevisionId);
```

|

||||

|

||||

---

|

||||

|

||||

### Operational tips

|

||||

|

||||

* **Freeze locales:** Always run with `CultureInfo.InvariantCulture` when formatting numbers/dates before they hit JSON.

|

||||

* **Reject non‑finite numbers:** Don’t allow `NaN`/`Infinity`—they’re not valid JSON and will break canonicalization.

|

||||

* **One writer, everywhere:** Use this same helper in CI, build agents, and runtime so the hash never drifts.

|

||||

* **Record the scheme:** Store the **canonicalization version** (e.g., `canon_v="JCS‑like v1"`) alongside the hash to allow future upgrades without breaking verification.
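The last tip can be sketched as a small value type that keeps the scheme label next to the digest and refuses to compare digests produced under different schemes. This is a minimal sketch under assumptions: `RevisionStamp` and its members are hypothetical names, not part of the helper above.

```csharp
using System;

// Hypothetical pairing of a digest with the canonicalization scheme that produced it.
public sealed record RevisionStamp(string CanonVersion, string Sha256Hex)
{
    // Digests are only comparable when both sides used the same scheme.
    public bool Matches(RevisionStamp other)
    {
        if (!string.Equals(CanonVersion, other.CanonVersion, StringComparison.Ordinal))
        {
            throw new InvalidOperationException(
                $"Cannot compare '{CanonVersion}' with '{other.CanonVersion}'; re-canonicalize first.");
        }

        // Lowercase hex is assumed on both sides, so an ordinal comparison is enough.
        return string.Equals(Sha256Hex, other.Sha256Hex, StringComparison.Ordinal);
    }
}
```

Throwing on a scheme mismatch (rather than returning `false`) keeps "different content" and "different rules" from being confused during a future upgrade.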

|

||||

|

||||

If you want, I can adapt this to stream very large JSONs (avoid `JsonNode`) or emit a **DSSE**/in‑toto style envelope with the canonical bytes as the payload for your attestation chain.

|

||||

Here’s a concrete, step‑by‑step implementation plan you can hand to the devs so they know exactly what to build and how it all fits together.

|

||||

|

||||

I’ll break it into phases:

|

||||

|

||||

1. **Design & scope**

|

||||

2. **Canonical JSON library**

|

||||

3. **Graph canonicalization & `graph_revision_id` calculation**

|

||||

4. **Tooling, tests & cross‑platform verification**

|

||||

5. **Integration & rollout**

|

||||

|

||||

---

|

||||

|

||||

## 1. Design & scope

|

||||

|

||||

### 1.1. Goals

|

||||

|

||||

* Produce a **stable, cross‑platform hash** (e.g. SHA‑256) from JSON content.

|

||||

* This hash becomes your **`graph_revision_id`** for supply‑chain graphs.

|

||||

* Hash **must not change** due to:

|

||||

|

||||

* OS differences (Windows/Linux/macOS)

|

||||

* Locale differences

|

||||

* Whitespace/property order differences

|

||||

* Unicode normalization issues (e.g. accented chars)

|

||||

|

||||

### 1.2. Canonicalization strategy (what devs should implement)

|

||||

|

||||

You’ll use **two levels of canonicalization**:

|

||||

|

||||

1. **Domain-level canonicalization (graph)**

|

||||

Make sure semantically equivalent graphs always serialize to the same in‑memory structure:

|

||||

|

||||

* Sort arrays (e.g. nodes, edges) in a deterministic way (ID, then type, etc.).

|

||||

* Remove / ignore non-semantic or unstable fields (timestamps, debug info, transient IDs).

|

||||

2. **Encoding-level canonicalization (JSON)**

|

||||

Convert that normalized object into **canonical JSON**:

|

||||

|

||||

* Object keys sorted lexicographically (`StringComparer.Ordinal`).

|

||||

* Strings normalized to **Unicode NFC**.

|

||||

* Numbers formatted with **InvariantCulture**, no locale effects.

|

||||

* No NaN/Infinity (reject or map them before hashing).

|

||||

* UTF‑8 output with **LF (`\n`) only**.

|

||||

|

||||

You already have a C# canonical JSON helper from me; this plan is about turning it into a production-ready component and wiring it through the system.

|

||||

|

||||

---

|

||||

|

||||

## 2. Canonical JSON library

|

||||

|

||||

**Owner:** backend platform team

|

||||

**Deliverable:** `StellaOps.CanonicalJson` (or similar) shared library

|

||||

|

||||

### 2.1. Project setup

|

||||

|

||||

* Create a **.NET class library**:

|

||||

|

||||

* `src/StellaOps.CanonicalJson/StellaOps.CanonicalJson.csproj`

|

||||

* Target the same framework as your services (e.g. `net8.0`).

|

||||

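* Add a reference to `System.Text.Json` (already part of the shared framework on `net8.0`; a package reference is only needed for older targets such as `netstandard2.0`).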

|

||||

|

||||

### 2.2. Public API design

|

||||

|

||||

In `CanonicalJson.cs` (or `CanonJson.cs`):

|

||||

|

||||

```csharp

|

||||

namespace StellaOps.CanonicalJson;

|

||||

|

||||

public static class CanonJson

|

||||

{

|

||||

// Version of your canonicalization algorithm (important for future changes)

|

||||

public const string CanonicalizationVersion = "canon-json-v1";

|

||||

|

||||

public static byte[] ToCanonicalUtf8<T>(T value);

|

||||

|

||||

public static string ToCanonicalString<T>(T value);

|

||||

|

||||

public static byte[] ComputeSha256<T>(T value);

|

||||

|

||||

public static string ComputeSha256Hex<T>(T value);

|

||||

}

|

||||

```

|

||||

|

||||

**Behavioral requirements:**

|

||||

|

||||

* `ToCanonicalUtf8`:

|

||||

|

||||

* Serializes input to a `JsonNode`.

|

||||

* Applies canonicalization rules (sort keys, normalize strings, normalize numbers).

|

||||

* Writes minimal JSON with:

|

||||

|

||||

* No extra spaces.

|

||||

* Keys in lexicographic order.

|

||||

* UTF‑8 bytes and LF newlines only.

|

||||

* `ComputeSha256Hex`:

|

||||

|

||||

* Uses `ToCanonicalUtf8` and computes SHA‑256.

|

||||

* Returns lower‑case hex string.
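The hashing half of these requirements is a thin layer over the canonical bytes. A minimal sketch, assuming the bytes were already produced by `ToCanonicalUtf8`; `CanonHashing` is a hypothetical helper name used only for illustration:

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: everything here operates on bytes that are already canonical.
public static class CanonHashing
{
    // Strict UTF-8: no BOM, and invalid byte sequences throw instead of being replaced.
    private static readonly UTF8Encoding StrictUtf8 =
        new UTF8Encoding(encoderShouldEmitUTF8Identifier: false, throwOnInvalidBytes: true);

    public static string ToCanonicalString(byte[] canonicalUtf8) =>
        StrictUtf8.GetString(canonicalUtf8);

    public static byte[] ComputeSha256(byte[] canonicalUtf8) =>
        SHA256.HashData(canonicalUtf8);

    // Convert.ToHexString emits uppercase, so lowercase it explicitly.
    public static string ComputeSha256Hex(byte[] canonicalUtf8) =>
        Convert.ToHexString(ComputeSha256(canonicalUtf8)).ToLowerInvariant();
}
```

Hashing the bytes rather than a re-encoded string avoids a second encoding step where a BOM or replacement character could slip in.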

|

||||

|

||||

### 2.3. Canonicalization rules (dev checklist)

|

||||

|

||||

**Objects (`JsonObject`):**

|

||||

|

||||

* Sort keys using `StringComparer.Ordinal`.

|

||||

* Recursively canonicalize child nodes.

|

||||

|

||||

**Arrays (`JsonArray`):**

|

||||

|

||||

* Preserve order as given by caller.

|

||||

*(The “graph canonicalization” step will make sure this order is semantically stable before JSON.)*

|

||||

|

||||

**Strings:**

|

||||

|

||||

* Normalize to **NFC**:

|

||||

|

||||

```csharp

|

||||

var normalized = original.Normalize(NormalizationForm.FormC);

|

||||

```

|

||||

* When writing JSON:

|

||||

|

||||

* Escape `"` as `\"` and `\` as `\\`; escape control characters (`< 0x20`) that have no short escape as `\uXXXX` with lowercase hex digits.

|

||||

* Use `\n`, `\r`, `\t`, `\b`, `\f` for standard escapes.
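The effect of the NFC rule is easiest to see at the byte level. A minimal sketch, assuming a hypothetical `NfcDemo` helper (the real library would apply the same call inside its string writer):

```csharp
using System;
using System.Text;

public static class NfcDemo
{
    // Normalize to NFC first so canonically equivalent strings yield identical UTF-8 bytes.
    public static byte[] ToNfcUtf8(string s) =>
        Encoding.UTF8.GetBytes(s.Normalize(NormalizationForm.FormC));
}
```

With this in place, `"caf\u00e9"` (precomposed é) and `"cafe\u0301"` (e plus combining acute accent) encode to the same bytes, whereas without normalization the second form is one byte longer and would hash differently.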

|

||||

|

||||

**Numbers:**

|

||||

|

||||

* Support at least `long`, `double`, `decimal`.

|

||||

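* Use **InvariantCulture**. The `G17` format shown here is meant for `double`; format `long` and `decimal` with a plain invariant `ToString` so large values don't lose digits or switch to exponent notation: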

|

||||

|

||||

```csharp

|

||||

someNumber.ToString("G17", CultureInfo.InvariantCulture);

|

||||

```

|

||||

* Normalize `-0` to `0`.

|

||||

* No grouping separators, no locale decimals.

|

||||

* Reject `NaN`, `+Infinity`, `-Infinity` with a clear exception.
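The number rules above can be sketched as two small formatters. This is an illustration under assumptions: `CanonNumber` is a hypothetical name, `decimal` is left out, and the exception type mirrors the earlier helper rather than the custom exception proposed later in this plan.

```csharp
using System;
using System.Globalization;

public static class CanonNumber
{
    // Integers: plain invariant digits, so no precision limit applies.
    public static string Format(long value) =>
        value.ToString(CultureInfo.InvariantCulture);

    // Doubles: reject non-finite values, squash -0, then format with "G17".
    public static string Format(double value)
    {
        if (!double.IsFinite(value))
            throw new InvalidOperationException("Non-finite numbers are not valid in canonical JSON.");

        if (value == 0) value = 0; // -0.0 == 0 is true, so this stores +0

        return value.ToString("G17", CultureInfo.InvariantCulture);
    }
}
```

Keeping a separate overload for `long` matters because `G17` caps output at 17 significant digits, which is fewer than a large 64-bit integer can need.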

|

||||

|

||||

**Booleans & null:**

|

||||

|

||||

* Emit `true`, `false`, `null` (lowercase).

|

||||

|

||||

**Newlines:**

|

||||

|

||||

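* Ensure the final string has only `\n` (a safety net: line breaks inside string values are already written as escapes, so a raw `\r` should never reach the output):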

|

||||

|

||||

```csharp

|

||||

json = json.Replace("\r\n", "\n").Replace("\r", "\n");

|

||||

```

|

||||

|

||||

### 2.4. Error handling & logging

|

||||

|

||||

* Throw a **custom exception** for unsupported content:

|

||||

|

||||

* `CanonicalJsonException : Exception`.

|

||||

* Example triggers:

|

||||

|

||||

* Non‑finite numbers (NaN/Infinity).

|

||||

* Types that can’t be represented in JSON.

|

||||

* Log the path to the field where canonicalization failed (for debugging).
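One way to carry the failing field path is to put it on the exception itself. A minimal sketch, assuming a `Path` property and a hypothetical `CanonGuards` helper; only the exception name comes from the plan above:

```csharp
using System;

// Sketch of the custom exception named above; the Path property is an assumption.
public sealed class CanonicalJsonException : Exception
{
    // JSON-path-like location of the offending value, e.g. "$.edges[3].weight".
    public string Path { get; }

    public CanonicalJsonException(string path, string message, Exception? inner = null)
        : base($"{message} (at {path})", inner)
    {
        Path = path;
    }
}

public static class CanonGuards
{
    // Returns the value unchanged, or throws with the field path if it is NaN/Infinity.
    public static double RequireFinite(double value, string path)
    {
        if (!double.IsFinite(value))
            throw new CanonicalJsonException(path, "Non-finite number is not valid JSON");
        return value;
    }
}
```

Because the path is part of both the message and a typed property, it shows up in plain logs and can also be attached as a structured logging field.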

|

||||

|

||||

---

|

||||

|

||||

## 3. Graph canonicalization & `graph_revision_id`

|

||||

|

||||

This is where the library gets used and where the semantics of the graph are defined.

|

||||

|

||||

**Owner:** team that owns your supply‑chain graph model / graph ingestion.

|

||||

**Deliverables:**

|

||||

|

||||

* Domain-specific canonicalization for graphs.

|

||||

* Stable `graph_revision_id` computation integrated into services.

|

||||

|

||||

### 3.1. Define what goes into the hash

|

||||

|

||||

Create a short **spec document** (internal) that answers:

|

||||

|

||||

1. **What object is being hashed?**

|

||||

|

||||

* For example:

|

||||

|

||||

```json

|

||||

{

|

||||

"graphId": "core-vuln-edges",

|

||||

"schemaVersion": "3",

|

||||

"nodes": [...],

|

||||

"edges": [...],

|

||||

"metadata": {

|

||||

"source": "scanner-x",

|

||||

"epoch": 1732730885

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

2. **Which fields are included vs excluded?**

|

||||

|

||||

* Include:

|

||||

|

||||

* Graph identity (ID, schema version).

|

||||

* Nodes (with stable key set).

|

||||

* Edges (with stable key set).

|

||||

* Exclude or **normalize**:

|

||||

|

||||

* Raw timestamps of ingestion.

|

||||

* Non-deterministic IDs (if they’re not part of graph semantics).

|

||||

* Any environment‑specific details.

|

||||

|

||||

3. **Versioning:**

|

||||

|

||||

* Add:

|

||||

|

||||

* `canonicalizationVersion` (from `CanonJson.CanonicalizationVersion`).

|

||||

* `graphHashSchemaVersion` (separate from graph schema version).

|

||||

|

||||

Example JSON passed into `CanonJson`:

|

||||

|

||||

```json

|

||||

{

|

||||

"graphId": "...",

|

||||

"graphSchemaVersion": "3",

|

||||

"graphHashSchemaVersion": "1",

|

||||

"canonicalizationVersion": "canon-json-v1",

|

||||

"nodes": [...],

|

||||

"edges": [...]

|

||||

}

|

||||

```

|

||||

|

||||

### 3.2. Domain-level canonicalizer

|

||||

|

||||

Create a class like `GraphCanonicalizer` in your graph domain assembly:

|

||||

|

||||

```csharp

|

||||

public interface IGraphCanonicalizer<TGraph>

|

||||

{

|

||||

object ToCanonicalGraphObject(TGraph graph);

|

||||

}

|

||||

```

|

||||

|

||||

Implementation tasks:

|

||||

|

||||

1. **Choose a deterministic ordering for arrays:**

|

||||

|

||||

* Nodes: sort by `(nodeType, nodeId)` or `(packageUrl, version)`.

|

||||

* Edges: sort by `(from, to, edgeType)`.

|

||||

|

||||

2. **Strip / transform unstable fields:**

|

||||

|

||||

* Example: external IDs that may change but are not semantically relevant.

|

||||

* Replace `DateTime` with a normalized string format (if it must be part of the semantics).

|

||||

|

||||

3. **Output DTOs with primitive types only:**

|

||||

|

||||

* Create DTOs like:

|

||||

|

||||

```csharp

|

||||

public sealed record CanonicalNode(

|

||||

string Id,

|

||||

string Type,

|

||||

string Name,

|

||||

string? Version,

|

||||

IReadOnlyDictionary<string, string>? Attributes

|

||||

);

|

||||

```

|

||||

|

||||

* Use simple `record` types / POCOs that serialize cleanly with `System.Text.Json`.

|

||||

|

||||

4. **Combine into a single canonical graph object:**

|

||||

|

||||

```csharp

|

||||

public sealed record CanonicalGraphDto(

|

||||

string GraphId,

|

||||

string GraphSchemaVersion,

|

||||

string GraphHashSchemaVersion,

|

||||

string CanonicalizationVersion,

|

||||

IReadOnlyList<CanonicalNode> Nodes,

|

||||

IReadOnlyList<CanonicalEdge> Edges

|

||||

);

|

||||

```

|

||||

|

||||

`ToCanonicalGraphObject` returns `CanonicalGraphDto`.
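The ordering rule from task 1 can be sketched for edges as follows. `CanonicalEdge` is referenced above but not defined, so the shape used here (and the `GraphOrdering` helper) is an assumption for illustration only:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical edge shape; adjust the fields to your real graph model.
public sealed record CanonicalEdge(string From, string To, string EdgeType);

public static class GraphOrdering
{
    // Sort by (from, to, edgeType) with ordinal comparison so the result does not
    // depend on the current culture or on the order in which edges were inserted.
    public static IReadOnlyList<CanonicalEdge> SortEdges(IEnumerable<CanonicalEdge> edges) =>
        edges
            .OrderBy(e => e.From, StringComparer.Ordinal)
            .ThenBy(e => e.To, StringComparer.Ordinal)
            .ThenBy(e => e.EdgeType, StringComparer.Ordinal)
            .ToList();
}
```

Whether duplicate edges should be collapsed before sorting is a domain decision this sketch deliberately leaves open.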

|

||||

|

||||

### 3.3. `graph_revision_id` calculator

|

||||

|

||||

Add a service:

|

||||

|

||||

```csharp

|

||||

public interface IGraphRevisionCalculator<TGraph>

|

||||

{

|

||||

string CalculateRevisionId(TGraph graph);

|

||||

}

|

||||

|

||||

public sealed class GraphRevisionCalculator<TGraph> : IGraphRevisionCalculator<TGraph>

|

||||

{

|

||||

private readonly IGraphCanonicalizer<TGraph> _canonicalizer;

|

||||

|

||||

public GraphRevisionCalculator(IGraphCanonicalizer<TGraph> canonicalizer)

|

||||

{

|

||||

_canonicalizer = canonicalizer;

|

||||

}

|

||||

|

||||

public string CalculateRevisionId(TGraph graph)

|

||||

{

|

||||

var canonical = _canonicalizer.ToCanonicalGraphObject(graph);

|

||||

return CanonJson.ComputeSha256Hex(canonical);

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

**Wire this up in DI** for all services that handle graph creation/update.

|

||||

|

||||

### 3.4. Persistence & APIs

|

||||

|

||||

1. **Database schema:**

|

||||

|

||||

* Add a `graph_revision_id` column (string, length 64) to graph tables/collections.

|

||||

* Optionally add `graph_hash_schema_version` and `canonicalization_version` columns for debugging.

|

||||

|

||||

2. **Write path:**

|

||||

|

||||

* On graph creation/update:

|

||||

|

||||

* Build the domain model.

|

||||

* Use `GraphRevisionCalculator` to get `graph_revision_id`.

|

||||

* Store it alongside the graph.

|

||||

|

||||

3. **Read path & APIs:**

|

||||

|

||||

* Ensure all relevant APIs return `graph_revision_id` for clients.

|

||||

* If you use it in attestation / DSSE payloads, include it there too.

|

||||

|

||||

---

|

||||

|

||||

## 4. Tooling, tests & cross‑platform verification

|

||||

|

||||

This is where you make sure it **actually behaves identically** on all platforms and input variations.

|

||||

|

||||

### 4.1. Unit tests for `CanonJson`

|

||||

|

||||

Create a dedicated test project: `tests/StellaOps.CanonicalJson.Tests`.

|

||||

|

||||

**Test categories & examples:**

|

||||

|

||||

1. **Property ordering:**

|

||||

|

||||

* Input 1: `{"b":1,"a":2}`

|

||||

* Input 2: `{"a":2,"b":1}`

|

||||

* Assert: `ToCanonicalString` is identical + same hash.

|

||||

|

||||

2. **Whitespace variations:**

|

||||

|

||||

* Input with lots of spaces/newlines vs compact.

|

||||

* Canonical outputs must match.

|

||||

|

||||

3. **Unicode normalization:**

|

||||

|

||||

* One string using precomposed characters.

|

||||

* Same text using combining characters.

|

||||

* Canonical output must match (NFC).

|

||||

|

||||

4. **Number formatting:**

|

||||

|

||||

* `1`, `1.0`, `1.0000000000` → must canonicalize to the same representation.

|

||||

* `-0.0` → canonicalizes to `0`.

|

||||

|

||||

5. **Booleans & null:**

|

||||

|

||||

* Check exact lowercase output: `true`, `false`, `null`.

|

||||

|

||||

6. **Error behaviors:**

|

||||

|

||||

* Try serializing `double.NaN` → expect `CanonicalJsonException`.
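The first two test categories can be exercised against a very small stand-in before the real library exists. The sketch below only sorts keys and drops insignificant whitespace; it does not normalize strings or numbers, and `KeyOrderDemo` is a hypothetical name rather than part of `CanonJson`:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json.Nodes;

public static class KeyOrderDemo
{
    // Rebuilds the tree with object keys in ordinal order; arrays keep their order.
    public static JsonNode? SortKeys(JsonNode? node) => node switch
    {
        JsonObject obj => new JsonObject(
            obj.OrderBy(kv => kv.Key, StringComparer.Ordinal)
               .Select(kv => KeyValuePair.Create(kv.Key, SortKeys(kv.Value)))),
        JsonArray arr => new JsonArray(arr.Select(n => SortKeys(n)).ToArray()),
        null => null,
        // Leaf values are re-parsed so the copy is not attached to the old parent.
        _ => JsonNode.Parse(node.ToJsonString())
    };

    // Compact output: ToJsonString() without options writes no indentation.
    public static string Canonicalize(string json) =>
        SortKeys(JsonNode.Parse(json))?.ToJsonString() ?? "null";
}
```

A property-ordering test then reduces to asserting that `Canonicalize("{\"b\":1,\"a\":2}")` and `Canonicalize("{ \"a\": 2, \"b\": 1 }")` return the same string.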

|

||||

|

||||

### 4.2. Integration tests for graph hashing

|

||||

|

||||

Create tests in the graph service test project:

|

||||

|

||||

1. Build two graphs that are **semantically identical** but:

|

||||

|

||||

* Nodes/edges inserted in different order.

|

||||

* Fields ordered differently.

|

||||

* Different whitespace in strings (only if your domain canonicalizer trims or collapses it; the JSON canonicalizer keeps string contents as-is).

|

||||

|

||||

2. Assert:

|

||||

|

||||

* `CalculateRevisionId` yields the same result.

|

||||

* Canonical DTOs match expected snapshots (optional snapshot tests).

|

||||

|

||||

3. Build graphs that differ in a meaningful way (e.g., extra edge).

|

||||

|

||||

* Assert that `graph_revision_id` is different.

|

||||

|

||||

### 4.3. Cross‑platform smoke tests

|

||||

|

||||

**Goal:** Prove same hash on Windows, Linux and macOS.

|

||||

|

||||

Implementation idea:

|

||||

|

||||

1. Add a small console tool: `StellaOps.CanonicalJson.Tool`:

|

||||

|

||||

* Usage:

|

||||

`stella-canon hash graph.json`

|

||||

* Prints:

|

||||

|

||||

* Canonical JSON (optional flag).

|

||||

* SHA‑256 hex.

|

||||

|

||||

2. In CI:

|

||||

|

||||

* Run the same test JSON on:

|

||||

|

||||

* Windows runner.

|

||||

* Linux runner (plus a macOS runner if you want to cover all three platforms named in the goal).

|

||||

* Assert hashes are equal (store expected in a test harness or artifact).

|

||||

|

||||

---

|

||||

|

||||

## 5. Integration into your pipelines & rollout

|

||||

|

||||

### 5.1. Where to compute `graph_revision_id`

|

||||

|

||||

Decide (and document) **one place** where the ID is authoritative, for example:

|

||||

|

||||

* After ingestion + normalization step, **before** persisting to your graph store.

|

||||

* Or in a dedicated “graph revision service” used by ingestion pipelines.

|

||||

|

||||

Implementation:

|

||||

|

||||

* Update the ingestion service:

|

||||

|

||||

1. Parse incoming data into internal graph model.

|

||||

2. Apply domain canonicalizer → `CanonicalGraphDto`.

|

||||

3. Use `GraphRevisionCalculator` → `graph_revision_id`.

|

||||

4. Persist graph + revision ID.

|

||||

|

||||

### 5.2. Migration / backfill plan

|

||||

|

||||

If you already have graphs in production:

|

||||

|

||||

1. Add new columns/fields for `graph_revision_id` (nullable).

|

||||

2. Write a migration job:

|

||||

|

||||

* Fetch existing graph.

|

||||

* Canonicalize + hash.

|

||||

* Store `graph_revision_id`.

|

||||

3. For a transition period:

|

||||

|

||||

* Accept both “old” and “new” graphs.

|

||||

* Use `graph_revision_id` where available; fall back to legacy IDs when necessary.

|

||||

4. After backfill is complete:

|

||||

|

||||

* Make `graph_revision_id` mandatory for new graphs.

|

||||

* Phase out any legacy revision logic.

|

||||

|

||||

### 5.3. Feature flag & safety

|

||||

|

||||

* Gate the use of `graph_revision_id` in high‑risk flows (e.g., attestations, policy decisions) behind a **feature flag**:

|

||||

|

||||

* `graphRevisionIdEnabled`.

|

||||

* Roll out gradually:

|

||||

|

||||

* Start in staging.

|

||||

* Then a subset of production tenants.

|

||||

* Monitor for:

|

||||

|

||||

* Unexpected changes in revision IDs on unchanged graphs.

|

||||

* Errors from `CanonicalJsonException`.

|

||||

|

||||

---

|

||||

|

||||

## 6. Documentation for developers & operators

|

||||

|

||||

Have a short internal doc (or page) with:

|

||||

|

||||

1. **Canonical JSON spec summary:**

|

||||

|

||||

* Sorting rules.

|

||||

* Unicode NFC requirement.

|

||||

* Number format rules.

|

||||

* Non‑finite numbers not allowed.

|

||||

|

||||

2. **Graph hashing spec:**

|

||||

|

||||

* Fields included in the hash.

|

||||

* Fields explicitly ignored.

|

||||

* Array ordering rules for nodes/edges.

|

||||

* Current:

|

||||

|

||||

* `graphHashSchemaVersion = "1"`

|

||||

* `CanonicalizationVersion = "canon-json-v1"`

|

||||

|

||||

3. **Examples:**

|

||||

|

||||

* Sample graph JSON input.

|

||||

* Canonical JSON output.

|

||||

* Expected SHA‑256.

|

||||

|

||||

4. **Operational guidance:**

|

||||

|

||||

* How to run the CLI tool to debug:

|

||||

|

||||

* “Why did this graph get a new `graph_revision_id`?”

|

||||

* What to do on canonicalization errors (usually indicates bad data).

|

||||

|

||||

---

|

||||

|

||||

If you’d like, as a next step I can draft the **actual C# projects and folder structure** (with file names + stub code) so your team can just copy/paste the skeleton into the repo and start filling in the domain-specific bits.

|

||||

@@ -0,0 +1,775 @@

|

||||

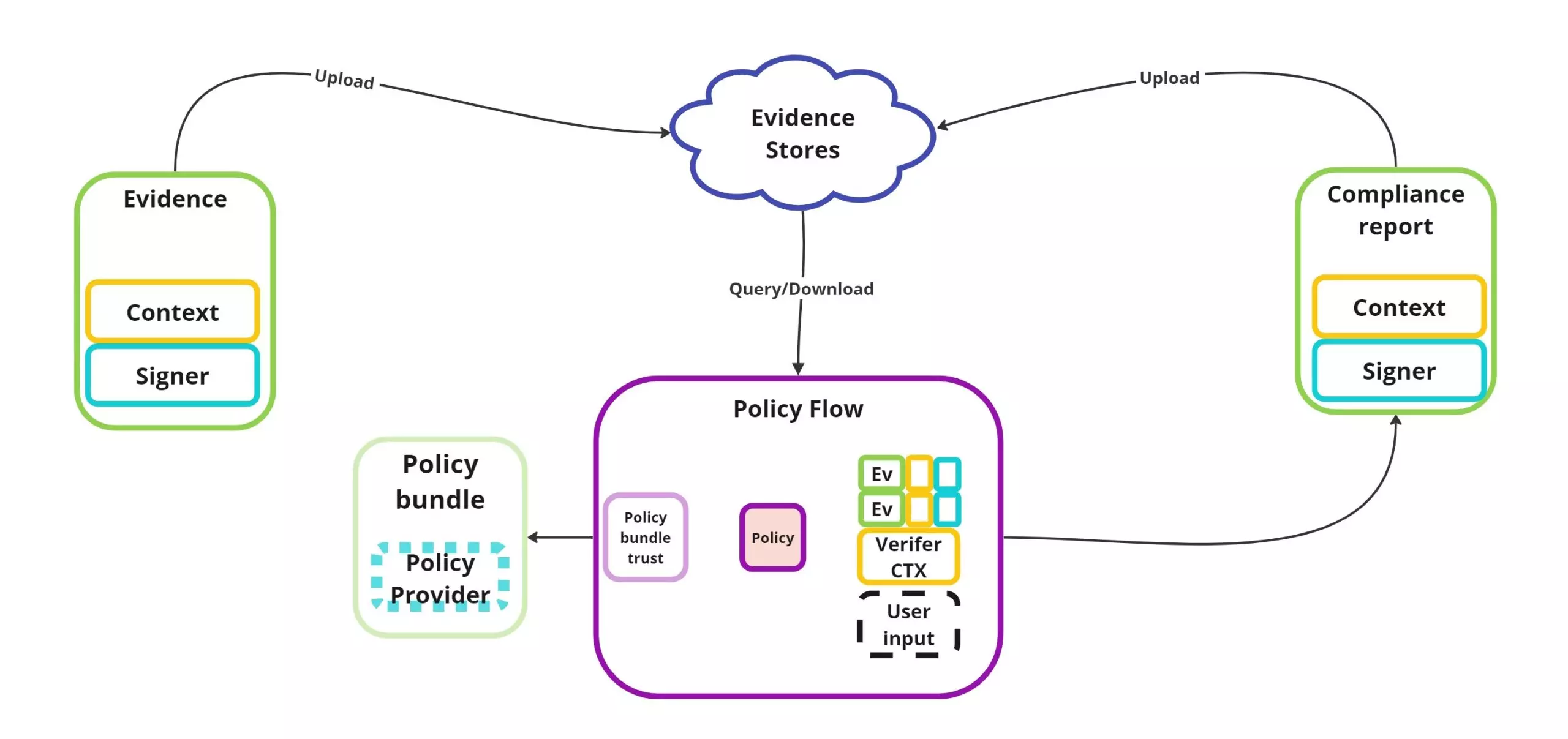

Here’s a crisp, practical idea to harden Stella Ops: make the SBOM → VEX pipeline **deterministic and verifiable** by treating it as a series of signed, hash‑anchored state transitions—so every rebuild yields the *same* provenance envelope you can mathematically check across air‑gapped nodes.

|

||||

|

||||

---

|

||||

|

||||

### What this means (plain English)

|

||||

|

||||

* **SBOM** (what’s inside): list of packages, files, and their hashes.

|

||||

* **VEX** (what’s affected): statements like “CVE‑2024‑1234 is **not** exploitable here because X.”

|

||||

* **Deterministic**: same inputs → byte‑identical outputs, every time.

|

||||

* **Verifiable transitions**: each step (ingest → normalize → resolve → reachability → VEX) emits a signed attestation that pins its inputs/outputs by content hash.

|

||||

|

||||

---

|

||||

|

||||

### Minimal design you can drop into Stella Ops

|

||||

|

||||

1. **Canonicalize everything**

|

||||

|

||||

* Sort JSON keys, normalize whitespace/line endings.

|

||||

* Freeze timestamps by recording them only in an outer envelope (not inside payloads used for hashing).

|

||||

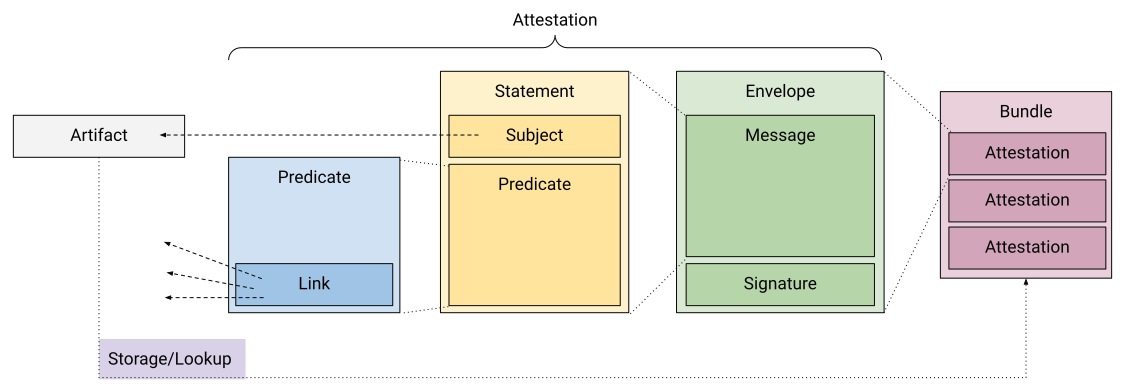

2. **Edge‑level attestations**

|

||||

|

||||

* For each dependency edge in the reachability graph `(nodeA → nodeB via symbol S)`, emit a tiny DSSE payload:

|

||||

|

||||

* `{edge_id, from_purl, to_purl, rule_id, witness_hashes[]}`

|

||||

* Hash is over the canonical payload; sign via DSSE (Sigstore or your Authority PKI).

|

||||

3. **Step attestations (pipeline states)**

|

||||

|

||||

* For each stage (`Sbomer`, `Scanner`, `Vexer/Excititor`, `Concelier`):

|

||||

|

||||

* Emit `predicateType`: `stellaops.dev/attestations/<stage>`

|

||||

* Include `input_digests[]`, `output_digests[]`, `parameters_digest`, `tool_version`

|

||||

* Sign with stage key; record the public key (or cert chain) in Authority.

|

||||

4. **Provenance envelope**

|

||||

|

||||

* Build a top‑level DSSE that includes:

|

||||

|

||||

* Merkle root of **all** edge attestations.

|

||||

* Merkle roots of each stage’s outputs.

|

||||

* Mapping table of `PURL ↔ build‑ID (ELF/PE/Mach‑O)` for stable identity.

|

||||

5. **Replay manifest**

|

||||

|

||||

* A single, declarative file that pins:

|

||||

|

||||

* Feeds (CPE/CVE/VEX sources + exact digests)

|

||||

* Rule/lattice versions and parameters

|

||||

* Container images + layers’ SHA256

|

||||

* Platform toggles (e.g., PQC on/off)

|

||||

* Running **replay** on this manifest must reproduce the same Merkle roots.

|

||||

6. **Air‑gap sync**

|

||||

|

||||

* Export only the envelopes + Merkle roots + public certs.

|

||||

* On the target, verify chains and recompute roots from the replay manifest—no internet required.
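The design leans on Merkle roots without fixing a tree construction, so here is one possible construction as a sketch. All of its choices are assumptions rather than something the design above specifies: leaves are sorted ordinally, leaf and inner hashes get `0x00`/`0x01` prefixes, and an unpaired node is promoted unchanged. `MerkleSketch` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;

public static class MerkleSketch
{
    // Computes a root over leaf digests given as hex SHA-256 strings.
    public static string ComputeRoot(IEnumerable<string> leafDigestsHex)
    {
        // Sorting first makes the root independent of the order attestations were emitted.
        var level = leafDigestsHex
            .OrderBy(d => d, StringComparer.Ordinal)
            .Select(d => Hash(0x00, Convert.FromHexString(d), Array.Empty<byte>()))
            .ToList();
        if (level.Count == 0)
            throw new ArgumentException("At least one leaf is required.", nameof(leafDigestsHex));

        while (level.Count > 1)
        {
            var next = new List<byte[]>((level.Count + 1) / 2);
            for (var i = 0; i < level.Count; i += 2)
            {
                // Pair neighbours; an unpaired last node moves up unchanged.
                next.Add(i + 1 < level.Count ? Hash(0x01, level[i], level[i + 1]) : level[i]);
            }
            level = next;
        }
        return Convert.ToHexString(level[0]).ToLowerInvariant();
    }

    // SHA-256 over prefix || left || right; the prefix separates leaves from inner nodes.
    private static byte[] Hash(byte prefix, byte[] left, byte[] right)
    {
        var buffer = new byte[1 + left.Length + right.Length];
        buffer[0] = prefix;
        left.CopyTo(buffer, 1);
        right.CopyTo(buffer, 1 + left.Length);
        return SHA256.HashData(buffer);
    }
}
```

Whatever construction you pick, record it in the replay manifest next to the rule versions, since a different pairing rule yields a different root for the same leaves.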

|

||||

|

||||

---

|

||||

|

||||

### Slim C# shapes (DTOs) for DSSE predicates

|

||||

|

||||

```csharp

|

||||

public record EdgeAttestation(

|

||||

string EdgeId,

|

||||

string FromPurl,

|

||||

string ToPurl,

|

||||

string RuleId,

|

||||

string[] WitnessHashes, // e.g., CFG slice, symbol tables, lineage JSON

|

||||

string CanonicalAlgo = "SHA256");

|

||||

|

||||

public record StepAttestation(

|

||||

string Stage, // "Sbomer" | "Scanner" | "Excititor" | "Concelier"

|

||||

string ToolVersion,

|

||||

string[] InputDigests,

|

||||

string[] OutputDigests,

|

||||

string ParametersDigest, // hash of canonicalized params

|

||||

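DateTimeOffset StartedAt, // wall-clock: keep out of any digest that must be reproducible

|

||||

DateTimeOffset FinishedAt); // wall-clock: same caveat as StartedAt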

|

||||

|

||||

public record ProvenanceEnvelope(

|

||||

string ReplayManifestDigest,

|

||||

string EdgeMerkleRoot,

|

||||

Dictionary<string,string> StageMerkleRoots, // stage -> root

|

||||

Dictionary<string,string> PurlToBuildId); // stable identity map

|

||||

```
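Once serialized, these DTOs become DSSE payloads, and DSSE signs a pre-authentication encoding (PAE) of the payload type and body rather than the raw body. The following is a minimal sketch of that encoding based on the DSSE v1 format; `DssePae` is a hypothetical helper name, and key handling and signing are left out.

```csharp
using System;
using System.Globalization;
using System.Text;

public static class DssePae
{
    // PAE(type, body) = "DSSEv1" SP LEN(type) SP type SP LEN(body) SP body
    // LEN is the byte length as ASCII decimal; SP is a single space.
    public static byte[] Encode(string payloadType, byte[] payload)
    {
        var inv = CultureInfo.InvariantCulture;
        var typeBytes = Encoding.UTF8.GetBytes(payloadType);
        var header = Encoding.UTF8.GetBytes(
            "DSSEv1 " + typeBytes.Length.ToString(inv) + " " + payloadType + " " +
            payload.Length.ToString(inv) + " ");

        // Append the payload bytes untouched; they should already be canonical.
        var result = new byte[header.Length + payload.Length];
        header.CopyTo(result, 0);
        payload.CopyTo(result, header.Length);
        return result;
    }
}
```

The bytes returned by `Encode` are what the stage key signs; the verifier rebuilds them from the envelope's payload type and payload before checking the signature.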

|

||||

|

||||

---

|

||||

|

||||

### Determinism checklist (quick win)

|

||||

|

||||

* Canonical JSON (stable key order) everywhere.

|

||||

* No wall‑clock timestamps inside hashed payloads.

|

||||

* Only reference inputs by digest, never by URL.

|

||||

* Lock rule sets / lattice policies by digest.

|

||||

* Normalize file paths (POSIX style) and line endings.

|

||||

* Container images by **digest**, not tags.
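The path and line-ending item in this checklist is small enough to sketch directly. `PathNormalizer` is a hypothetical helper name; the sketch only rewrites separators and line breaks and does not resolve `..` segments or change case.

```csharp
using System;

public static class PathNormalizer
{
    // Windows-style separators become POSIX-style so the same file hashes identically everywhere.
    public static string ToPosix(string path) => path.Replace('\\', '/');

    // CRLF and lone CR both become LF; the order matters so CRLF does not turn into two LFs.
    public static string ToLf(string text) => text.Replace("\r\n", "\n").Replace('\r', '\n');
}
```

Apply both before computing any digest that covers paths or text content, so a Windows build agent and a Linux one pin the same values.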

|

||||

|

||||

---

|

||||

|

||||

### Why it’s worth it

|

||||

|

||||

* **Auditability:** every VEX claim is backed by a verifiable graph path with signed edges.

|

||||

* **Reproducibility:** regulators (and customers) can replay your exact scan and get identical roots.

|

||||

* **Integrity at scale:** air‑gapped sites can validate without trusting your network—just the math.

|

||||

|

||||

If you want, I’ll turn this into ready‑to‑paste `.proto` contracts + a small .NET library (`StellaOps.Attestations`) with DSSE signing/verification helpers and Merkle builders.

|

||||

Got it — let’s turn that sketch into a concrete implementation plan your devs can actually execute.

|

||||

|

||||

I’ll structure this as:

|

||||

|

||||

1. **Objectives & scope**

|

||||

2. **High-level architecture**

|

||||

3. **Workstreams & milestones**

|