save progress


@@ -0,0 +1,395 @@

|

||||

# Reachability Drift Detection

|

||||

|

||||

**Date**: 2025-12-17

|

||||

**Status**: ANALYZED - Ready for Implementation Planning

|

||||

**Related Advisories**:

|

||||

- 14-Dec-2025 - Smart-Diff Technical Reference

|

||||

- 14-Dec-2025 - Reachability Analysis Technical Reference

|

||||

|

||||

---

|

||||

|

||||

## 1. EXECUTIVE SUMMARY

|

||||

|

||||

This advisory proposes extending StellaOps' Smart-Diff capabilities to detect **reachability drift** - changes in whether vulnerable code paths are reachable from application entry points between container image versions.

|

||||

|

||||

**Core Insight**: Raw diffs don't equal risk. Most changed lines don't matter for exploitability. Reachability drift detection fuses **call-stack reachability graphs** with **Smart-Diff metadata** to flag only paths that went from **unreachable to reachable** (or vice-versa), tied to **SBOM components** and **VEX statements**.
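The flip detection described above can be sketched as a set difference over reachable sinks. This is a minimal illustration in Python rather than the project's C#; the function names and the edge-list graph representation are assumptions for the example, not part of the existing StellaOps code.

```python
from collections import deque

def reachable_sinks(edges, entrypoints, sinks):
    """Return the subset of `sinks` reachable from any entrypoint via call edges."""
    adjacency = {}
    for source, target in edges:
        adjacency.setdefault(source, []).append(target)
    seen = set(entrypoints)
    queue = deque(entrypoints)
    while queue:
        node = queue.popleft()
        for callee in adjacency.get(node, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen & set(sinks)

def reachability_drift(base, head, sinks):
    """Compare two (edges, entrypoints) graphs and report sinks whose reachability flipped."""
    base_hit = reachable_sinks(base[0], base[1], sinks)
    head_hit = reachable_sinks(head[0], head[1], sinks)
    return {
        "newly_reachable": sorted(head_hit - base_hit),  # unreachable -> reachable
        "mitigated": sorted(base_hit - head_hit),        # reachable -> unreachable
    }

# Hypothetical base and head scans of the same image lineage.
base = (
    [("POST /payments", "Capture"), ("GET /admin", "Execute"), ("Execute", "cmd.Run")],
    ["POST /payments", "GET /admin"],
)
head = (
    [("POST /payments", "Capture"), ("Capture", "crypto.Verify")],
    ["POST /payments"],
)
drift = reachability_drift(base, head, ["crypto.Verify", "cmd.Run"])
```

Only sinks that change state appear in the result; a sink reachable in both scans is ignored no matter how many lines changed around it.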

|

||||

|

||||

---

|

||||

|

||||

## 2. GAP ANALYSIS vs EXISTING INFRASTRUCTURE

|

||||

|

||||

### 2.1 What Already Exists (Leverage Points)

|

||||

|

||||

| Component | Location | Status |
|-----------|----------|--------|
| `MaterialRiskChangeDetector` | `Scanner.SmartDiff.Detection` | DONE - R1-R4 rules |
| `VexCandidateEmitter` | `Scanner.SmartDiff.Detection` | DONE - Absent API detection |
| `ReachabilityGateBridge` | `Scanner.SmartDiff.Detection` | DONE - Lattice to 3-bit |
| `ReachabilitySignal` | `Signals.Contracts` | DONE - Call path model |
| `ReachabilityLatticeState` | `Signals.Contracts.Evidence` | DONE - 5-state enum |
| `CallPath`, `CallPathNode` | `Signals.Contracts.Evidence` | DONE - Path representation |
| `ReachabilityEvidenceChain` | `Signals.Contracts.Evidence` | DONE - Proof chain |
| `vex.graph_nodes/edges` | DB Schema | DONE - Graph storage |
| `scanner.risk_state_snapshots` | DB Schema | DONE - State storage |
| `scanner.material_risk_changes` | DB Schema | DONE - Change storage |
| `FnDriftCalculator` | `Scanner.Core.Drift` | DONE - Classification drift |
| `SarifOutputGenerator` | `Scanner.SmartDiff.Output` | DONE - CI output |
| Reachability Benchmark | `bench/reachability-benchmark/` | DONE - Ground truth cases |
| Language Analyzers | `Scanner.Analyzers.Lang.*` | PARTIAL - Package detection, limited call graph |

|

||||

|

||||

### 2.2 What's Missing (New Implementation Required)

|

||||

|

||||

| Component | Advisory Ref | Gap Description |
|-----------|-------------|-----------------|
| **Call Graph Extractor (.NET)** | §7 C# Roslyn | No MSBuildWorkspace/Roslyn analysis exists |
| **Call Graph Extractor (Go)** | §7 Go SSA | No golang.org/x/tools/go/ssa integration |
| **Call Graph Extractor (Java)** | §7 | No Soot/WALA integration |
| **Call Graph Extractor (Node)** | §7 | No @babel/traverse integration |
| **`scanner.code_changes` table** | §4 Smart-Diff | AST-level diff facts not persisted |
| **Drift Cause Explainer** | §6 Timeline | No causal attribution on path nodes |
| **Path Viewer UI** | §UX | No Angular component for call path visualization |
| **Cross-scan Function-level Drift** | §6 | State drift exists, function-level doesn't |
| **Entrypoint Discovery (per-framework)** | §3 | Limited beyond package.json/manifest parsing |

|

||||

|

||||

### 2.3 Terminology Mapping

|

||||

|

||||

| Advisory Term | StellaOps Equivalent | Notes |
|--------------|---------------------|-------|
| `commit_sha` | `scan_id` | StellaOps is image-centric, not commit-centric |
| `call_node` | `vex.graph_nodes` | Existing schema, extend don't duplicate |
| `call_edge` | `vex.graph_edges` | Existing schema |
| `reachability_drift` | `scanner.material_risk_changes` | Add `cause`, `path_nodes` columns |
| Risk Drift | Material Risk Change | Existing term is more precise |
| Router, Signals | Signals module only | Router module is not implemented |

|

||||

|

||||

---

|

||||

|

||||

## 3. RECOMMENDED IMPLEMENTATION PATH

|

||||

|

||||

### 3.1 What to Ship (Delta from Current State)

|

||||

|

||||

```
NEW TABLES:
├── scanner.code_changes            # AST-level diff facts
└── scanner.call_graph_snapshots    # Per-scan call graph cache

NEW COLUMNS:
├── scanner.material_risk_changes.cause         # TEXT - "guard_removed", "new_route", etc.
├── scanner.material_risk_changes.path_nodes    # JSONB - Compressed path representation
└── scanner.material_risk_changes.base_scan_id  # TEXT - For cross-scan comparison (matches scan_id in §4.4)

NEW SERVICES:
├── CallGraphExtractor.DotNet       # Roslyn-based for .NET projects
├── CallGraphExtractor.Node         # AST-based for Node.js
├── DriftCauseExplainer             # Attribute causes to code changes
└── PathCompressor                  # Compress paths for storage/UI

NEW UI:
└── PathViewerComponent             # Angular component for call path visualization
```

|

||||

|

||||

### 3.2 What NOT to Ship (Avoid Duplication)

|

||||

|

||||

- **Don't create `call_node`/`call_edge` tables** - Use existing `vex.graph_nodes`/`vex.graph_edges`

|

||||

- **Don't add `commit_sha` columns** - Use `scan_id` consistently

|

||||

- **Don't build React components** - Angular v17 is the stack

|

||||

|

||||

### 3.3 Use Valkey for Graph Caching

|

||||

|

||||

Valkey is already integrated in `Router.Gateway.RateLimit`. Use it for:

|

||||

- **Call graph snapshot caching** - Fast cross-instance lookups

|

||||

- **Reachability result caching** - Avoid recomputation

|

||||

- **Key pattern**: `stella:callgraph:{scan_id}:{lang}:{digest}`
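
A minimal sketch of building a key in that pattern, written in Python for brevity. The choice of SHA-256 over the serialized graph as `{digest}` is an assumption; the advisory only says the graph digest is a content hash.

```python
import hashlib

def callgraph_cache_key(scan_id: str, lang: str, graph_bytes: bytes) -> str:
    """Build a cache key of the form stella:callgraph:{scan_id}:{lang}:{digest}."""
    # Assumption: the digest is the SHA-256 hex of the serialized call graph.
    digest = hashlib.sha256(graph_bytes).hexdigest()
    return f"stella:callgraph:{scan_id}:{lang}:{digest}"

# Hypothetical scan id and payload, for illustration only.
key = callgraph_cache_key("scan-42", "dotnet", b'{"nodes":[],"edges":[]}')
```

Because the digest is derived from content, two scans that produce byte-identical graphs differ only in the `scan_id` segment, which makes it easy to spot duplicates before recomputing reachability.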

|

||||

|

||||

```yaml
# Configuration pattern (align with existing Router rate limiting)
reachability:
  valkey_connection: "localhost:6379"
  valkey_bucket: "stella-reachability"
  cache_ttl_hours: 24
  circuit_breaker:
    failure_threshold: 5
    timeout_seconds: 30
```

|

||||

|

||||

---

|

||||

|

||||

## 4. TECHNICAL DESIGN

|

||||

|

||||

### 4.1 Call Graph Extraction Model

|

||||

|

||||

```csharp
using System;
using System.Collections.Immutable;

/// <summary>
/// Per-scan call graph snapshot for drift comparison.
/// </summary>
public sealed record CallGraphSnapshot
{
    public required string ScanId { get; init; }
    public required string GraphDigest { get; init; }   // Content hash
    public required string Language { get; init; }
    public required DateTimeOffset ExtractedAt { get; init; }
    public required ImmutableArray<CallGraphNode> Nodes { get; init; }
    public required ImmutableArray<CallGraphEdge> Edges { get; init; }
    public required ImmutableArray<string> EntrypointIds { get; init; }
}

public sealed record CallGraphNode
{
    public required string NodeId { get; init; }        // Stable identifier
    public required string Symbol { get; init; }        // Fully qualified name
    public required string File { get; init; }
    public required int Line { get; init; }
    public required string Package { get; init; }
    public required string Visibility { get; init; }    // public/internal/private
    public required bool IsEntrypoint { get; init; }
    public required bool IsSink { get; init; }
    public string? SinkCategory { get; init; }          // CMD_EXEC, SQL_RAW, etc.
}

public sealed record CallGraphEdge
{
    public required string SourceId { get; init; }
    public required string TargetId { get; init; }
    public required string CallKind { get; init; }      // direct/virtual/delegate
}
```

|

||||

|

||||

### 4.2 Code Change Facts Model

|

||||

|

||||

```csharp
using System.Text.Json;

/// <summary>
/// AST-level code change facts from Smart-Diff.
/// </summary>
public sealed record CodeChangeFact
{
    public required string ScanId { get; init; }
    public required string File { get; init; }
    public required string Symbol { get; init; }
    public required CodeChangeKind Kind { get; init; }
    public required JsonDocument Details { get; init; }
}

public enum CodeChangeKind
{
    Added,
    Removed,
    SignatureChanged,
    GuardChanged,       // Boolean condition around call modified
    DependencyChanged,  // Callee package/version changed
    VisibilityChanged   // public<->internal<->private
}
```

|

||||

|

||||

### 4.3 Drift Cause Attribution

|

||||

|

||||

```csharp
using System.Collections.Generic;
using System.Linq;

/// <summary>
/// Explains why a reachability flip occurred.
/// </summary>
public sealed class DriftCauseExplainer
{
    public DriftCause Explain(
        CallGraphSnapshot baseGraph,
        CallGraphSnapshot headGraph,
        string sinkSymbol,
        IReadOnlyList<CodeChangeFact> codeChanges)
    {
        // Find shortest path to sink in head graph.
        // Note: ShortestPath and the DriftCause factory methods are not defined in this advisory.
        var path = ShortestPath(headGraph.EntrypointIds, sinkSymbol, headGraph);
        if (path is null)
            return DriftCause.Unknown;

        // Check each node on path for code changes
        foreach (var nodeId in path.NodeIds)
        {
            var node = headGraph.Nodes.First(n => n.NodeId == nodeId);
            var change = codeChanges.FirstOrDefault(c => c.Symbol == node.Symbol);

            if (change is not null)
            {
                return change.Kind switch
                {
                    CodeChangeKind.GuardChanged => DriftCause.GuardRemoved(node.Symbol, node.File, node.Line),
                    CodeChangeKind.Added => DriftCause.NewPublicRoute(node.Symbol),
                    CodeChangeKind.VisibilityChanged => DriftCause.VisibilityEscalated(node.Symbol),
                    CodeChangeKind.DependencyChanged => DriftCause.DepUpgraded(change.Details),
                    _ => DriftCause.CodeModified(node.Symbol)
                };
            }
        }

        return DriftCause.Unknown;
    }
}
```
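
The explainer above relies on a `ShortestPath` helper that this advisory does not define. One plausible shape for it is a multi-source breadth-first search with parent pointers. The sketch below is in Python for brevity, and it identifies the sink by node id rather than by symbol, which is an assumption of the example.

```python
from collections import deque

def shortest_path(entrypoint_ids, sink_id, edges):
    """Return the shortest entrypoint-to-sink path as a list of node ids, or None."""
    adjacency = {}
    for source, target in edges:
        adjacency.setdefault(source, []).append(target)

    # Every entrypoint starts at depth 0, so the first time the sink is
    # dequeued it has been reached by a shortest path from some entrypoint.
    parent = {entry: None for entry in entrypoint_ids}
    queue = deque(entrypoint_ids)
    while queue:
        node = queue.popleft()
        if node == sink_id:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for callee in adjacency.get(node, []):
            if callee not in parent:
                parent[callee] = node
                queue.append(callee)
    return None

# Hypothetical edges: Capture reaches Verify both directly and via Process.
edges = [
    ("route", "Capture"),
    ("Capture", "Process"),
    ("Process", "Verify"),
    ("Capture", "Verify"),
]
path = shortest_path(["route"], "Verify", edges)
```

Returning `None` for an unreachable sink lines up with the `path is null` check in `Explain`, which maps that case to `DriftCause.Unknown`.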

|

||||

|

||||

### 4.4 Database Schema Extensions

|

||||

|

||||

```sql
-- New table: Code change facts from AST-level Smart-Diff
CREATE TABLE scanner.code_changes (
    id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
    tenant_id UUID NOT NULL,
    scan_id TEXT NOT NULL,
    file TEXT NOT NULL,
    symbol TEXT NOT NULL,
    change_kind TEXT NOT NULL,  -- added|removed|signature|guard|dep|visibility
    details JSONB,
    detected_at TIMESTAMPTZ NOT NULL DEFAULT NOW(),

    CONSTRAINT code_changes_unique UNIQUE (tenant_id, scan_id, file, symbol)
);

CREATE INDEX idx_code_changes_scan ON scanner.code_changes(scan_id);
CREATE INDEX idx_code_changes_symbol ON scanner.code_changes(symbol);

-- New table: Per-scan call graph snapshots (compressed)
CREATE TABLE scanner.call_graph_snapshots (
    id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
    tenant_id UUID NOT NULL,
    scan_id TEXT NOT NULL,
    language TEXT NOT NULL,
    graph_digest TEXT NOT NULL,  -- Content hash for dedup
    node_count INT NOT NULL,
    edge_count INT NOT NULL,
    entrypoint_count INT NOT NULL,
    extracted_at TIMESTAMPTZ NOT NULL DEFAULT NOW(),
    cas_uri TEXT NOT NULL,  -- Reference to CAS for full graph

    CONSTRAINT call_graph_snapshots_unique UNIQUE (tenant_id, scan_id, language)
);

CREATE INDEX idx_call_graph_snapshots_digest ON scanner.call_graph_snapshots(graph_digest);

-- Extend existing material_risk_changes table
ALTER TABLE scanner.material_risk_changes
    ADD COLUMN IF NOT EXISTS cause TEXT,
    ADD COLUMN IF NOT EXISTS path_nodes JSONB,
    ADD COLUMN IF NOT EXISTS base_scan_id TEXT;

CREATE INDEX IF NOT EXISTS idx_material_risk_changes_cause
    ON scanner.material_risk_changes(cause) WHERE cause IS NOT NULL;
```

|

||||

|

||||

---

|

||||

|

||||

## 5. UI DESIGN

|

||||

|

||||

### 5.1 Risk Drift Card (PR/Commit View)

|

||||

|

||||

```
┌─────────────────────────────────────────────────────────────────────┐
│ RISK DRIFT                                                        ▼ │
├─────────────────────────────────────────────────────────────────────┤
│ +3 new reachable paths        -2 mitigated paths                    │
│                                                                     │
│ ┌─ NEW REACHABLE ─────────────────────────────────────────────────┐ │
│ │ POST /payments → PaymentsController.Capture → ... →             │ │
│ │   crypto.Verify(legacy)                                         │ │
│ │                                                                 │ │
│ │ [pkg:payments@1.8.2] [CVE-2024-1234] [EPSS 0.72] [VEX:affected] │ │
│ │                                                                 │ │
│ │ Cause: guard removed in AuthFilter.cs:42                        │ │
│ │                                                                 │ │
│ │ [View Path] [Quarantine Route] [Pin Version] [Add Exception]    │ │
│ └─────────────────────────────────────────────────────────────────┘ │
│                                                                     │
│ ┌─ MITIGATED ─────────────────────────────────────────────────────┐ │
│ │ GET /admin → AdminController.Execute → ... → cmd.Run            │ │
│ │                                                                 │ │
│ │ [pkg:admin@2.0.0] [CVE-2024-5678] [VEX:not_affected]            │ │
│ │                                                                 │ │
│ │ Reason: Vulnerable API removed in upgrade                       │ │
│ └─────────────────────────────────────────────────────────────────┘ │
└─────────────────────────────────────────────────────────────────────┘
```

|

||||

|

||||

### 5.2 Path Viewer Component

|

||||

|

||||

```
┌─────────────────────────────────────────────────────────────────────┐
│ CALL PATH: POST /payments → crypto.Verify(legacy)        [Collapse] │
├─────────────────────────────────────────────────────────────────────┤
│                                                                     │
│ ○ POST /payments  [ENTRYPOINT]                                      │
│ │   PaymentsController.cs:45                                        │
│ │                                                                   │
│ ├──○ PaymentsController.Capture()                                   │
│ │  │   PaymentsController.cs:89                                     │
│ │  │                                                                │
│ │  ├──○ PaymentService.ProcessPayment()                             │
│ │  │  │   PaymentService.cs:156                                     │
│ │  │  │                                                             │
│ │  │  ├──● CryptoHelper.Verify()  ← GUARD REMOVED                   │
│ │  │  │  │   CryptoHelper.cs:42 [Changed: AuthFilter removed]       │
│ │  │  │  │                                                          │
│ │  │  │  └──◆ crypto.Verify(legacy)  [VULNERABLE SINK]              │
│ │  │  │       pkg:crypto@1.2.3                                      │
│ │  │  │       CVE-2024-1234 (CVSS 9.8)                              │
│                                                                     │
│ Legend: ○ Node  ● Changed  ◆ Sink  ─ Call                           │
└─────────────────────────────────────────────────────────────────────┘
```

|

||||

|

||||

---

|

||||

|

||||

## 6. POLICY INTEGRATION

|

||||

|

||||

### 6.1 CI Gate Behavior

|

||||

|

||||

```yaml
# Policy wiring for drift detection
smart_diff:
  gates:
    # Fail PR when new reachable paths to affected sinks
    - condition: "delta_reachable > 0 AND vex_status IN ['affected', 'under_investigation']"
      action: block
      message: "New reachable paths to vulnerable sinks detected"

    # Warn when new paths to any sink
    - condition: "delta_reachable > 0"
      action: warn
      message: "New reachable paths detected - review recommended"

    # Auto-mitigate when VEX confirms not_affected
    - condition: "vex_status == 'not_affected' AND vex_justification IN ['component_not_present', 'fix_applied']"
      action: allow
      auto_mitigate: true
```
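
The advisory does not say how overlapping gates are resolved. The Python sketch below assumes first-match-wins in declaration order and models each condition string as a plain predicate; both choices are assumptions for illustration, not the policy engine's actual semantics.

```python
# Each gate is (action, predicate). The predicates mirror the three
# condition strings in the YAML above, in the same order.
GATES = [
    ("block", lambda f: f["delta_reachable"] > 0
        and f["vex_status"] in ("affected", "under_investigation")),
    ("warn", lambda f: f["delta_reachable"] > 0),
    ("allow", lambda f: f["vex_status"] == "not_affected"
        and f.get("vex_justification") in ("component_not_present", "fix_applied")),
]

def evaluate(finding, gates=GATES, default="allow"):
    """Return the action of the first gate whose predicate matches (assumed semantics)."""
    for action, predicate in gates:
        if predicate(finding):
            return action
    return default

blocked = evaluate({"delta_reachable": 3, "vex_status": "affected"})
warned = evaluate({"delta_reachable": 1, "vex_status": "not_affected",
                   "vex_justification": "fix_applied"})
passed = evaluate({"delta_reachable": 0, "vex_status": "fixed"})
```

Under first-match ordering, a `not_affected` finding with a new reachable path still produces a warning, because the `warn` gate precedes the `allow` gate. If that is not the intended outcome, the gate order or the resolution rule needs to be stated explicitly in the policy.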

|

||||

|

||||

### 6.2 Exit Codes

|

||||

|

||||

| Code | Meaning |
|------|---------|
| 0 | Success, no material drift |
| 1 | Success, material drift found (info) |
| 2 | Success, hardening regression detected |
| 3 | Success, new KEV reachable |
| 10+ | Errors |
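
The table does not say which code wins when several conditions hold at once. A minimal Python sketch, assuming the highest-numbered applicable code takes precedence and using 10 as a generic error code (both assumptions):

```python
def exit_code(material_drift=False, hardening_regression=False,
              new_kev_reachable=False, error=False):
    """Map scan outcomes to the exit codes in the table above.

    Assumed precedence: errors first, then the most severe finding.
    """
    if error:
        return 10  # the table reserves 10+ for errors; 10 is a placeholder
    if new_kev_reachable:
        return 3
    if hardening_regression:
        return 2
    if material_drift:
        return 1
    return 0

code = exit_code(material_drift=True, new_kev_reachable=True)
```

Codes 1 to 3 still mean the scan itself succeeded, so a CI step that treats any non-zero exit as a failure would need to list them as accepted values wherever drift should only inform rather than block.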

|

||||

|

||||

---

|

||||

|

||||

## 7. SPRINT STRUCTURE

|

||||

|

||||

### 7.1 Master Sprint: SPRINT_3600_0001_0001

|

||||

|

||||

**Topic**: Reachability Drift Detection

|

||||

**Dependencies**: SPRINT_3500 (Smart-Diff) - COMPLETE

|

||||

|

||||

### 7.2 Sub-Sprints

|

||||

|

||||

| ID | Topic | Priority | Effort | Dependencies |
|----|-------|----------|--------|--------------|
| SPRINT_3600_0002_0001 | Call Graph Infrastructure | P0 | Large | Master |
| SPRINT_3600_0003_0001 | Drift Detection Engine | P0 | Medium | 3600.2 |
| SPRINT_3600_0004_0001 | UI and Evidence Chain | P1 | Medium | 3600.3 |

|

||||

|

||||

---

|

||||

|

||||

## 8. REFERENCES

|

||||

|

||||

- `docs/product-advisories/14-Dec-2025 - Smart-Diff Technical Reference.md`

|

||||

- `docs/product-advisories/14-Dec-2025 - Reachability Analysis Technical Reference.md`

|

||||

- `docs/implplan/SPRINT_3500_0001_0001_smart_diff_master.md`

|

||||

- `docs/reachability/lattice.md`

|

||||

- `bench/reachability-benchmark/README.md`

|

||||

@@ -0,0 +1,751 @@

|

||||

Here’s a practical, first‑time‑friendly blueprint for making your security workflow both **explainable** and **provable**—from triage to approval.

|

||||

|

||||

# Explainable triage UX (what & why)

|

||||

|

||||

Show every risk score with the minimum evidence a responder needs to trust it:

|

||||

|

||||

* **Reachable path:** the concrete call‑chain (or network path) proving the vuln is actually hit.

|

||||

* **Entrypoint boundary:** the external surface (HTTP route, CLI verb, cron, message topic) that leads to that path.

|

||||

* **VEX status:** the exploitability decision (Affected/Not Affected/Under Investigation/Fixed) with rationale.

|

||||

* **Last‑seen timestamp:** when this evidence was last observed/generated.

|

||||

|

||||

## UI pattern (compact, 1‑click expand)

|

||||

|

||||

* **Row (collapsed):** `Score 72 • CVE-2024-12345 • service: api-gateway • package: x.y.z`
* **Expand panel (evidence):**

  * **Path:** `POST /billing/charge → BillingController.Pay() → StripeClient.Create()`
  * **Boundary:** `Ingress: /billing/charge (JWT: required, scope: payments:write)`
  * **VEX:** `Not Affected (runtime guard strips untrusted input before sink)`
  * **Last seen:** `2025-12-18T09:22Z` (scan: sbomer#c1a2, policy run: lattice#9f0d)
  * **Actions:** “Open proof bundle”, “Re-run check”, “Create exception (time-boxed)”

|

||||

|

||||

## Data contract (what the panel needs)

|

||||

|

||||

```json
{
  "finding_id": "f-7b3c",
  "cve": "CVE-2024-12345",
  "component": {"name": "stripe-sdk", "version": "6.1.2"},
  "reachable_path": [
    "HTTP POST /billing/charge",
    "BillingController.Pay",
    "StripeClient.Create"
  ],
  "entrypoint": {"type": "http", "route": "/billing/charge", "auth": "jwt:payments:write"},
  "vex": {"status": "not_affected", "justification": "runtime_sanitizer_blocks_sink", "timestamp": "2025-12-18T09:22:00Z"},
  "last_seen": "2025-12-18T09:22:00Z",
  "attestation_refs": ["sha256:…sbom", "sha256:…vex", "sha256:…policy"]
}
```

|

||||

|

||||

# Evidence‑linked approvals (what & why)

|

||||

|

||||

Make “Approve to ship” contingent on **verifiable proof**, not screenshots:

|

||||

|

||||

* **Chain** must exist and be machine‑verifiable: **SBOM → VEX → policy decision**.

|

||||

* Use **in‑toto/DSSE** attestations or **SLSA provenance** so each link has a signature, subject digest, and predicate.

|

||||

* **Gate** merges/deploys only when the chain validates.

|

||||

|

||||

## Pipeline gate (simple policy)

|

||||

|

||||

* Require:

  1. **SBOM attestation** referencing the exact image digest
  2. **VEX attestation** covering all listed components (or explicit allow-gaps)
  3. **Policy decision attestation** (e.g., “risk ≤ threshold AND all reachable vulns = Not Affected/Fixed”)

|

||||

|

||||

### Minimal decision attestation (DSSE envelope → JSON payload)

|

||||

|

||||

```json
{
  "predicateType": "stella/policy-decision@v1",
  "subject": [{"name": "registry/org/app", "digest": {"sha256": "<image-digest>"}}],
  "predicate": {
    "policy": "risk_threshold<=75 && reachable_vulns.all(v => v.vex in ['not_affected','fixed'])",
    "inputs": {
      "sbom_ref": "sha256:<sbom>",
      "vex_ref": "sha256:<vex>"
    },
    "result": {"allowed": true, "score": 61, "exemptions": []},
    "evidence_refs": ["sha256:<reachability-proof-bundle>"],
    "run_at": "2025-12-18T09:23:11Z"
  }
}
```
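
A minimal Python sketch of how the `policy` expression in that payload might be evaluated. It reads `risk_threshold<=75` as "the score must not exceed 75", and the `blocking` field in the result is a hypothetical addition for explainability; neither is confirmed by the payload itself.

```python
ACCEPTED_VEX = ("not_affected", "fixed")

def decide(score, reachable_vulns, threshold=75):
    """Allow only if the score is within threshold and every reachable vuln has an accepted VEX status."""
    blocking = [v["cve"] for v in reachable_vulns if v["vex"] not in ACCEPTED_VEX]
    return {
        "allowed": score <= threshold and not blocking,
        "score": score,
        "blocking": blocking,  # hypothetical field: which CVEs caused a denial
    }

ok = decide(61, [{"cve": "CVE-2024-12345", "vex": "not_affected"}])
denied = decide(61, [{"cve": "CVE-2024-12345", "vex": "affected"}])
```

Recording the blocking CVEs alongside the boolean keeps the decision explainable in the triage panel without re-running the policy.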

|

||||

|

||||

# How this lands in your product (concrete moves)

|

||||

|

||||

* **Backend:** add `/findings/:id/evidence` (returns the contract above) + `/approvals/:artifact/attestations`.

|

||||

* **Storage:** keep **proof bundles** (graphs, call stacks, logs) as content‑addressed blobs; store DSSE envelopes alongside.

|

||||

* **UI:** one list → expandable rows; chips for VEX status; “Open proof” shows the call graph and boundary in 1 view.

|

||||

* **CLI/API:** `stella verify image:<digest> --require sbom,vex,decision` returns a signed summary; pipelines fail on non‑zero.

|

||||

* **Metrics:**

  * **% changes with complete attestations** (target ≥95%)
  * **TTFE (time-to-first-evidence)** from alert → panel open (target ≤30s)
  * **Post-deploy reversions** due to missing proof (trend to zero)

|

||||

|

||||

# Starter acceptance checklist

|

||||

|

||||

* [ ] Every risk row expands to path, boundary, VEX, last‑seen in <300 ms.

|

||||

* [ ] “Approve” button disabled until SBOM+VEX+Decision attestations validate for the **exact artifact digest**.

|

||||

* [ ] One‑click “Show DSSE chain” renders the three envelopes with subject digests and signers.

|

||||

* [ ] Audit log captures who approved, which digests, and which evidence hashes.

|

||||

|

||||


|

||||

Below is a “build‑it” guide for Stella Ops that goes past the concept level: concrete services, schemas, pipelines, signing/storage choices, UI components, and the exact invariants you should enforce so triage is **explainable** and approvals are **provably evidence‑linked**.

|

||||

|

||||

---

|

||||

|

||||

## 1) Start with the invariants (the rules your system must never violate)

|

||||

|

||||

If you implement nothing else, implement these invariants—they’re what make the UX trustworthy and the approvals auditable.

|

||||

|

||||

### Artifact anchoring invariant

|

||||

|

||||

Every finding, every piece of evidence, and every approval must be anchored to an immutable **subject digest** (e.g., container image digest `sha256:…`, binary SHA, or SBOM digest).

|

||||

|

||||

* No “latest tag” approvals.

|

||||

* No “approve commit” without mapping to the built artifact digest.

|

||||

|

||||

### Evidence closure invariant

|

||||

|

||||

A policy decision is only valid if it references **exactly** the evidence it used:

|

||||

|

||||

* `inputs.sbom_ref`

|

||||

* `inputs.vex_ref`

|

||||

* `inputs.reachability_ref` (optional but recommended)

|

||||

* `inputs.scan_ref` (optional)

|

||||

* and any config/IaC refs used for boundary/exposure.
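
One way to enforce this invariant is to reject any decision whose input refs do not all resolve to stored, verified attestations. The Python sketch below assumes `sbom_ref` and `vex_ref` are mandatory (the list above marks only the other two as optional) and that verified digests are available as a set; both are assumptions.

```python
REQUIRED_INPUTS = ("sbom_ref", "vex_ref")  # assumed mandatory; the others are optional

def check_evidence_closure(decision_inputs, known_digests):
    """Verify that required refs are present and every ref resolves to a known digest."""
    missing = [key for key in REQUIRED_INPUTS if key not in decision_inputs]
    unresolved = sorted(ref for ref in decision_inputs.values() if ref not in known_digests)
    return {
        "valid": not missing and not unresolved,
        "missing": missing,
        "unresolved": unresolved,
    }

# Hypothetical digests for illustration.
inputs = {"sbom_ref": "sha256:aaa", "vex_ref": "sha256:bbb", "reachability_ref": "sha256:ccc"}
closure = check_evidence_closure(inputs, {"sha256:aaa", "sha256:bbb"})
```

Here the decision is rejected because `reachability_ref` points at a digest the store has never verified, even though that input is optional: once a ref is cited, it has to resolve.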

|

||||

|

||||

### Signature chain invariant

|

||||

|

||||

Evidence is only admissible if it is:

|

||||

|

||||

1. structured (machine readable),

|

||||

2. signed (DSSE/in‑toto),

|

||||

3. verifiable (trusted identity/keys),

|

||||

4. retrievable by digest.

|

||||

|

||||

DSSE is specifically designed to authenticate both the message and its type (payload type) and avoid canonicalization pitfalls. ([GitHub][1])
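
The mechanism behind that claim is DSSE's pre-authentication encoding (PAE): the signature covers a length-prefixed concatenation of the payload type and the raw payload bytes, so no JSON canonicalization is needed. A minimal Python sketch of PAE as the DSSE specification defines it (the function name is ours):

```python
def pae(payload_type: str, payload: bytes) -> bytes:
    """DSSE pre-authentication encoding: the exact bytes that get signed.

    Layout: "DSSEv1" SP LEN(type) SP type SP LEN(payload) SP payload,
    with lengths written as ASCII decimal byte counts.
    """
    type_bytes = payload_type.encode("utf-8")
    return b"DSSEv1 %d %b %d %b" % (len(type_bytes), type_bytes, len(payload), payload)

encoded = pae("http://example.com/HelloWorld", b"hello world")
```

Because the type is inside the signed bytes, an attacker cannot take a validly signed SBOM payload and replay it as a policy decision under a different `payloadType`.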

|

||||

|

||||

### Staleness invariant

|

||||

|

||||

Evidence must have:

|

||||

|

||||

* `last_seen` and `expires_at` (or TTL),

|

||||

* a “stale evidence” behavior in policy (deny or degrade score).
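
A minimal Python sketch of the two stale-evidence behaviors. The `penalty` value, the 0 to 100 score cap, and the reading of "degrade" as "raise the risk score" are all assumptions made for the example.

```python
from datetime import datetime, timezone

def apply_staleness(risk_score, evidence, now, mode="deny", penalty=20):
    """Apply the stale-evidence rule: deny outright, or degrade by raising the risk score."""
    # Normalize a trailing "Z" so older Python versions can parse the timestamp.
    expires_at = datetime.fromisoformat(evidence["expires_at"].replace("Z", "+00:00"))
    if now < expires_at:
        return {"stale": False, "allowed": True, "risk_score": risk_score}
    if mode == "deny":
        return {"stale": True, "allowed": False, "risk_score": risk_score}
    # Degrade mode: keep the decision open but make the finding look riskier.
    return {"stale": True, "allowed": True, "risk_score": min(100, risk_score + penalty)}

evidence = {"last_seen": "2025-12-18T09:22:00Z", "expires_at": "2025-12-25T09:22:00Z"}
after_expiry = datetime(2025, 12, 26, tzinfo=timezone.utc)
denied = apply_staleness(61, evidence, after_expiry, mode="deny")
degraded = apply_staleness(61, evidence, after_expiry, mode="degrade")
```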

|

||||

|

||||

---

|

||||

|

||||

## 2) Choose the canonical formats and where you’ll store “proof”

|

||||

|

||||

### Attestation envelope: DSSE + in‑toto Statement

|

||||

|

||||

Use:

|

||||

|

||||

* **in‑toto Attestation Framework** “Statement” as the payload model (“subject + predicateType + predicate”). ([GitHub][2])

|

||||

* Wrap it in **DSSE** for signing. ([GitHub][1])

|

||||

* If you use Sigstore bundles, the DSSE envelope is expected to carry an in‑toto statement and uses `payloadType` like `application/vnd.in-toto+json`. ([Sigstore][3])
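
As a rough sketch of how the pieces fit together, the Python below builds an in-toto v1 Statement and wraps it in an unsigned DSSE envelope. Signing is deliberately left out, the helper names are ours, and the sorted, compact JSON serialization is a convenience of the example rather than a requirement of either specification.

```python
import base64
import json

def build_statement(name, sha256_hex, predicate_type, predicate):
    """Assemble an in-toto v1 Statement: subject + predicateType + predicate."""
    return {
        "_type": "https://in-toto.io/Statement/v1",
        "subject": [{"name": name, "digest": {"sha256": sha256_hex}}],
        "predicateType": predicate_type,
        "predicate": predicate,
    }

def wrap_unsigned(statement):
    """Wrap a statement in a DSSE envelope with the signatures still to be added."""
    payload = json.dumps(statement, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return {
        "payloadType": "application/vnd.in-toto+json",
        "payload": base64.b64encode(payload).decode("ascii"),
        "signatures": [],  # a signer appends {"keyid": ..., "sig": ...} entries here
    }

# Placeholder digest and predicate, for illustration only.
statement = build_statement("registry/org/app", "0" * 64,
                            "stella/policy-decision@v1", {"result": {"allowed": True}})
envelope = wrap_unsigned(statement)
```

The signer operates on the decoded payload bytes plus `payloadType`, so whatever serialization produced `payload` must be stored verbatim; re-serializing the JSON later would invalidate the signature.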

|

||||

|

||||

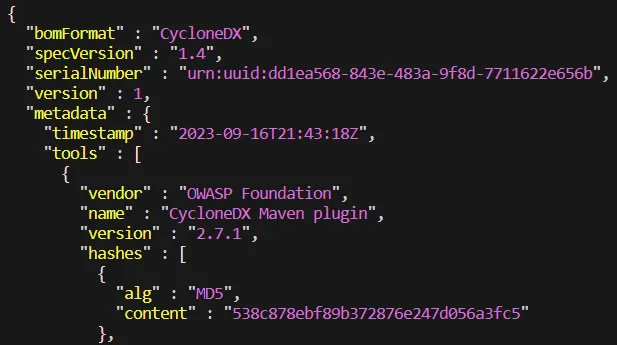

### SBOM format: CycloneDX or SPDX

|

||||

|

||||

* SPDX is an ISO/IEC standard and has v3.0 and v2.3 lines in the ecosystem. ([spdx.dev][4])

|

||||

* CycloneDX is an ECMA standard (ECMA‑424) and widely used for application security contexts. ([GitHub][5])

|

||||

|

||||

Pick one as **your canonical** (internally), but ingest both.

|

||||

|

||||

### VEX format: OpenVEX (practical) + map to “classic” VEX statuses

|

||||

|

||||

VEX’s value is triage noise reduction: vendors can assert whether a product is affected, fixed, under investigation, or not affected. ([NTIA][6])

|

||||

OpenVEX is a minimal, embeddable implementation of VEX intended for interoperability. ([GitHub][7])

|

||||

|

||||

### Where to store proof: OCI registry referrers

|

||||

|

||||

Use OCI “subject/referrers” so proofs travel with the artifact:

|

||||

|

||||

* OCI 1.1 introduces an explicit `subject` field and referrers graph for signatures/attestations/SBOMs. ([opencontainers.org][8])

|

||||

* ORAS documentation explains linking artifacts via `subject`. ([Oras][9])

|

||||

* Microsoft docs show `oras attach … --artifact-type …` patterns (works across registries that support referrers). ([Microsoft Learn][10])

|

||||

|

||||

---

|

||||

|

||||

## 3) System architecture (services + data flow)

|

||||

|

||||

### Services (minimum set)

|

||||

|

||||

1. **Ingestor**

   * Pulls scanner outputs (SCA/SAST/IaC), SBOM, runtime signals.
2. **Evidence Builder**

   * Computes reachability, entrypoints, boundary/auth context, score explanation.
3. **Attestation Service**

   * Creates in-toto statements, wraps DSSE, signs (cosign/KMS), stores to registry.
4. **Policy Engine**

   * Evaluates allow/deny + reason codes, emits signed decision attestation.
   * Use OPA/Rego for maintainable declarative policies. ([openpolicyagent.org][11])
5. **Stella Ops API**

   * Serves findings + evidence panels to the UI (fast, cached).
6. **UI**

   * Explainable triage panel + chain viewer + approve button.

|

||||

|

||||

### Event flow (artifact‑centric)

|

||||

|

||||

1. Build produces `image@sha256:X`
2. Generate SBOM → sign + attach
3. Run vuln scan → sign + attach (optional but useful)
4. Evidence Builder creates:

   * reachability proof
   * boundary proof
   * vex doc (or imports vendor VEX + adds your context)
5. Policy engine evaluates → emits “decision attestation”
6. UI shows explainable triage + “approve” gating

|

||||

|

||||

---

|

||||

|

||||

## 4) Data model (the exact objects you need)

|

||||

|

||||

### Core IDs you should standardize

|

||||

|

||||

* `subject_digest`: `sha256:<image digest>`

|

||||

* `subject_name`: `registry/org/app`

|

||||

* `finding_key`: `(subject_digest, detector, cve, component_purl, location)` stable hash

|

||||

* `component_purl`: package URL (PURL) canonical component identifier
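
A minimal Python sketch of deriving `finding_key` as a stable hash. Serializing the tuple as a JSON array before hashing is an assumption of the example; it avoids the ambiguity that plain string concatenation would introduce when a field contains the delimiter.

```python
import hashlib
import json

def finding_key(subject_digest, detector, cve, component_purl, location):
    """Hash the identifying tuple into a stable, content-derived key."""
    fields = [subject_digest, detector, cve, component_purl, location]
    # A JSON array keeps field boundaries unambiguous, unlike "a:b:c" joins.
    canonical = json.dumps(fields, separators=(",", ":"), ensure_ascii=False)
    return "sha256:" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Hypothetical values for illustration.
key = finding_key("sha256:abc", "sca", "CVE-2024-12345",
                  "pkg:npm/stripe-sdk@6.1.2", "BillingController.Pay")
```

The same finding rediscovered by a later scan of the same artifact hashes to the same key, which is what lets `first_seen` and `last_seen` be tracked on a single row.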

|

||||

|

||||

### Tables (Postgres suggested)

|

||||

|

||||

**artifacts**

|

||||

|

||||

* `id (uuid)`

|

||||

* `name`

|

||||

* `digest` (unique)

|

||||

* `created_at`

|

||||

|

||||

**findings**

|

||||

|

||||

* `id (uuid)`

|

||||

* `artifact_digest`

|

||||

* `cve`

|

||||

* `component_purl`

|

||||

* `severity`

|

||||

* `raw_score`

|

||||

* `risk_score`

|

||||

* `status` (open/triaged/accepted/fixed)

|

||||

* `first_seen`, `last_seen`

|

||||

|

||||

**evidence**

|

||||

|

||||

* `id (uuid)`

|

||||

* `finding_id`

|

||||

* `kind` (reachable_path | boundary | score_explain | vex | ...)

|

||||

* `payload_json` (jsonb, small)

|

||||

* `blob_ref` (content-addressed URI for big payloads)

|

||||

* `last_seen`

|

||||

* `expires_at`

|

||||

* `confidence` (0–1)

|

||||

* `source_attestation_digest` (nullable)

|

||||

|

||||

**attestations**

|

||||

|

||||

* `id (uuid)`

|

||||

* `artifact_digest`

|

||||

* `predicate_type`

|

||||

* `attestation_digest` (sha256 of DSSE envelope)

|

||||

* `signer_identity` (OIDC subject / cert identity)

|

||||

* `issued_at`

|

||||

* `registry_ref` (where attached)

|

||||

|

||||

**approvals**

|

||||

|

||||

* `id (uuid)`

|

||||

* `artifact_digest`

|

||||

* `decision_attestation_digest`

|

||||

* `approver`

|

||||

* `approved_at`

|

||||

* `expires_at`

|

||||

* `reason`

|

||||

|

||||

---

|

||||

|

||||

## 5) Explainable triage: how to compute the “Path + Boundary + VEX + Last‑seen”

|

||||

|

||||

### 5.1 Reachable path proof (call chain / flow)

|

||||

|

||||

You need a uniform reachability result type:

|

||||

|

||||

* `reachable = true` with an explicit path

|

||||

* `reachable = false` with justification (e.g., symbol absent, dead code)

|

||||

* `reachable = unknown` with reason (insufficient symbols, dynamic dispatch)

|

||||

|

||||

**Implementation strategy**

|

||||

|

||||

1. **Symbol mapping**: map CVE → vulnerable symbols/functions/classes

   * Use one or more:

     * vendor advisory → patched functions
     * diff mining (commit that fixes CVE) to extract changed symbols
     * curated mapping in your DB for high-volume CVEs
2. **Program graph extraction** at build time:

   * Produce a call graph or dependency graph per language.
   * Store as compact adjacency list (or protobuf) keyed by `subject_digest`.
3. **Entrypoint discovery**:

   * HTTP routes (framework metadata)
   * gRPC service methods
   * queue/stream consumers
   * cron/CLI handlers
4. **Path search**:

   * BFS/DFS from entrypoints to vulnerable symbols.
   * Record the shortest path + top-K alternatives.
5. **Proof bundle**:

   * path nodes with stable IDs
   * file hashes + line ranges (no raw source required)
   * tool version + config hash
   * graph digest

|

||||

|

||||

**Reachability evidence JSON (UI‑friendly)**

|

||||

|

||||

```json
{
  "kind": "reachable_path",
  "result": "reachable",
  "confidence": 0.86,
  "entrypoints": [
    {"type": "http", "route": "POST /billing/charge", "auth": "jwt:payments:write"}
  ],
  "paths": [{
    "path_id": "p-1",
    "steps": [
      {"node": "BillingController.Pay", "file_hash": "sha256:aaa", "lines": [41, 88]},
      {"node": "StripeClient.Create", "file_hash": "sha256:bbb", "lines": [10, 52]},
      {"node": "stripe-sdk.vulnFn", "symbol": "stripe-sdk::parseWebhook", "evidence": "symbol-match"}
    ]
  }],
  "graph": {"digest": "sha256:callgraph...", "format": "stella-callgraph-v1"},
  "last_seen": "2025-12-18T09:22:00Z",
  "expires_at": "2025-12-25T09:22:00Z"
}
```

|

||||

|

||||

**UI rule:** never show “reachable” without a concrete, replayable path ID.

|

||||

|

||||

---

|

||||

|

||||

### 5.2 Boundary proof (the “why this is exposed” part)

|

||||

|

||||

Boundary proof answers: “Even if reachable, who can trigger it?”

|

||||

|

||||

**Data sources**

|

||||

|

||||

* Kubernetes ingress/service (exposure)

|

||||

* API gateway routes and auth policies

|

||||

* service mesh auth (mTLS, JWT)

|

||||

* IAM policies (for cloud events)

|

||||

* network policies (deny/allow)

|

||||

|

||||

**Boundary evidence schema**

|

||||

|

||||

```json
{
  "kind": "boundary",
  "surface": {"type":"http","route":"POST /billing/charge"},
  "exposure": {"internet": true, "ports":[443]},
  "auth": {
    "mechanism":"jwt",
    "required_scopes":["payments:write"],
    "audience":"billing-api"
  },
  "rate_limits": {"enabled": true, "rps": 20},
  "controls": [
    {"type":"waf","status":"enabled"},
    {"type":"input_validation","status":"enabled","location":"BillingController.Pay"}
  ],
  "last_seen": "2025-12-18T09:22:00Z",
  "confidence": 0.74
}
```

|

||||

|

||||

**How to build it**

|

||||

|

||||

* Create a “Surface Extractor” plugin per environment:

|

||||

|

||||

* `k8s-extractor`: reads ingress + service + annotations

|

||||

* `gateway-extractor`: reads API gateway config

|

||||

* `iac-extractor`: parses Terraform/CloudFormation

|

||||

* Normalize into the schema above.
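
As a rough sketch of the normalization step, the function below maps a simplified ingress description onto the boundary evidence shape. The input dictionary is a hypothetical, pre-parsed form (keys `route`, `public`, `ports`, `observed_at` are assumptions), not the raw Kubernetes object, and `normalize_ingress` is an illustrative name.

```python
def normalize_ingress(ingress, auth_policy=None):
    """Map a simplified ingress description to the boundary evidence shape."""
    # With no known auth policy, record that explicitly instead of omitting it.
    auth = auth_policy or {"mechanism": "none", "required_scopes": []}
    return {
        "kind": "boundary",
        "surface": {"type": "http", "route": ingress["route"]},
        "exposure": {
            "internet": ingress.get("public", False),
            "ports": sorted(ingress.get("ports", [])),
        },
        "auth": auth,
        "last_seen": ingress["observed_at"],
    }

boundary = normalize_ingress(
    {
        "route": "POST /billing/charge",
        "public": True,
        "ports": [443],
        "observed_at": "2025-12-18T09:22:00Z",
    },
    auth_policy={"mechanism": "jwt", "required_scopes": ["payments:write"]},
)
print(boundary["exposure"])
```

Each extractor (`k8s-extractor`, `gateway-extractor`, `iac-extractor`) would produce the same output shape, so downstream policy code never needs to know which source the evidence came from.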

|

||||

|

||||

---

|

||||

|

||||

### 5.3 VEX in Stella: statuses + justifications

|

||||

|

||||

VEX statuses you should support in UI:

|

||||

|

||||

* Not affected

|

||||

* Affected

|

||||

* Fixed

|

||||

* Under investigation ([NTIA][6])

|

||||

|

||||

OpenVEX will carry the machine readable structure. ([GitHub][7])

|

||||

|

||||

**Practical approach**

|

||||

|

||||

* Treat VEX as **the decision record** for exploitability.

|

||||

* Your policy can require VEX coverage for all “reachable” high severity vulns.

|

||||

|

||||

**Rule of thumb**

|

||||

|

||||

* If `reachable=true` AND the boundary shows an exposed surface with weak or missing auth → VEX defaults to `affected` until mitigations are proven.

|

||||

* If `reachable=false` with high confidence and stable proof → VEX may be `not_affected`.
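
The rule of thumb above could be encoded roughly as below. This is only a sketch: the function name, the `0.8` confidence threshold, and the definition of weak auth (`none` or `basic`) are assumptions chosen for illustration, and anything that does not clearly match a rule falls back to `under_investigation`.

```python
def default_vex_status(reachability, boundary, min_confidence=0.8):
    """Suggest a default VEX status from reachability and boundary evidence."""
    result = reachability["result"]
    if result == "reachable":
        exposed = boundary["exposure"]["internet"]
        weak_auth = boundary["auth"]["mechanism"] in ("none", "basic")
        if exposed and weak_auth:
            return "affected"
        return "under_investigation"
    if result == "not_reachable" and reachability["confidence"] >= min_confidence:
        return "not_affected"
    # Low confidence or unknown reachability: leave the decision to a human.
    return "under_investigation"

status = default_vex_status(
    {"result": "reachable", "confidence": 0.86},
    {"exposure": {"internet": True}, "auth": {"mechanism": "none"}},
)
print(status)
```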

|

||||

|

||||

---

|

||||

|

||||

### 5.4 Explainable risk score (don’t hide the formula)

|

||||

|

||||

Make score explainability first‑class.

|

||||

|

||||

**Recommended implementation**

|

||||

|

||||

* Store risk score as an additive model:

|

||||

|

||||

* `base = CVSS normalized`

|

||||

* `+ reachability_bonus`

|

||||

* `+ exposure_bonus`

|

||||

* `+ privilege_bonus`

|

||||

* `- mitigation_discount`

|

||||

* Emit a `score_explain` evidence object:

|

||||

|

||||

```json
{
  "kind": "score_explain",
  "risk_score": 72,
  "contributions": [
    {"factor":"cvss","value":41,"reason":"CVSS 9.8"},
    {"factor":"reachability","value":18,"reason":"reachable path p-1"},
    {"factor":"exposure","value":10,"reason":"internet-facing route"},
    {"factor":"auth","value":3,"reason":"scoped JWT required; reduced privilege bonus"}
  ],
  "last_seen":"2025-12-18T09:22:00Z"
}
```

|

||||

|

||||

**UI rule:** “Score 72” must always be clickable to a stable breakdown.
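
One way to keep the breakdown stable is to derive the total from the contributions rather than storing it separately. The sketch below assumes contributions arrive as `(factor, value, reason)` tuples and that the score is clamped to 0..100; both are illustrative choices, and `score_explain` is a hypothetical helper name.

```python
def score_explain(contributions, last_seen):
    """Build a score_explain object whose total is the sum of its parts."""
    total = sum(value for _, value, _ in contributions)
    return {
        "kind": "score_explain",
        # Clamp so discounts cannot push the score below zero (assumed range).
        "risk_score": max(0, min(100, total)),
        "contributions": [
            {"factor": f, "value": v, "reason": r} for f, v, r in contributions
        ],
        "last_seen": last_seen,
    }

explained = score_explain(
    [
        ("cvss", 41, "CVSS 9.8"),
        ("reachability", 18, "reachable path p-1"),
        ("exposure", 10, "internet-facing route"),
        ("auth", 3, "scoped JWT required"),
    ],
    "2025-12-18T09:22:00Z",
)
print(explained["risk_score"])
```

A mitigation discount is simply a negative `value`, so the UI can render it in the same list as the bonuses.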

|

||||

|

||||

---

|

||||

|

||||

## 6) The UI you should build (components + interaction rules)

|

||||

|

||||

### 6.1 Findings list row (collapsed)

|

||||

|

||||

Show only what helps scanning:

|

||||

|

||||

* Score badge

|

||||

* CVE + component

|

||||

* service

|

||||

* reachability chip: Reachable / Not reachable / Unknown

|

||||

* VEX chip

|

||||

* last_seen indicator (green/yellow/red)

|

||||

|

||||

### 6.2 Evidence drawer (expanded)

|

||||

|

||||

Tabs:

|

||||

|

||||

1. **Path**

|

||||

|

||||

* show entrypoint(s)

|

||||

* render call chain (simple list first; graph view optional)

|

||||

2. **Boundary**

|

||||

|

||||

* exposure, auth, controls

|

||||

3. **VEX**

|

||||

|

||||

* status + justification + issuer identity

|

||||

4. **Score**

|

||||

|

||||

* breakdown bar/list

|

||||

5. **Proof**

|

||||

|

||||

* attestation chain viewer (SBOM → VEX → Decision)

|

||||

* “Verify locally” action

|

||||

|

||||

### 6.3 “Open proof bundle” viewer

|

||||

|

||||

Must display:

|

||||

|

||||

* subject digest

|

||||

* signer identity

|

||||

* predicate type

|

||||

* digest of proof bundle

|

||||

* last_seen + tool versions

|

||||

|

||||

**This is where trust is built:** responders can see that the evidence is signed, tied to the artifact, and recent.

|

||||

|

||||

---

|

||||

|

||||

## 7) Proof‑linked evidence: how to generate and attach attestations

|

||||

|

||||

### 7.1 Statement format: in‑toto Attestation Framework

|

||||

|

||||

in‑toto’s model is:

|

||||

|

||||

* **Subjects** (the artifact digests)

|

||||

* **Predicate type** (schema ID)

|

||||

* **Predicate** (your actual data) ([GitHub][2])

|

||||

|

||||

### 7.2 DSSE envelope

|

||||

|

||||

Wrap statements using DSSE so payload type is signed too. ([GitHub][1])
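
DSSE signs a pre-authentication encoding (PAE) of the payload type together with the payload, rather than the payload alone, which is why the type ends up covered by the signature. A minimal sketch of that encoding as described in the DSSE specification (the function name `pae` is just illustrative):

```python
def pae(payload_type: str, payload: bytes) -> bytes:
    """DSSE pre-authentication encoding: the bytes that actually get signed."""
    type_bytes = payload_type.encode("utf-8")
    # Format: "DSSEv1" SP len(type) SP type SP len(payload) SP payload
    return b"DSSEv1 %d %b %d %b" % (
        len(type_bytes), type_bytes, len(payload), payload
    )

to_sign = pae("application/vnd.in-toto+json", b"{}")
print(to_sign)
```

The length prefixes make the encoding unambiguous, so a signature over one type/payload pair cannot be replayed as a different pair.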

|

||||

|

||||

### 7.3 Attach to OCI image via referrers

|

||||

|

||||

OCI “subject/referrers” makes attestations discoverable from the image digest. ([opencontainers.org][8])

|

||||

ORAS provides the operational model (“attach artifacts to an image”). ([Microsoft Learn][10])

|

||||

|

||||

### 7.4 Practical signing: cosign attest + verify

|

||||

|

||||

Cosign has built‑in in‑toto attestation support and can sign custom predicates. ([Sigstore][12])

|

||||

|

||||

Typical patterns (example only; adapt to your environment):

|

||||

|

||||

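```bash
# Attach an attestation.
# cosign expects --type to be a built-in predicate name or a URI;
# the URI below is a placeholder for your own predicate type.
cosign attest --predicate reachability.json \
  --type https://example.com/stella/reachability/v1 \
  <image@sha256:digest>

# Verify attestation.
# Add --key, or --certificate-identity and --certificate-oidc-issuer for keyless.
cosign verify-attestation --type https://example.com/stella/reachability/v1 \
  <image@sha256:digest>
```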

|

||||

|

||||

(Use keyless OIDC or KMS keys depending on your org.)

|

||||

|

||||

---

|

||||

|

||||

## 8) Define your predicate types (this is the “contract” Stella enforces)

|

||||

|

||||

You’ll want at least these predicate types:

|

||||

|

||||

1. `stella/sbom@v1`

|

||||

|

||||

* embeds CycloneDX/SPDX (or references blob digest)

|

||||

|

||||

2. `stella/vex@v1`

|

||||

|

||||

* embeds OpenVEX document or references it ([GitHub][7])

|

||||

|

||||

3. `stella/reachability@v1`

|

||||

|

||||

* the reachability evidence above

|

||||

* includes `graph.digest`, `paths`, `confidence`, `expires_at`

|

||||

|

||||

4. `stella/boundary@v1`

|

||||

|

||||

* exposure/auth proof and `last_seen`

|

||||

|

||||

5. `stella/policy-decision@v1`

|

||||

|

||||

* the gating result, references all input attestation digests

|

||||

|

||||

6. Optional: `stella/human-approval@v1`

|

||||

|

||||

* “I approve deploy of subject digest X based on decision attestation Y”

|

||||

* keep it time‑boxed

|

||||

|

||||

---

|

||||

|

||||

## 9) The policy gate (how approvals become proof‑linked)

|

||||

|

||||

### 9.1 Use OPA/Rego for the gate

|

||||

|

||||

OPA policies are written in Rego. ([openpolicyagent.org][11])

|

||||

|

||||

**Gate input** should be a single JSON document assembled from verified attestations:

|

||||

|

||||

```json
{
  "subject": {"name":"registry/org/app","digest":"sha256:..."},
  "sbom": {...},
  "vex": {...},
  "reachability": {...},
  "boundary": {...},
  "risk_score": 72,
  "org_policy": {"max_risk": 75, "max_age_hours": 168}
}
```

|

||||

|

||||

**Example Rego (deny‑by‑default)**

|

||||

|

||||

```rego
package stella.gate

# Rego v1 syntax (`if`, `contains`, `in`); requires a recent OPA release.
import rego.v1

default allow := false

# deny if evidence is stale
stale_evidence if {
    now := time.now_ns()
    exp := time.parse_rfc3339_ns(input.reachability.expires_at)
    now > exp
}

# deny if any high severity reachable vuln is not resolved by VEX
unresolved_reachable contains v if {
    some v in input.reachability.findings
    v.severity in {"critical", "high"}
    v.reachable == true
    not vex_resolved(v.cve)
}

# true when VEX marks the CVE as not_affected or fixed
vex_resolved(cve) if {
    input.vex.resolution[cve] in {"not_affected", "fixed"}
}

allow if {
    input.risk_score <= input.org_policy.max_risk
    not stale_evidence
    count(unresolved_reachable) == 0
}
```

|

||||

|

||||

### 9.2 Emit a signed policy decision attestation

|

||||

|

||||

When OPA returns `allow=true`, emit **another attestation**:

|

||||

|

||||

* predicate includes the policy version/hash and all input refs.

|

||||

* that’s what the UI “Approve” button targets.

|

||||

|

||||

This is the “evidence‑linked approval”: approval references the signed decision, and the decision references the signed evidence.
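
A sketch of what the decision predicate might carry is shown below. The field names (`decision`, `policy_digest`, `inputs`) are assumptions rather than a defined Stella schema. For simplicity the digests here are taken over canonicalized JSON; in a real pipeline they would more likely be the digests of the signed attestation envelopes as stored in the registry.

```python
import hashlib
import json

def digest_of(doc):
    """sha256 over a canonical JSON form, so key order does not matter."""
    canonical = json.dumps(doc, sort_keys=True, separators=(",", ":"))
    return "sha256:" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def decision_predicate(allow, policy_source, inputs):
    """Bind a gate result to the exact policy text and evidence it used."""
    policy_hash = hashlib.sha256(policy_source.encode("utf-8")).hexdigest()
    return {
        "decision": "allow" if allow else "deny",
        "policy_digest": "sha256:" + policy_hash,
        "inputs": {name: digest_of(doc) for name, doc in sorted(inputs.items())},
    }

predicate = decision_predicate(
    True,
    "package stella.gate\ndefault allow := false\n",
    {"vex": {"status": "not_affected"}, "sbom": {"components": []}},
)
print(json.dumps(predicate, indent=2))
```

Recording the input digests is what later lets the UI check that the evidence it retrieved is the same evidence the decision was made on.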

|

||||

|

||||

---

|

||||

|

||||

## 10) “Approve” button behavior (what Stella Ops should enforce)

|

||||

|

||||

### Disabled until…

|

||||

|

||||

* subject digest known

|

||||

* SBOM attestation found + signature verified

|

||||

* VEX attestation found + signature verified

|

||||

* Decision attestation found + signature verified

|

||||

* Decision’s `inputs` digests match the actual retrieved evidence

|

||||

|

||||

### When clicked…

|

||||

|

||||

1. Stella Ops creates a `stella/human-approval@v1` statement:

|

||||

|

||||

* `subject` = artifact digest

|

||||

* `predicate.decision_ref` = decision attestation digest

|

||||

* `predicate.expires_at` = short TTL (e.g., 7–30 days)

|

||||

2. Signs it with the approver identity

|

||||

3. Attaches it to the artifact (OCI referrer)
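
The statement created in step 1 could look roughly like this. The outer layout follows the in-toto Statement v1 structure (`_type`, `subject`, `predicateType`, `predicate`); the `approver` and `approved_at` fields, the 7-day default, and the function name are assumptions for illustration. Signing and attaching (steps 2 and 3) are out of scope for the sketch.

```python
from datetime import datetime, timedelta, timezone

def human_approval_statement(image_name, image_sha256, decision_digest,
                             approver, ttl_days=7, now=None):
    """Build an unsigned human-approval statement for an image digest."""
    now = now or datetime.now(timezone.utc)
    return {
        "_type": "https://in-toto.io/Statement/v1",
        "subject": [{"name": image_name, "digest": {"sha256": image_sha256}}],
        "predicateType": "stella/human-approval@v1",
        "predicate": {
            "decision_ref": decision_digest,
            "approver": approver,
            "approved_at": now.isoformat(),
            # Time-boxed: the approval stops counting after the TTL.
            "expires_at": (now + timedelta(days=ttl_days)).isoformat(),
        },
    }

approval = human_approval_statement(
    "registry/org/app",
    "ab" * 32,
    "sha256:" + "cd" * 32,
    "alice@example.com",
    now=datetime(2025, 12, 18, 9, 22, tzinfo=timezone.utc),
)
print(approval["predicate"]["expires_at"])
```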

|

||||

|

||||

### Audit view must show

|

||||

|

||||

* approver identity

|

||||

* exact artifact digest

|

||||

* exact decision attestation digest

|

||||

* timestamp and expiry

|

||||

|

||||

---

|

||||

|

||||

## 11) Implementation details that matter in production

|

||||

|

||||

### 11.1 Verification library (shared by UI backend + CI gate)

|

||||

|

||||

Write one verifier module used everywhere:

|

||||

|

||||

**Inputs**

|

||||

|

||||

* image digest

|

||||

* expected predicate types

|

||||

* trust policy (allowed identities/issuers, keyless rules, KMS keys)

|

||||

|

||||

**Steps**

|

||||

|

||||

1. Discover referrers for `image@sha256:…`

|

||||

2. Filter by `predicateType`

|

||||

3. Verify DSSE + signature + identity

|

||||

4. Validate JSON schema for predicate

|

||||

5. Check `subject.digest` matches image digest

|

||||

6. Return “verified evidence set” + “errors”
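
A skeleton of such a verifier is sketched below. It covers steps 2, 3, 5, and 6; referrer discovery and JSON schema validation are omitted, and the cryptographic check is injected as a `signature_ok` callable because the actual DSSE and identity verification depends on the signing stack in use. All names are hypothetical.

```python
def verify_evidence(statements, image_sha256, expected_types, signature_ok):
    """Return (verified predicates by type, list of error strings).

    statements: decoded in-toto statements found via the image's referrers.
    signature_ok: callable standing in for DSSE signature + identity checks.
    """
    verified, errors = {}, []
    for st in statements:
        ptype = st.get("predicateType")
        if ptype not in expected_types:
            continue  # not a predicate type we were asked for
        if not signature_ok(st):
            errors.append(f"{ptype}: signature or identity check failed")
            continue
        digests = [s.get("digest", {}).get("sha256") for s in st.get("subject", [])]
        if image_sha256 not in digests:
            errors.append(f"{ptype}: subject digest does not match image")
            continue
        verified[ptype] = st["predicate"]
    for ptype in expected_types:
        if ptype not in verified:
            errors.append(f"{ptype}: no verified attestation found")
    return verified, errors

image = "ab" * 32
statements = [
    {"predicateType": "stella/sbom@v1",
     "subject": [{"digest": {"sha256": image}}], "predicate": {"ok": True}},
    {"predicateType": "stella/vex@v1",
     "subject": [{"digest": {"sha256": "ff" * 32}}], "predicate": {}},
]
verified, errors = verify_evidence(
    statements, image, ["stella/sbom@v1", "stella/vex@v1"], lambda st: True
)
print(sorted(verified), errors)
```

Returning errors alongside the verified set, instead of raising on the first failure, lets the UI explain exactly which piece of evidence is missing or invalid.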

|

||||

|

||||

### 11.2 Evidence privacy

|

||||

|

||||

Reachability proofs can leak implementation details.

|

||||

|

||||

* Store file hashes, symbol names, and line ranges

|

||||

* Gate raw source behind elevated permissions

|

||||

* Provide redacted proofs by default

|

||||

|

||||

### 11.3 Evidence TTL strategy

|

||||

|

||||

* SBOM: long TTL (weeks/months) if digest immutable

|

||||

* Boundary: short TTL (hours/days) because env changes

|

||||

* Reachability: medium TTL (days/weeks) depending on code churn

|

||||

* VEX: must be renewed if boundary/reachability changes
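
A freshness check along these lines might look like the following. The concrete durations are placeholders chosen only to reflect the long/medium/short ordering above, and VEX is left out because its renewal is event-driven rather than purely time-based.

```python
from datetime import datetime, timedelta, timezone

# Illustrative maximum ages only; real values are an org policy decision.
MAX_AGE = {
    "sbom": timedelta(days=90),
    "reachability": timedelta(days=7),
    "boundary": timedelta(hours=24),
}

def stale_kinds(last_seen_by_kind, now):
    """Return the evidence kinds whose last_seen is older than allowed."""
    stale = []
    for kind, last_seen in last_seen_by_kind.items():
        # Accept the trailing "Z" used in the evidence examples.
        seen = datetime.fromisoformat(last_seen.replace("Z", "+00:00"))
        if now - seen > MAX_AGE[kind]:
            stale.append(kind)
    return sorted(stale)

now = datetime(2025, 12, 20, 9, 22, tzinfo=timezone.utc)
stale = stale_kinds(
    {
        "sbom": "2025-12-01T00:00:00Z",
        "reachability": "2025-12-18T09:22:00Z",
        "boundary": "2025-12-18T09:22:00Z",
    },
    now,
)
print(stale)
```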

|

||||

|

||||

### 11.4 Handling “Unknown reachability”

|

||||

|

||||

Don’t force false certainty.

|

||||

|

||||

* Mark as `unknown` and show why (missing symbols, dynamic reflection, stripped binaries)

|

||||

* Policy can treat unknown as “reachable” for critical CVEs in internet‑facing services.
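
As a small sketch of that policy option, the helper below keeps `unknown` visible as its own state but lets the gate treat it pessimistically in the one case described above. The function name and argument shapes are hypothetical.

```python
def effective_reachability(result, severity, internet_facing):
    """Map the recorded result to the value the policy gate should act on."""
    if result == "unknown" and severity == "critical" and internet_facing:
        # Pessimistic default: unknown counts as reachable for this case only.
        return "reachable"
    return result

print(effective_reachability("unknown", "critical", True))
```

The stored evidence should still say `unknown` with its reason; only the gate's interpretation changes.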

|

||||

|

||||

---

|

||||

|

||||

## 12) A concrete MVP path that still delivers value

|

||||

|

||||

If you want a minimal but real first release:

|

||||

|

||||

### MVP (2–3 deliverables)

|

||||

|

||||

1. **Evidence drawer** fed by:

|

||||

|

||||

* scanner output + SBOM + a simple “entrypoint map”

|

||||

2. **VEX workflow**

|

||||

|

||||

* allow engineers to set VEX status + justification

|

||||

3. **Signed decision gating**

|

||||

|

||||

* even if reachability is heuristic, the chain is real

|

||||

|

||||

Then iterate:

|

||||

|

||||

* add reachability graphs

|

||||

* add boundary extraction from IaC/K8s

|

||||

* tighten policy (staleness, confidence thresholds)

|

||||

|

||||

---

|

||||

|

||||

## 13) Quick checklist for “done enough to trust”

|

||||

|

||||

* [ ] Every finding expands to: Path, Boundary, VEX, Score, Proof

|

||||

* [ ] Every evidence tab shows `last_seen` + confidence

|

||||

* [ ] “Verify chain” works: SBOM → VEX → Decision all signed and bound to the artifact digest

|

||||

* [ ] Approve button signs a human approval attestation tied to the decision digest

|

||||

* [ ] CI gate verifies the same chain before deploy

|

||||

|

||||

---

|

||||

|

||||


|

||||

|

||||

[1]: https://github.com/secure-systems-lab/dsse "DSSE: Dead Simple Signing Envelope"
[2]: https://github.com/in-toto/attestation "in-toto Attestation Framework"
[3]: https://docs.sigstore.dev/about/bundle/ "Sigstore Bundle Format"
[4]: https://spdx.dev/use/specifications/ "Specifications"
[5]: https://github.com/CycloneDX/specification "CycloneDX/specification"
[6]: https://www.ntia.gov/sites/default/files/publications/vex_one-page_summary_0.pdf "VEX one-page summary"
[7]: https://github.com/openvex/spec "OpenVEX Specification"
[8]: https://opencontainers.org/posts/blog/2024-03-13-image-and-distribution-1-1/ "OCI Image and Distribution Specs v1.1 Releases"
[9]: https://oras.land/docs/concepts/reftypes/ "Attached Artifacts | OCI Registry As Storage"
[10]: https://learn.microsoft.com/en-us/azure/container-registry/container-registry-manage-artifact "Manage OCI Artifacts and Supply Chain Artifacts with ORAS"
[11]: https://openpolicyagent.org/docs/policy-language "Policy Language"
[12]: https://docs.sigstore.dev/cosign/verifying/attestation/ "In-Toto Attestations"

|

||||

@@ -0,0 +1,259 @@

|

||||

I’m sharing this because the state of modern vulnerability prioritization and supply‑chain risk tooling is rapidly shifting toward *context‑aware, evidence‑driven insights* — not just raw lists of CVEs.

|

||||

|

||||

Here’s what’s shaping the field:

|

||||

|

||||

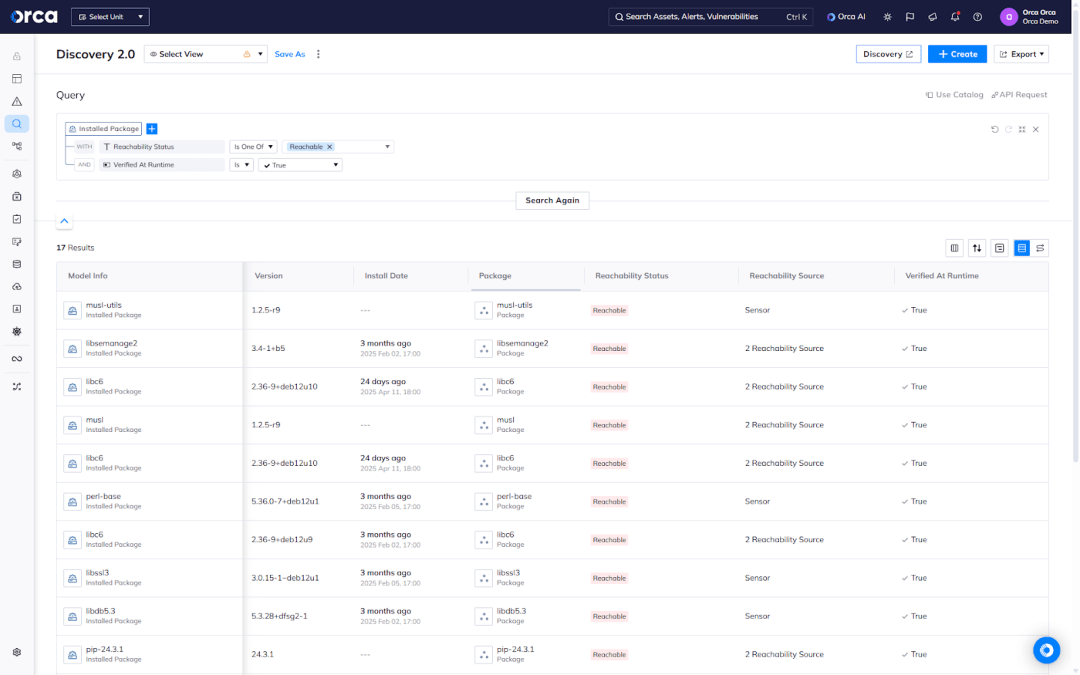

**• Reachability‑first triage is about ordering fixes by *actual call‑graph evidence*** — tools like Snyk analyze your code’s call graph to determine whether a vulnerable function is *actually reachable* from your application’s execution paths. Vulnerabilities with evidence of reachability are tagged (e.g., **REACHABLE**) so teams can focus on real exploit risk first, rather than just severity in a vacuum. This significantly reduces noise and alert fatigue by filtering out issues that can’t be invoked in context. ([Snyk User Docs][1])

|

||||

|

||||

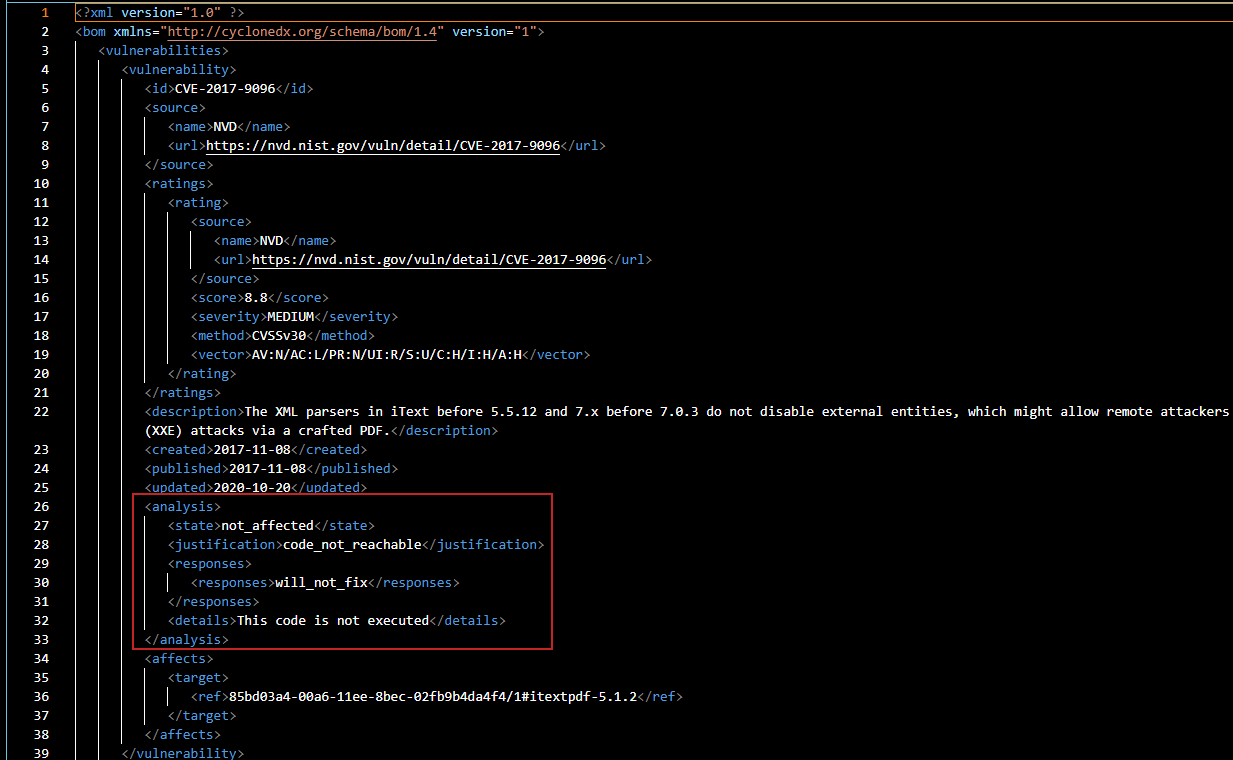

**• Inline VEX status with provenance turns static findings into contextual decisions.** *Vulnerability Exploitability eXchange (VEX)* is a structured way to annotate each finding with its *exploitability status* (“not affected,” “affected,” “fixed,” or “under investigation”) and attach that directly to SBOM/VEX records. Anchore Enterprise, for example, supports embedding these annotations and exporting them in both OpenVEX and CycloneDX VEX formats so downstream consumers see not just “there’s a CVE” but *what it means for your specific build or deployment*. ([Anchore][2])

|

||||

|

||||

**• OCI‑linked evidence chips (VEX attestations) bind context to images at the registry level.** Tools like Trivy can discover VEX attestations stored in OCI registries using flags like `--vex oci`. That lets scanners incorporate *pre‑existing attestations* into their vulnerability results — essentially layering registry‑attached statements about exploitability right into your scan output. ([Trivy][3])

|

||||

|

||||

Taken together, these trends illustrate a shift from *volume* (lists of vulnerabilities) to *value* (actionable, context‑specific risk insight) — especially if you’re building or evaluating risk tooling that needs to integrate call‑graph evidence, structured exploitability labels, and registry‑sourced attestations for high‑fidelity prioritization.

|

||||

|

||||

[1]: https://docs.snyk.io/manage-risk/prioritize-issues-for-fixing/reachability-analysis "Reachability analysis"
[2]: https://anchore.com/blog/anchore-enterprise-5-23-cyclonedx-vex-and-vdr-support/ "Anchore Enterprise 5.23: CycloneDX VEX and VDR Support"
[3]: https://trivy.dev/docs/latest/supply-chain/vex/oci/ "Discover VEX Attestation in OCI Registry"

|

||||

Below are UX patterns that are “worth it” specifically for a VEX-first, evidence-driven scanner like Stella Ops. I’m not repeating generic “nice UI” ideas; these are interaction models that materially reduce triage time, raise trust, and turn your moats (determinism, proofs, lattice merge) into something users can feel.

|

||||

|

||||

## 1) Make “Claim → Evidence → Verdict” the core mental model

|

||||

|

||||

Every finding is a **Claim** (e.g., “CVE-X affects package Y in image Z”), backed by **Evidence** (SBOM match, symbol match, reachable path, runtime hit, vendor VEX, etc.), merged by **Semantics** (your lattice rules), producing a **Verdict** (policy outcome + signed attestation).

|

||||

|

||||

**UX consequence:** every screen should answer:

|

||||

|

||||

* What is being claimed?

|

||||

* What evidence supports it?

|

||||

* Which rule turned it into “block / allow / warn”?

|

||||

* Can I replay it identically?

|

||||

|

||||

## 2) “Risk Inbox” that behaves like an operator queue, not a report

|

||||

|

||||

Borrow the best idea from SOC tooling: a queue you can clear.

|

||||

|

||||

**List row structure (high impact):**

|

||||

|

||||

* Left: *Policy outcome* (BLOCK / WARN / PASS) as the primary indicator (not CVSS).

|

||||

* Middle: *Evidence chips* (REACHABLE, RUNTIME-SEEN, VEX-NOT-AFFECTED, ATTESTED, DIFF-NEW, etc.).

|

||||

* Right: *Blast radius* (how many artifacts/envs/services), plus “time since introduced”.

|

||||

|

||||

**Must-have filters:**

|

||||

|

||||

* “New since last release”

|

||||

* “Reachable only”

|

||||

* “Unknowns only”

|

||||

* “Policy blockers in prod”

|

||||

* “Conflicts (VEX merge disagreement)”

|

||||

* “No provenance (unsigned evidence)”

|

||||

|

||||

## 3) Delta-first everywhere (default view is “what changed”)

|

||||

|

||||

Users rarely want the full world; they want the delta relative to the last trusted point.

|

||||

|

||||

**Borrowed pattern:** PR diff mindset.

|

||||

|

||||

* Default to **Diff Lens**: “introduced / fixed / changed reachability / changed policy / changed EPSS / changed source trust”.

|

||||

* Every detail page has a “Before / After” toggle for: SBOM subgraph, reachability subgraph, VEX claims, policy trace.

|

||||

|

||||

This is one of the biggest “time saved per pixel” UX decisions you can make.
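
The core of a Diff Lens is a set comparison between two scans. The sketch below assumes each scan has been reduced to a mapping from finding ID to reachability result; the bucket names mirror three of the categories listed above, and `diff_findings` is a hypothetical helper.

```python
def diff_findings(before, after):
    """Classify findings between two scans.

    before/after: dict mapping finding id -> reachability result.
    """
    delta = {"introduced": [], "fixed": [], "changed_reachability": []}
    for fid in sorted(set(before) | set(after)):
        if fid not in before:
            delta["introduced"].append(fid)
        elif fid not in after:
            delta["fixed"].append(fid)
        elif before[fid] != after[fid]:
            # Same finding, different reachability: this is the drift signal.
            delta["changed_reachability"].append((fid, before[fid], after[fid]))
    return delta

delta = diff_findings(
    {"CVE-A": "not_reachable", "CVE-B": "reachable", "CVE-C": "unknown"},
    {"CVE-A": "reachable", "CVE-C": "unknown", "CVE-D": "reachable"},
)
print(delta)
```

Findings that are unchanged (such as `CVE-C` here) drop out entirely, which is what keeps the default view small.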

|

||||

|

||||

## 4) Evidence chips that are not decorative: click-to-proof

|

||||

|

||||

Chips should be actionable and open the exact proof.

|

||||

|

||||

Examples:

|

||||

|

||||

* **REACHABLE** → opens reachability subgraph viewer with the exact path(s) highlighted.

|

||||

* **ATTESTED** → opens DSSE/in-toto attestation viewer + signature verification status.

|

||||

* **VEX: NOT AFFECTED** → opens VEX statement with provenance + merge outcome.

|

||||

* **BINARY-MATCH** → opens mapping evidence (Build-ID / symbol / file hash) and confidence.

|

||||

|

||||

Rule: every chip either opens proof, or it doesn’t exist.

|

||||

|

||||

## 5) “Verdict Ladder” on every finding

|

||||

|

||||

A vertical ladder that shows the transformation from raw detection to final decision:

|

||||

|

||||

1. Detection source(s)

|

||||

2. Component identification (SBOM / installed / binary mapping)

|

||||

3. Applicability (platform, config flags, feature gates)

|

||||

4. Reachability (static path evidence)

|

||||

5. Runtime confirmation (if available)

|

||||

6. VEX merge & trust weighting

|

||||

7. Policy trace → final verdict

|

||||

8. Signed attestation reference (digest)

|

||||

|

||||

This turns your product from “scanner UI” into “auditor-grade reasoning UI”.

|

||||

|

||||

## 6) Reachability Explorer that is intentionally constrained

|

||||

|

||||

Reachability visualizations usually fail because they’re too generic.

|

||||

|

||||

Do this instead:

|

||||

|

||||

* Show **one shortest path** by default (operator mode).

|

||||

* Offer “show all paths” only on demand (expert mode).

|

||||

* Provide a **human-readable path narration** (“HTTP handler X → service Y → library Z → vulnerable function”) plus the reproducible anchors (file:line or symbol+offset).

|

||||

* Store and render the **subgraph evidence**, not a screenshot.

|

||||

|

||||

## 7) A “Policy Trace” panel that reads like a flight recorder

|

||||

|

||||

Borrow from OPA/Rego trace concepts: show which rules fired, which evidence satisfied conditions, and where unknowns influenced the outcome.

|

||||

|

||||

**UX element:** “Why blocked?” and “What would make it pass?”

|

||||

|

||||

* “Blocked because: reachable AND exploited AND no mitigation claim AND env=prod”

|

||||

* “Would pass if: VEX mitigated with evidence OR reachability unknown budget allows OR patch applied”

|

||||

|

||||

This directly enables your “risk budgets + diff-aware release gates”.

|

||||

|

||||

## 8) Unknowns are first-class, budgeted, and visual

|

||||

|

||||

Most tools hide unknowns. You want the opposite.

|

||||

|

||||

**Unknowns dashboard:**

|

||||

|

||||

* Unknown count by environment + trend.

|

||||

* Unknown categories (unmapped binaries, missing SBOM edges, unsigned VEX, stale feeds).

|

||||

* Policy thresholds (e.g., “fail if unknowns > N in prod”) with clear violation explanation.

|

||||

|

||||

**Micro-interaction:** unknowns should have a “convert to known” CTA (attach evidence, add mapping rule, import attestation, upgrade feed bundle).

|

||||

|

||||

## 9) VEX Conflict Studio: side-by-side merge with provenance

|

||||

|

||||

When two statements disagree, don’t just pick one. Show the conflict.

|

||||

|

||||

**Conflict card:**

|

||||

|

||||

* Left: Vendor VEX statement + signature/provenance

|

||||

* Right: Distro/internal statement + signature/provenance

|

||||

* Middle: lattice merge result + rule that decided it

|

||||

* Bottom: “Required evidence hook” checklist (feature flag off, config, runtime proof, etc.)

|

||||

|

||||

This makes your “Trust Algebra / Lattice Engine” tangible.

|

||||

|

||||

## 10) Exceptions as auditable objects (with TTL) integrated into triage

|

||||

|

||||

Exception UX should feel like creating a compliance-grade artifact, not clicking “ignore”.

|

||||

|

||||

**Exception form UX:**

|

||||

|

||||

* Scope selector: artifact digest(s), package range, env(s), time window

|

||||

* Required: rationale + evidence attachments

|

||||

* Optional: compensating controls (WAF, network isolation)

|

||||

* Auto-generated: signed exception attestation + audit pack link

|

||||

* Review workflow: “owner”, “approver”, “expires”, “renewal requires fresh evidence”

|

||||

|

||||

## 11) One-click “Audit Pack” export from any screen

|

||||

|

||||

Auditors don’t want screenshots; they want structured evidence.

|

||||

|

||||

From a finding/release:

|

||||

|

||||

* Included: SBOM (exact), VEX set (exact), merge rules version, policy version, reachability subgraph, signatures, feed snapshot hashes, delta verdict

|

||||

* Everything referenced by digest and replay manifest

|

||||

|

||||

UX: a single button “Generate Audit Pack”, plus “Replay locally” instructions.

|

||||

|

||||

## 12) Attestation Viewer that non-cryptographers can use

|

||||

|

||||

Most attestation UIs are unreadable. Make it layered:

|

||||

|

||||

* “Verified / Unverified” summary

|

||||

* Key identity, algorithm, timestamp

|

||||

* What was attested (subject digest, predicate type)

|

||||

* Links: “open raw DSSE JSON”, “copy digest”, “compare to current”

|

||||

|

||||

If you do crypto-sovereign modes (GOST/SM/eIDAS/FIPS), show algorithm badges and validation source.

|

||||

|

||||

## 13) Proof-of-Integrity Graph as a drill-down, not a science project

|

||||

|

||||

Graph UI should answer one question: “Can I trust this artifact lineage?”

|

||||

|

||||

Provide:

|

||||

|

||||

* A minimal lineage chain by default: Source → Build → SBOM → VEX → Scan Verdict → Deploy

|

||||

* Expand nodes on click (don’t render the whole universe)

|

||||

* Confidence meter derived from signed links and trusted issuers

|

||||

|

||||

## 14) “Remedy Plan” that is evidence-aware, not generic advice

|

||||

|

||||

Fix guidance must reflect reachability and delta:

|

||||

|

||||

* If reachable: prioritize patch/upgrade, show “patch removes reachable path” expectation

|

||||

* If not reachable: propose mitigation or deferred SLA with justification

|

||||

* Show “impact of upgrade” (packages touched, images affected, services impacted)